Data Insights

Epoch AI’s data insights break down complex AI trends into focused, digestible snapshots. Explore topics like training compute, hardware advancements, and AI training costs in a clear and accessible format.

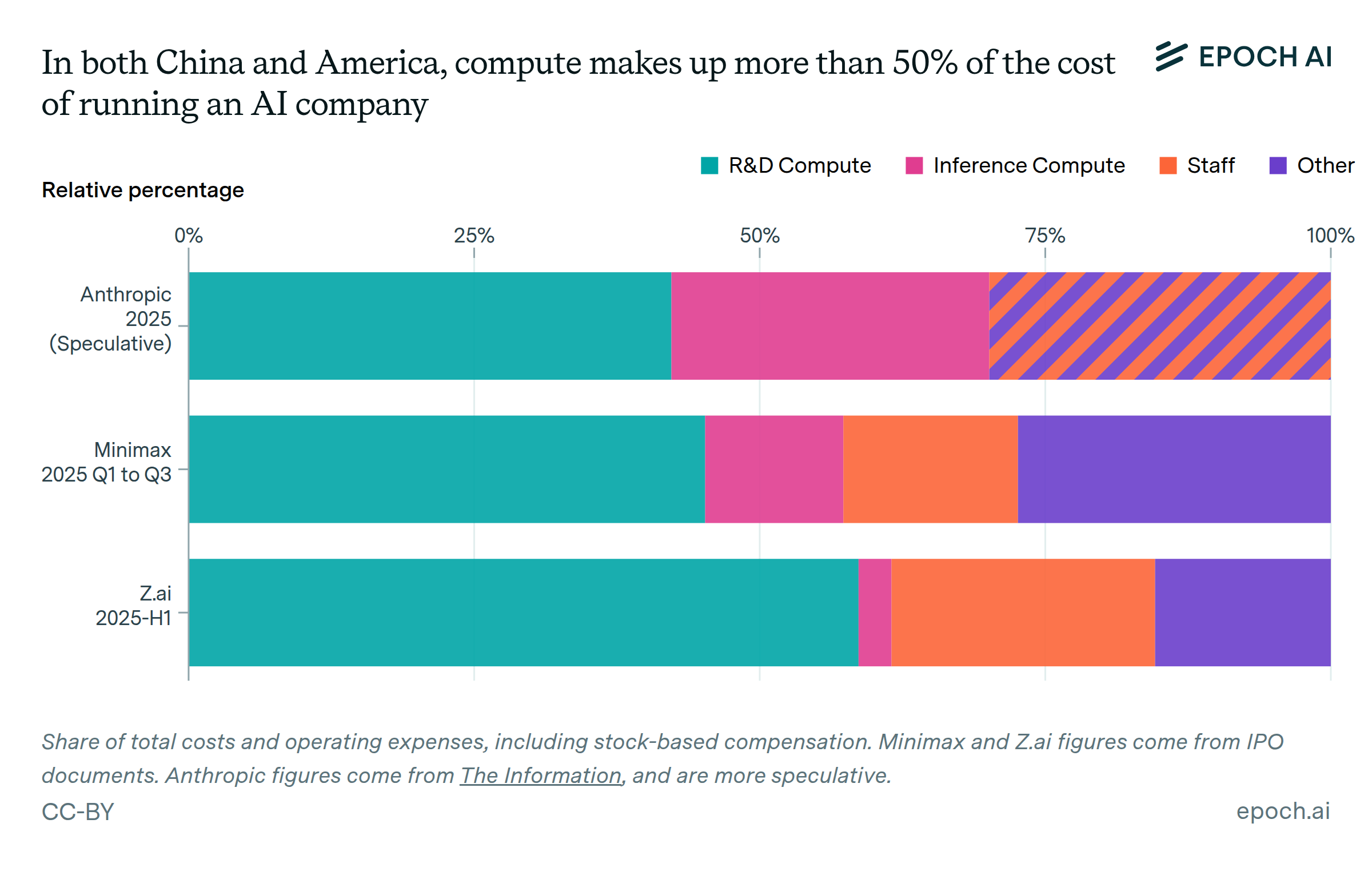

In both China and the US, compute makes up more than 50% of the cost of running an AI company

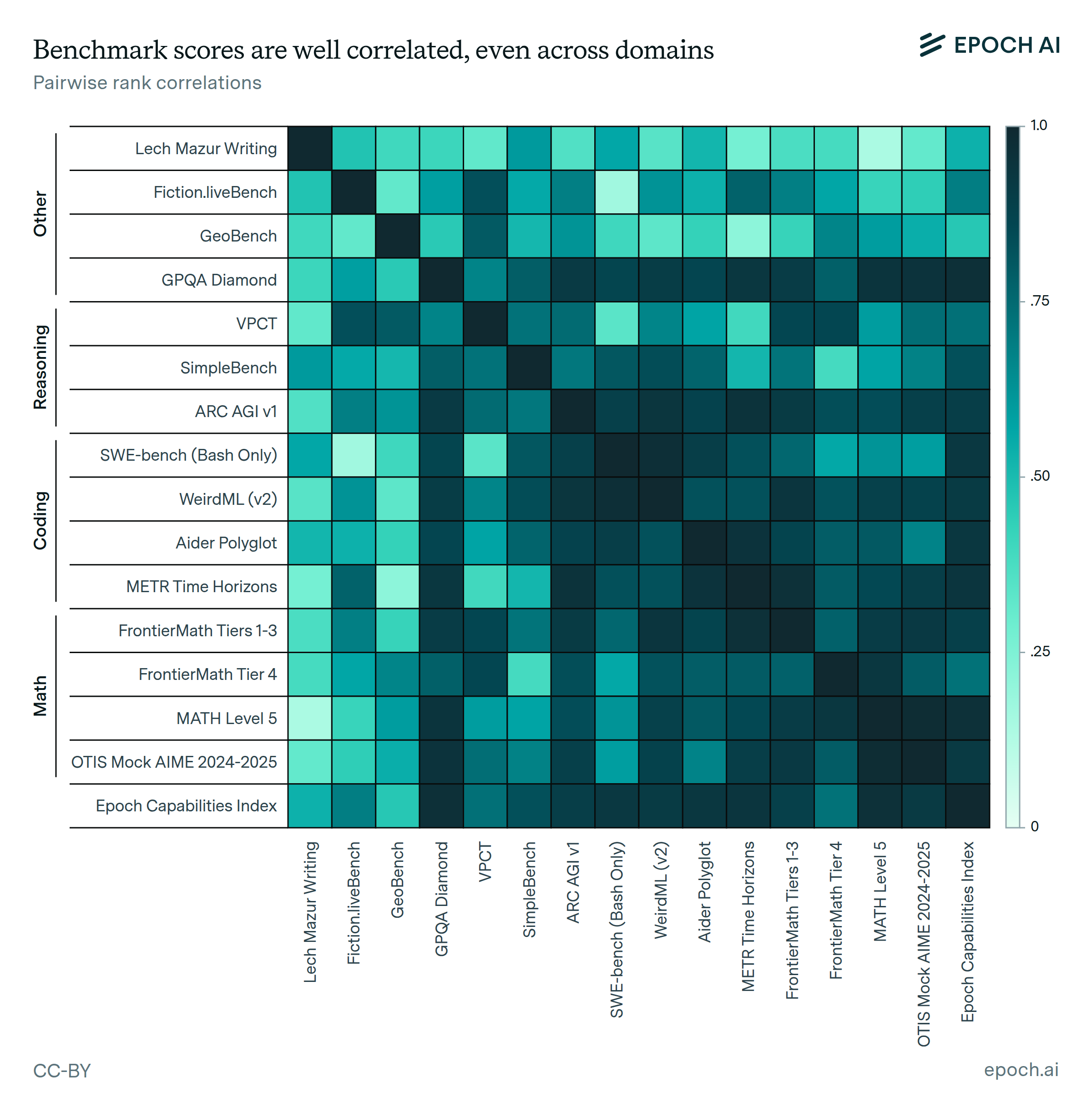

Benchmark scores are well correlated, even across domains

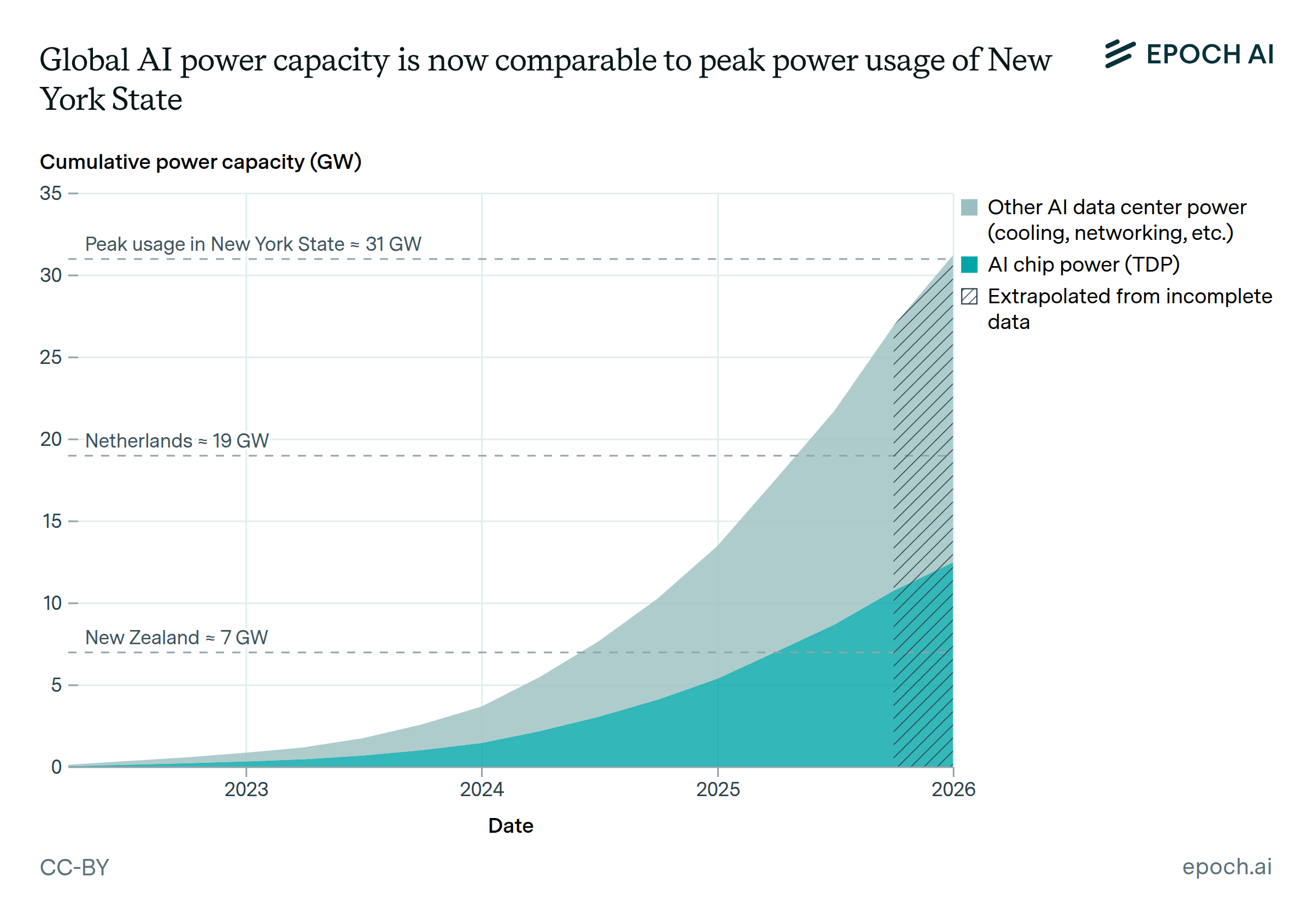

Global AI power capacity is now comparable to peak power usage of New York State

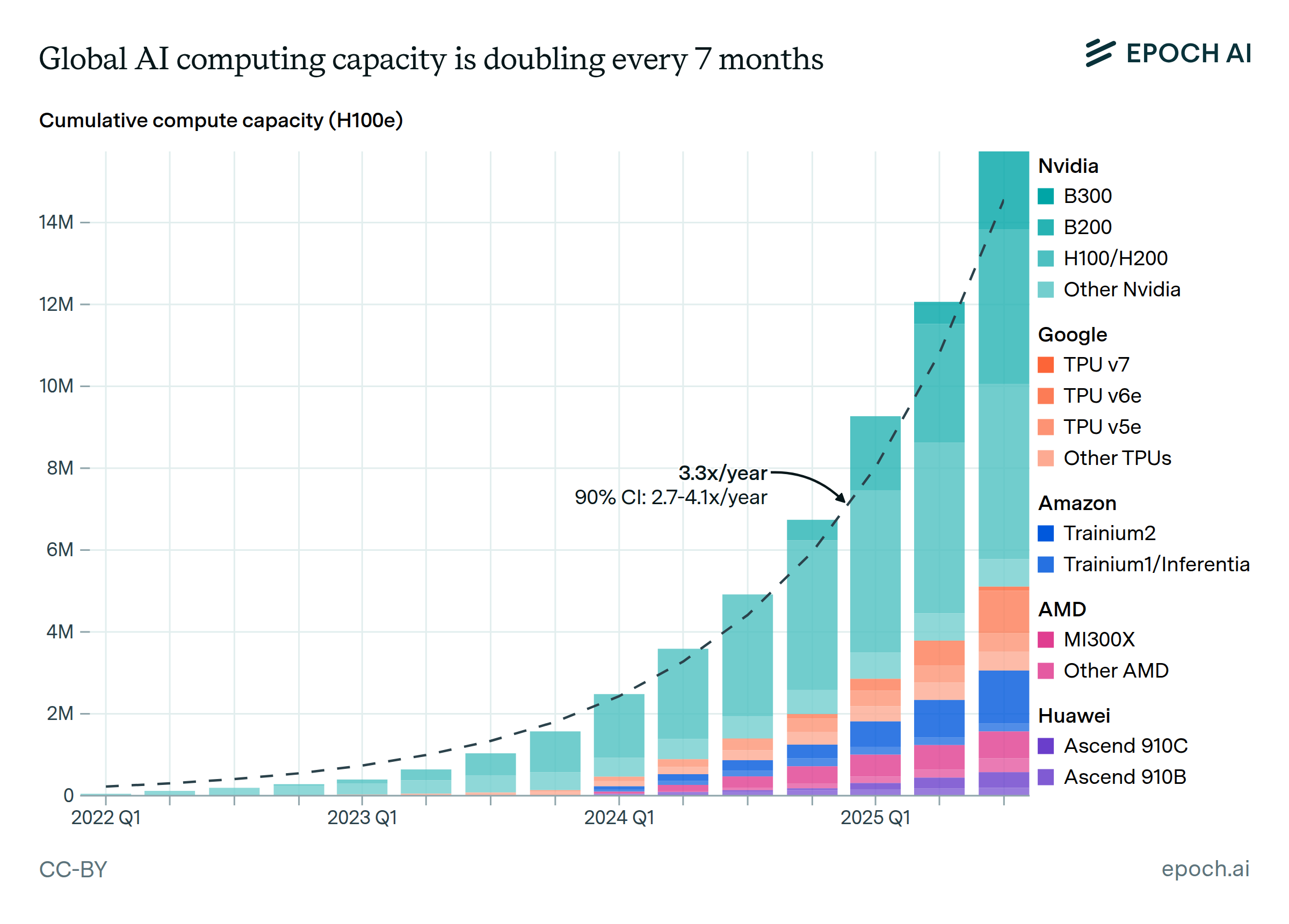

Global AI computing capacity is doubling every 7 months

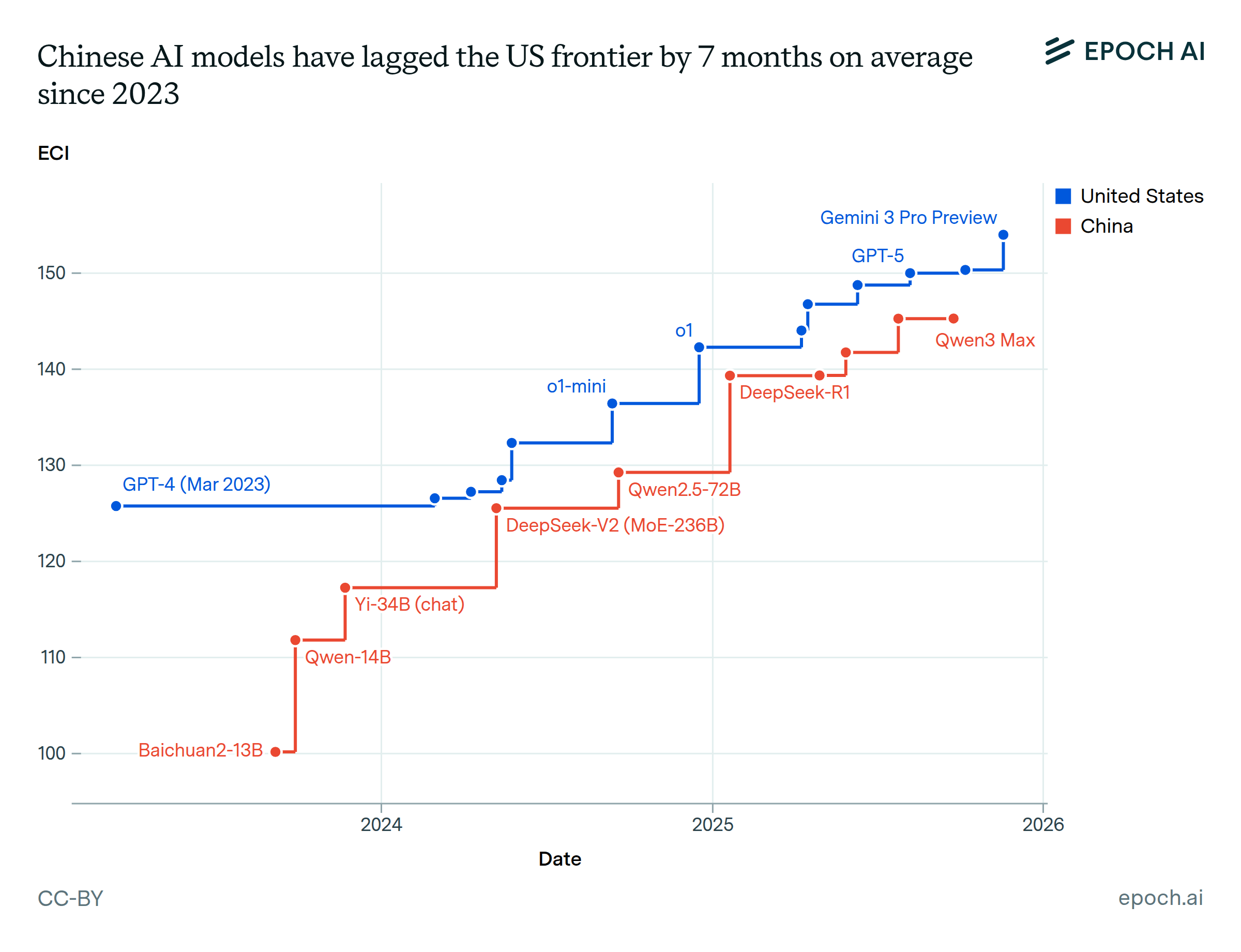

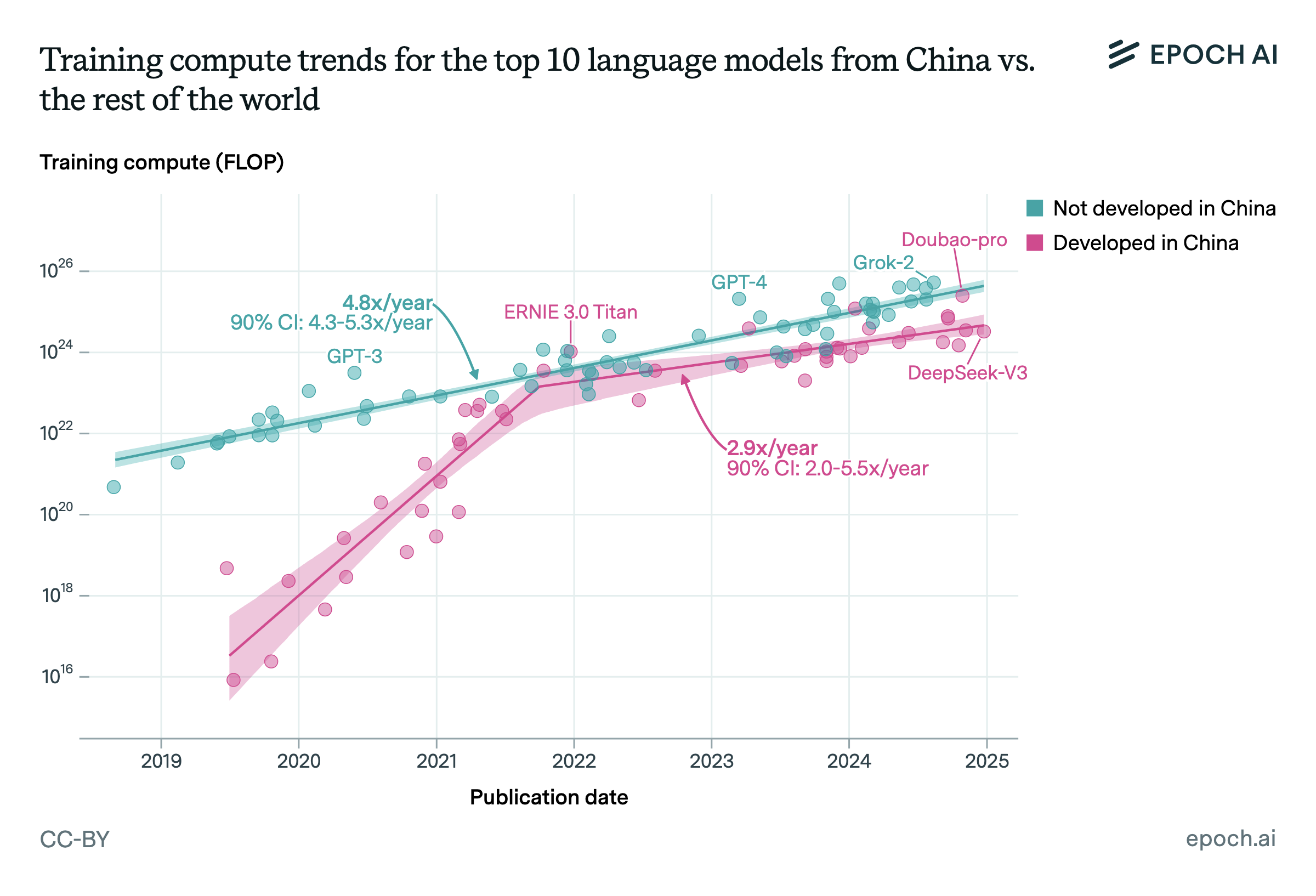

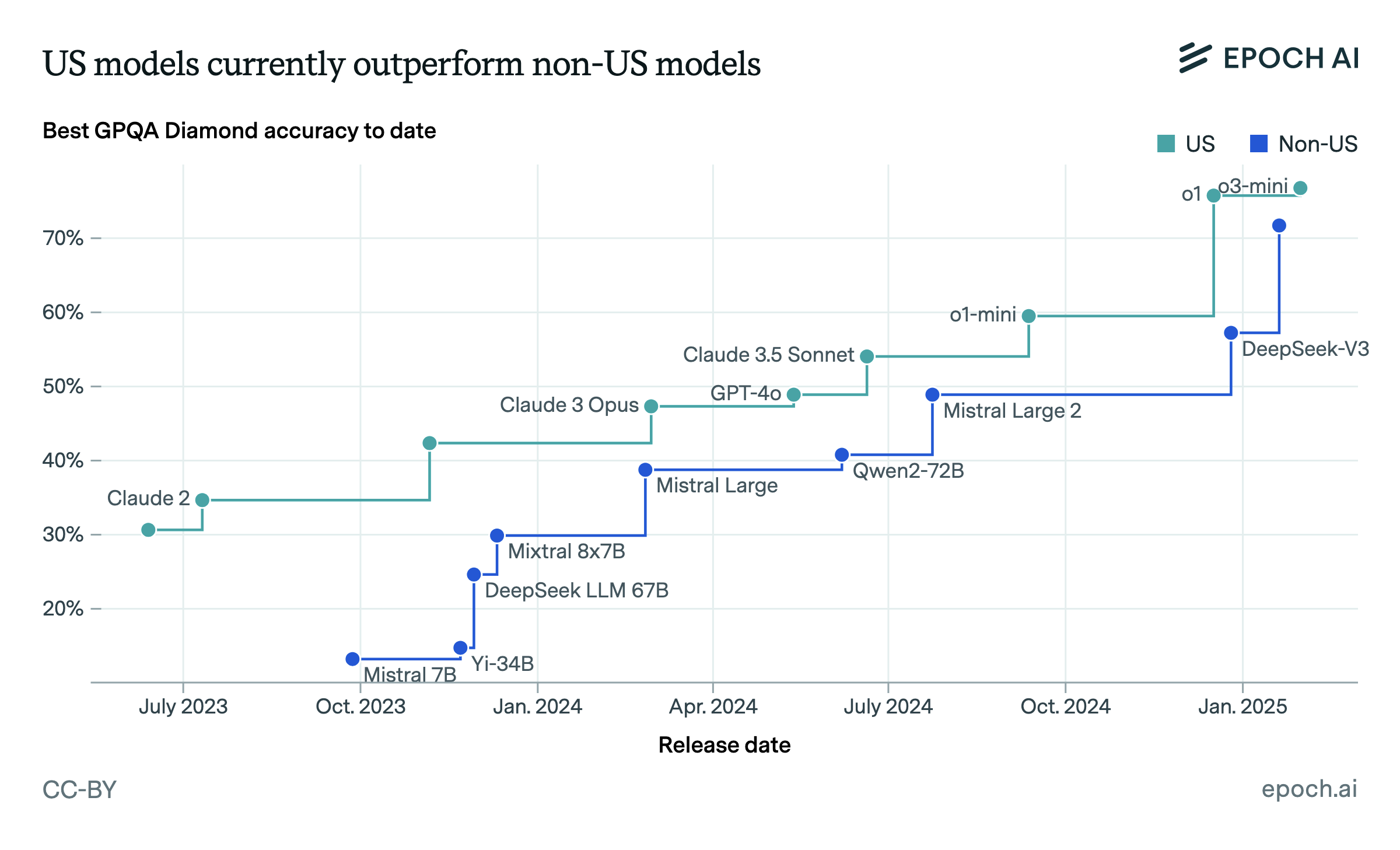

Chinese AI models have lagged the US frontier by 7 months on average since 2023

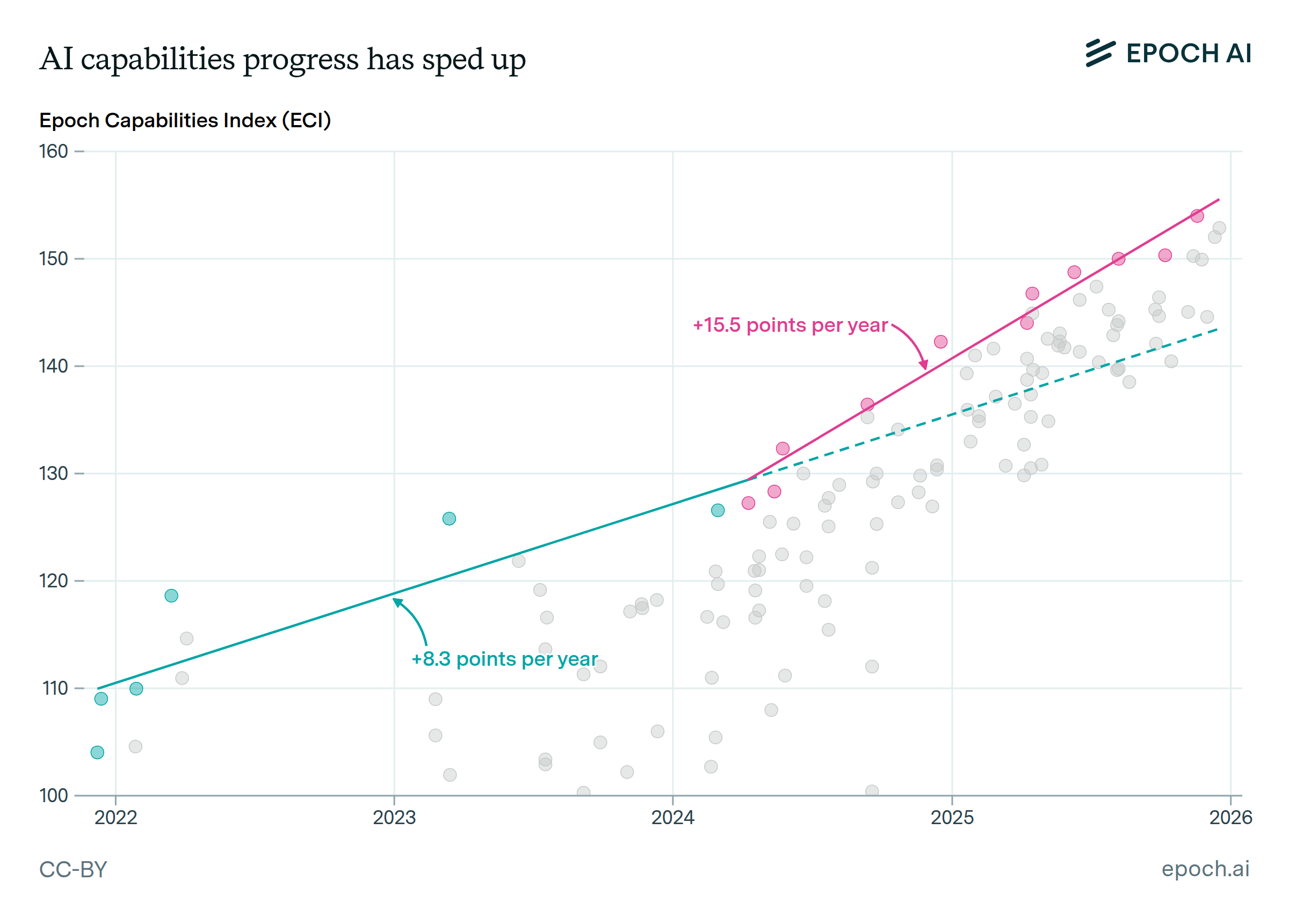

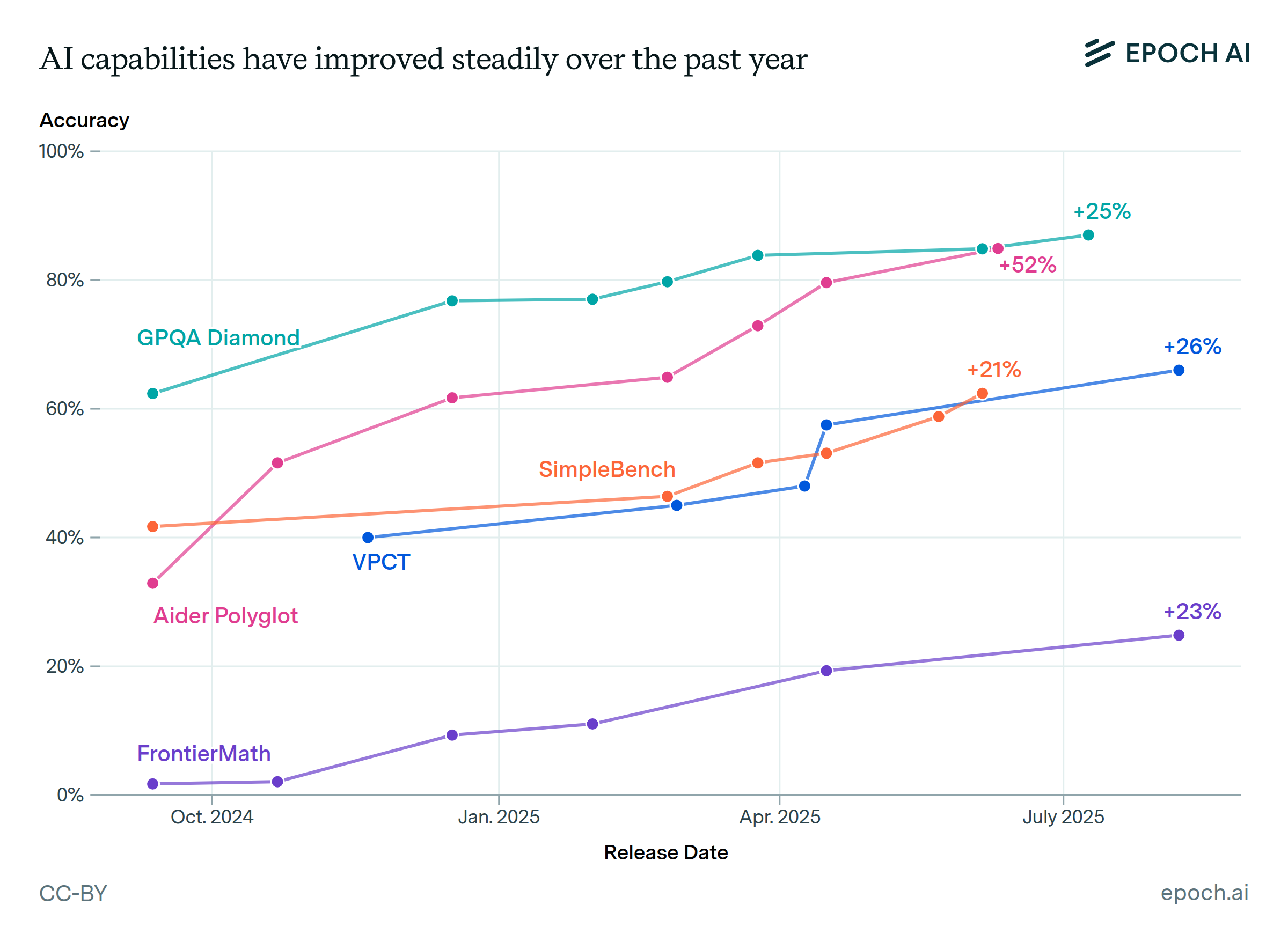

AI capabilities progress has sped up

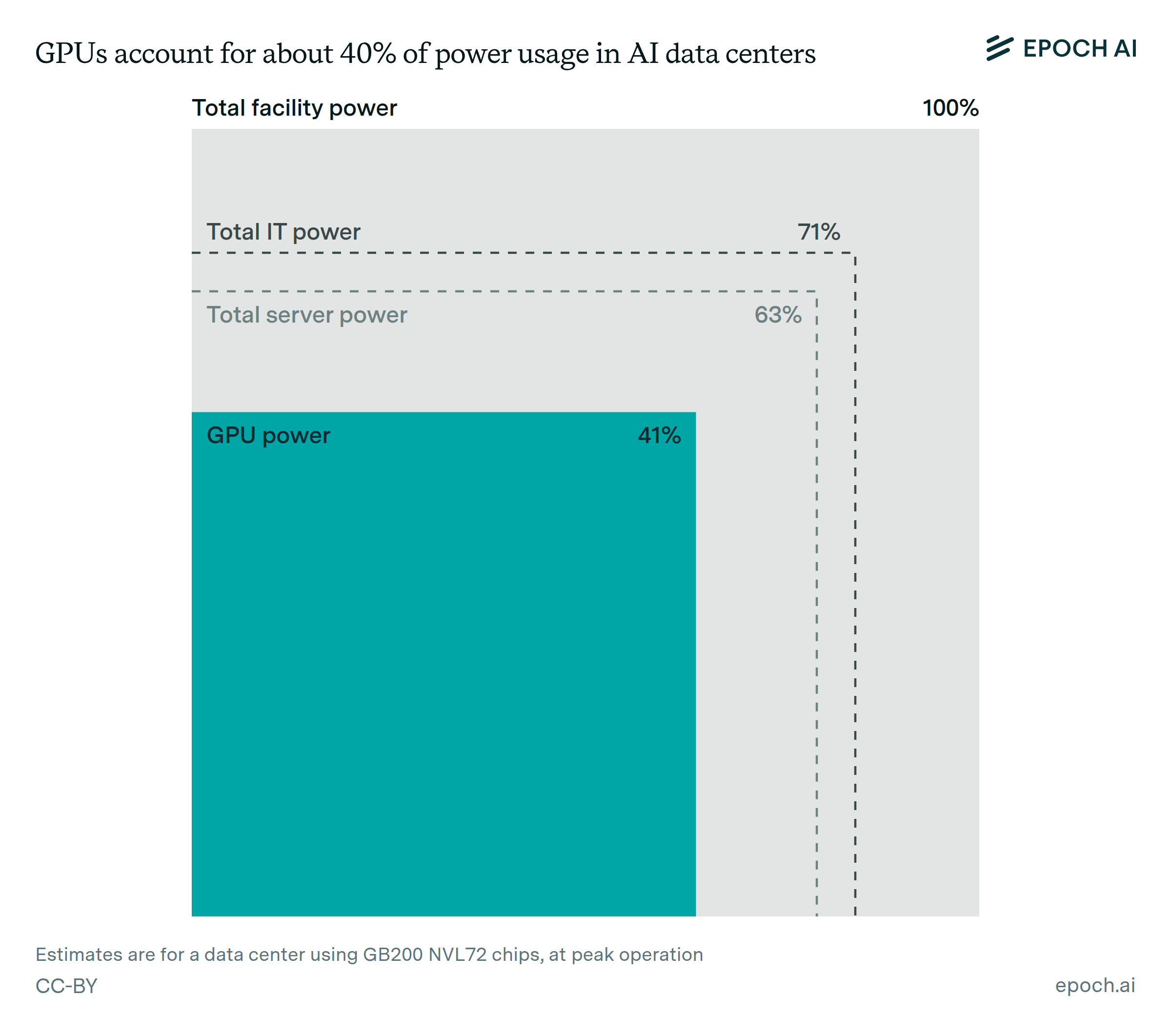

GPUs account for about 40% of power usage in AI data centers

NVIDIA’s B200 costs around $6,400 to produce, with memory accounting for half

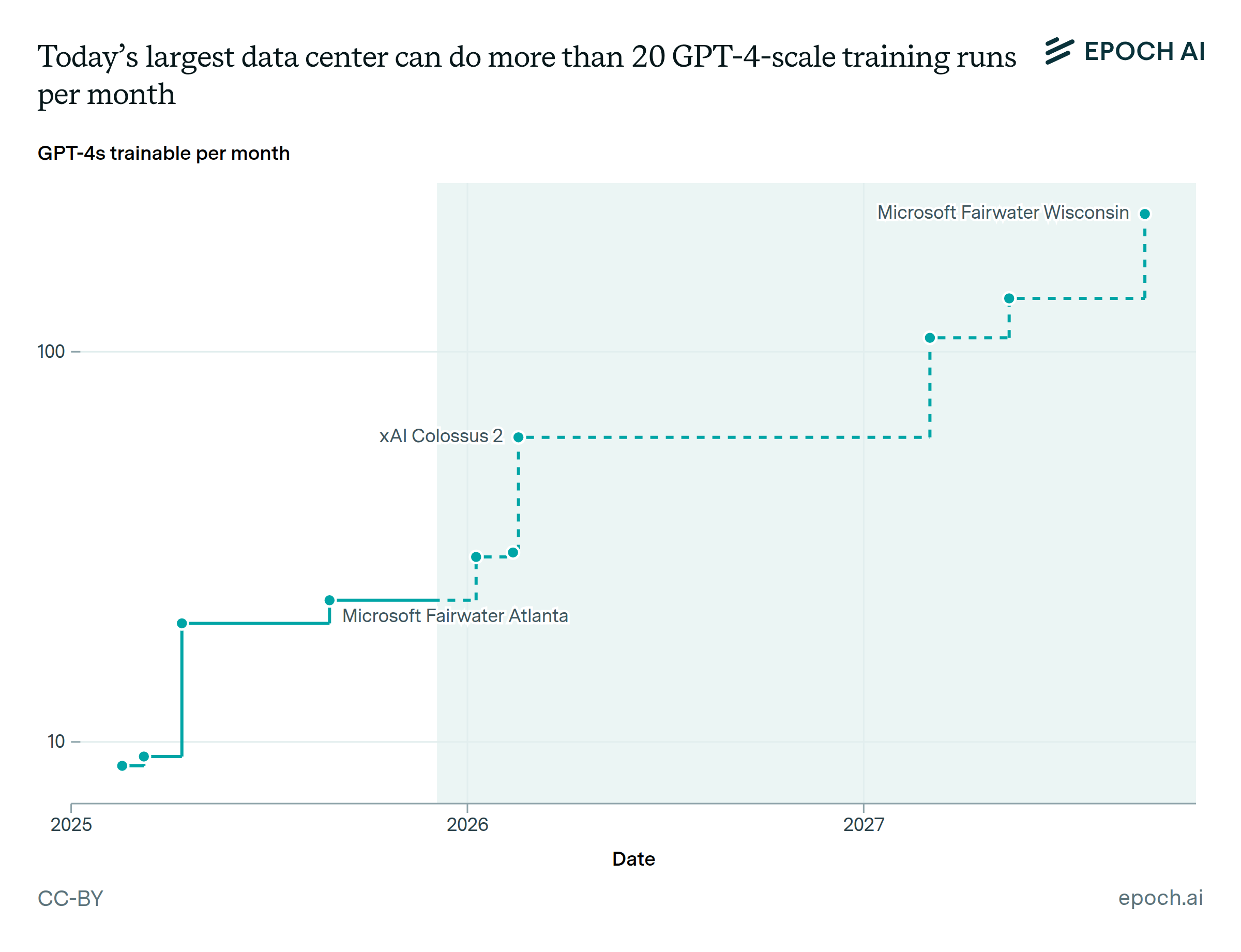

Today’s largest data center can do more than 20 GPT-4-scale training runs each month

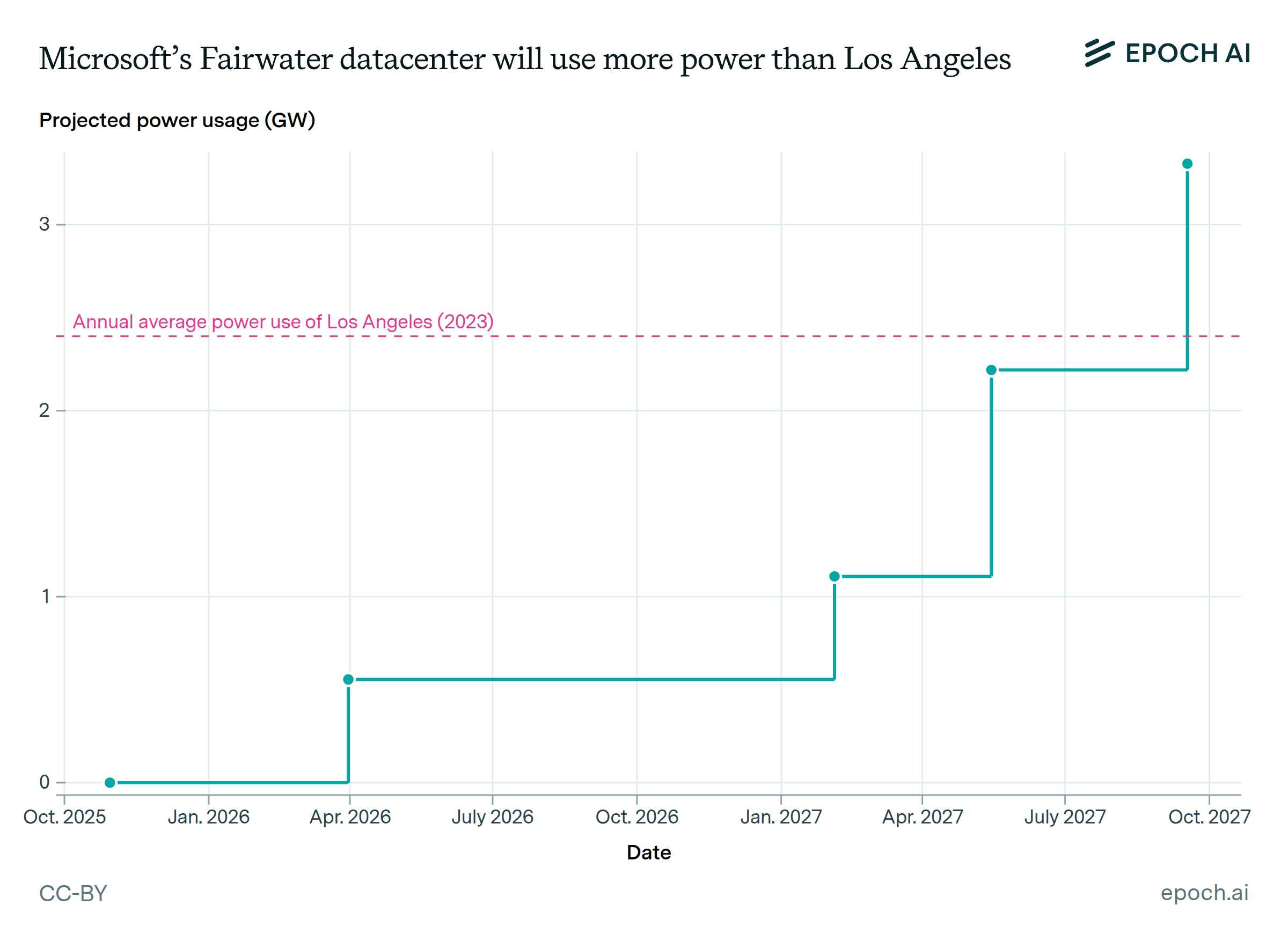

Microsoft’s Fairwater datacenter will use more power than Los Angeles

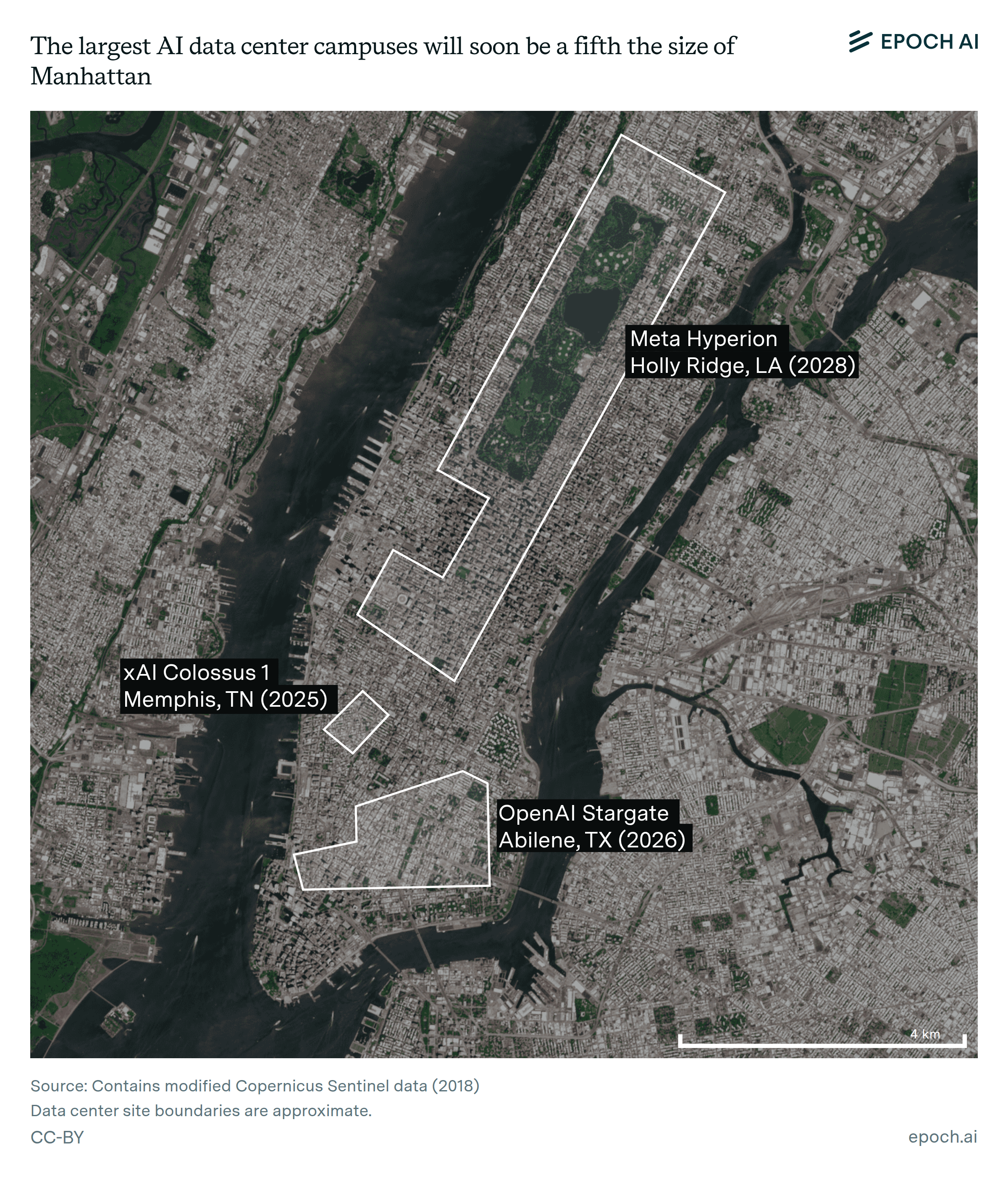

The largest AI data center campuses will soon be a fifth the size of Manhattan

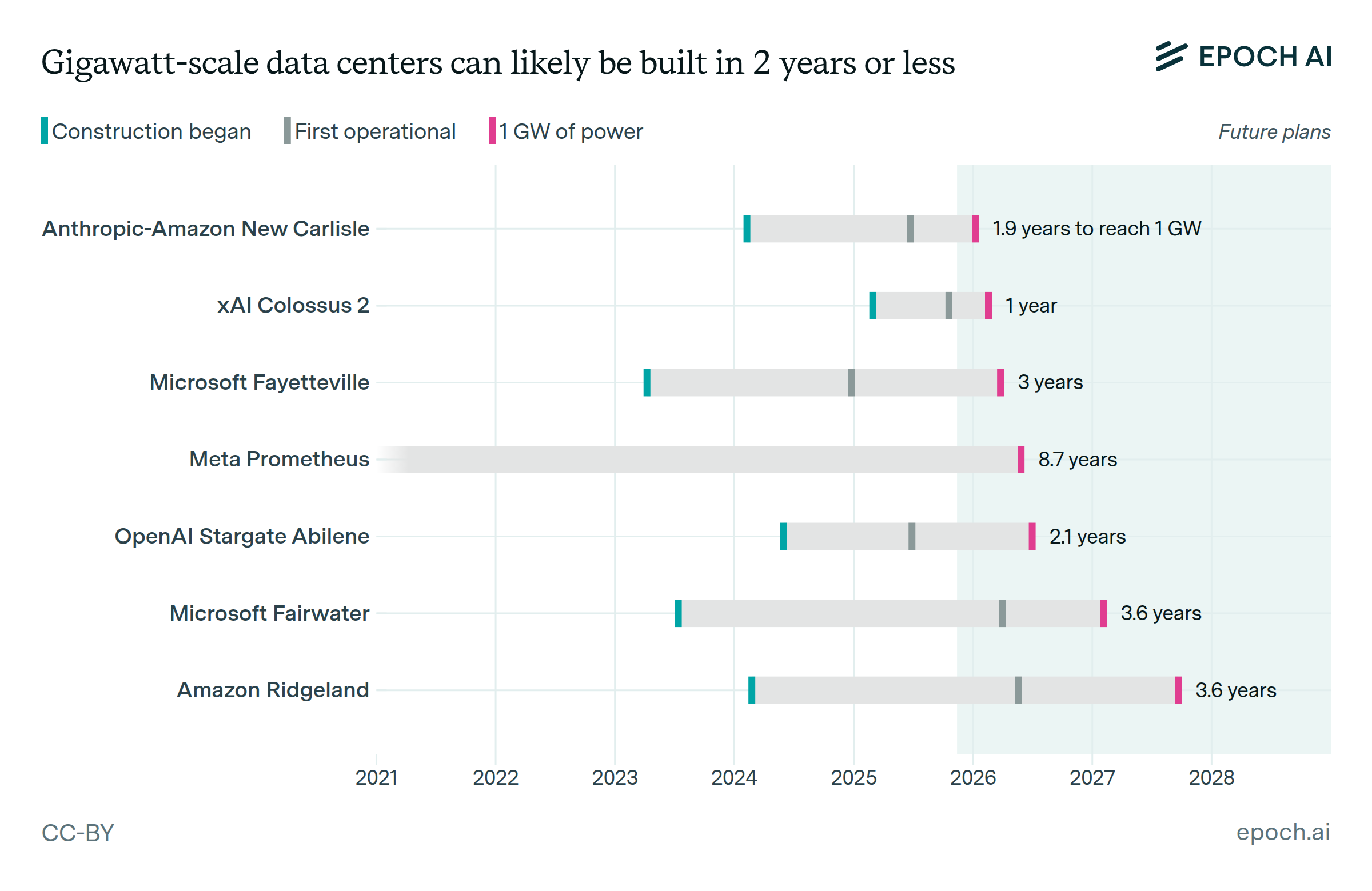

Build times for gigawatt-scale data centers can be 2 years or less

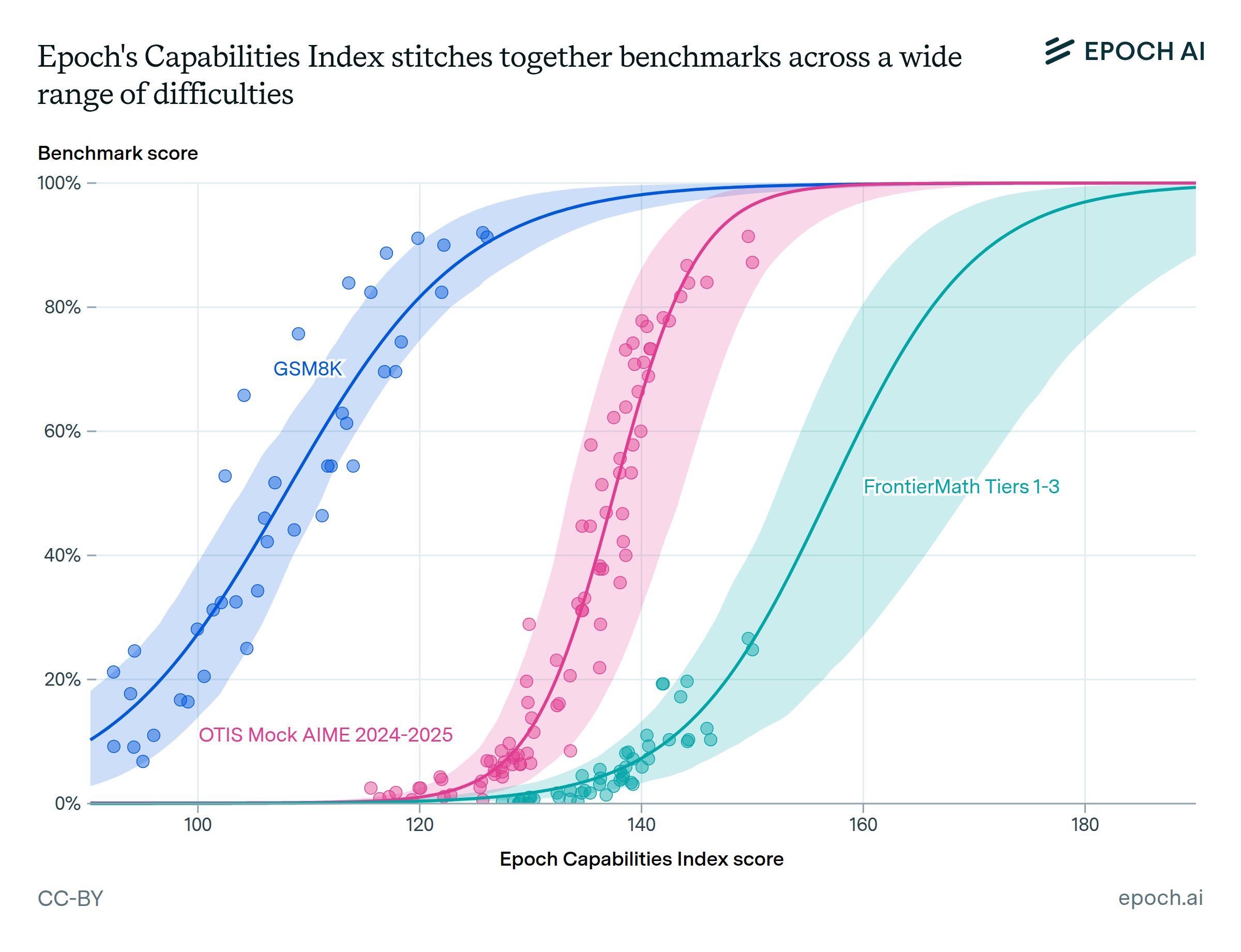

Epoch’s Capabilities Index stitches together benchmarks across a wide range of difficulties

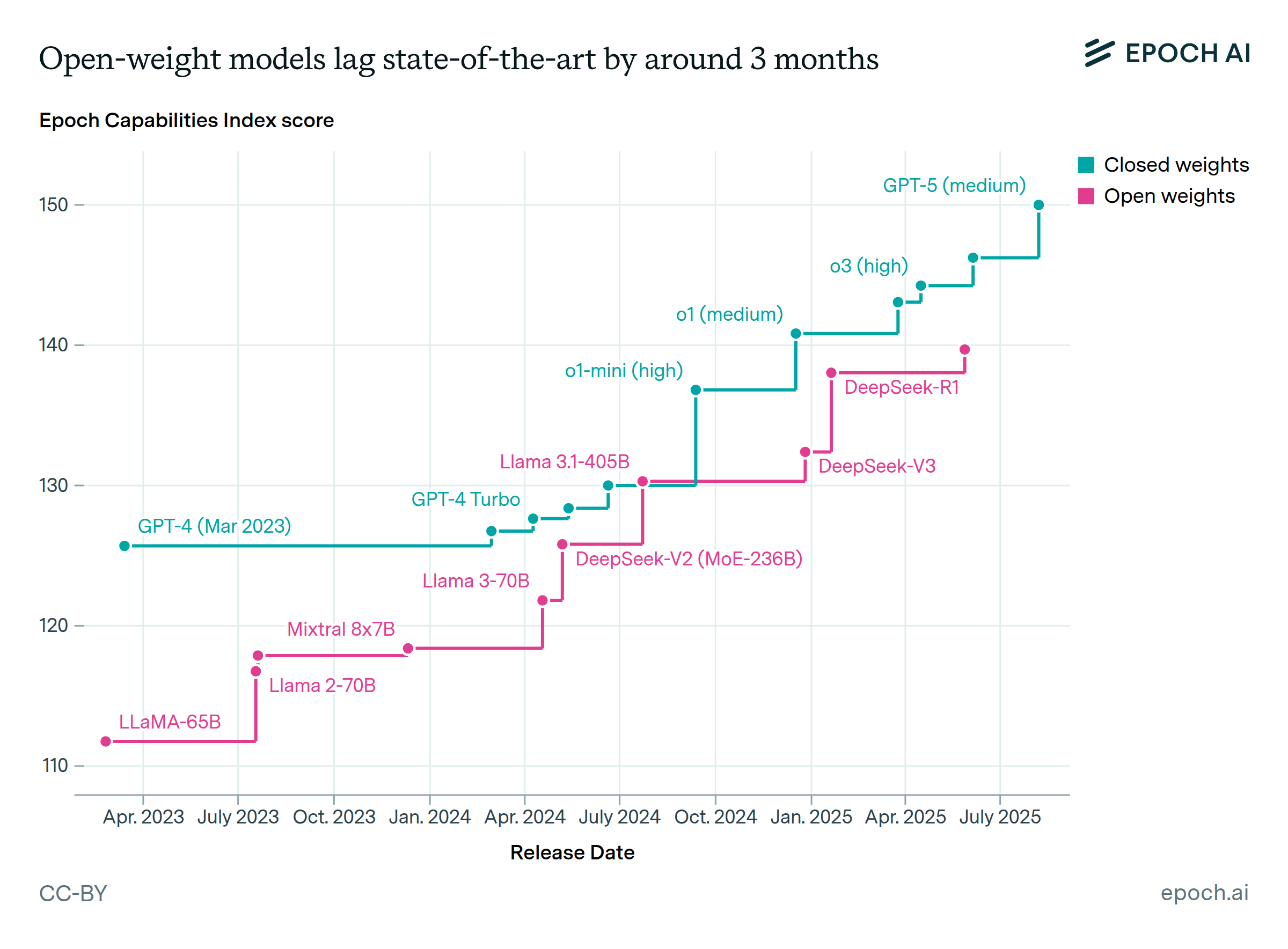

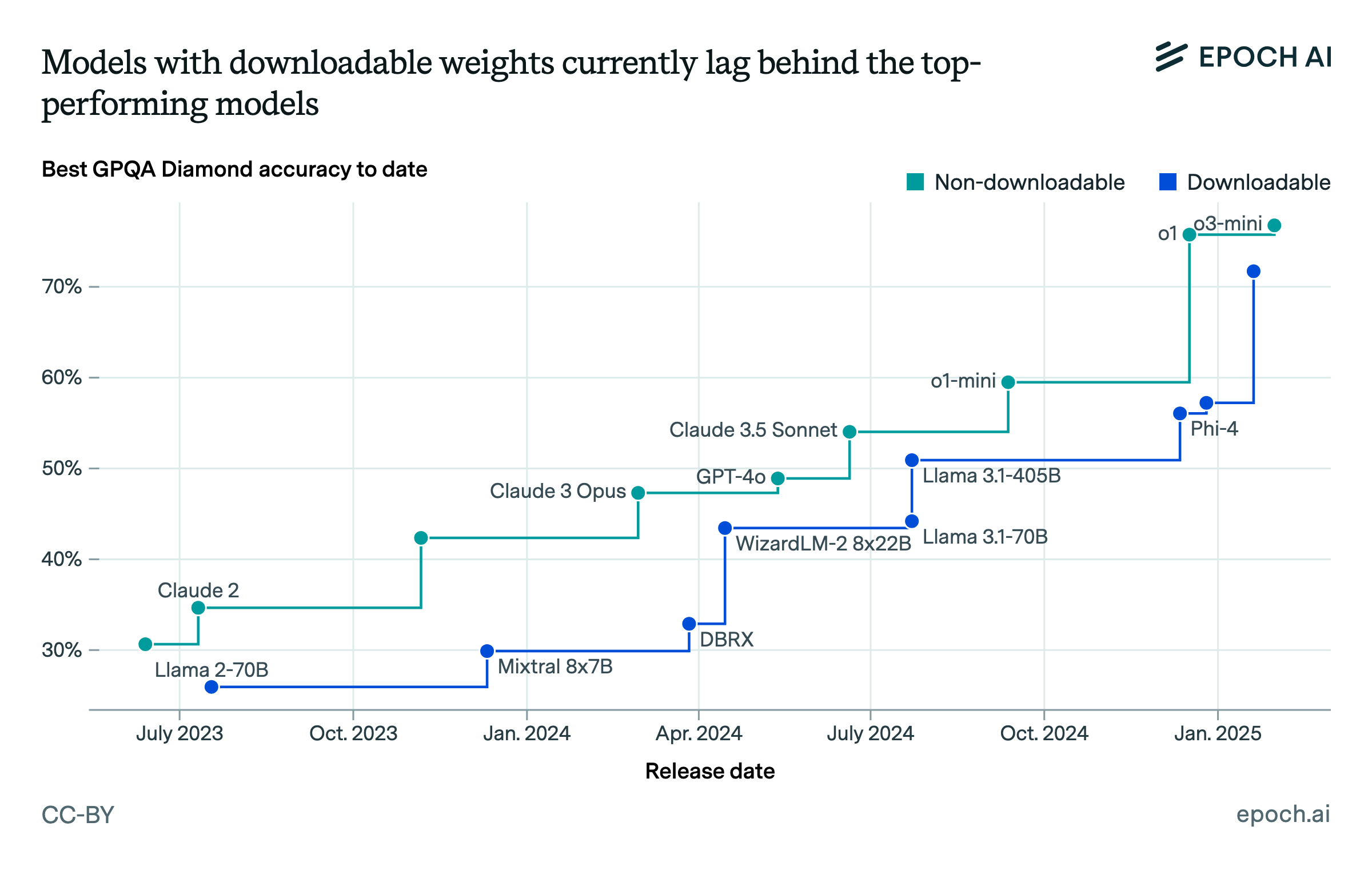

Open-weight models lag state-of-the-art by around 3 months on average

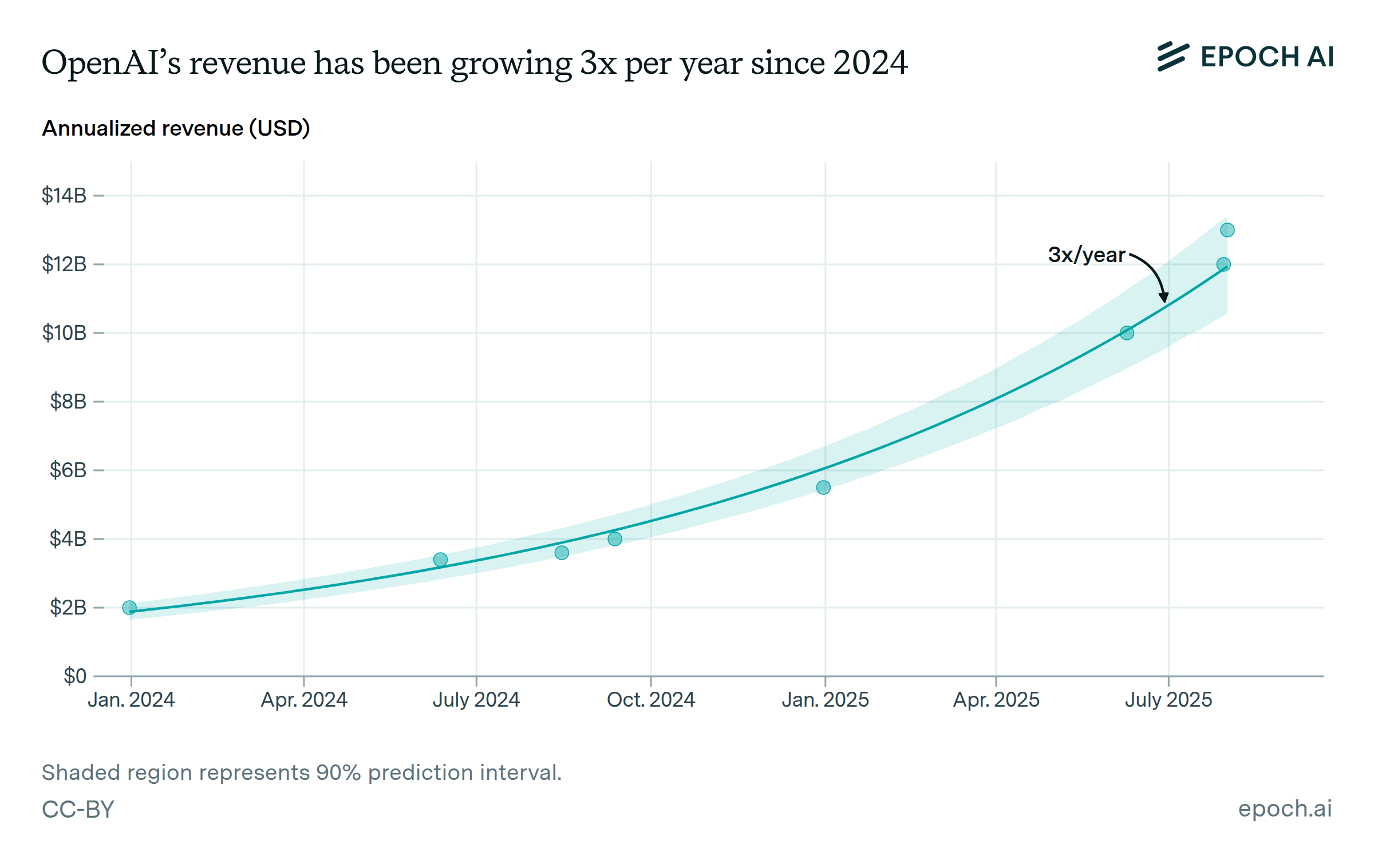

OpenAI’s revenue has been growing 3x a year since 2024

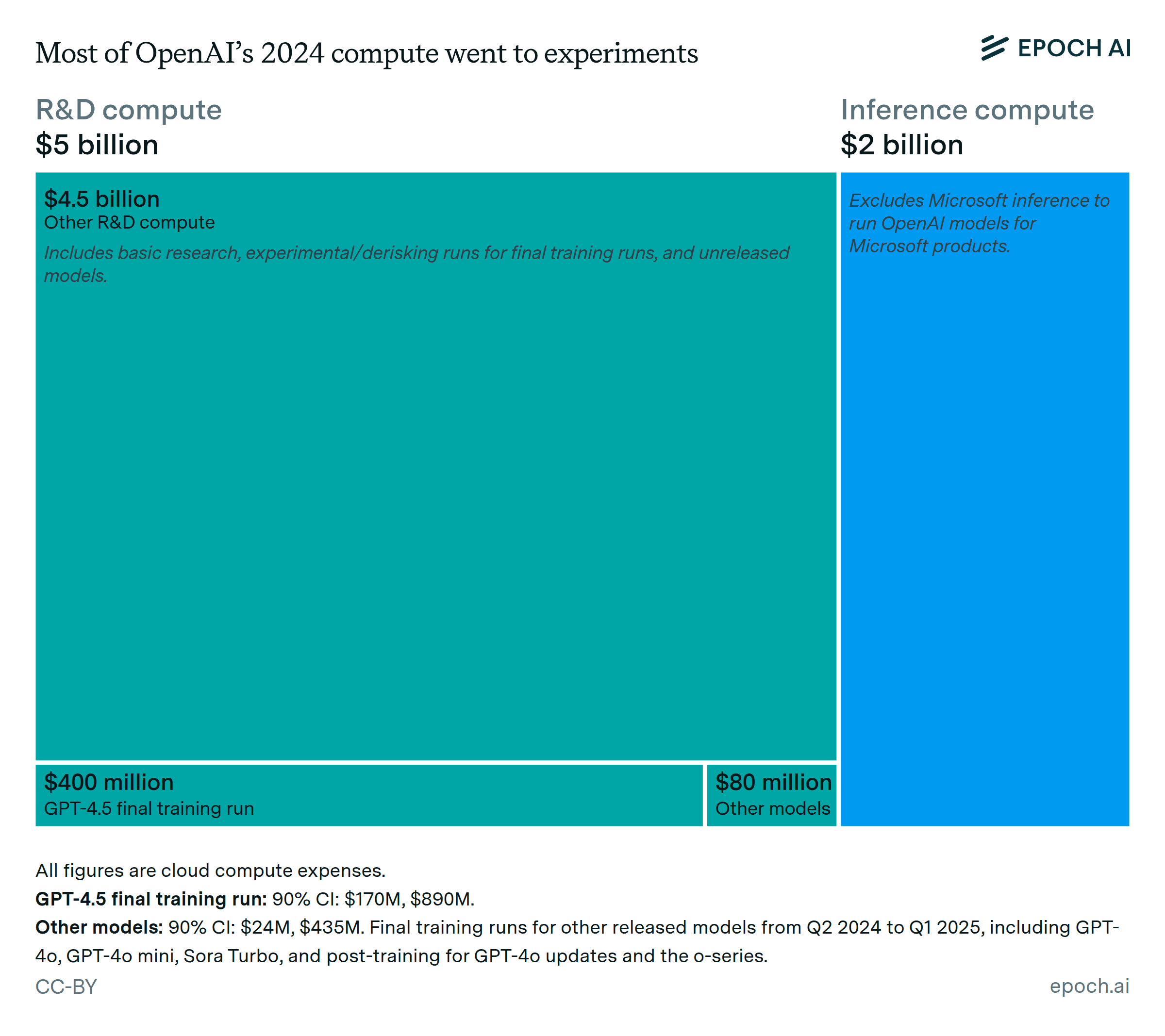

Most of OpenAI’s 2024 compute went to experiments

AI capabilities have steadily improved over the past year

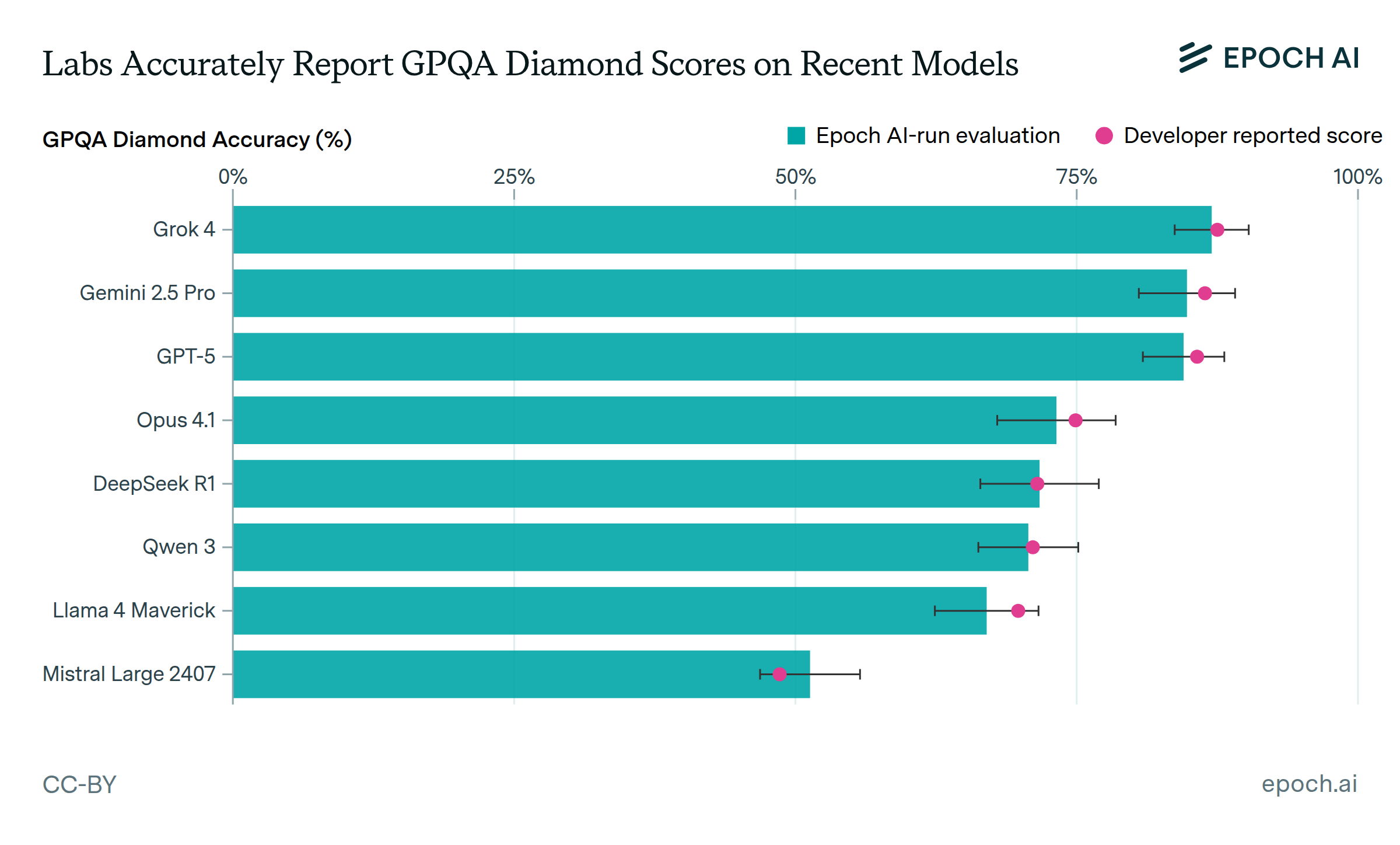

AI developers accurately report GPQA Diamond scores for recent models

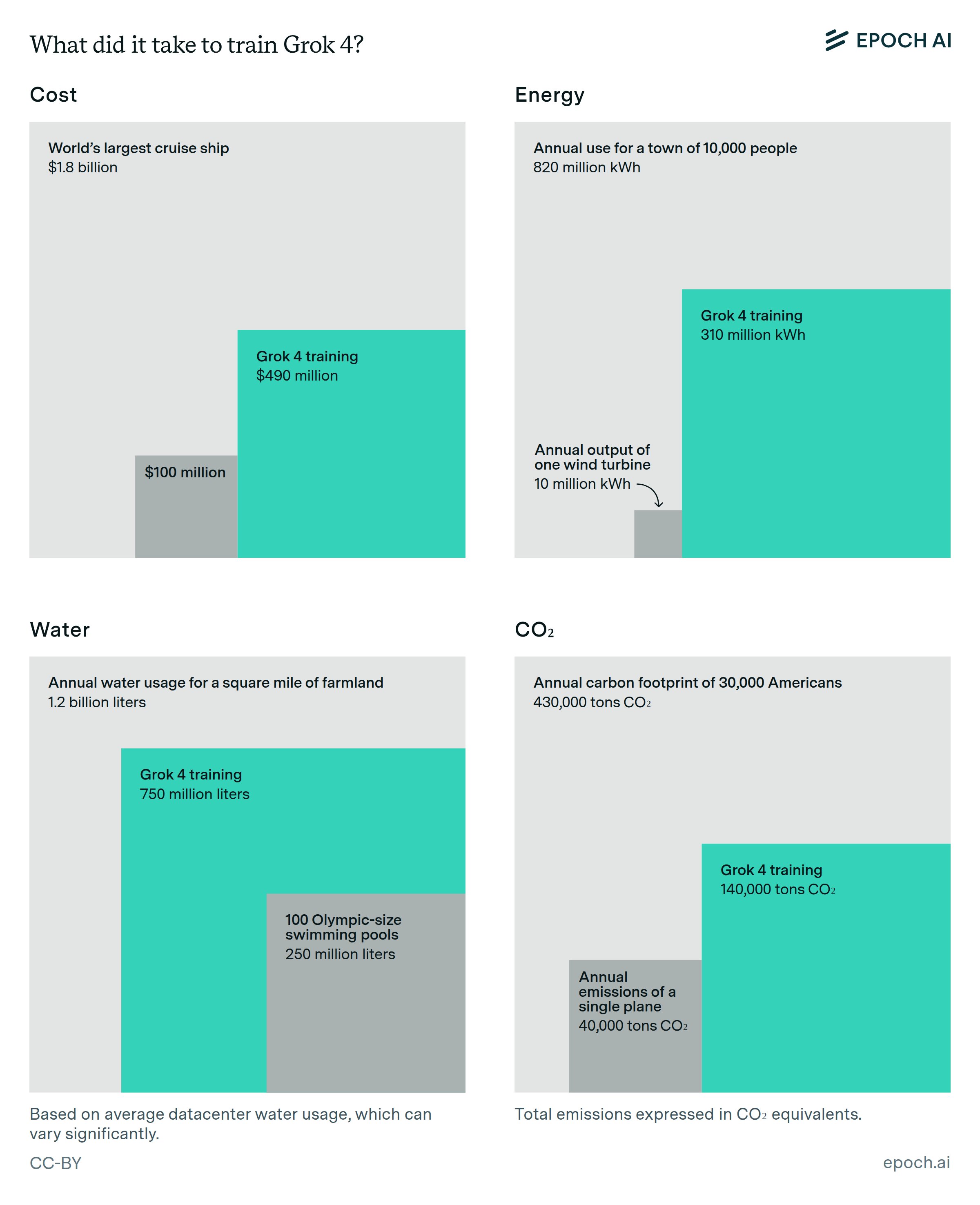

What did it take to train Grok 4?

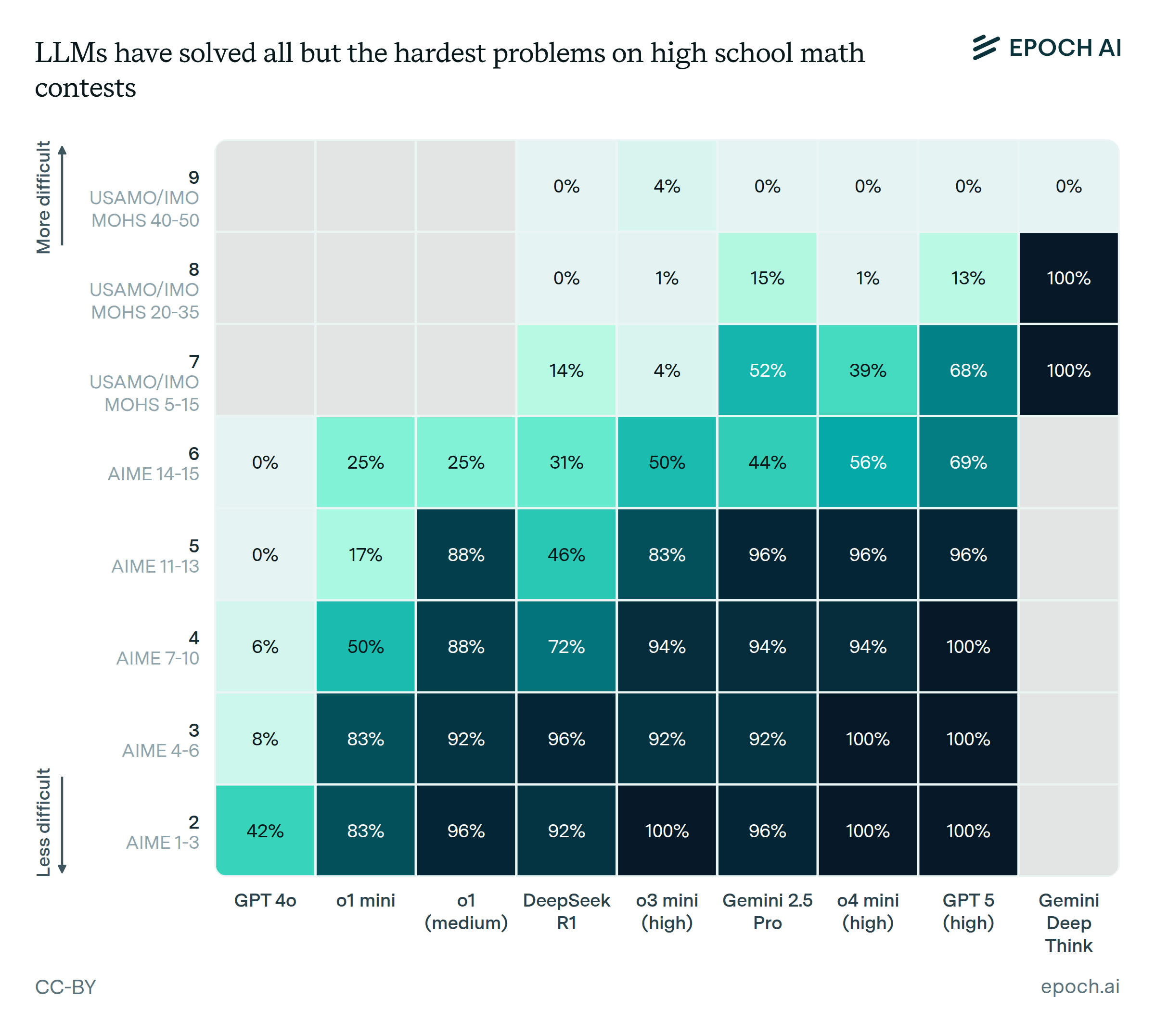

LLMs have not yet solved the hardest problems on high school math contests

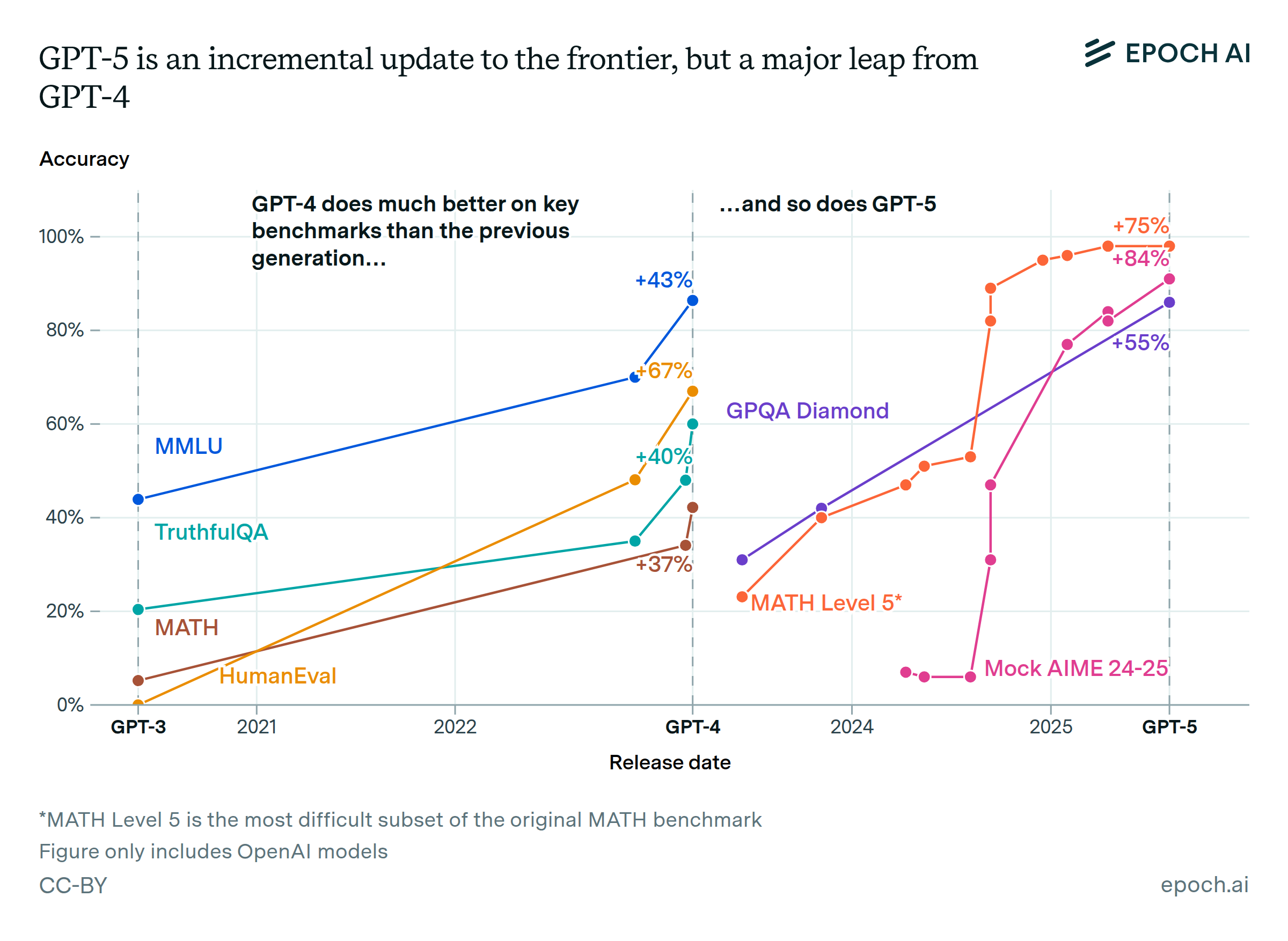

GPT-5 and GPT-4 were both major leaps in benchmarks from the previous generation

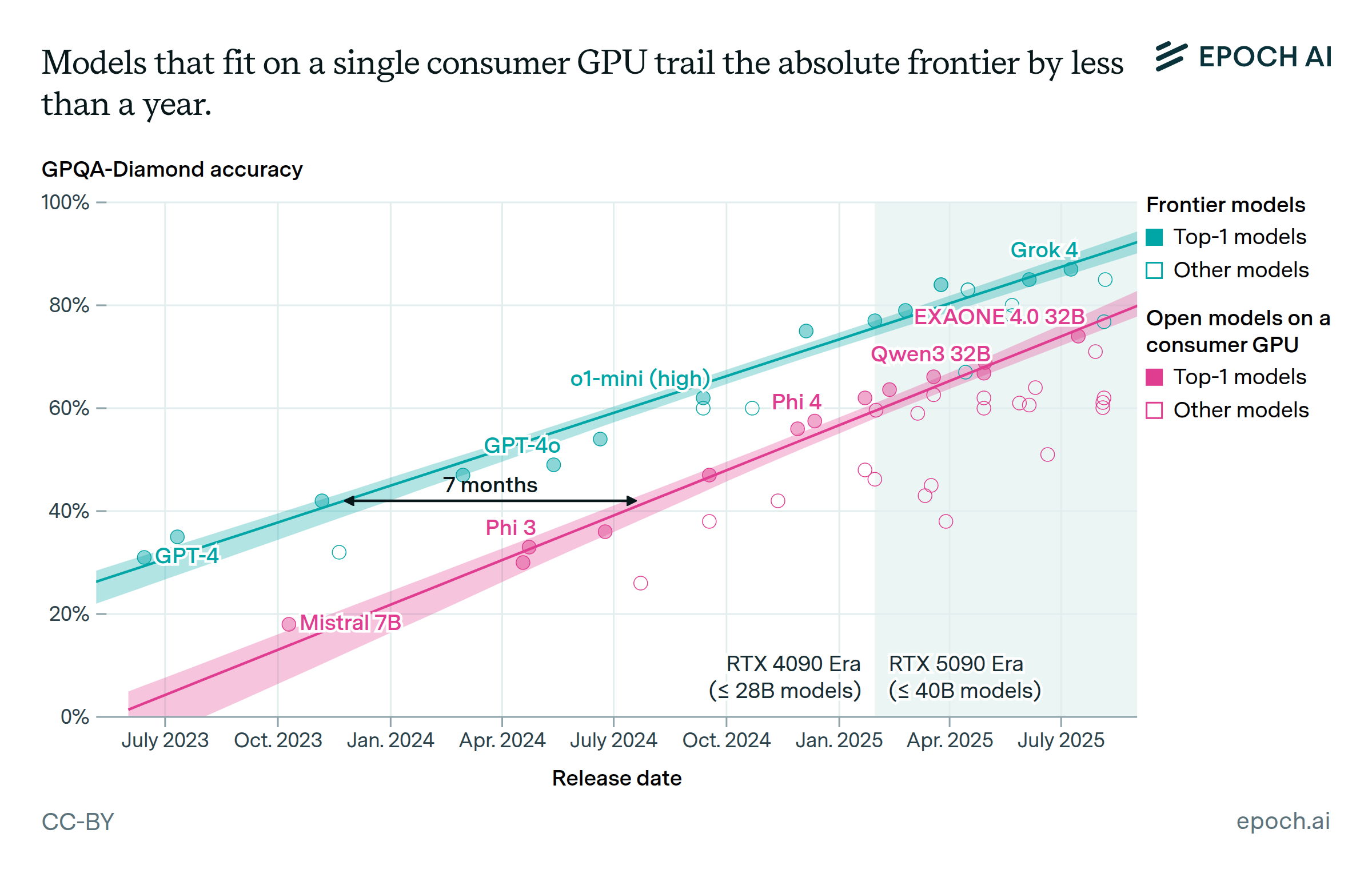

Frontier AI performance becomes accessible on consumer hardware within a year

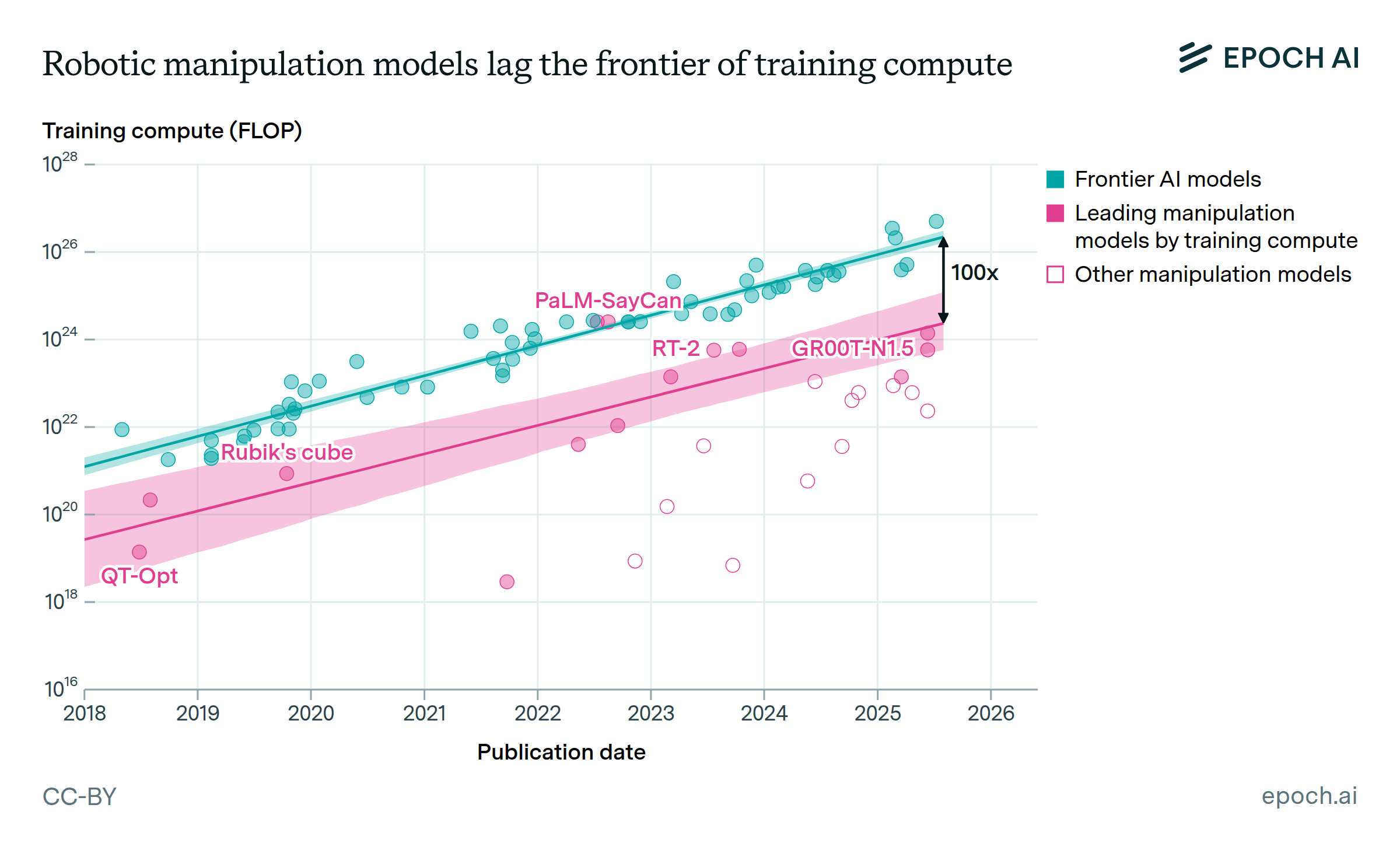

Compute is not a bottleneck for robotic manipulation

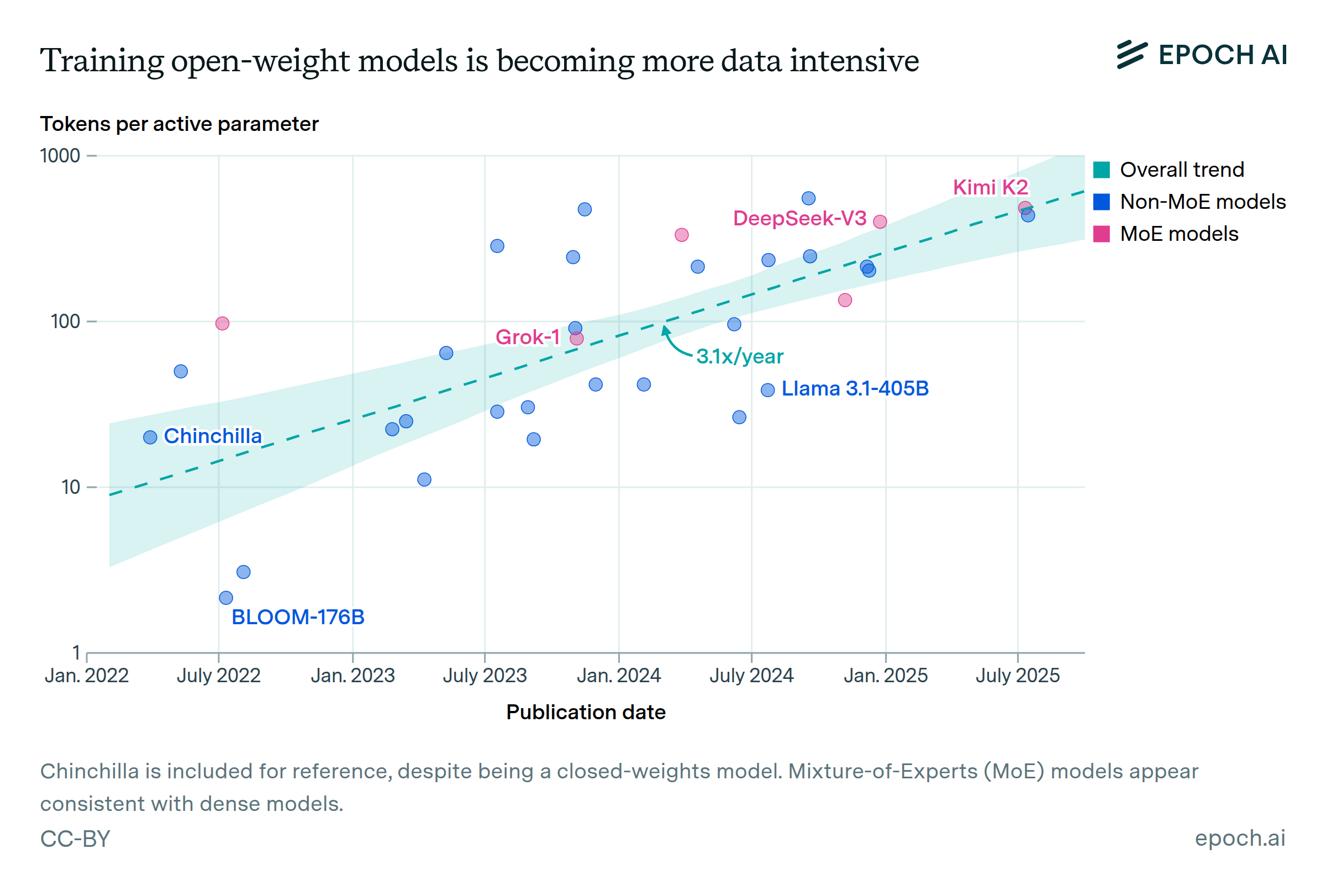

Training open-weight models is becoming more data intensive

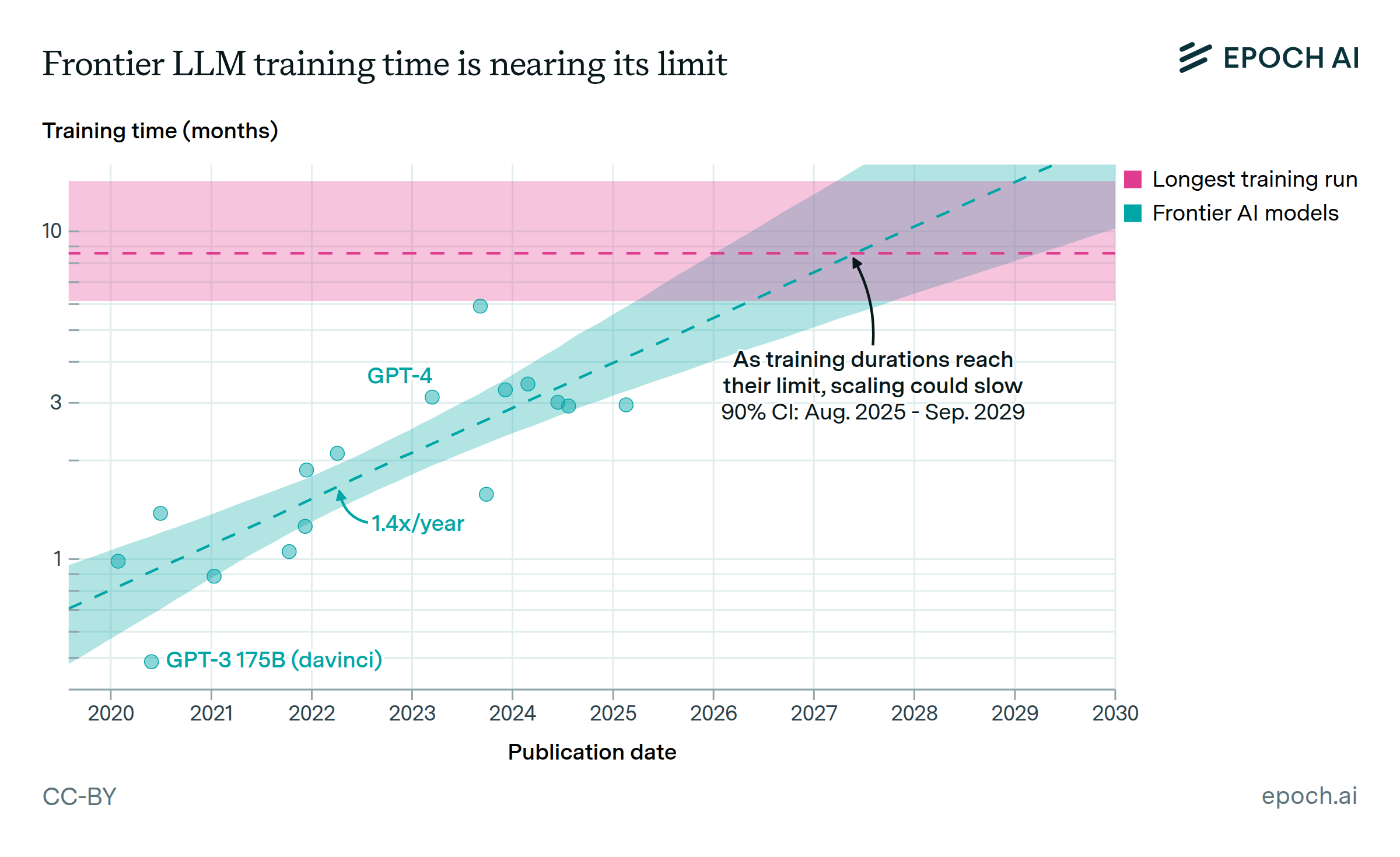

Frontier training runs will likely stop getting longer by around 2027

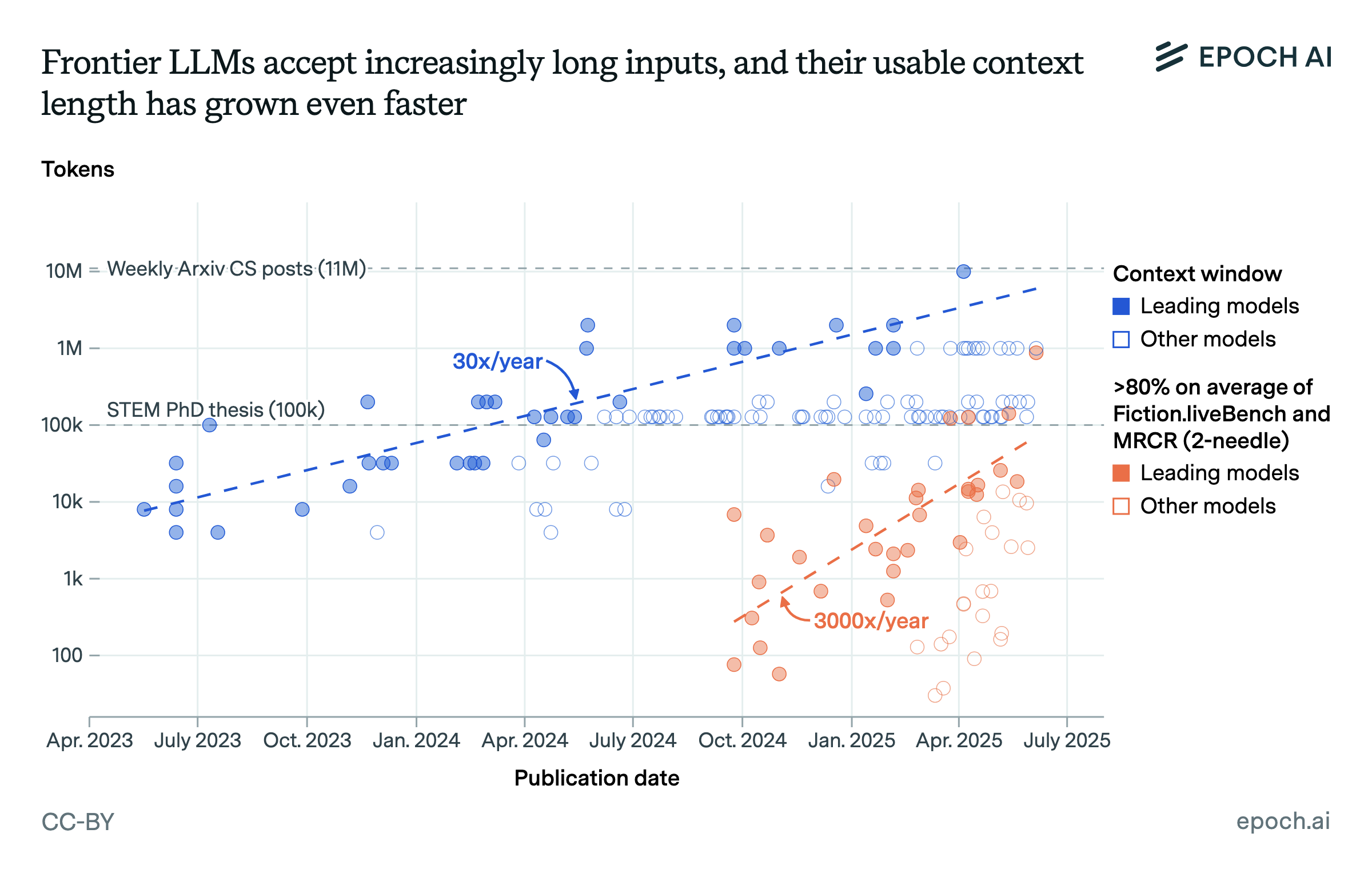

LLMs now accept longer inputs, and the best models can use them more effectively

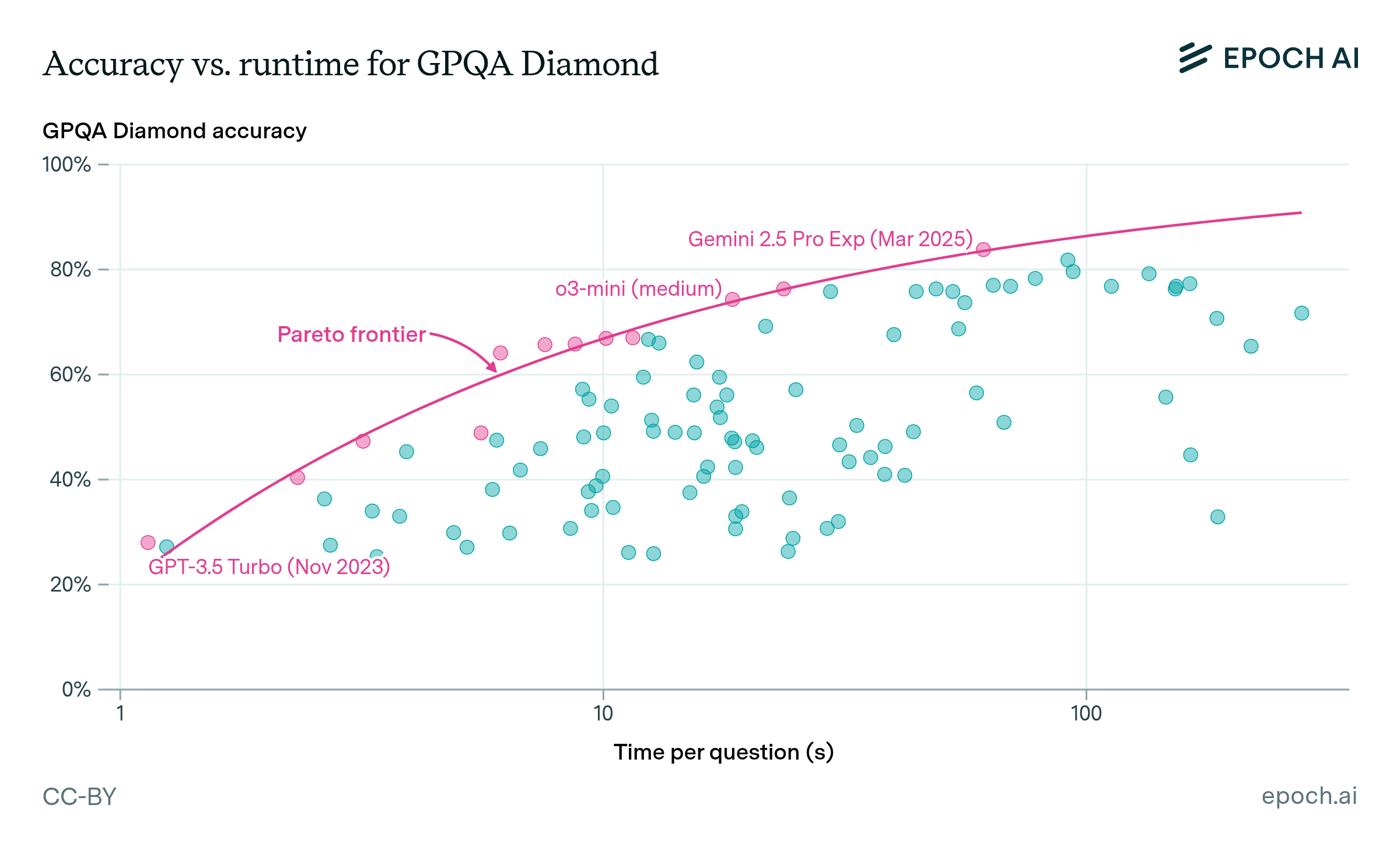

LLM providers offer a trade-off between accuracy and speed

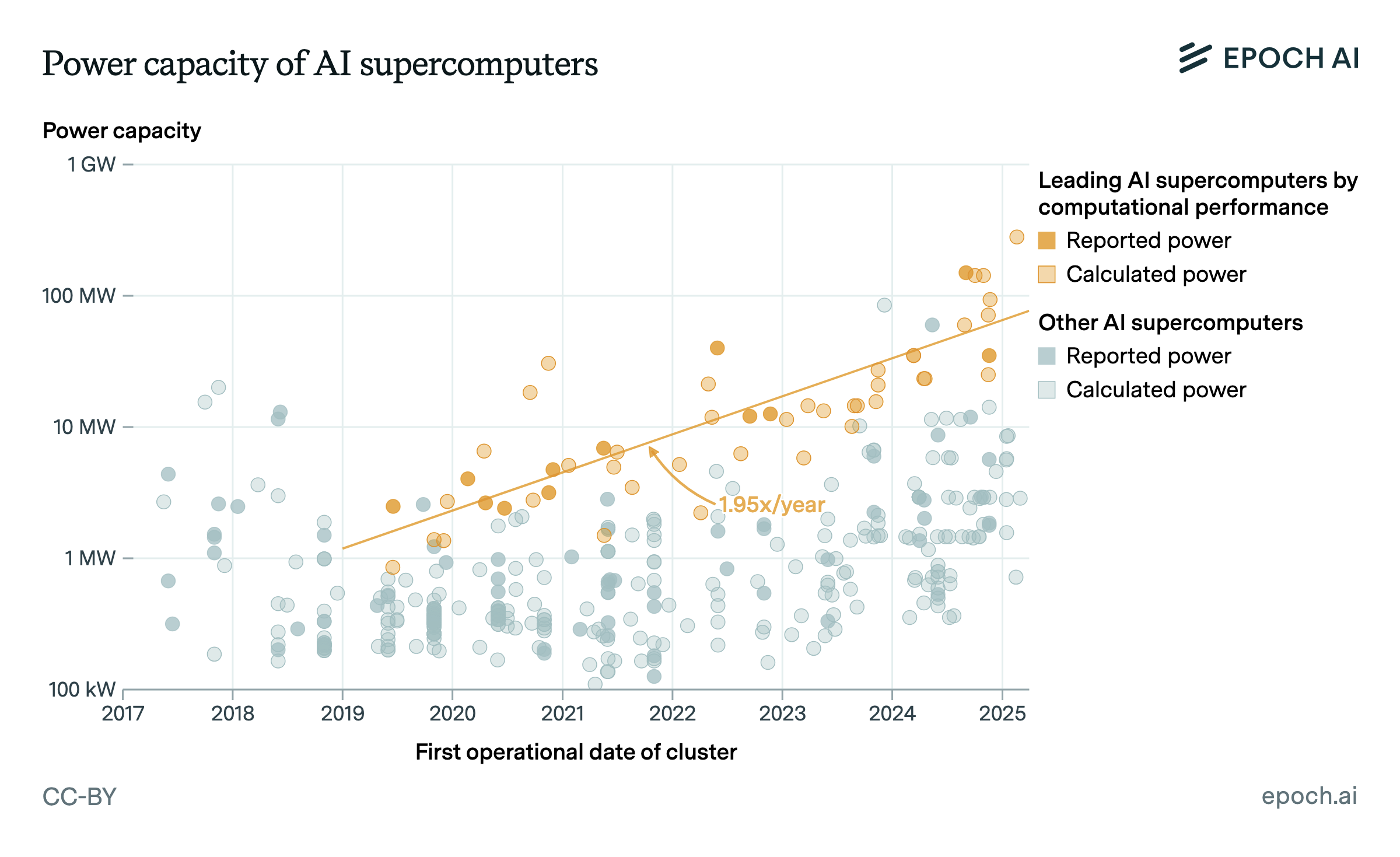

Power requirements of leading AI supercomputers have doubled every 13 months

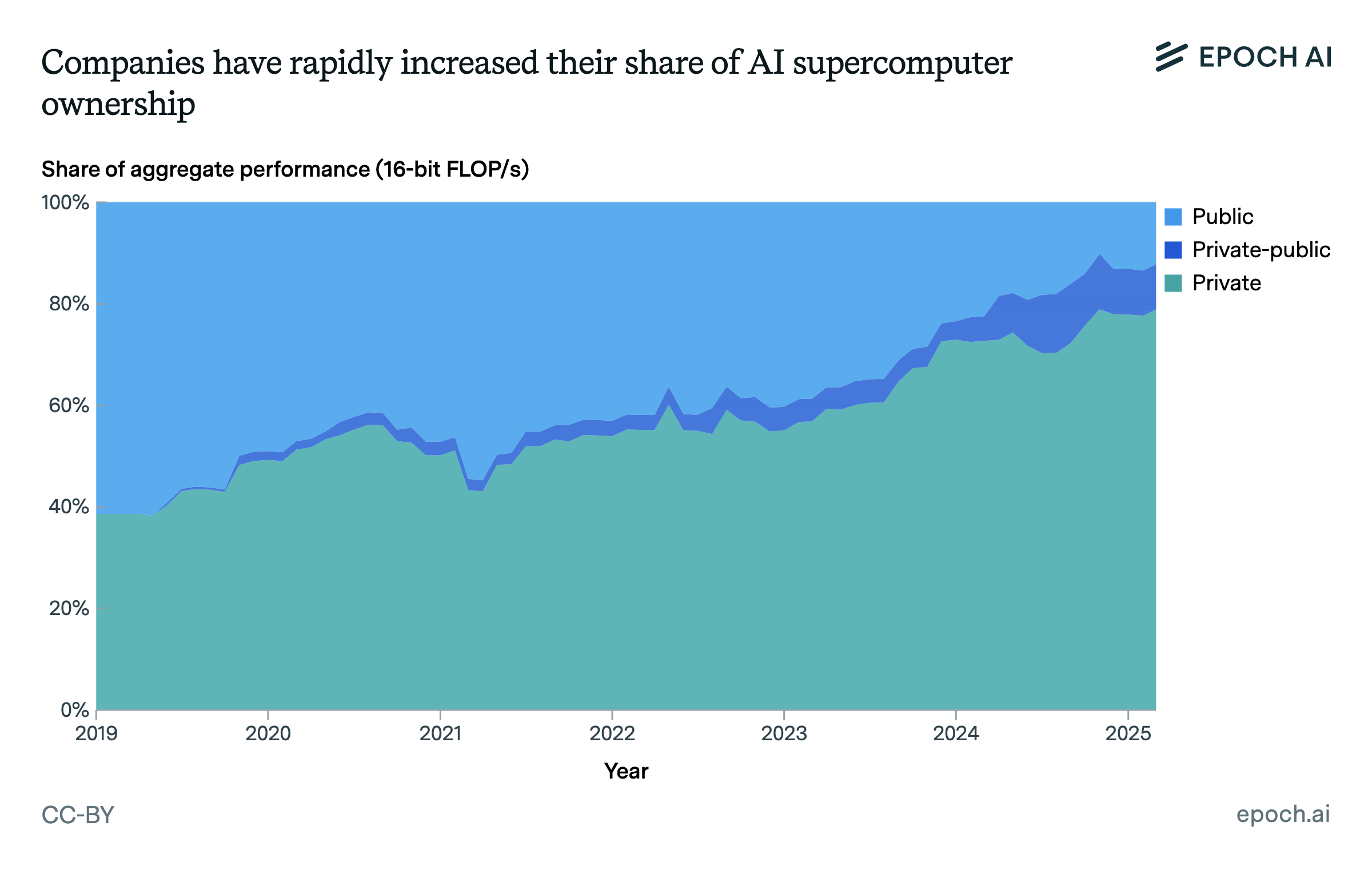

Private-sector companies own a dominant share of GPU clusters

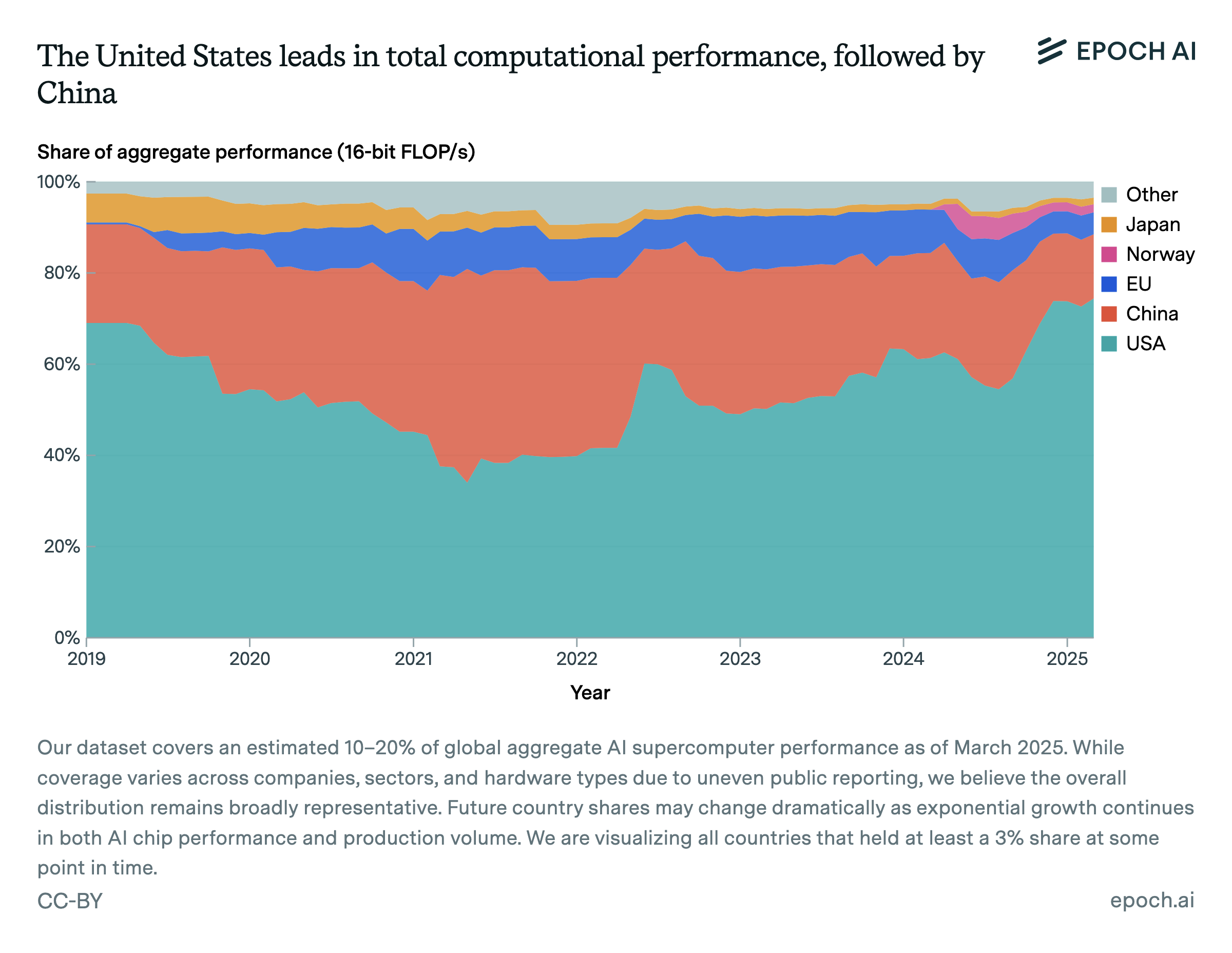

The US hosts the majority of GPU cluster performance, followed by China

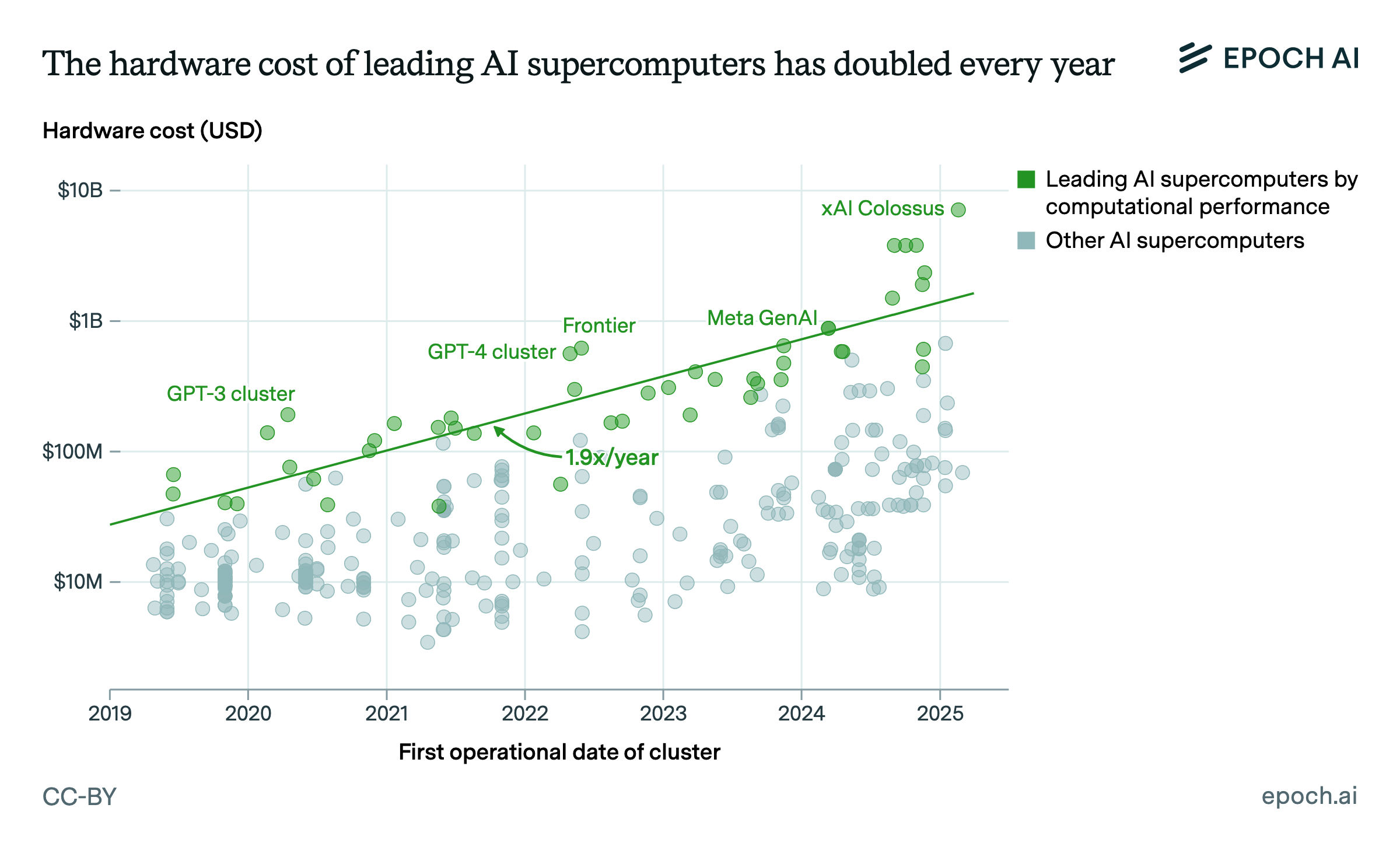

Acquisition costs of leading AI supercomputers have doubled every 13 months

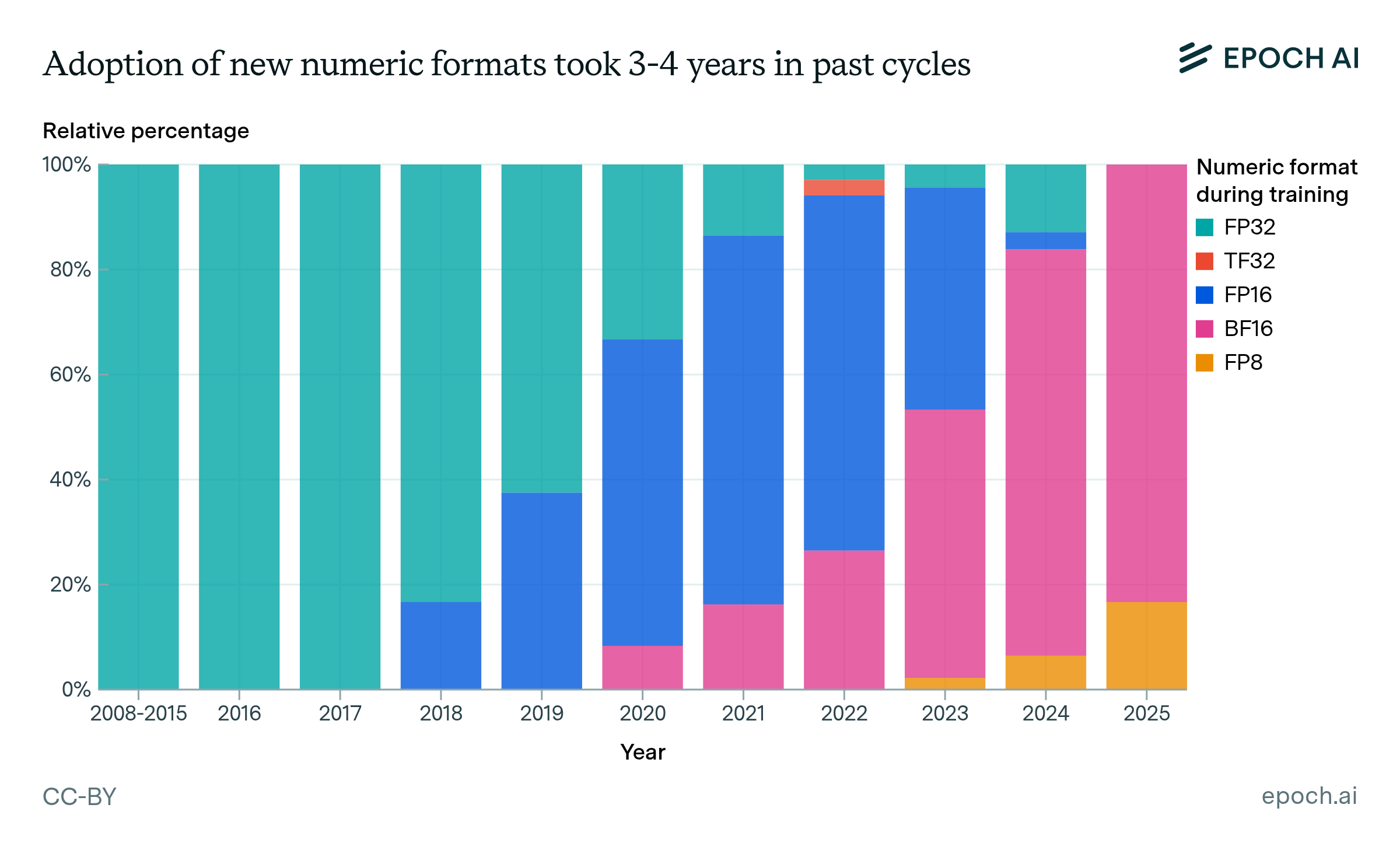

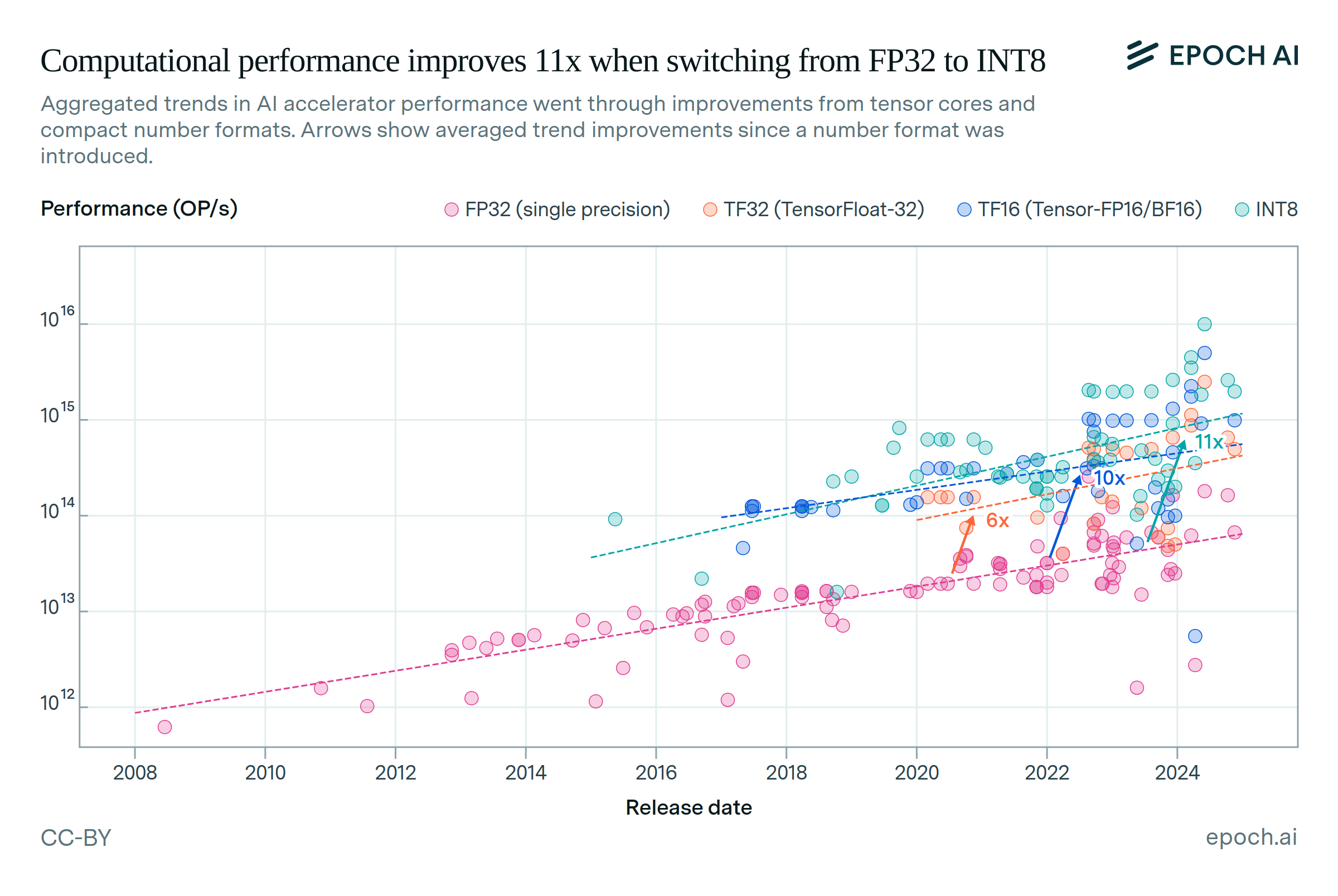

Widespread adoption of new numeric formats took 3-4 years in past cycles

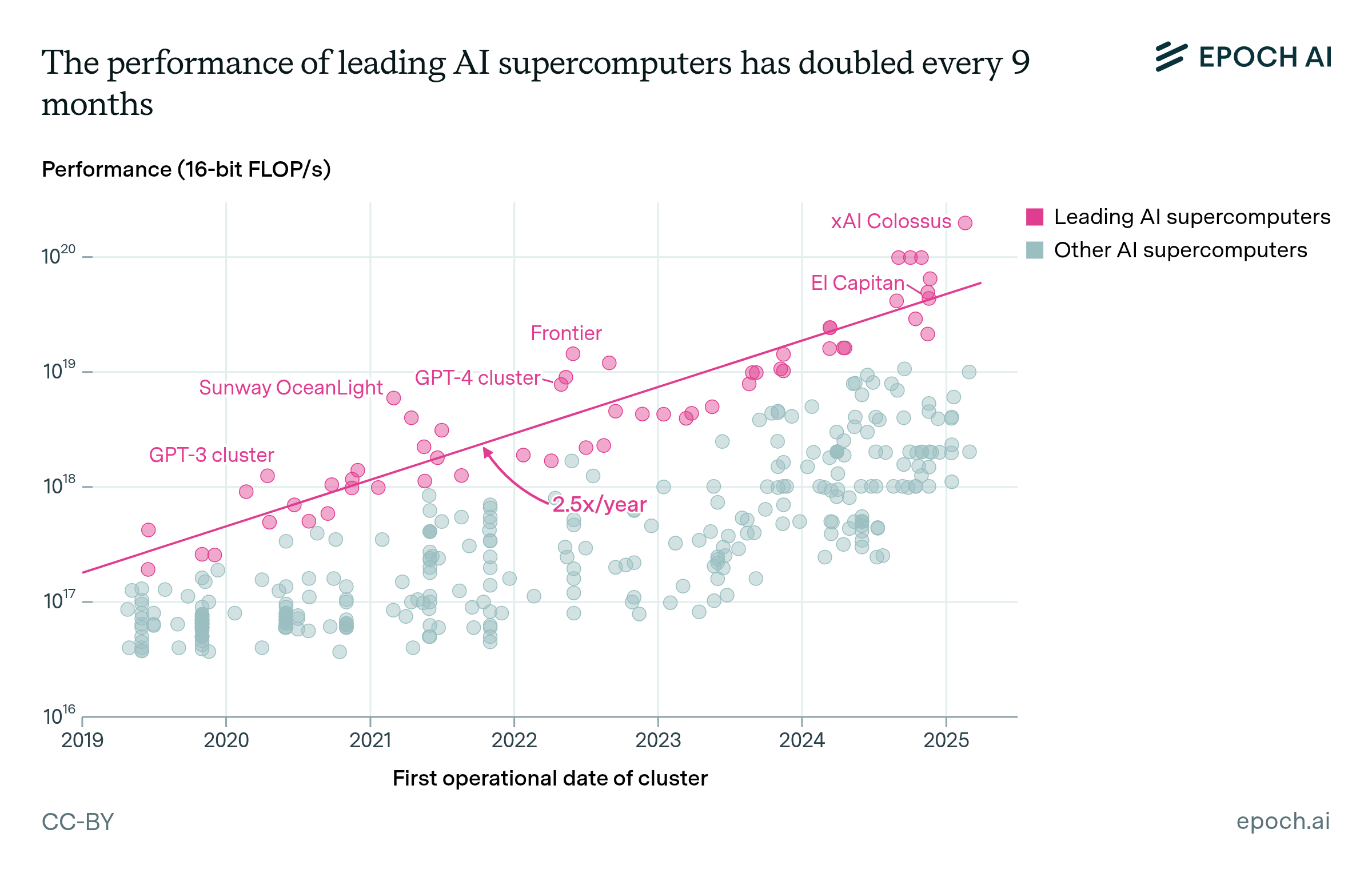

The computational performance of leading AI supercomputers has doubled every nine months

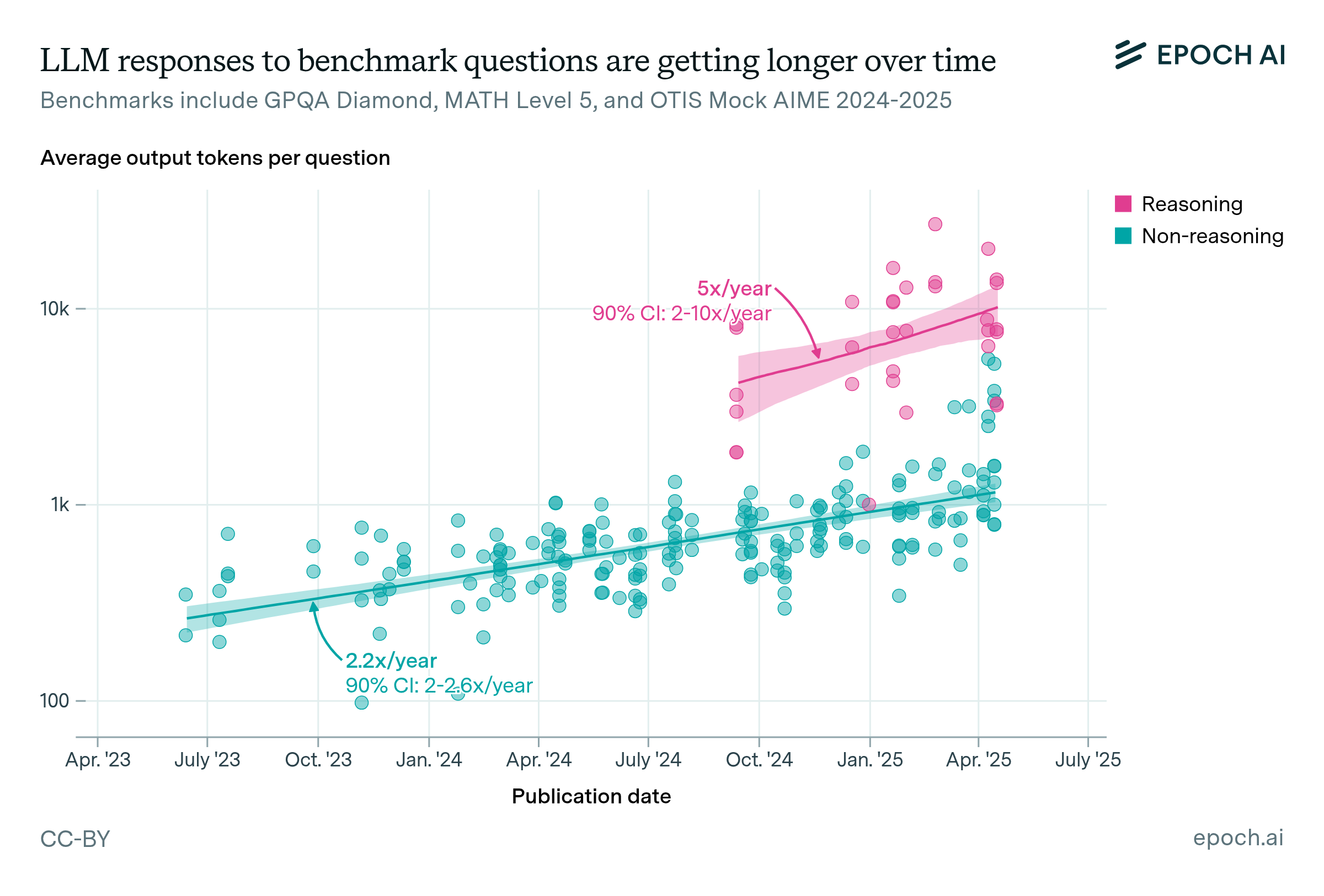

LLM responses to benchmark questions are getting longer over time

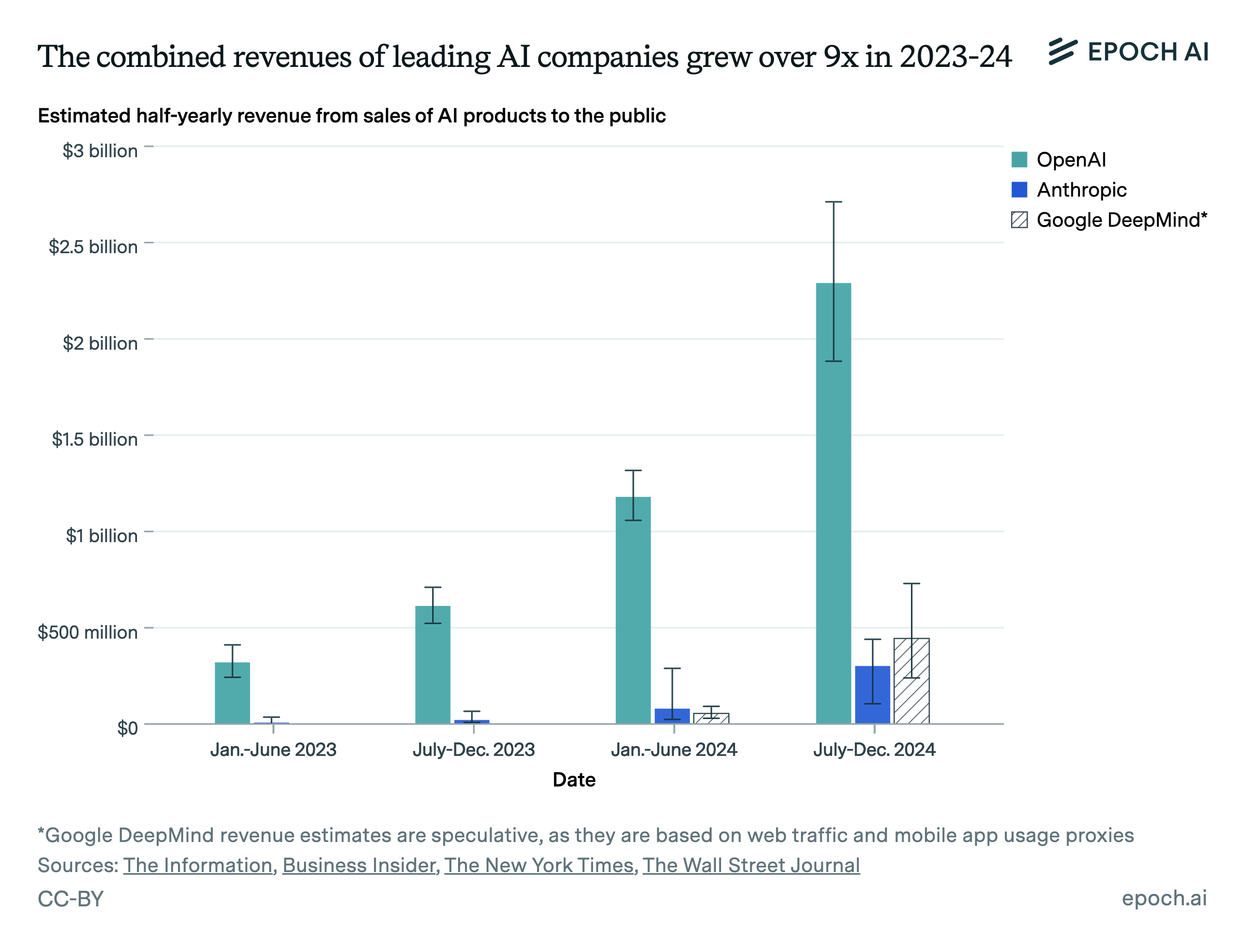

The combined revenues of leading AI companies grew by over 9x in 2023-2024

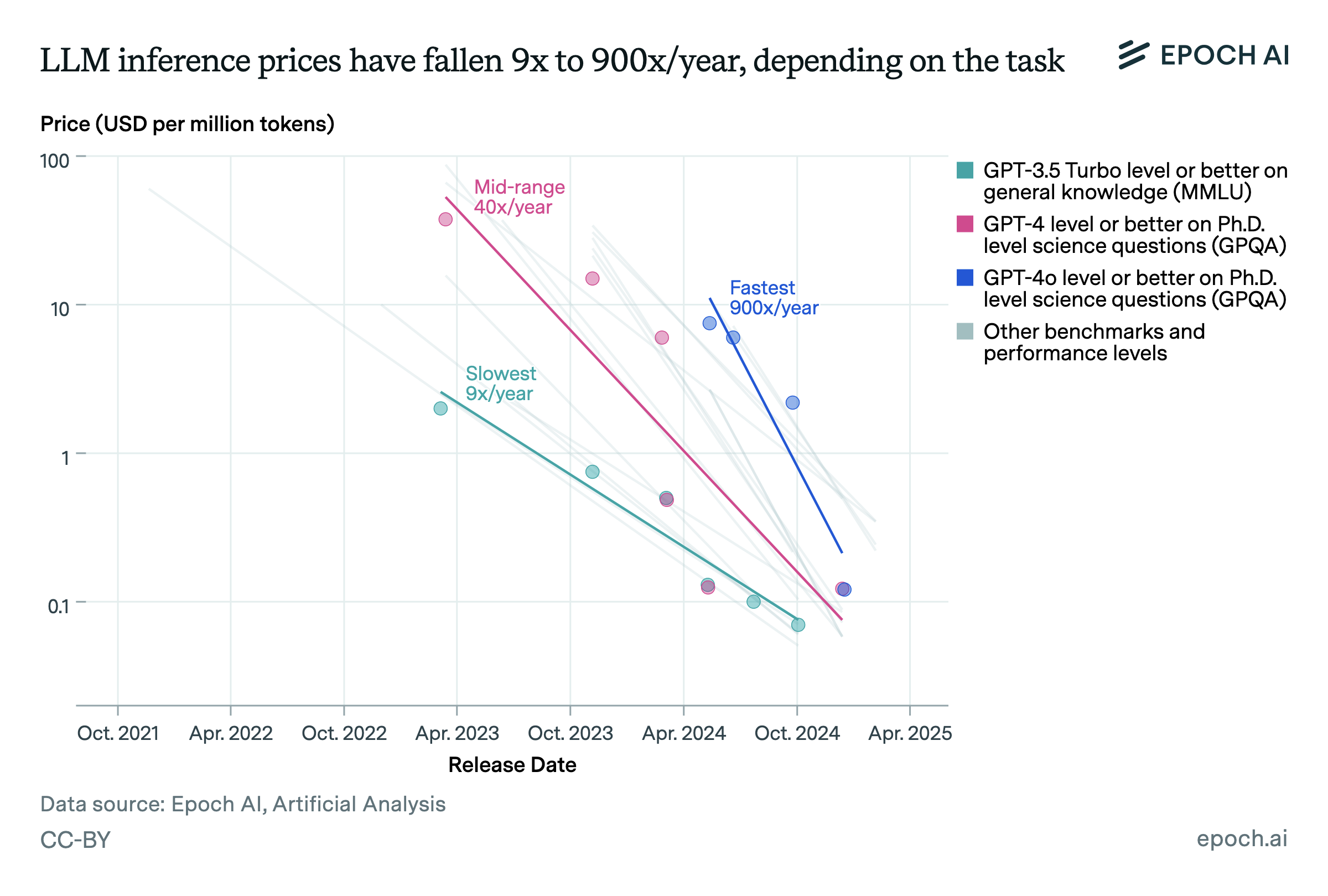

LLM inference prices have fallen rapidly but unequally across tasks

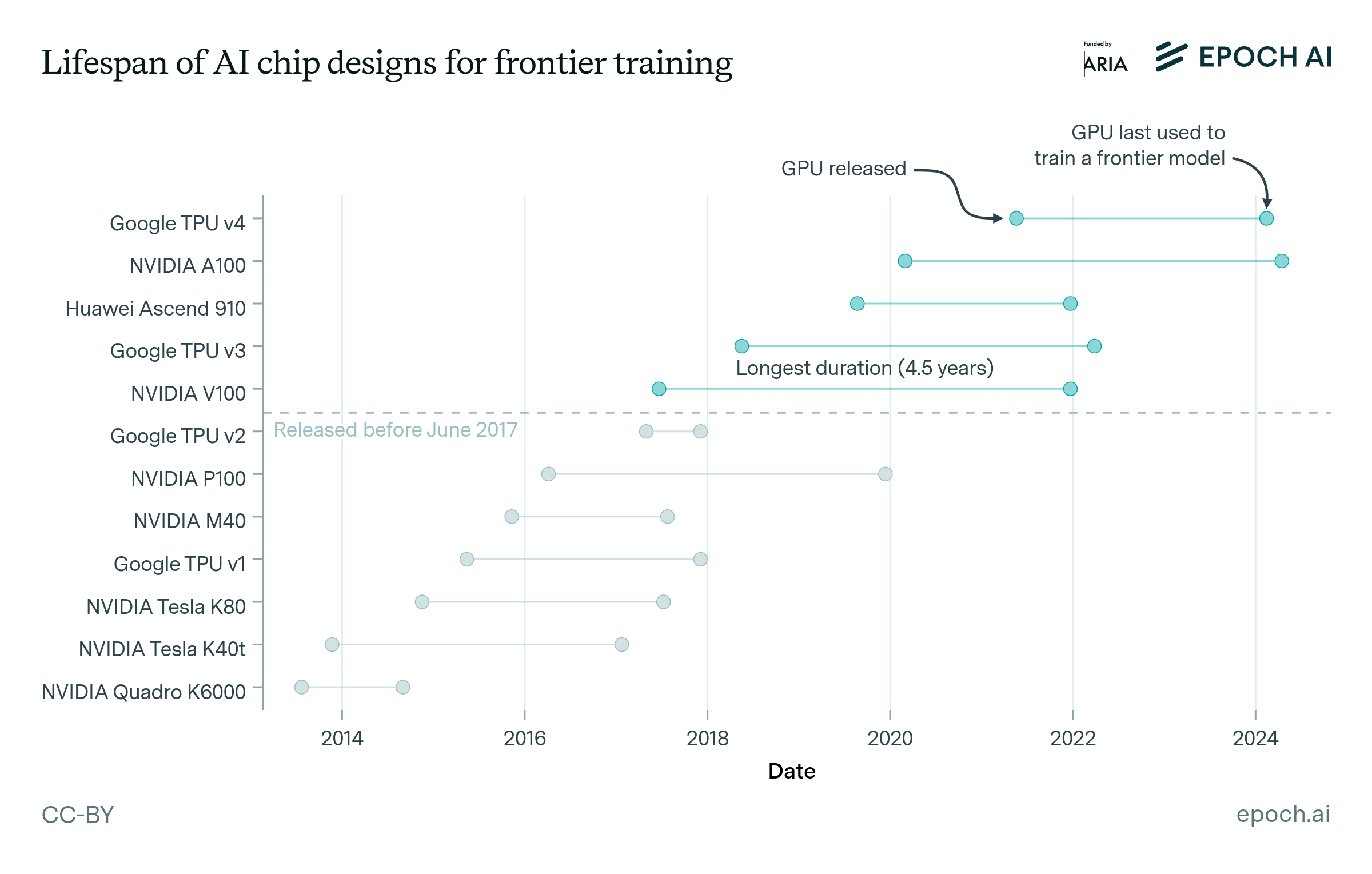

Leading AI chip designs are used for around four years in frontier training

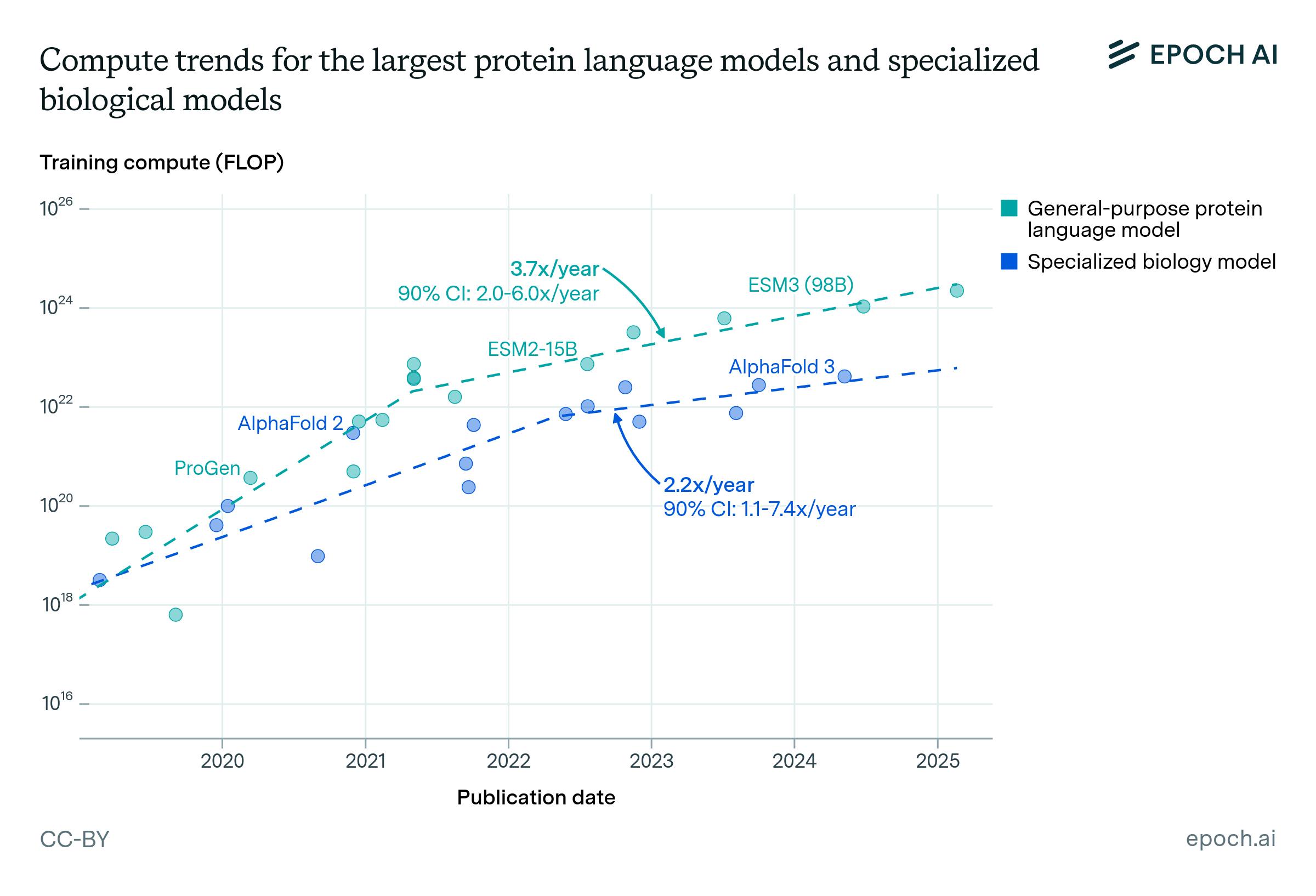

Biology AI models are scaling 2-4x per year after rapid growth from 2019-2021

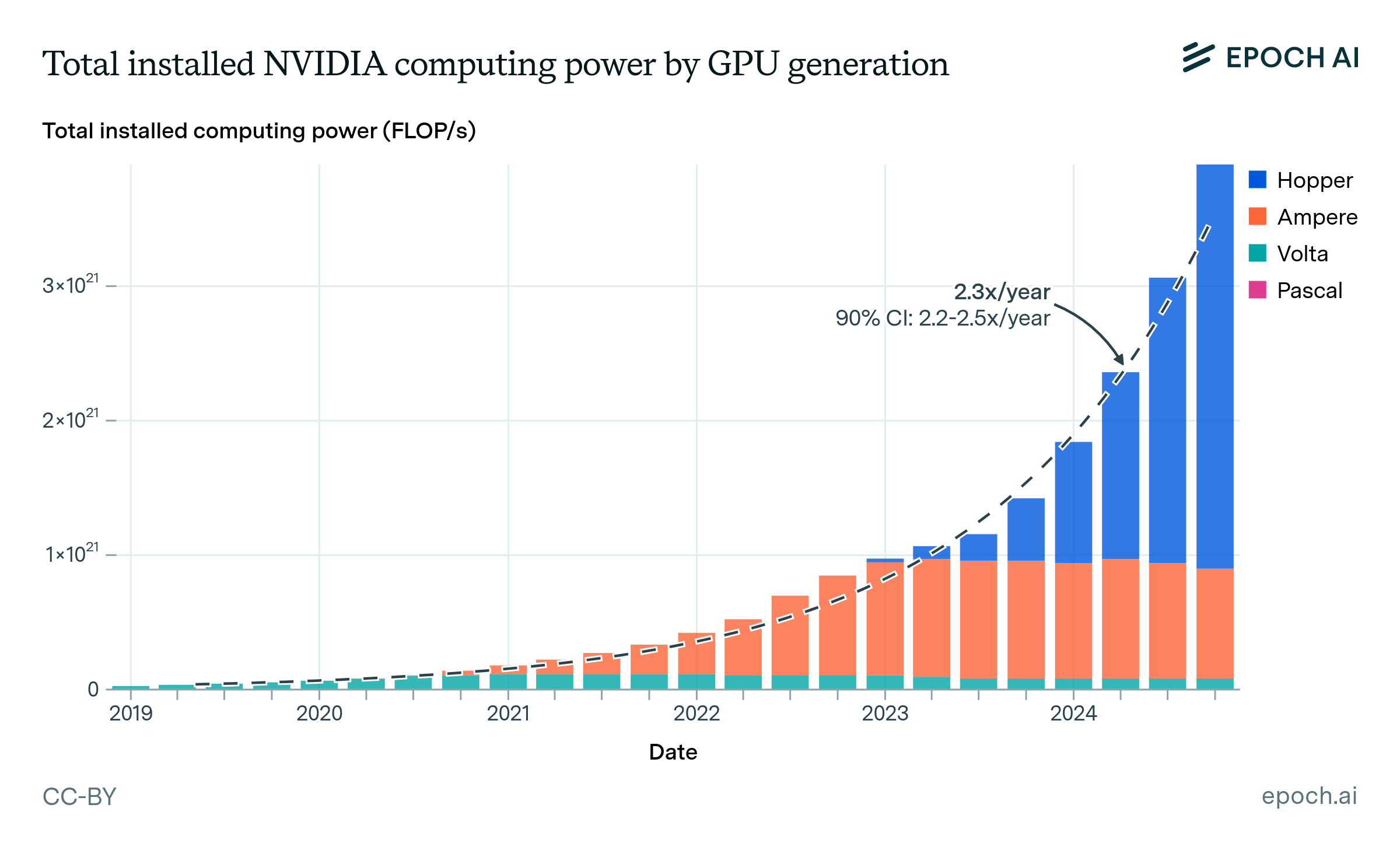

The stock of computing power from NVIDIA chips is doubling every 10 months

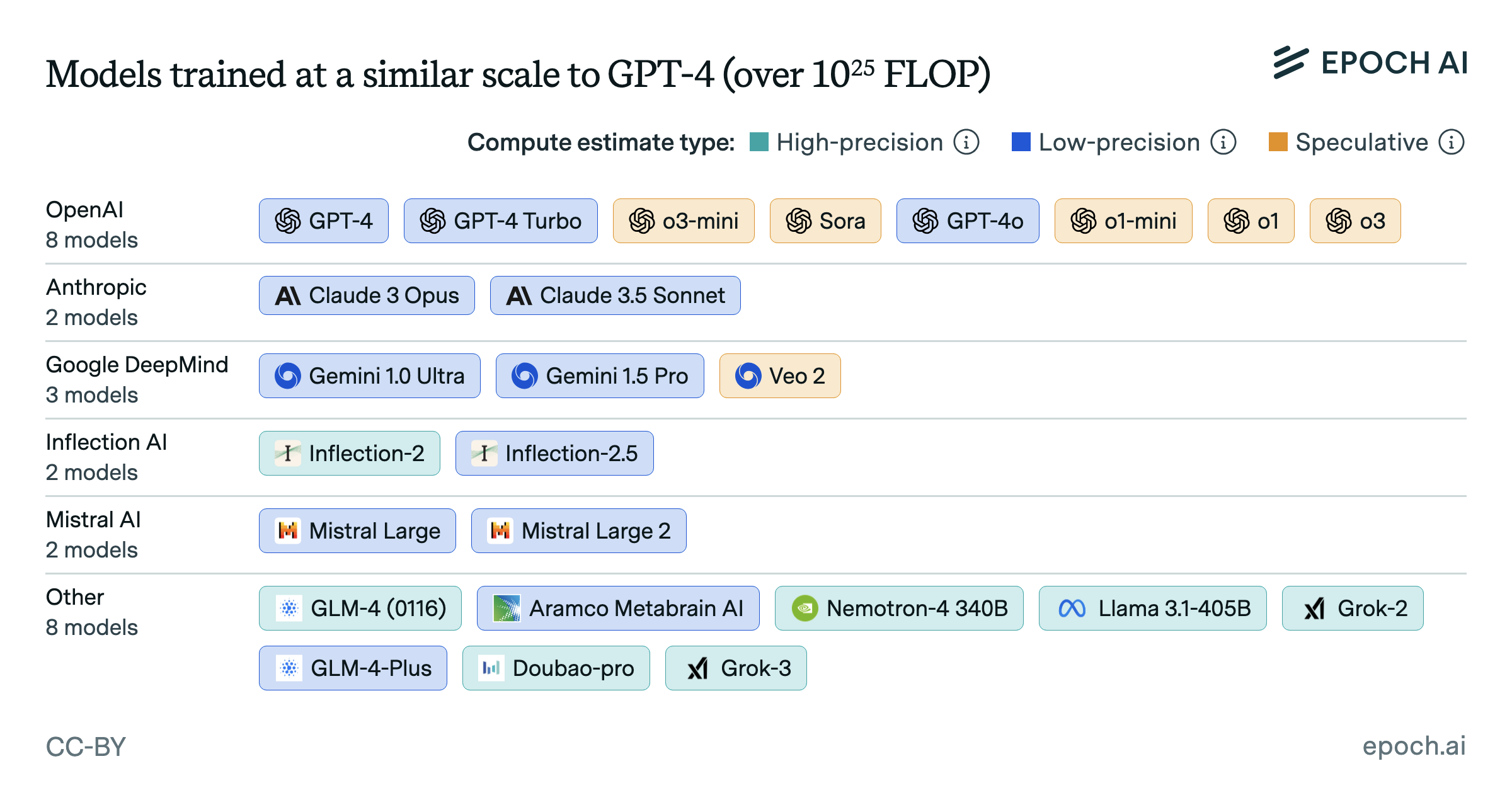

Over 30 AI models have been trained at the scale of GPT-4

Chinese language models have scaled up more slowly than their global counterparts

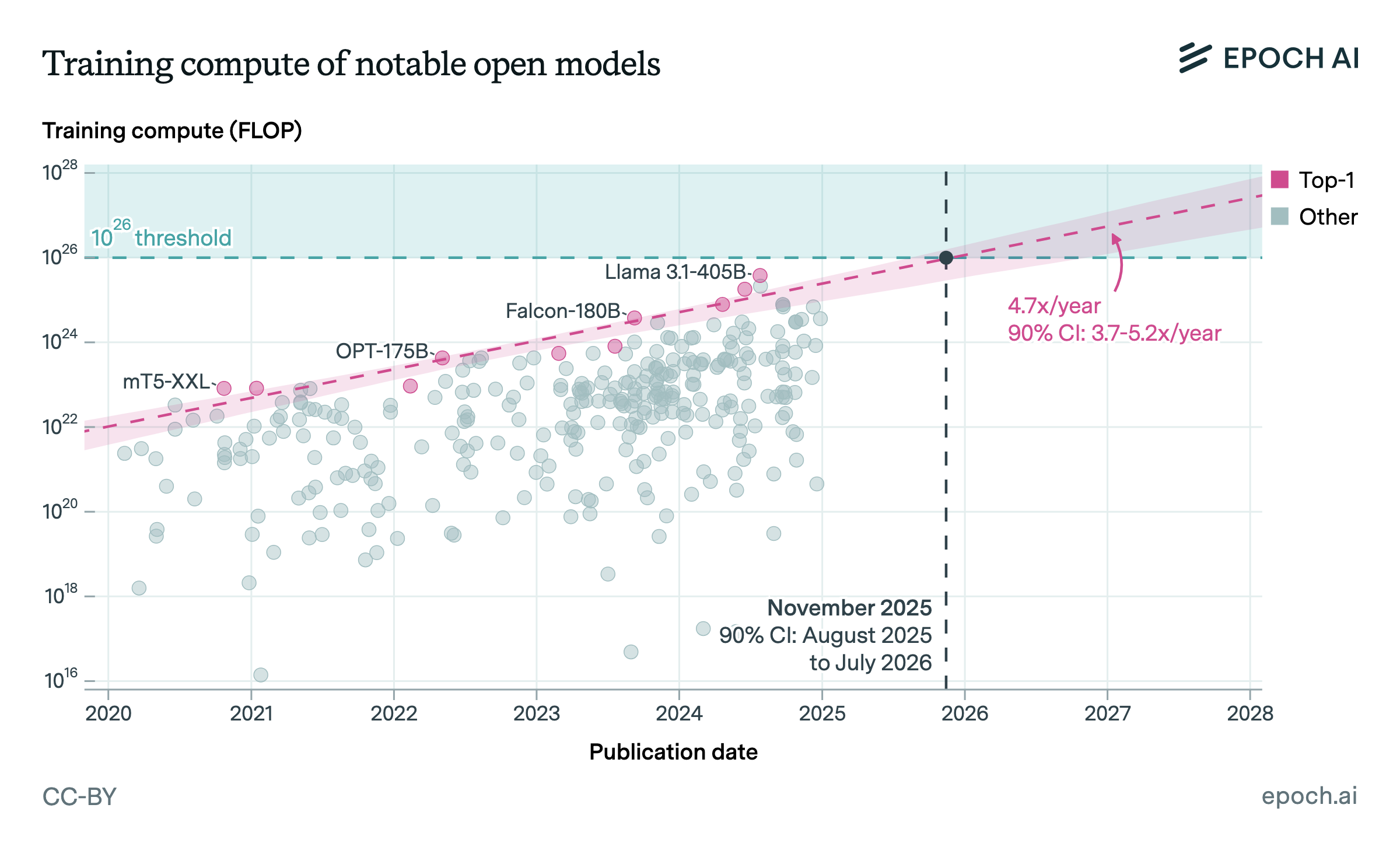

Frontier open models may surpass 1e26 FLOP of training compute before 2026

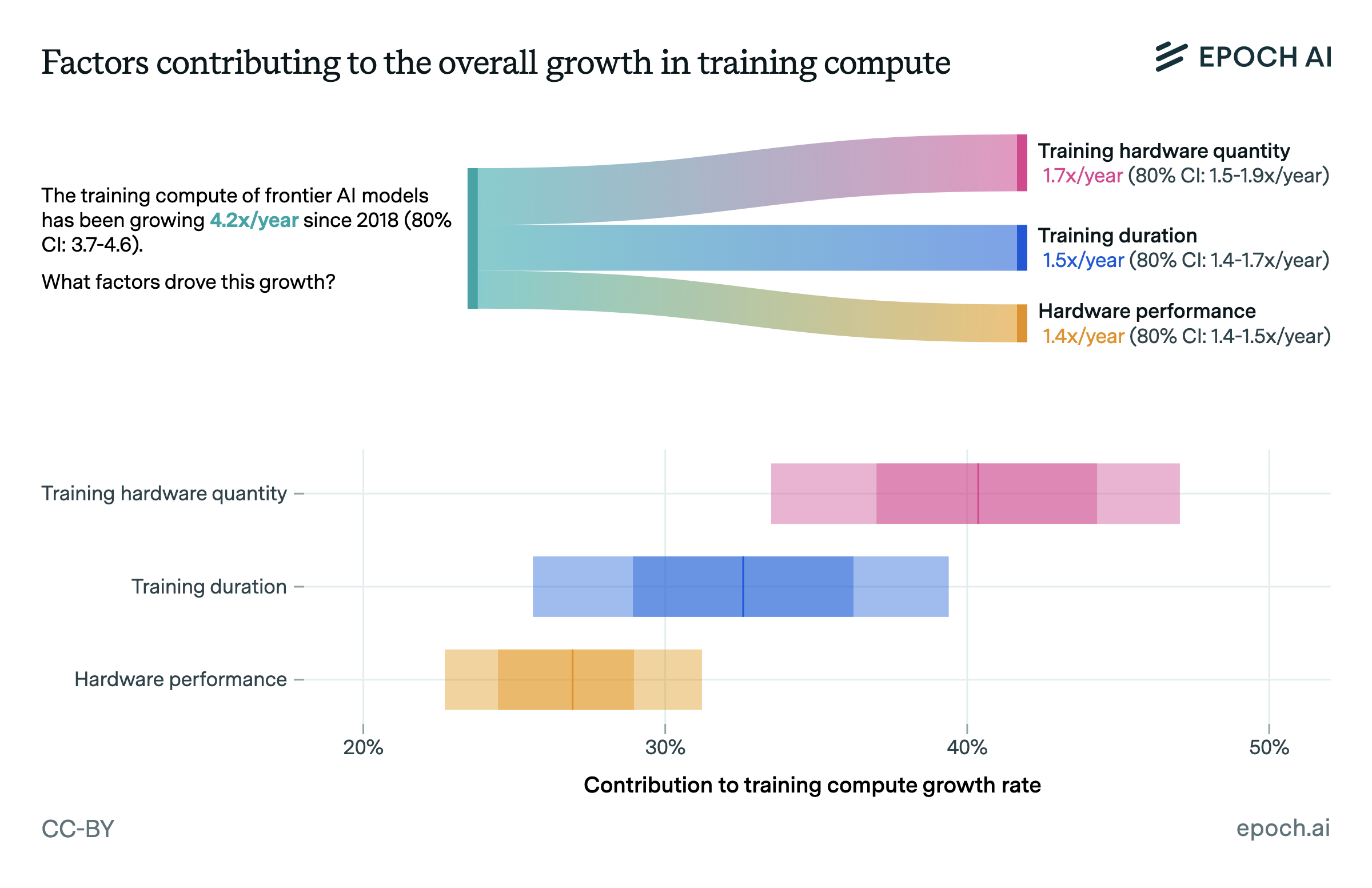

Training compute growth is driven by larger clusters, longer training, and better hardware

US models currently outperform non-US models

Models with downloadable weights currently lag behind the top-performing models

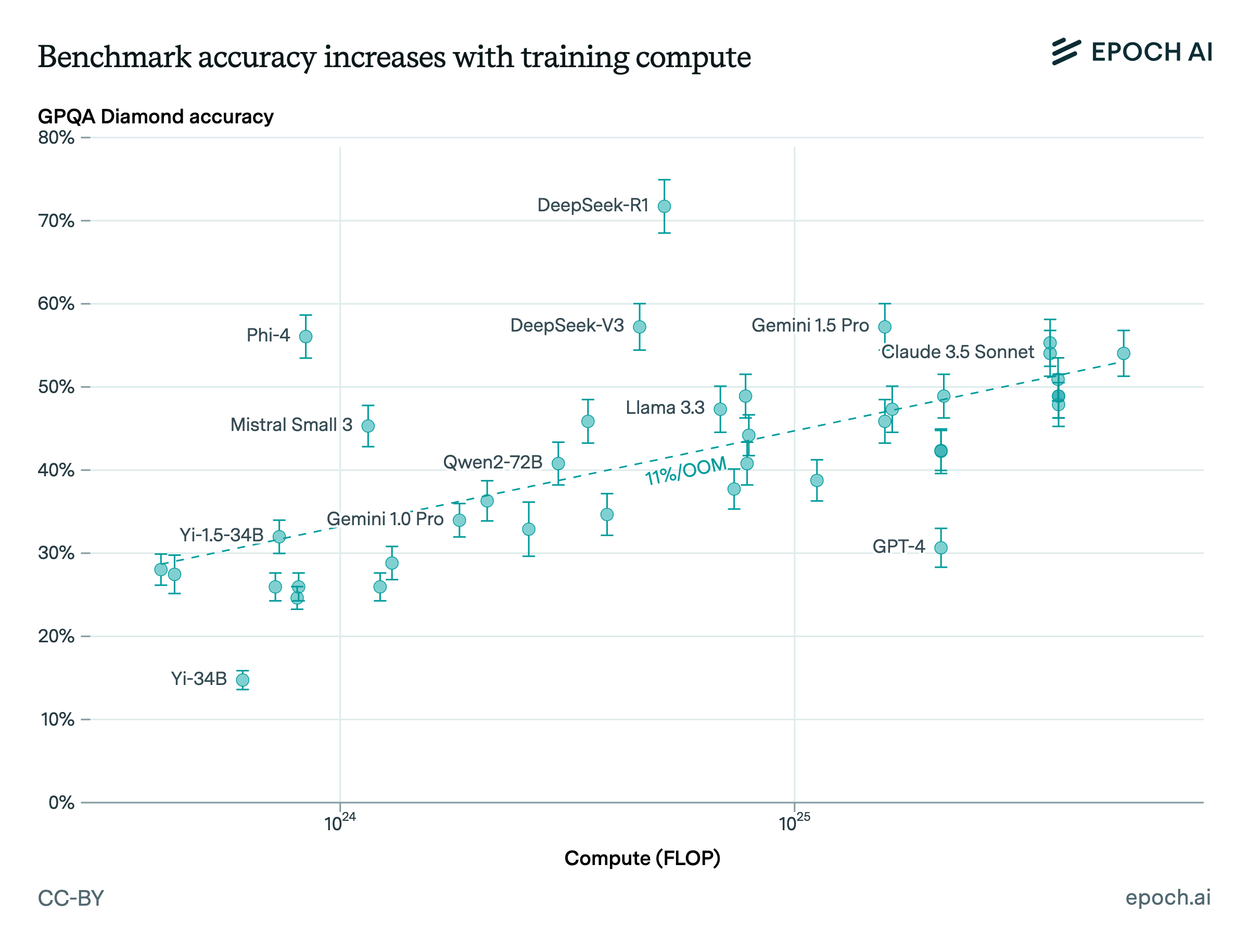

Accuracy increases with estimated training compute

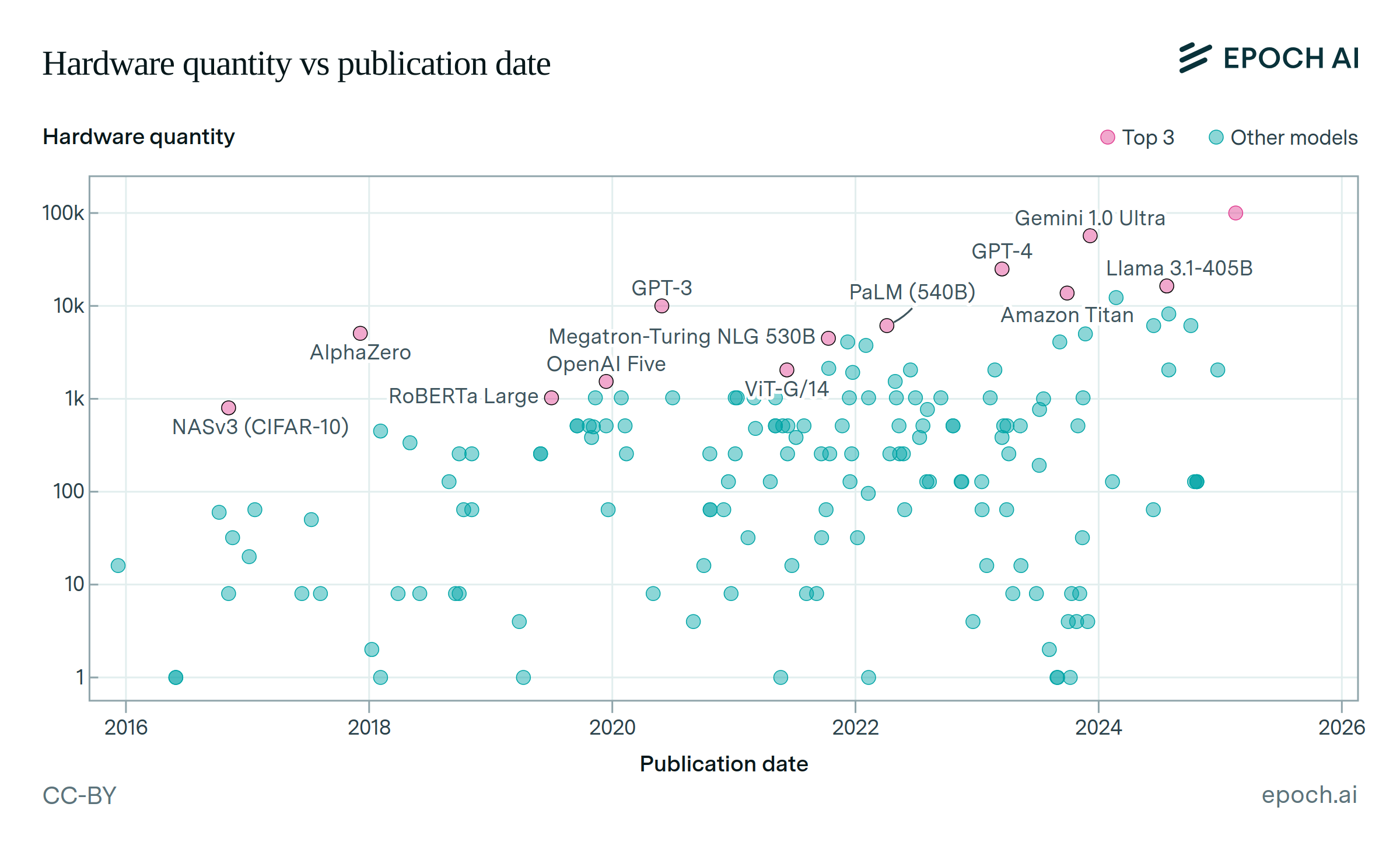

AI training cluster sizes increased by more than 20x since 2016

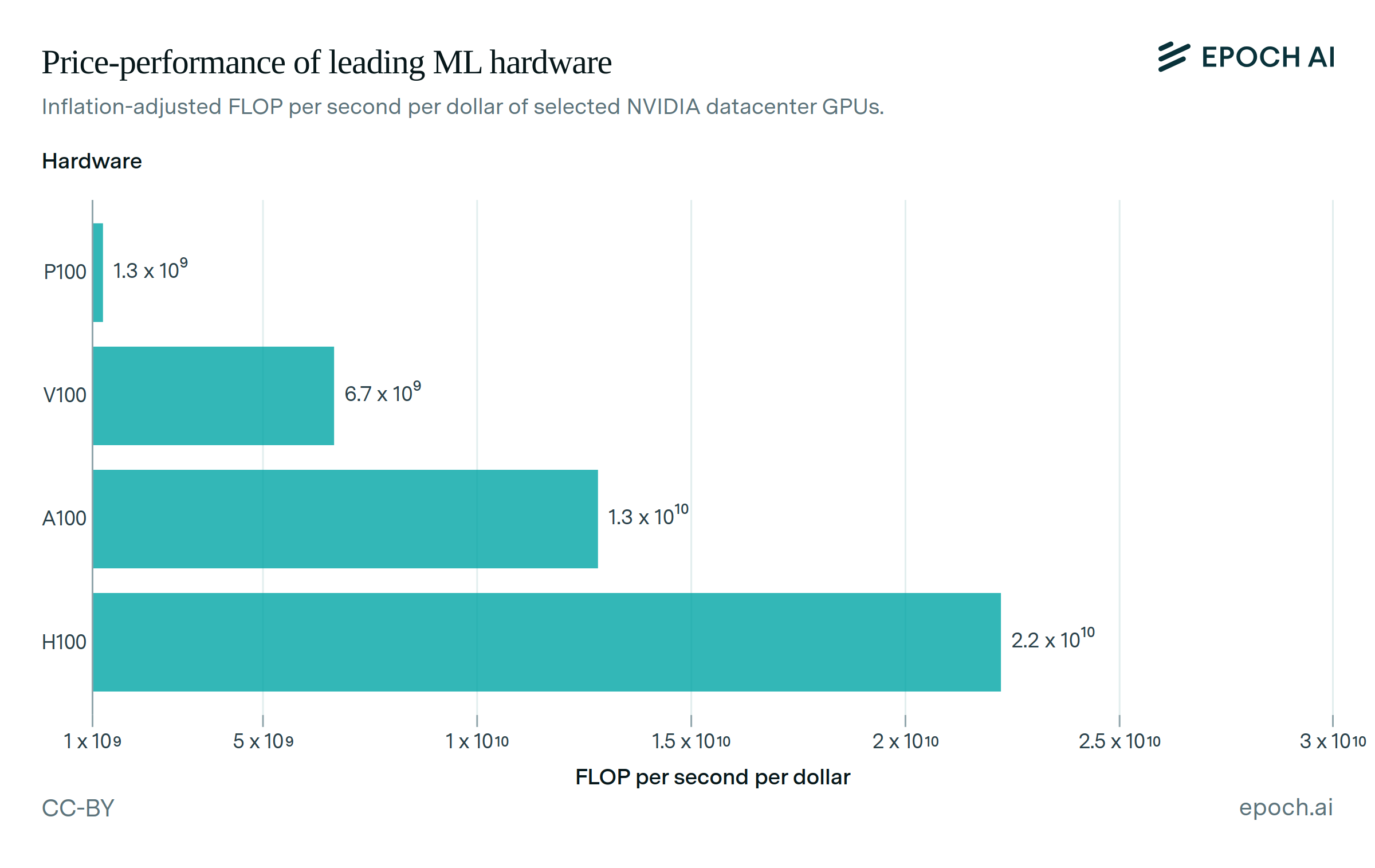

Performance per dollar improves around 30% each year

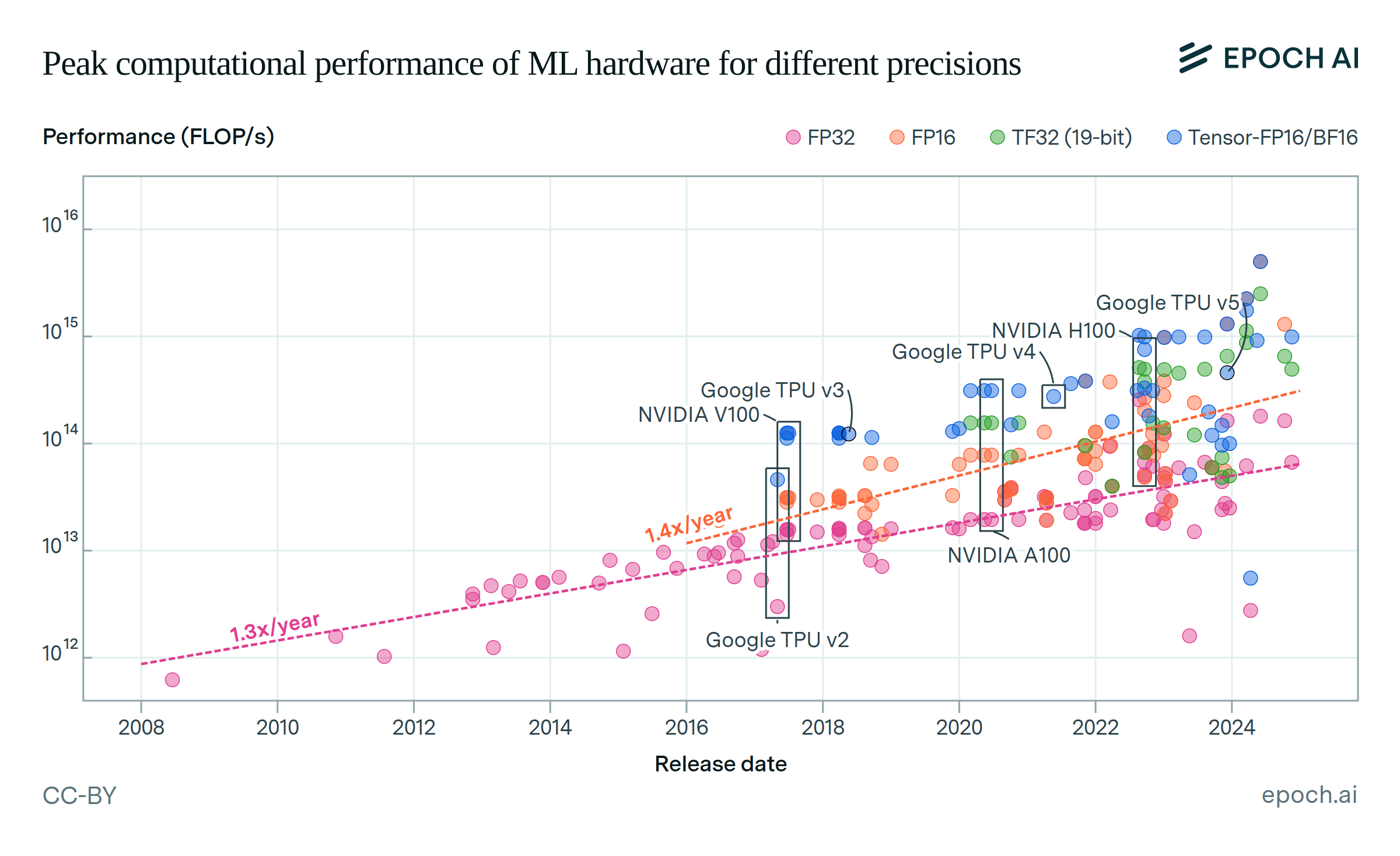

The computational performance of machine learning hardware has doubled every 2.3 years

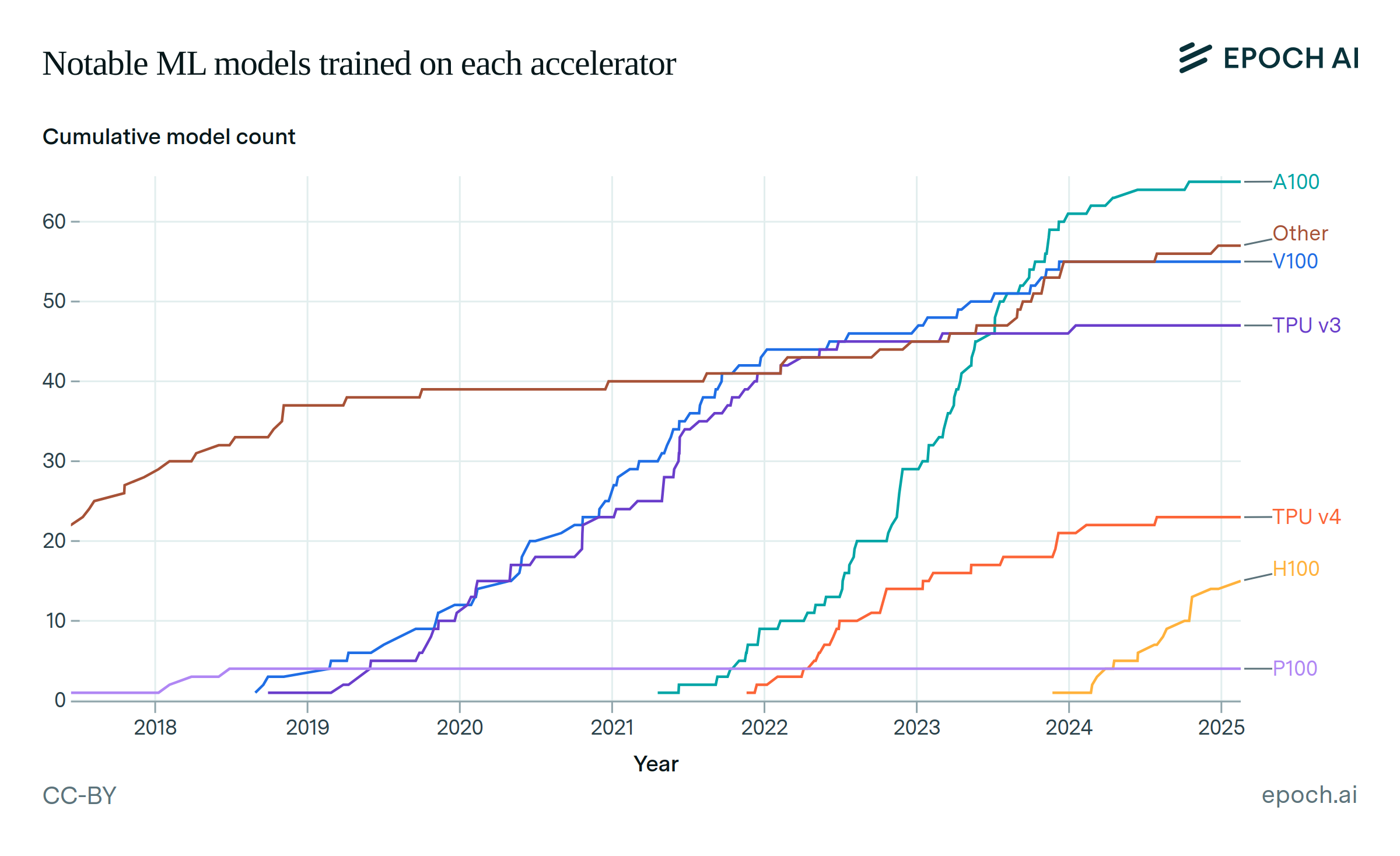

The NVIDIA A100 has been the most popular hardware for training notable machine learning models

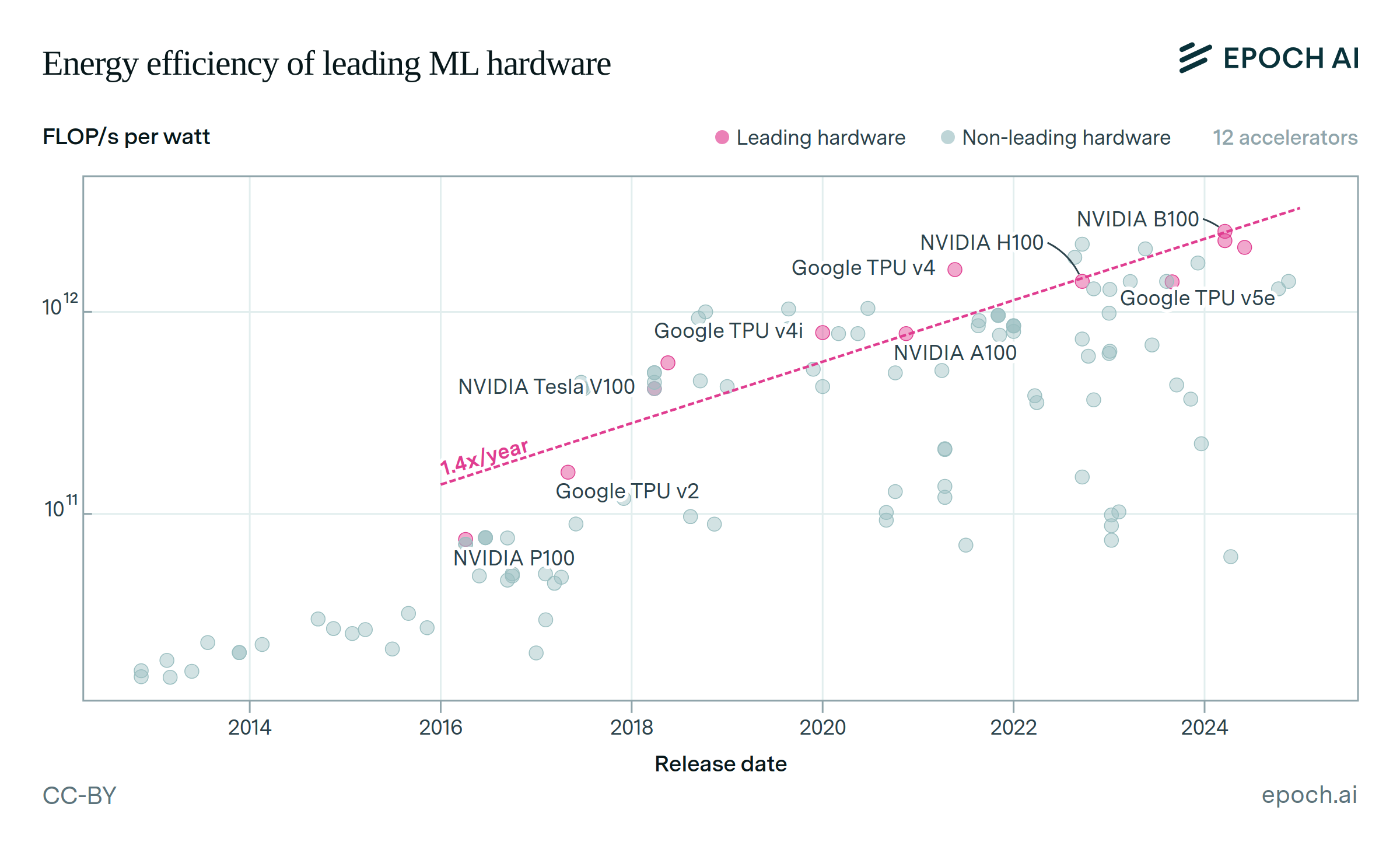

Leading ML hardware becomes 40% more energy-efficient each year

Performance improves 13x when switching from FP32 to tensor-INT8

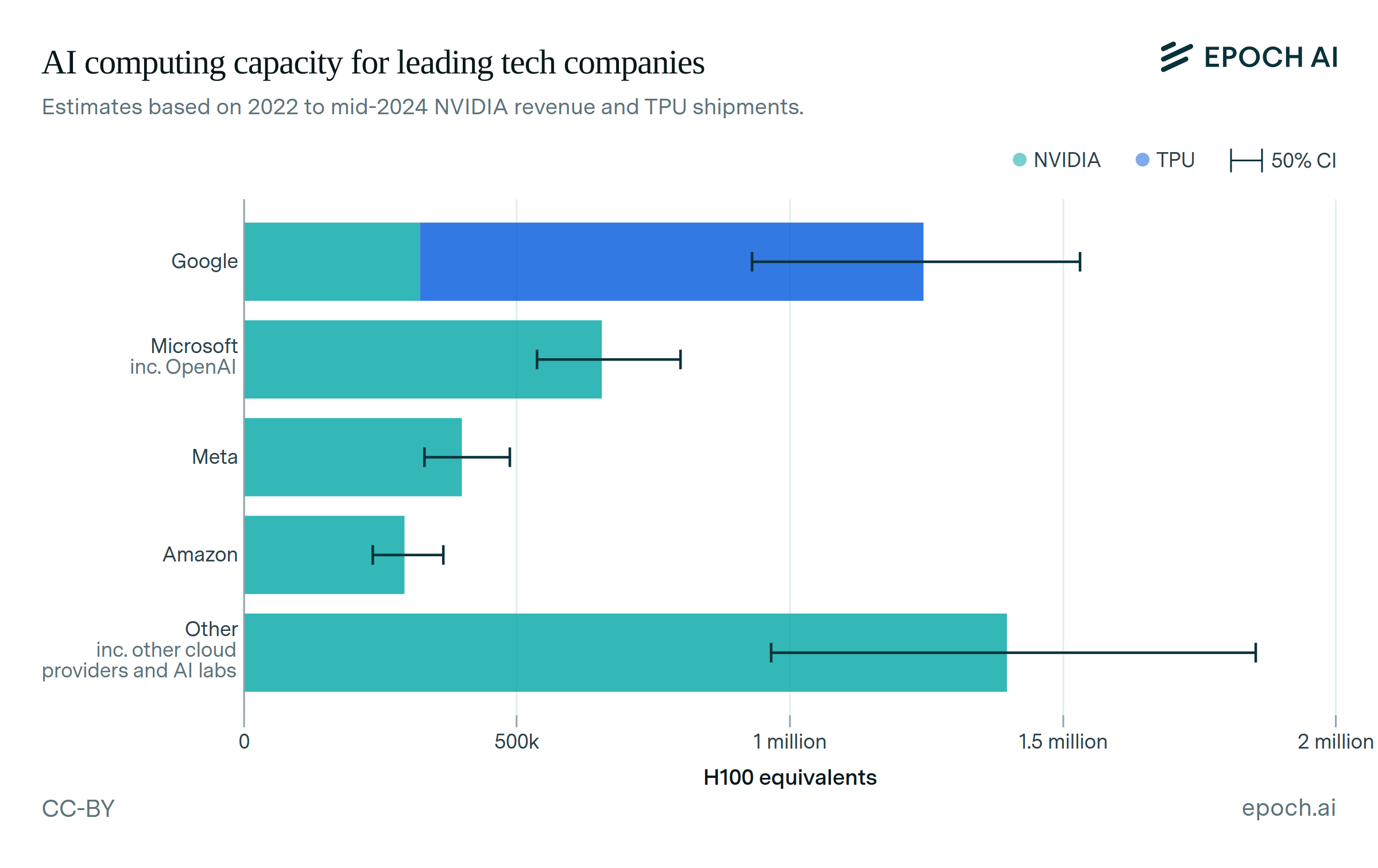

Leading AI companies have hundreds of thousands of cutting-edge AI chips

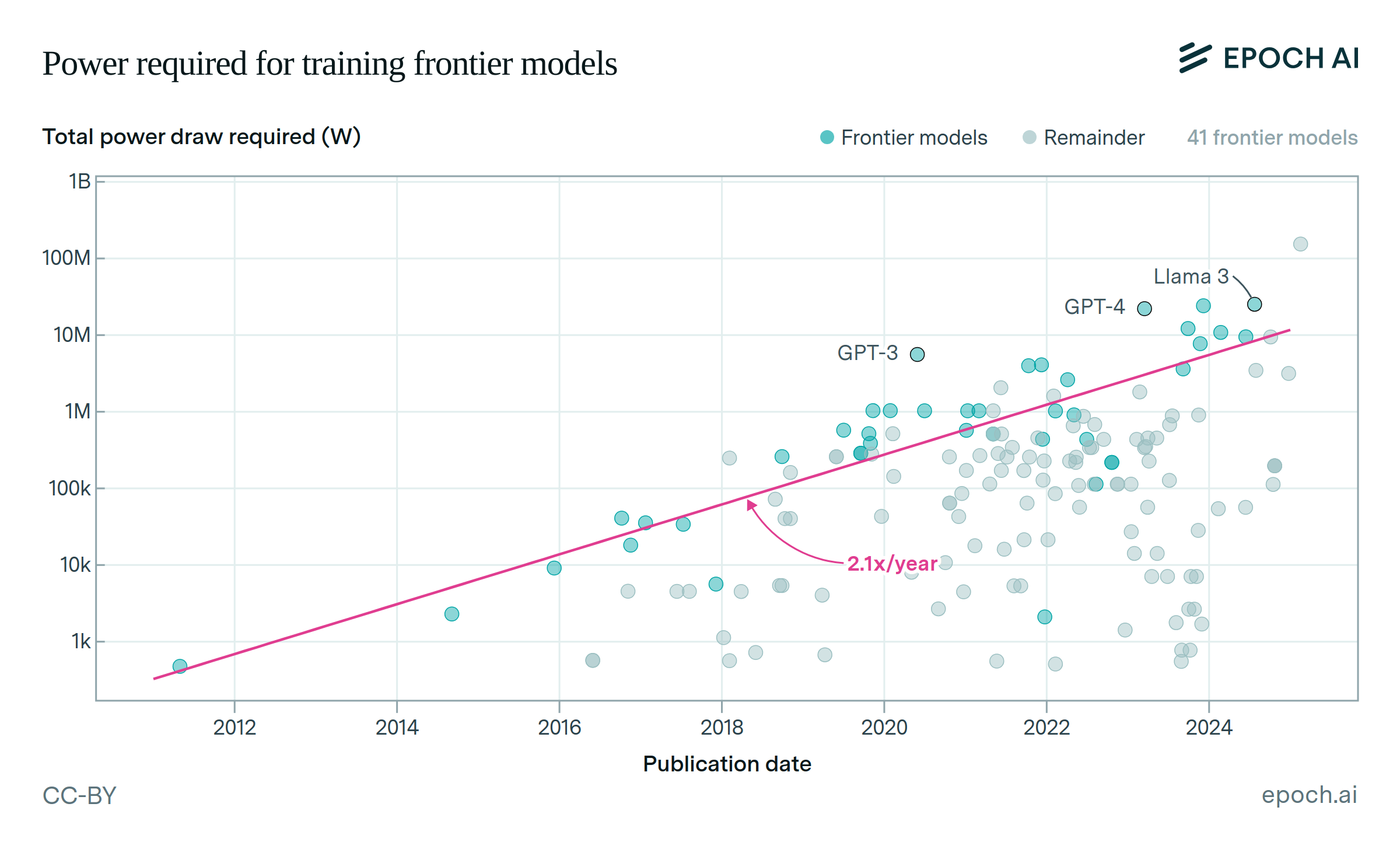

The power required to train frontier AI models is doubling annually

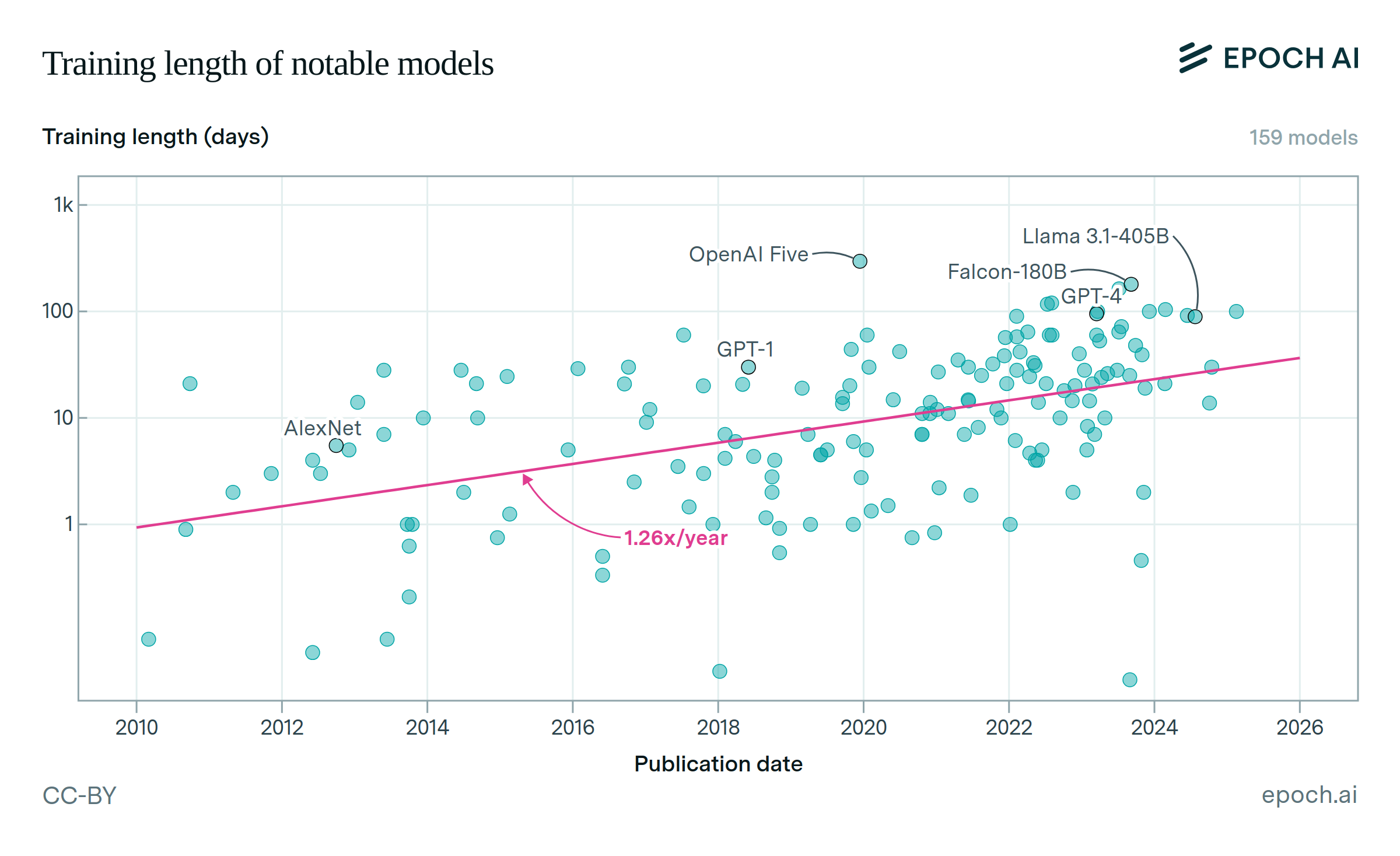

The length of time spent training notable models is growing

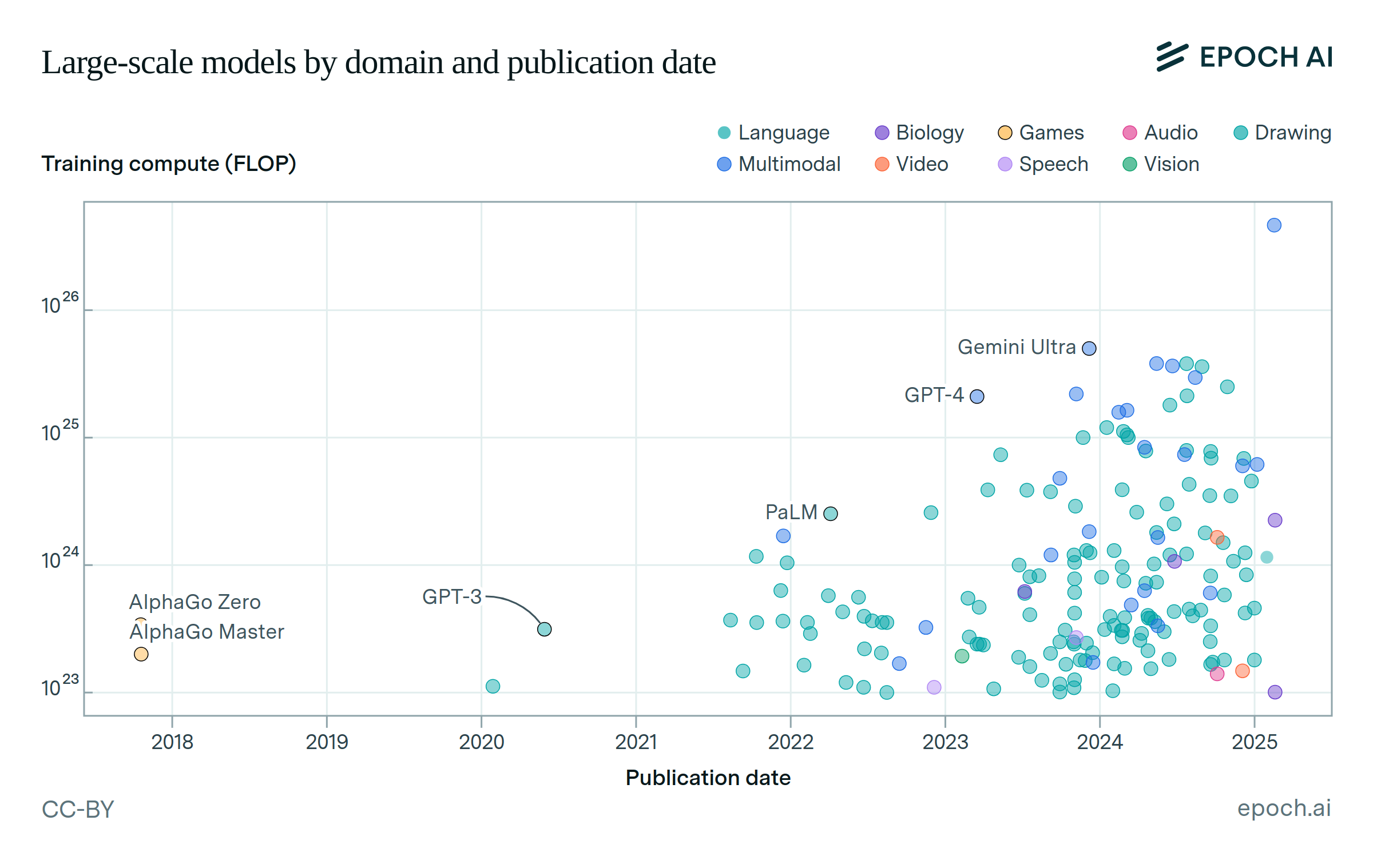

Language models compose the large majority of large-scale AI models

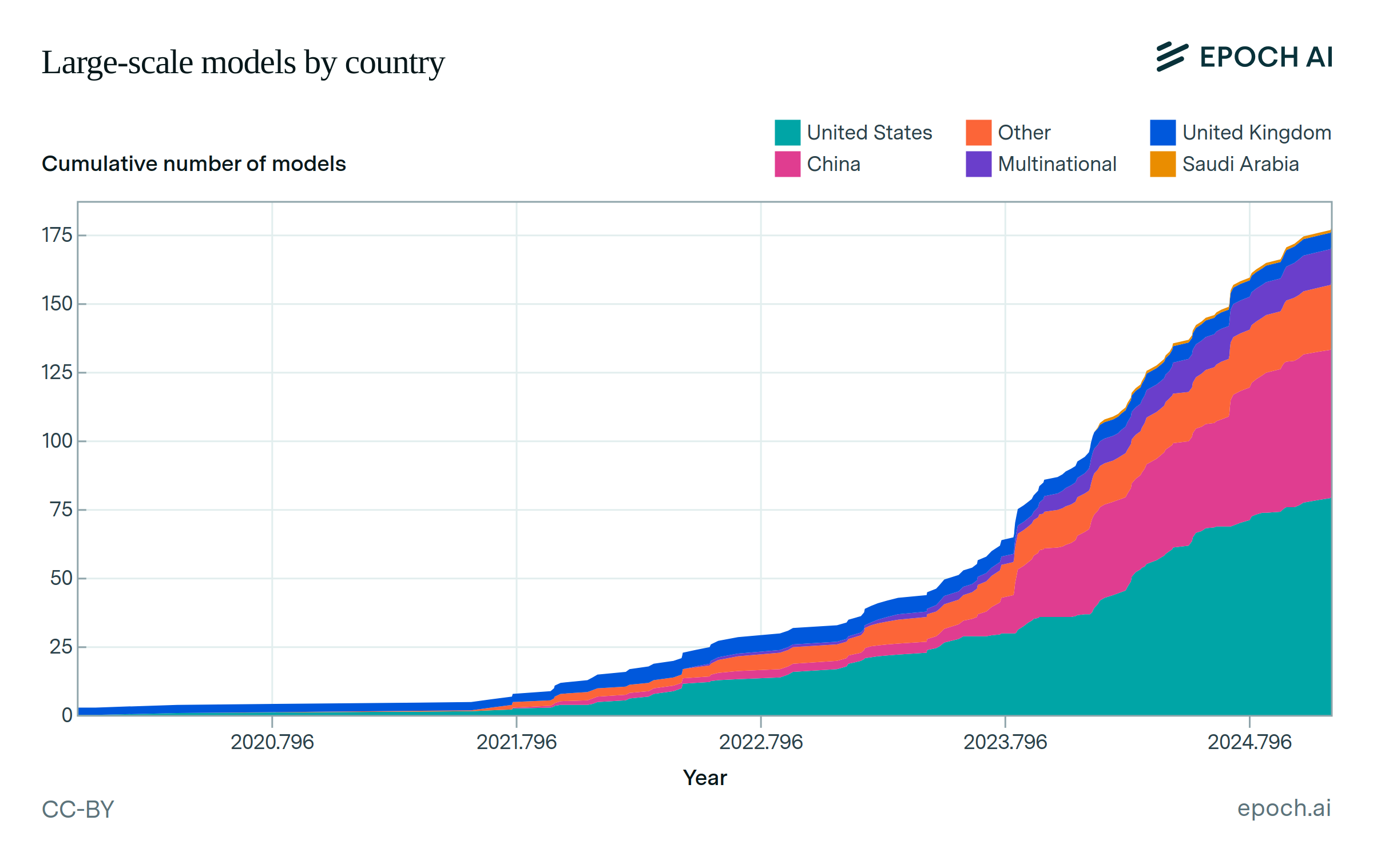

Most large-scale models are developed by US companies

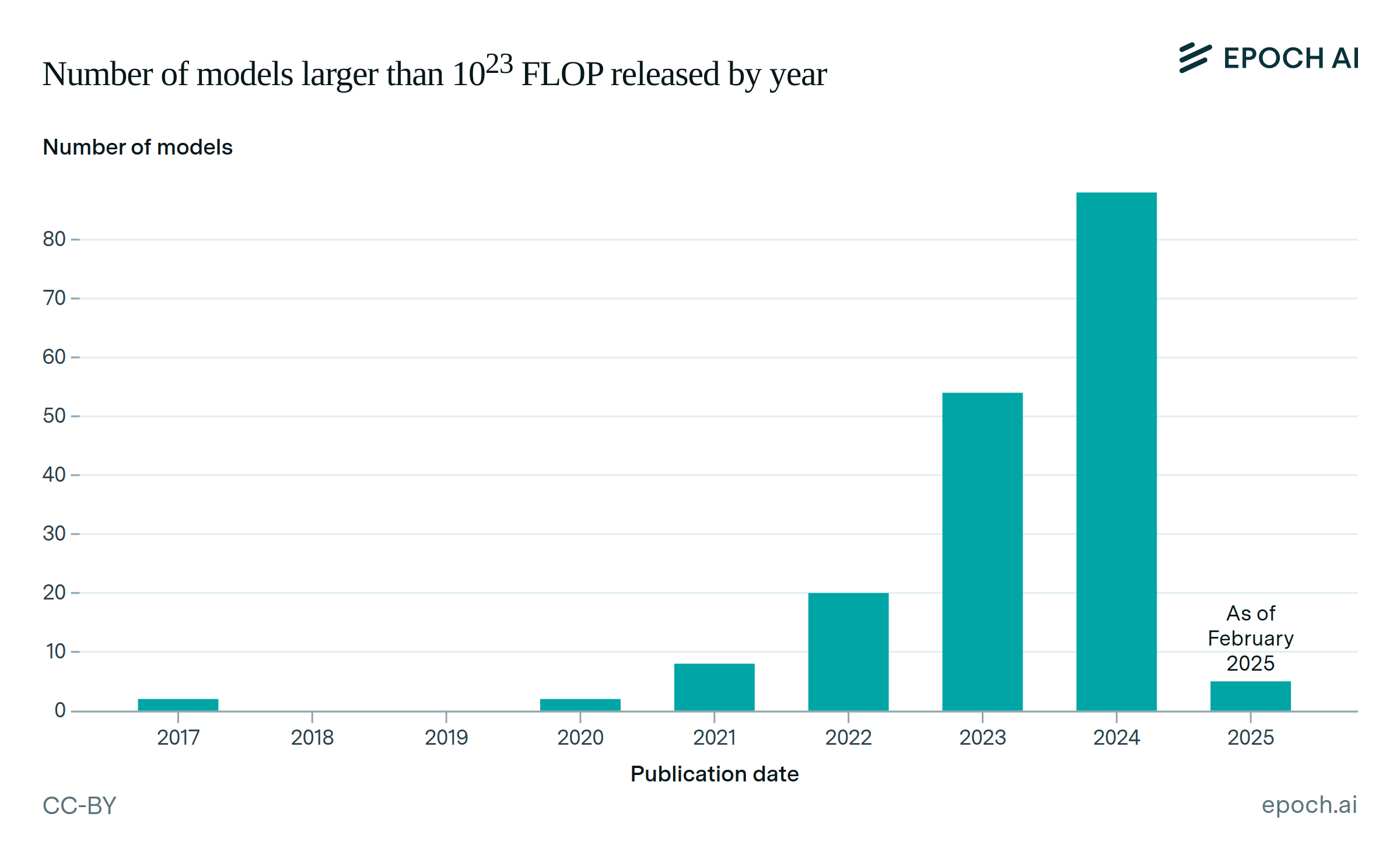

The pace of large-scale model releases is accelerating

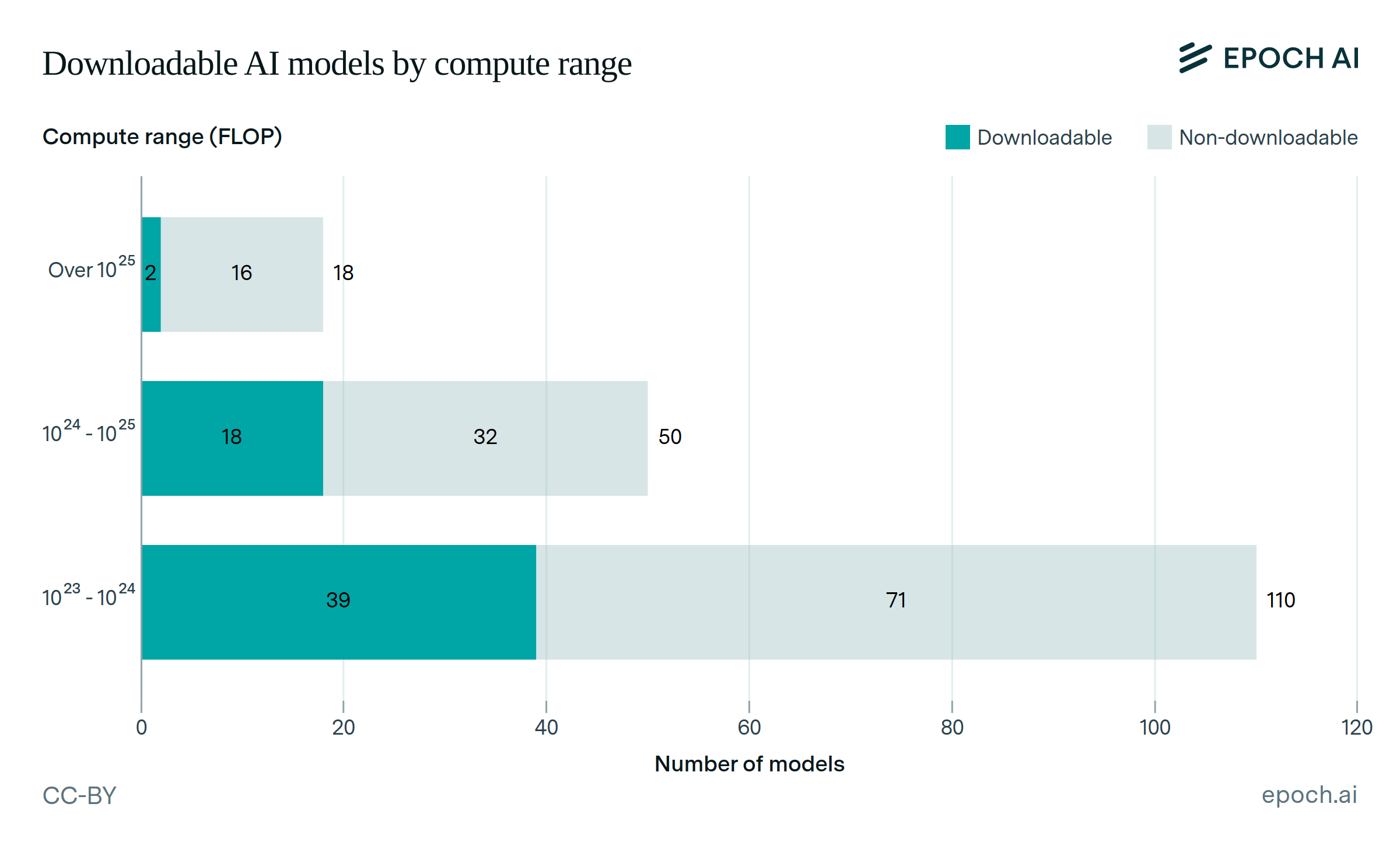

Almost half of large-scale models have published, downloadable weights

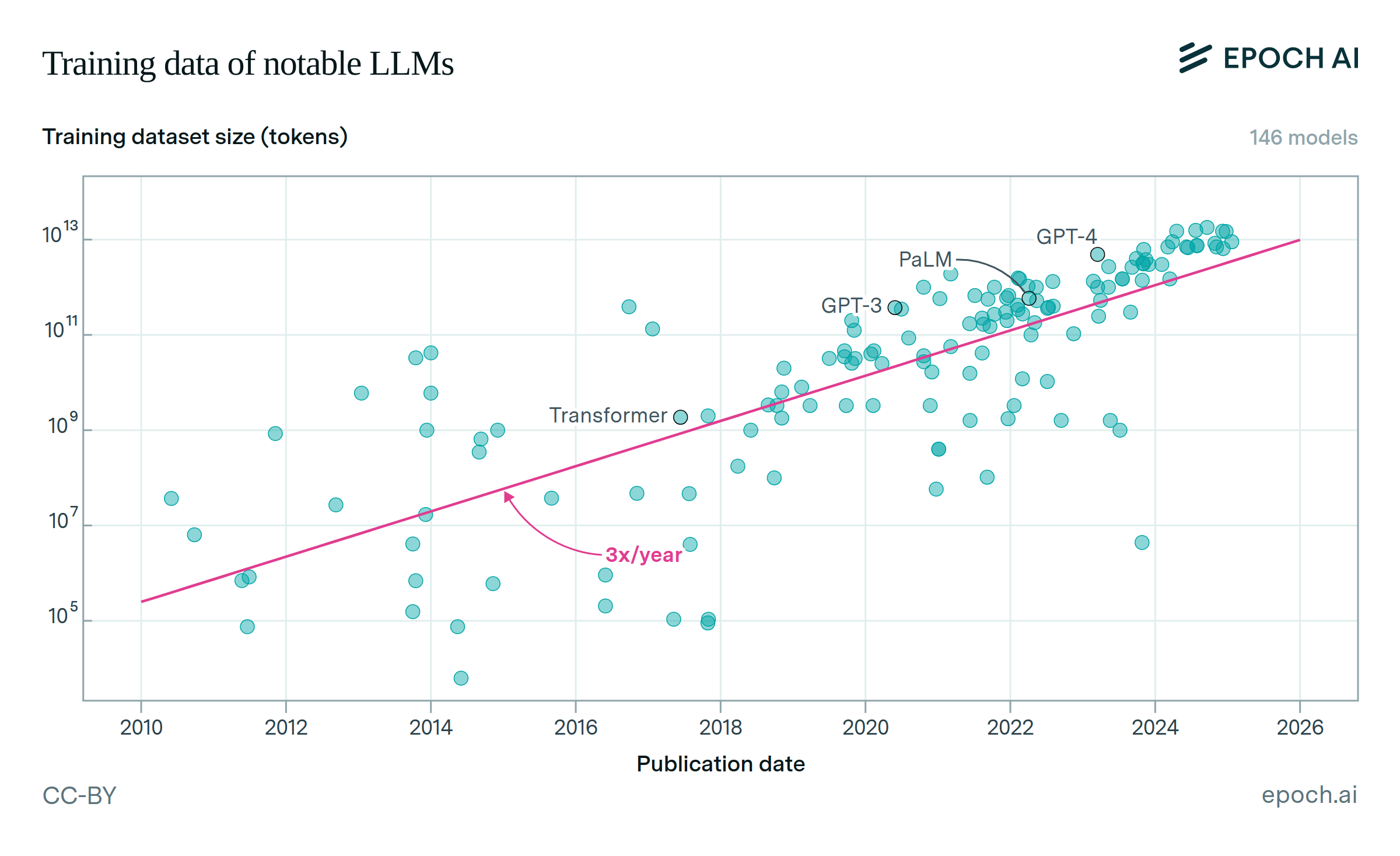

The size of datasets used to train language models doubles approximately every six months

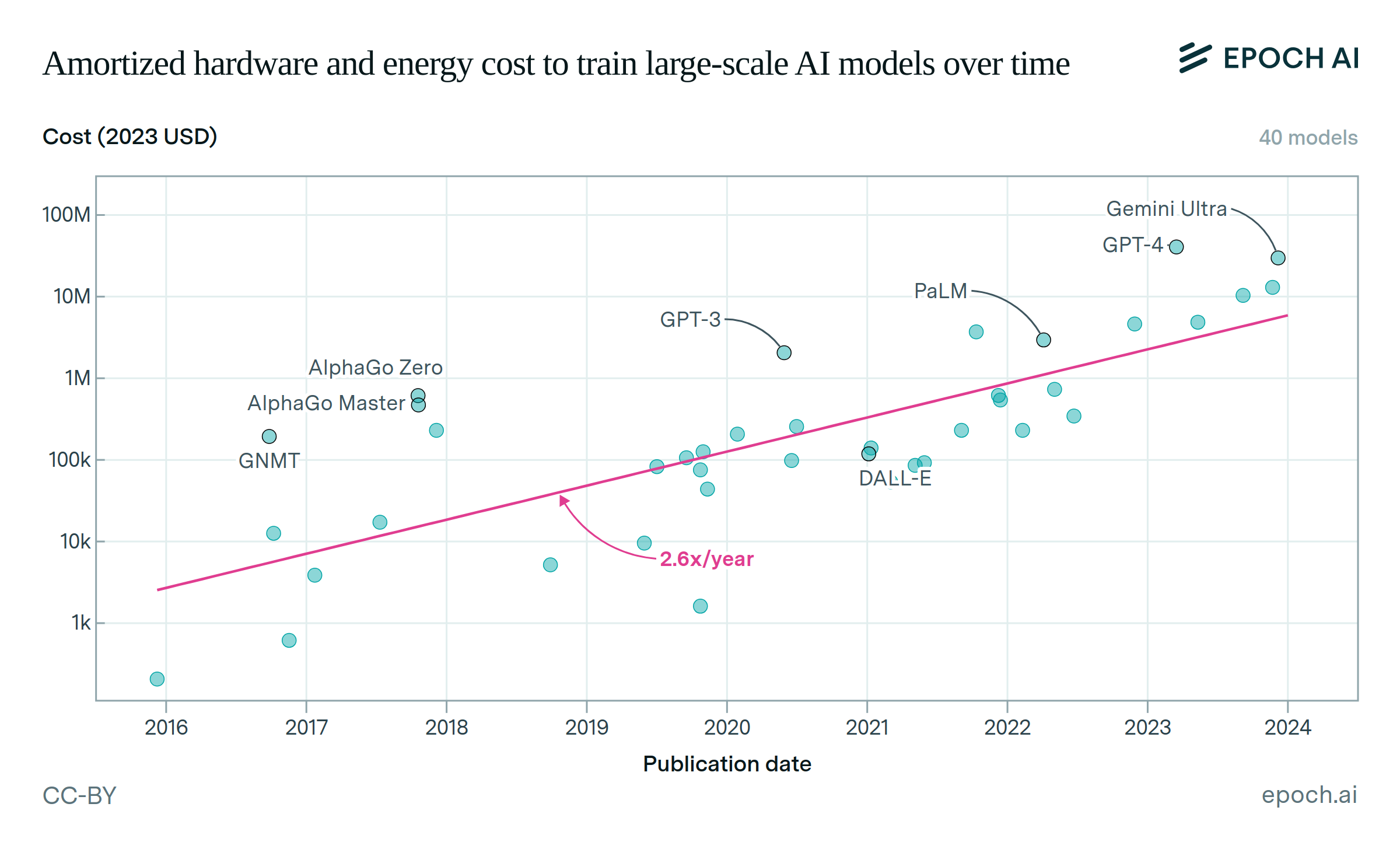

Training compute costs are doubling every eight months for the largest AI models

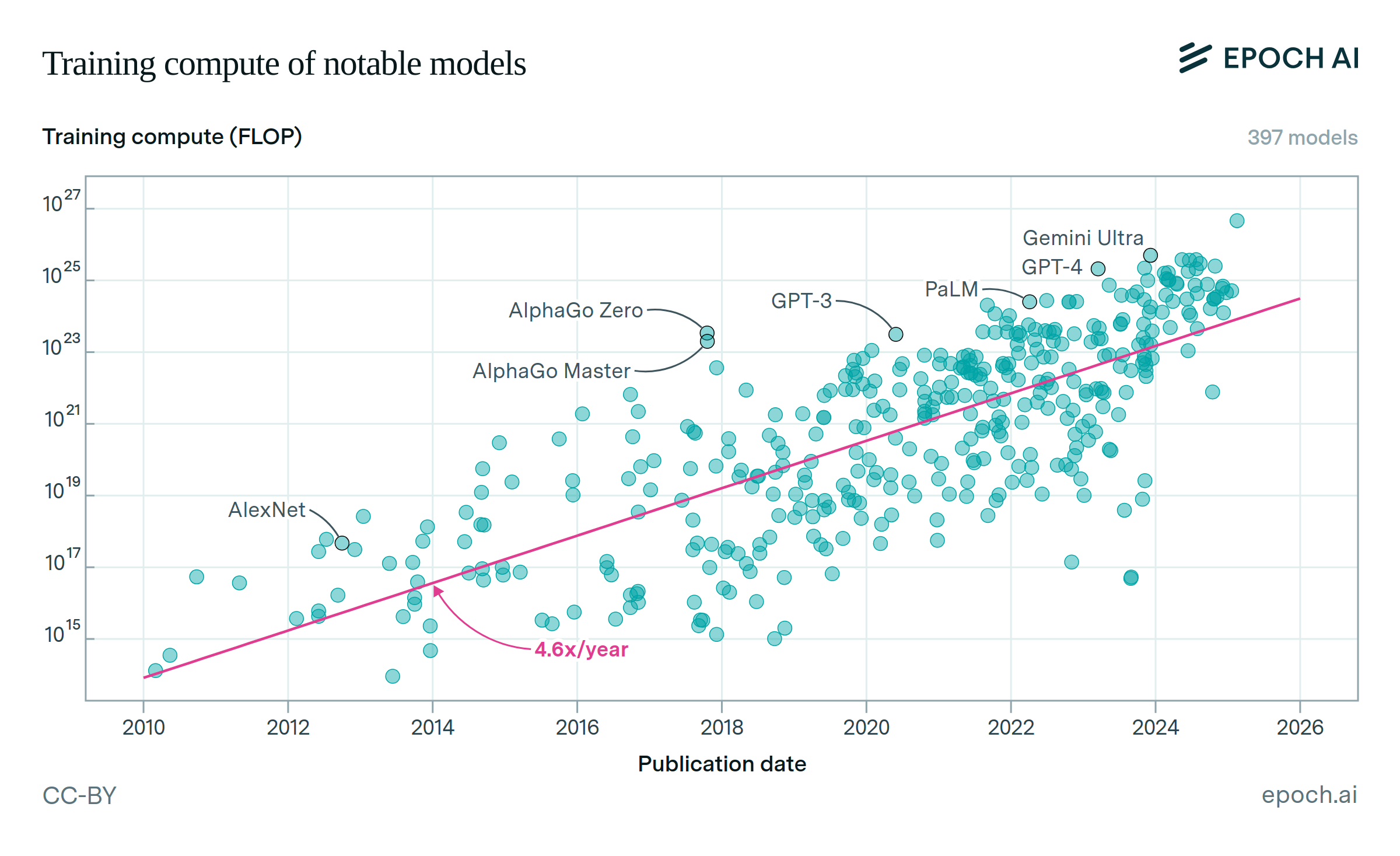

The training compute of notable AI models has been doubling roughly every six months

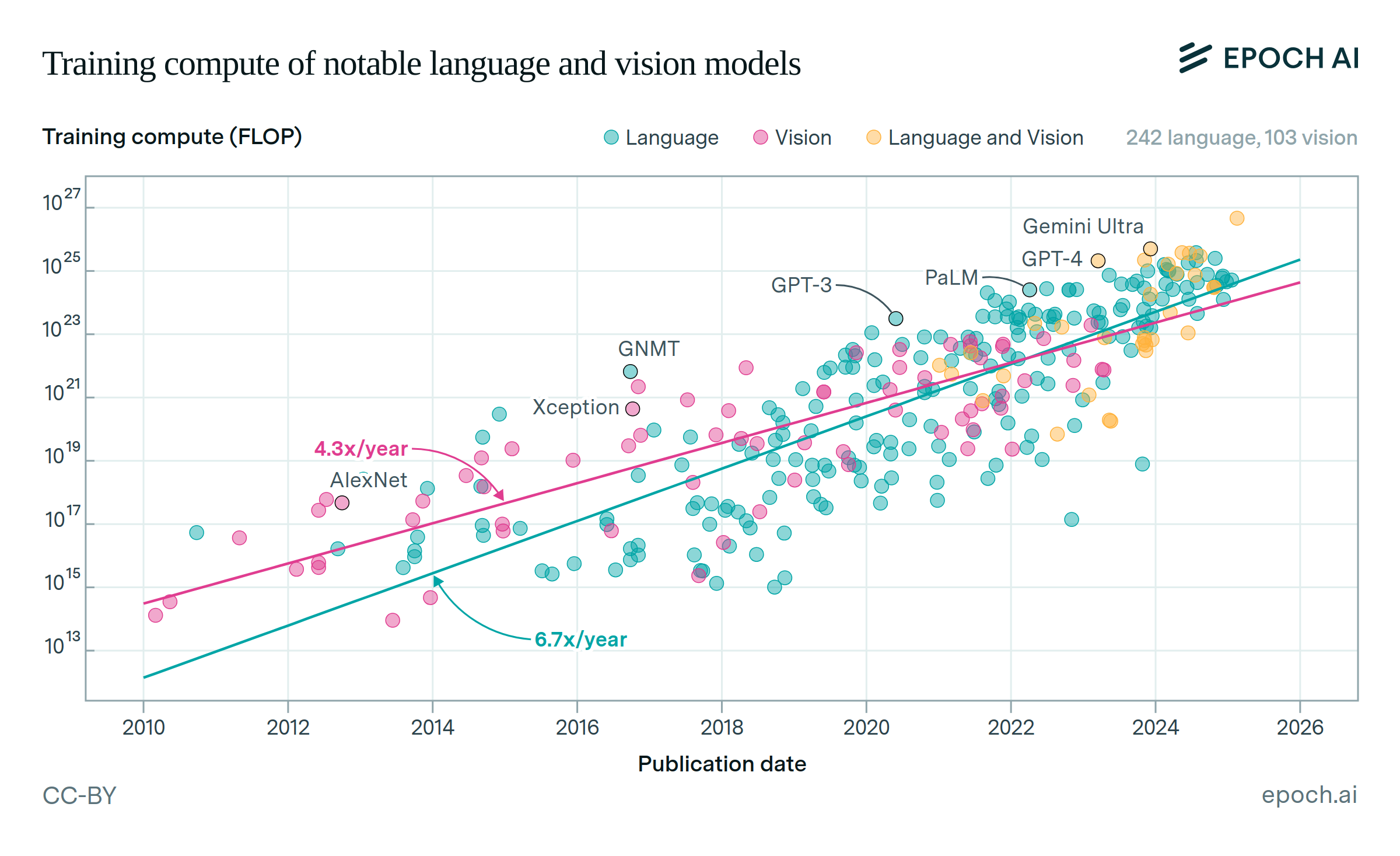

Training compute has scaled up faster for language than vision