AI capabilities have steadily improved over the past year

Across benchmarks measuring skills in research-level math, agentic coding, visual understanding, common sense reasoning, and more, AI capabilities have grown rapidly and consistently over the last 12 months.

While these benchmarks do not capture all of the nuanced abilities needed for economically valuable tasks, the clear upward trends reflect genuine improvements in AI’s utility. This growth in capabilities shows no sign of slowing down.

Published September 30, 2025

Overview

We show trends in a selection of benchmarks from Epoch’s Benchmarking Hub. These benchmarks are chosen to cover a diverse range of skills:

- FrontierMath tests models’ ability to solve research-level mathematical problems.

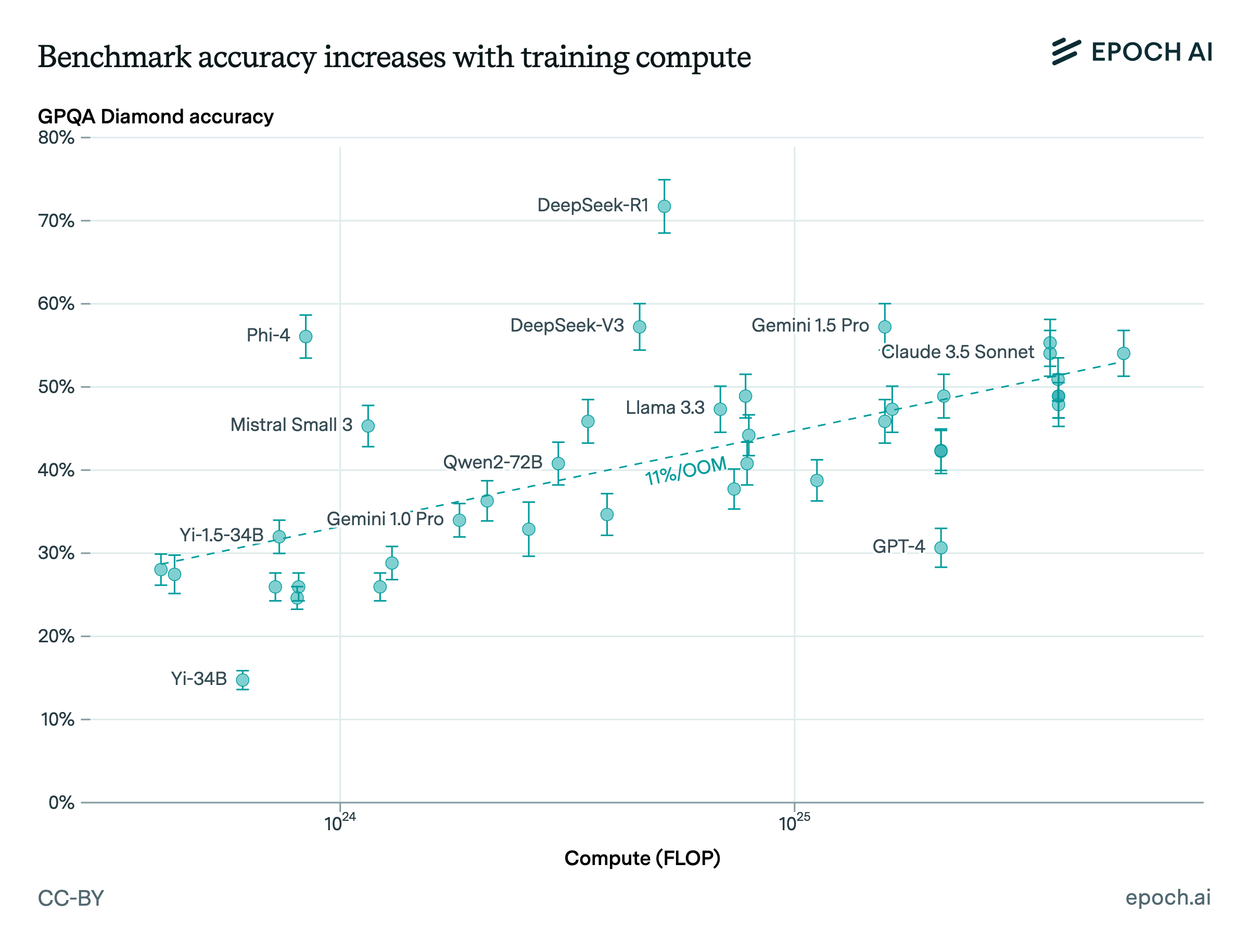

- GPQA Diamond poses graduate-level science questions across biology, physics, and chemistry, with questions designed to be “Google-proof”.

- Aider Polyglot evaluates models’ performance on a set of challenging programming problems.

- SimpleBench is designed to test common sense reasoning by posing problems that are difficult for present-day models but easy for humans.

- The Visual Physics Comprehension Test (VPCT) is a benchmark designed to evaluate models’ understanding of basic physical scenarios.

Data

Data comes from a combination of internal evaluations and external reports. Aider Polyglot, SimpleBench, and VPCT are each collected from external benchmark leaderboards. GPQA Diamond and FrontierMath are run internally by Epoch. See the FAQs of our Benchmarking Hub for more information on how these evaluations are run.
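One common way to turn a set of dated benchmark results into a trend line is to track the best score achieved so far at each point in time. The sketch below illustrates that idea only; it is not a description of how Epoch builds its charts. The `running_best` helper is hypothetical, and the records are placeholder values rather than real benchmark results.

```python
from datetime import date


def running_best(records):
    """Return the (date, score) pairs that set a new best score, in date order."""
    frontier = []
    best = float("-inf")
    for day, score in sorted(records):
        if score > best:
            best = score
            frontier.append((day, score))
    return frontier


# Placeholder values for illustration only; these are not real benchmark results.
records = [
    (date(2024, 10, 1), 0.20),
    (date(2025, 1, 15), 0.35),
    (date(2025, 3, 1), 0.30),   # below the current best, so it is skipped
    (date(2025, 6, 10), 0.50),
]

frontier = running_best(records)
for day, score in frontier:
    print(day.isoformat(), score)
```

Keeping only record-setting results makes the frontier monotonic, so a later model that scores below the current best does not show up as a dip in the trend.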

Assumptions and limitations

The selected benchmarks cover only a subset of the skills at which models have improved. In particular, we do not yet track benchmarks for domains like robotics or biology, both of which appear to have improved notably over the past year.

Conversely, strong benchmark scores do not guarantee that models will generalize perfectly to real-world scenarios. For example, scoring 100% on GPQA Diamond does not imply that a model can replace a human scientist: models can be overfit to benchmarks, and benchmarks typically do not capture all aspects of real-world work.