Distributed Training Simulator

FLOP Utilization Rates of Training Runs

How does utilization scale with the size of the training run?
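One common way to quantify utilization is to divide the FLOP a run actually performs by the FLOP the hardware could have performed in the same wall-clock time. The sketch below assumes the standard approximation C ≈ 6·N·D for a dense transformer with N parameters trained on D tokens. The function names and example numbers are hypothetical and are not taken from the simulator.

```python
def training_flop(n_params: float, n_tokens: float) -> float:
    """Approximate training compute of a dense transformer: C ≈ 6 * N * D."""
    return 6.0 * n_params * n_tokens


def flop_utilization(n_params: float, n_tokens: float, n_gpus: int,
                     peak_flop_per_s: float, wall_clock_s: float) -> float:
    """Achieved model FLOP/s divided by the cluster's aggregate peak FLOP/s."""
    achieved_flop_per_s = training_flop(n_params, n_tokens) / wall_clock_s
    cluster_peak_flop_per_s = n_gpus * peak_flop_per_s
    return achieved_flop_per_s / cluster_peak_flop_per_s


# Hypothetical run: 1B parameters, 20B tokens, 100 GPUs at 1e14 FLOP/s peak, 1e5 s.
utilization = flop_utilization(1e9, 2e10, 100, 1e14, 1e5)
print(utilization)
```

In this toy run the cluster does useful model work for about 12% of its theoretical peak. Real runs lose the remainder to communication, pipeline bubbles and memory-bound kernels.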

Configurations

How are training runs optimally parallelized?

Parallelism strategies
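Choosing a parallelization means searching over ways to split a cluster into data-, tensor- and pipeline-parallel groups. The following minimal sketch makes three assumptions: three-way (data × tensor × pipeline) parallelism, tensor parallelism confined to a single node, and pipeline stages holding equal numbers of layers. These constraints are illustrative, not the simulator's actual rules. Ranking the candidates would additionally require a cost model, which is not shown.

```python
def divisors(n: int) -> list[int]:
    """All positive divisors of n in increasing order."""
    return [k for k in range(1, n + 1) if n % k == 0]


def candidate_configs(n_gpus: int, gpus_per_node: int, n_layers: int) -> list[tuple[int, int, int]]:
    """Enumerate (data, tensor, pipeline) degrees whose product equals n_gpus."""
    configs = []
    for tp in divisors(gpus_per_node):      # assumption: tensor parallelism stays within one node
        if n_gpus % tp:
            continue
        for pp in divisors(n_layers):       # assumption: every pipeline stage gets the same layer count
            if (n_gpus // tp) % pp:
                continue
            dp = n_gpus // (tp * pp)        # remaining GPUs are used for data parallelism
            configs.append((dp, tp, pp))
    return configs


configs = candidate_configs(n_gpus=16, gpus_per_node=8, n_layers=4)
print(len(configs), configs[:3])
```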

Maximum feasible training compute

What is the largest training run that remains feasible at appreciable levels of utilization?

| Configuration | GPU | Model type | Maximum feasible training compute |
|---|---|---|---|
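A rough way to frame "largest feasible run" is to multiply cluster size, per-GPU peak throughput, utilization and training duration, while discarding cluster sizes whose utilization falls below a chosen threshold. In the sketch below, `toy_utilization` is a made-up curve standing in for the simulator's output. The threshold, peak FLOP/s, duration and power-of-two cluster sizes are likewise hypothetical assumptions.

```python
from typing import Callable

SECONDS_PER_DAY = 86_400


def max_feasible_compute(utilization_at: Callable[[int], float],
                         peak_flop_per_s: float, days: float,
                         min_utilization: float, max_gpus: int) -> float:
    """Largest training compute (FLOP) over power-of-two cluster sizes whose
    utilization stays at or above min_utilization; 0.0 if none qualifies."""
    best = 0.0
    n_gpus = 1
    while n_gpus <= max_gpus:
        u = utilization_at(n_gpus)
        if u >= min_utilization:
            compute = n_gpus * peak_flop_per_s * u * days * SECONDS_PER_DAY
            best = max(best, compute)
        n_gpus *= 2
    return best


def toy_utilization(n_gpus: int) -> float:
    """Hypothetical curve: utilization decays as the cluster grows (NOT simulator output)."""
    return 0.6 / (1 + n_gpus / 2**16)


result = max_feasible_compute(toy_utilization, peak_flop_per_s=1e15, days=90,
                              min_utilization=0.25, max_gpus=2**20)
print(f"{result:.3e} FLOP")
```

Under this toy curve, the largest qualifying cluster has 65,536 GPUs running at 30% utilization. Doubling it again drops utilization below the 25% threshold.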


Learn more

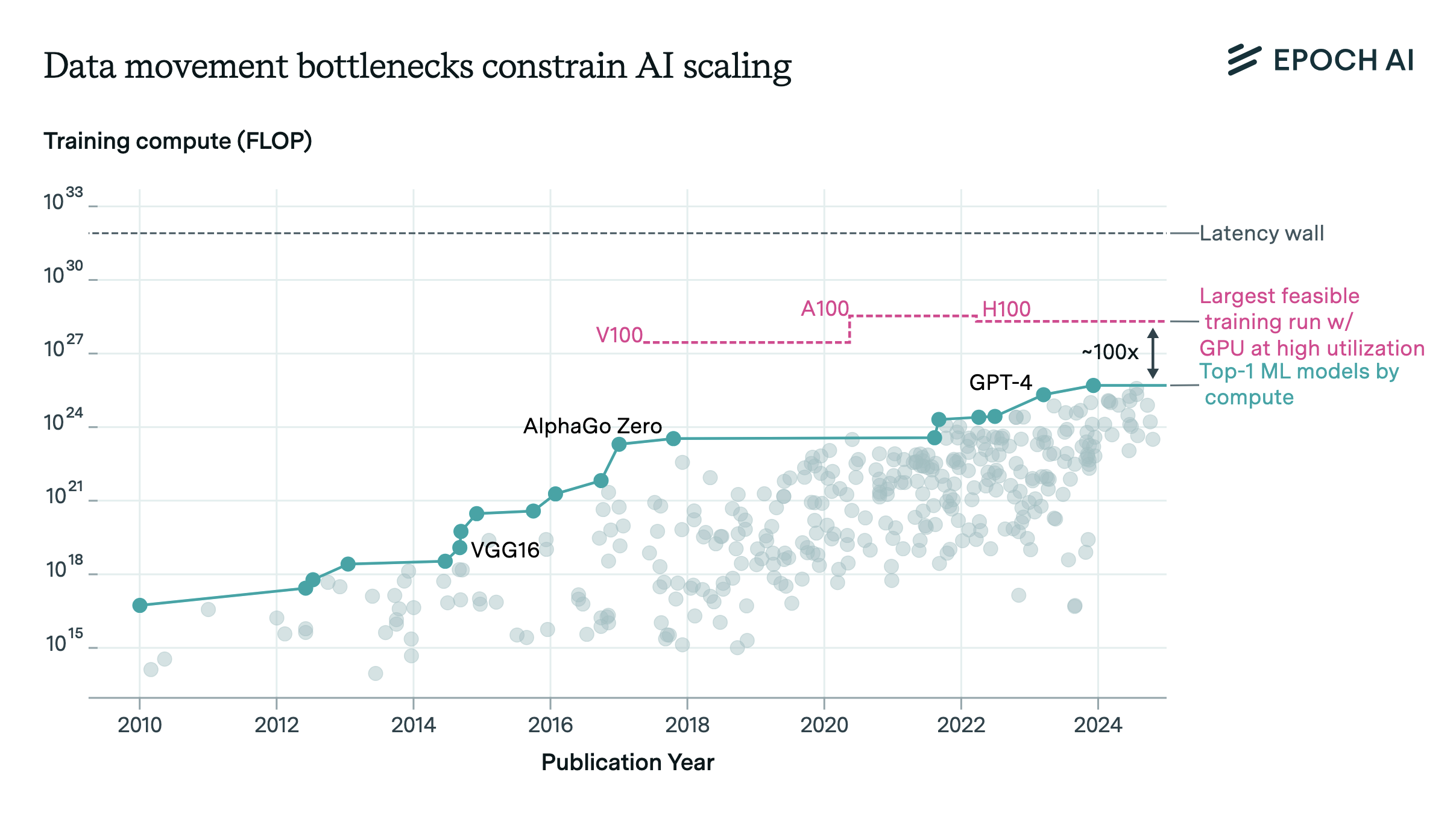

Data Movement Bottlenecks to Large-Scale Model Training: Scaling Past 1e28 FLOP

Data movement bottlenecks limit LLM scaling beyond 2e28 FLOP, with a “latency wall” at 2e31 FLOP. We may reach these limits in roughly three years. Aggressive batch size scaling could potentially overcome them.
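The intuition behind a latency wall can be illustrated with a toy calculation, which is not the article's model. If every optimizer step takes at least some minimum time, a run of fixed duration can execute only a bounded number of sequential steps. The tokens it can process are therefore capped at steps × batch size. The sketch also assumes C ≈ 6·N·D and a compute-optimal ratio of about 20 tokens per parameter; all parameter names and example values are hypothetical. Under these assumptions, the compute bound grows with the square of the batch size, which is why larger batches could push the wall outward.

```python
SECONDS_PER_DAY = 86_400


def latency_bound_compute(train_days: float, min_step_latency_s: float,
                          batch_tokens: float, tokens_per_param: float = 20.0) -> float:
    """Toy upper bound on training compute when every optimizer step takes at
    least min_step_latency_s seconds (all inputs are hypothetical)."""
    max_steps = train_days * SECONDS_PER_DAY / min_step_latency_s  # sequential steps that fit in the run
    max_tokens = max_steps * batch_tokens                          # tokens processed at a fixed batch size
    n_params = max_tokens / tokens_per_param                       # assumed compute-optimal ratio D ≈ 20·N
    return 6.0 * n_params * max_tokens                             # C ≈ 6·N·D


base = latency_bound_compute(train_days=90, min_step_latency_s=0.01, batch_tokens=4e6)
doubled = latency_bound_compute(train_days=90, min_step_latency_s=0.01, batch_tokens=8e6)
print(doubled / base)  # doubling the batch quadruples the bound under these assumptions
```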

Introducing the Distributed Training Interactive Simulator

We introduce an interactive tool that simulates distributed training runs of large language models under ideal conditions.