AI Models

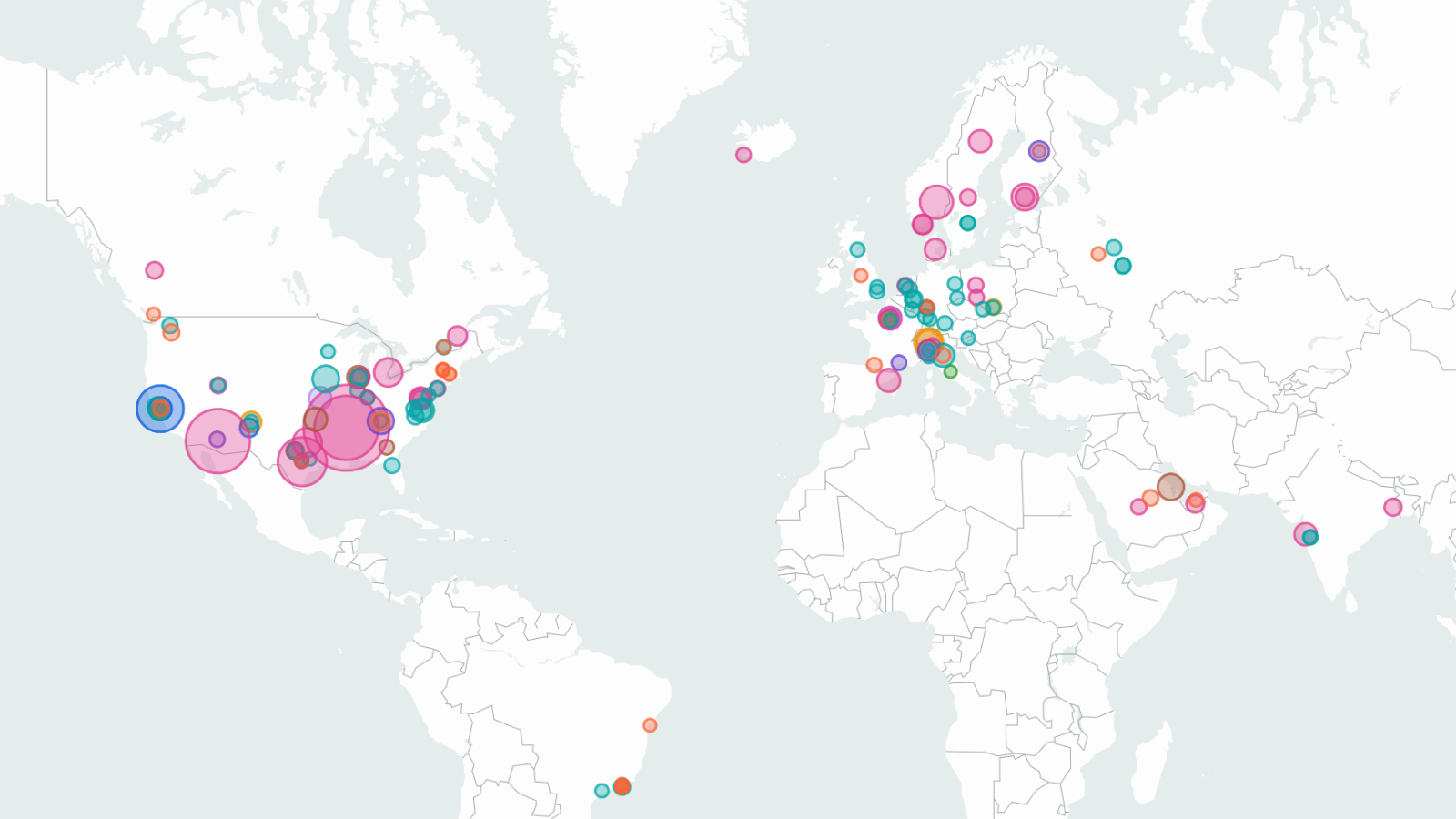

Our comprehensive database of over 3200 models tracks key factors driving machine learning progress.

Last updated February 27, 2026

Data insights

Selected insights from this dataset.

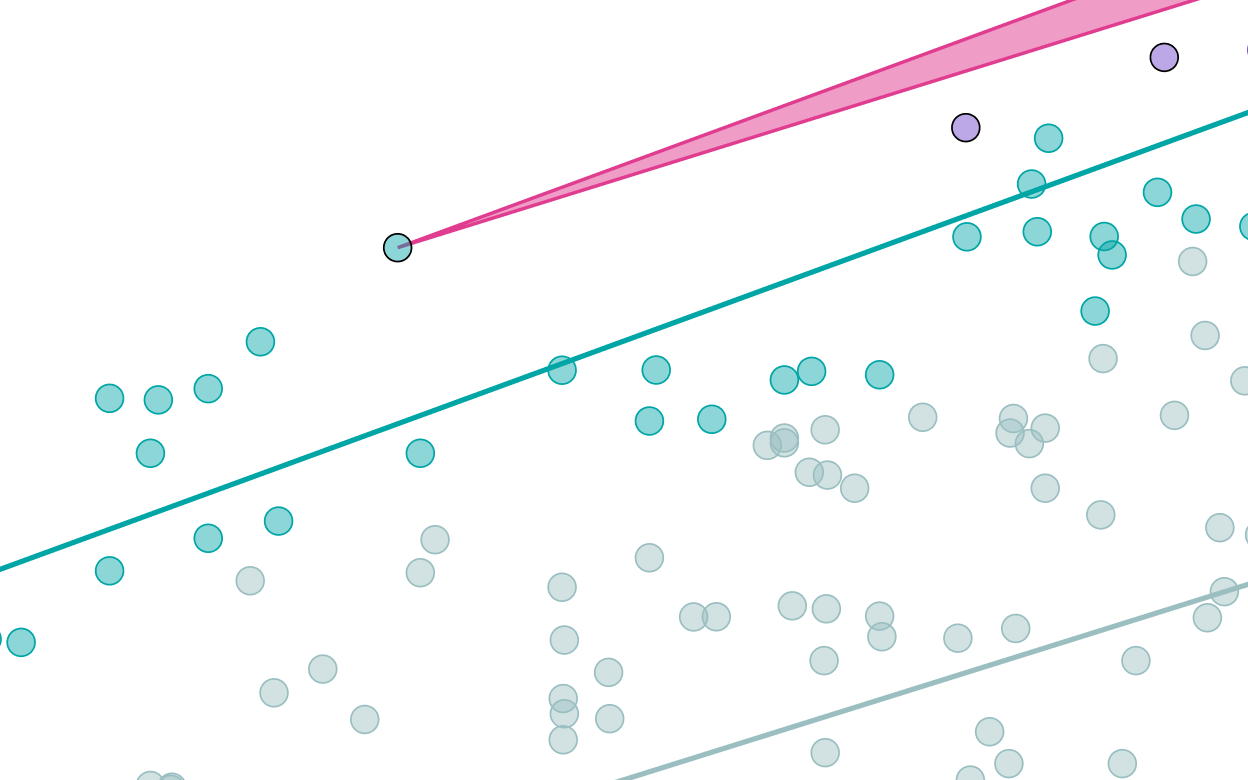

The training compute of notable AI models has been doubling roughly every six months

Since 2010, the training compute used to create AI models has been growing at a rate of 4.4x per year. Most of this growth comes from increased spending, although improvements in hardware have also played a role.
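
The link between the annual growth rate and the doubling time in the heading can be checked with a short calculation. This is a minimal sketch that assumes constant exponential growth; the function name `doubling_time_months` is hypothetical and not part of any Epoch AI tooling.

```python
import math


def doubling_time_months(annual_growth_factor: float) -> float:
    """Months needed to double, given a constant multiplicative growth per year."""
    if annual_growth_factor <= 1:
        raise ValueError("growth factor must exceed 1 for a finite doubling time")
    # Solve factor ** (t / 12) == 2 for t.
    return 12 * math.log(2) / math.log(annual_growth_factor)


# 4.4x per year is the growth rate quoted in the text.
print(round(doubling_time_months(4.4), 1))  # 5.6
```

A growth rate of 4.4x per year therefore corresponds to a doubling time of about 5.6 months, consistent with "roughly every six months".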

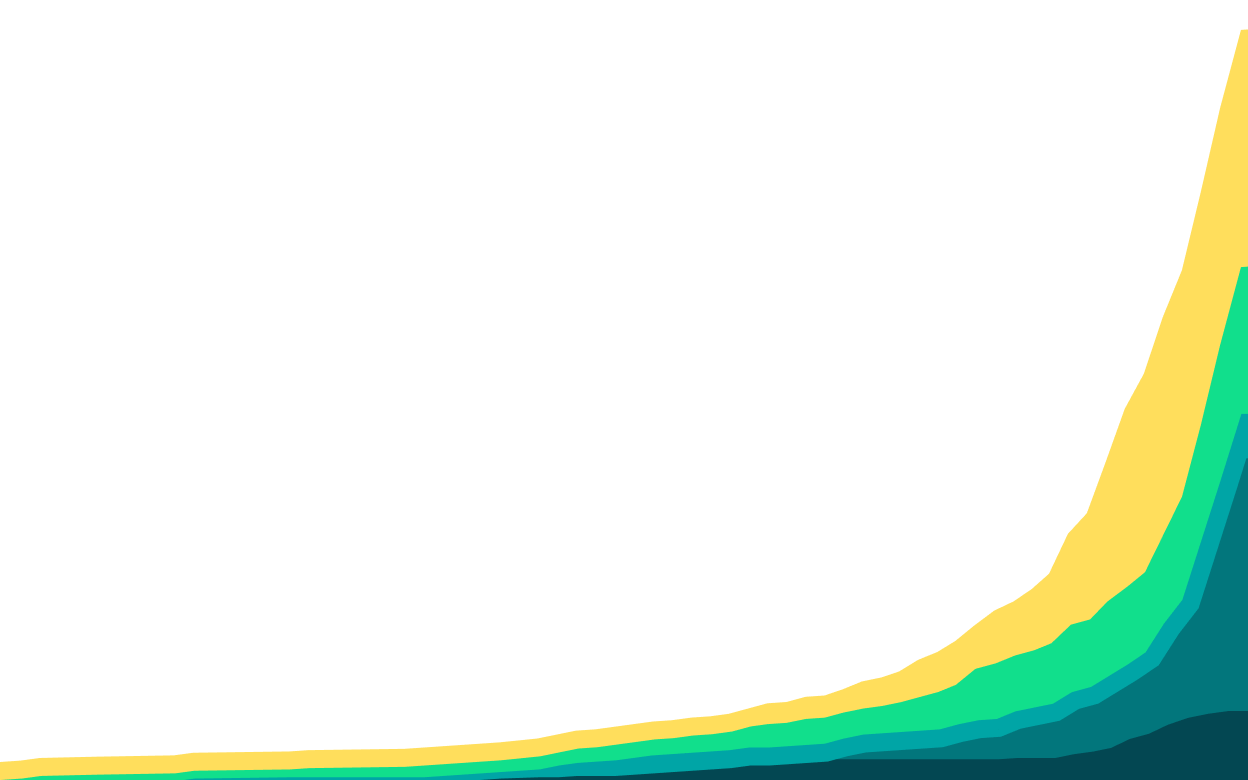

Training compute growth is driven by larger clusters, longer training, and better hardware

Since 2018, the most significant driver of compute scaling across frontier models has likely been an increase in the quantity of hardware used in training clusters. A shift towards longer training runs and increases in hardware performance have also been important.

These trends are closely linked to a massive surge in investment. AI development budgets have been expanding by around 2-3x per year, enabling vast training and inference clusters and ever-larger models.

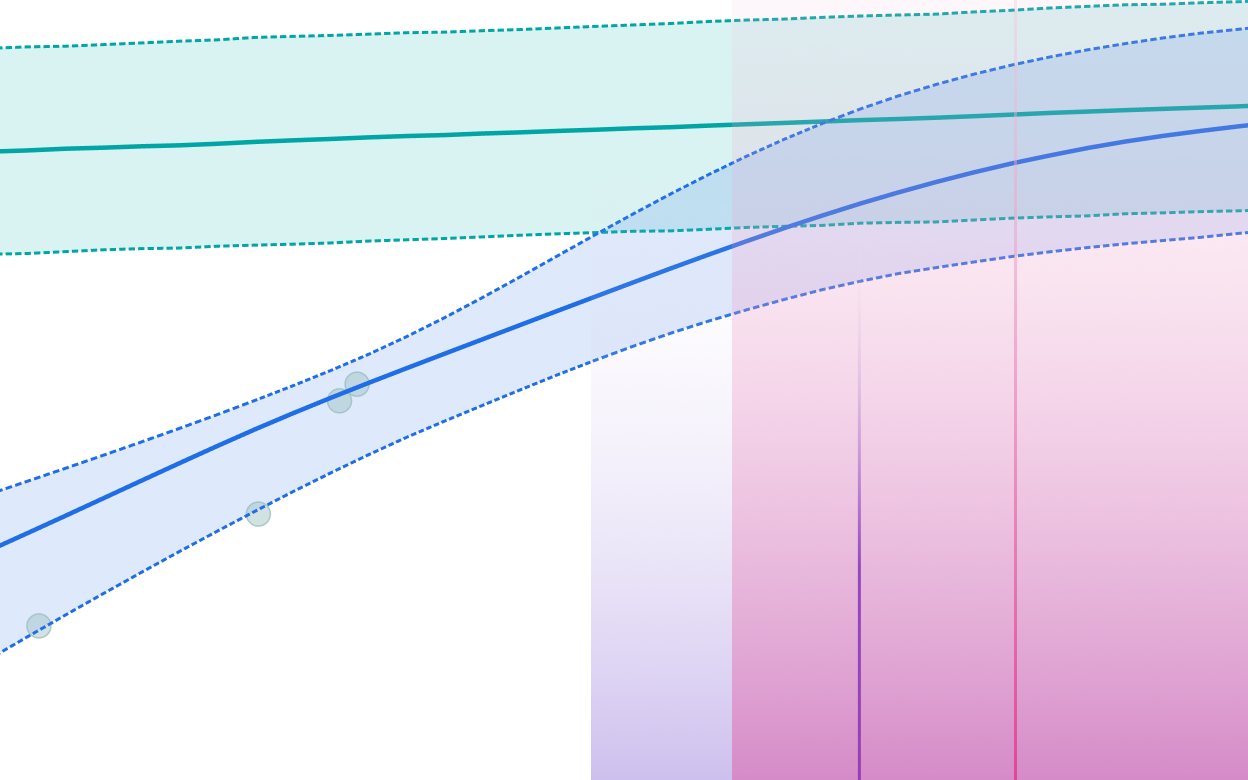

The power required to train frontier AI models is doubling annually

Training frontier models requires a large and growing amount of power for GPUs, servers, cooling and other equipment. This is driven by an increase in GPU count; power draw per GPU is also growing, but at only a few percent per year.

Training compute has grown even faster — around 4x/year. However, hardware efficiency (a 12x improvement in the last ten years), the adoption of lower precision formats (an 8x improvement) and longer training runs (a 4x increase) account for a roughly 2x/year decrease in power requirements relative to training compute.
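
The arithmetic behind the "roughly 2x/year" figure can be sketched as follows. The three cumulative factors come from the paragraph above; treating all of them as spanning the same ten-year window is an assumption made here for illustration, and the variable names are hypothetical.

```python
# Cumulative factors quoted in the text. Assumption: all three apply
# over the same ten-year window.
hardware_efficiency = 12.0
lower_precision = 8.0
longer_training = 4.0
years = 10

# Combined reduction in power needed per unit of training compute.
combined = hardware_efficiency * lower_precision * longer_training  # 384x

# Convert the ten-year factor into an equivalent per-year factor.
annual_offset = combined ** (1 / years)

compute_growth = 4.0  # training compute growth per year, from the text
implied_power_growth = compute_growth / annual_offset

print(f"annual offset: {annual_offset:.2f}x per year")
print(f"implied power growth: {implied_power_growth:.2f}x per year")
```

Under these assumptions the combined offset is about 1.8x per year, which leaves power requirements growing at a little over 2x per year, in line with the annual doubling described above.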

Our methodology for calculating or estimating a model’s power draw during training can be found here.

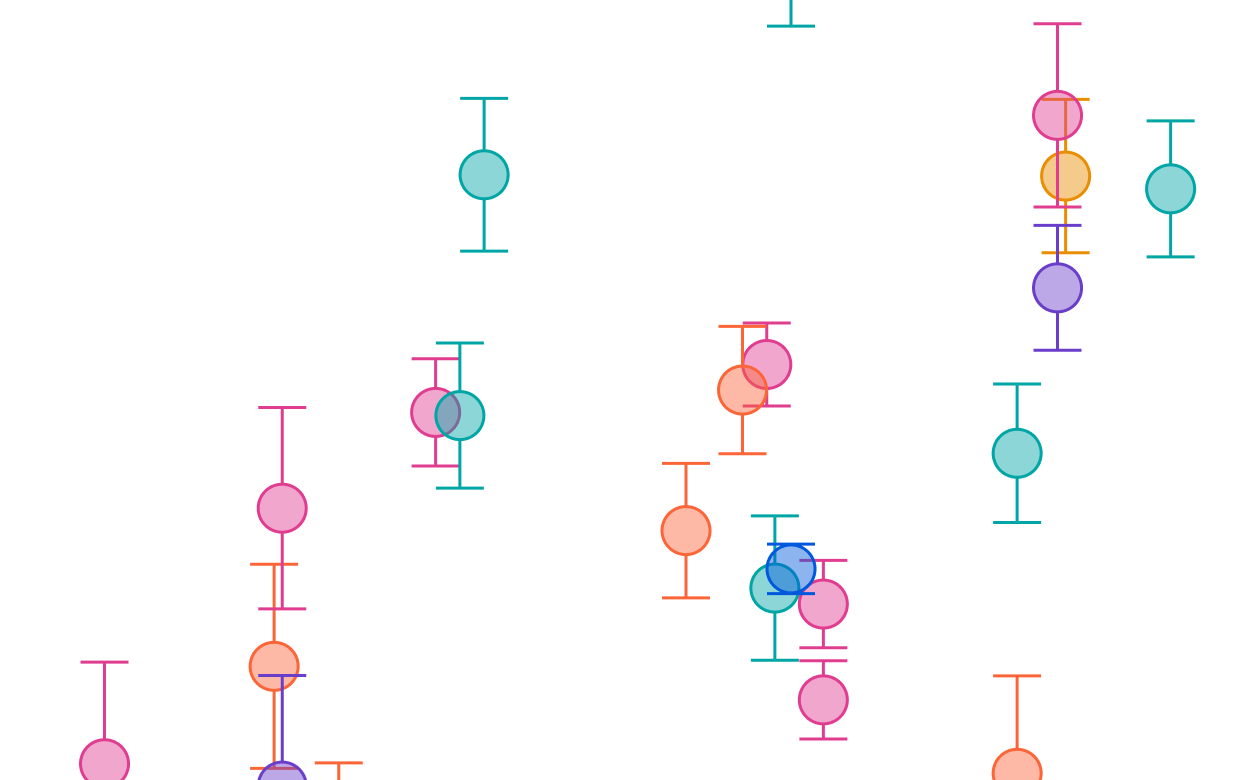

Training compute costs are doubling every eight months for the largest AI models

Spending on training large-scale ML models is growing at a rate of 2.4x per year. The most advanced models now cost hundreds of millions of dollars, with expenses measured by amortizing cluster costs over the training period. About half of this spending is on GPUs, with the remainder on other hardware and energy.

Over 30 AI models have been trained at the scale of GPT-4

The largest AI models today are trained with over 10^25 floating-point operations (FLOP) of compute. The first model trained at this scale was GPT-4, released in March 2023. As of June 2025, we have identified over 30 publicly announced AI models from 12 different AI developers that we believe to be over the 10^25 FLOP training compute threshold.

Training a model of this scale costs tens of millions of dollars with current hardware. Despite the high cost, we expect a proliferation of such models—we saw an average of roughly two models over this threshold announced every month during 2024. Models trained at this scale will be subject to additional requirements under the EU AI Act, coming into force in August 2025.

FAQ

What is a notable model?

A notable model meets any of the following criteria: (i) state-of-the-art improvement on a recognized benchmark; (ii) highly cited (over 1000 citations); (iii) historical relevance; (iv) significant use.

How was the AI Models dataset created?

The dataset was originally created for the report “Compute Trends Across Three Eras of Machine Learning” and has continually grown and expanded since then.

What are notable, frontier, and large-scale models?

We flag models as notable if they advanced the state of the art, achieved many citations in an academic publication, had over a million monthly users, were highly significant historically, or were developed at a cost of over one million dollars. You can learn more about these notability criteria by reading our AI Models Documentation.

Frontier models are models that were in the top 10 by training compute at the time of their release, a threshold that grows over time as larger models are developed.

Large-scale models are models that were trained with over 10^23 FLOP of compute, which is a static threshold that is used in some AI regulatory frameworks.
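
The difference between the moving frontier threshold and the static large-scale threshold can be illustrated with a small sketch. The record layout `(name, release_date, training_flop)`, the function name `classify`, and the toy data are all hypothetical; this is not the dataset's schema or Epoch AI's implementation, only one way to apply the two definitions above.

```python
import heapq
from datetime import date

LARGE_SCALE_FLOP = 1e23  # static threshold from the text
FRONTIER_RANK = 10       # top 10 by training compute at time of release


def classify(models):
    """Yield (name, is_large_scale, is_frontier) in release order.

    `models` is an iterable of (name, release_date, training_flop) tuples.
    """
    top = []  # min-heap holding the 10 largest compute values seen so far
    for name, released, flop in sorted(models, key=lambda m: m[1]):
        # Frontier: fewer than 10 models exist yet, or this one beats
        # the smallest member of the current top 10.
        is_frontier = len(top) < FRONTIER_RANK or flop > top[0]
        if len(top) < FRONTIER_RANK:
            heapq.heappush(top, flop)
        elif flop > top[0]:
            heapq.heapreplace(top, flop)
        yield name, flop > LARGE_SCALE_FLOP, is_frontier


# Toy data: ten models of increasing size, then one small and one large.
computes = [1e14, 1e15, 1e16, 1e17, 1e18, 1e19, 1e20, 1e21, 1e22, 1e24]
sample = [(f"toy-{i}", date(2020, i + 1, 1), c) for i, c in enumerate(computes)]
sample.append(("toy-small", date(2021, 1, 1), 1e13))
sample.append(("toy-big", date(2021, 6, 1), 1e25))

for row in classify(sample):
    print(row)
```

In this toy data, `toy-0` counts as frontier at its release despite its small compute, while the later `toy-small` does not, because the frontier threshold rises as larger models appear. The large-scale flag depends only on the fixed 10^23 FLOP cutoff.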

Why are the number of models in the database and the results in the explorer different?

The explorer only shows models where we have estimates to visualize, e.g. for training compute, parameter count, or dataset size. While we do our best to collect as much information as possible about the models in our databases, this process is limited by the amount of publicly available information from companies, labs, researchers, and other organizations. Further details about coverage can be found in the Records section of the documentation.

How is the data licensed?

Epoch AI’s data is free to use, distribute, and reproduce provided the source and authors are credited under the Creative Commons Attribution license. Complete citations can be found here.

How do you estimate details like training compute?

Where possible, we collect details such as training compute directly from publications. Otherwise, we estimate details from information such as model architecture and training data, or training hardware and duration. The documentation describes these approaches further. Per-entry notes on the estimation process can be found within the database.

How accurate is the data?

Records are labeled based on the uncertainty of their training compute, parameter count, and dataset size. “Confident” records are accurate within a factor of 3x, “Likely” records within a factor of 10x, and “Speculative” records within a factor of 30x, larger or smaller. Further details are available in the documentation. If you spot a mistake, please report it to data@epochai.org.
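
One way to read these labels is as a multiplicative range around each estimate. The sketch below assumes "within a factor of N, larger or smaller" means the true value lies between estimate / N and estimate × N; the names `CONFIDENCE_FACTORS` and `plausible_range` and the example estimate are hypothetical.

```python
# Uncertainty factors for each confidence label, as stated in the text.
CONFIDENCE_FACTORS = {"Confident": 3, "Likely": 10, "Speculative": 30}


def plausible_range(estimate: float, confidence: str) -> tuple[float, float]:
    """Return (low, high) bounds implied by a record's confidence label."""
    factor = CONFIDENCE_FACTORS[confidence]
    return estimate / factor, estimate * factor


# Hypothetical record: 2e25 FLOP of training compute, labeled "Likely".
low, high = plausible_range(2e25, "Likely")
print(f"{low:.1e} to {high:.1e} FLOP")  # 2.0e+24 to 2.0e+26 FLOP
```

A "Likely" record therefore spans two orders of magnitude in total, which is worth keeping in mind when comparing individual models.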

What are the question marks in some plots?

Models with the “Speculative” confidence level are indicated with a small question mark icon on the graph, to alert users not to treat this data as very precise. In some cases, numbers may be based on partial information about training hardware, reported benchmark scores, or leaked sources. In other cases, developers provide information that is consistent with a wide range of values, such as “months” of training time, or “trillions” of data points.

How up-to-date is the data?

The dataset is kept up-to-date by monitoring a variety of sources, including academic publications, press releases, and online news. An automated search process identifies newly released models each week using the Google Search API, and this is supplemented by models identified manually by Epoch staff.

The field of machine learning is highly active with frequent new releases, so there will inevitably be some models that have not yet been added. Generally, major models should be added within two weeks of their release, and others are added periodically during literature reviews. If you notice a missing model, you can notify us at data@epochai.org.

How can I access this data?

Download the data in CSV format.

Explore the data using our interactive tools.

View the data directly in a table format.

Who can I contact with questions or comments about the data?

Feedback and questions can be directed to the data group at data@epochai.org.

Documentation

Models in this dataset have been collected from various sources, including literature reviews, Papers With Code, historical accounts, highly-cited publications, proceedings of top conferences, and suggestions from individuals. The list of models is non-exhaustive, but aims to cover most models that were state-of-the-art when released, have over 1000 citations, have one million monthly active users, or have an equivalent level of historical significance. Additional information about our approach to measuring parameter counts, dataset size, and training compute can be found in the accompanying documentation.

Use this work

Licensing

Epoch AI's data is free to use, distribute, and reproduce provided the source and authors are credited under the Creative Commons Attribution license.

Citation

Epoch AI, ‘Data on AI Models’. Published online at epoch.ai. Retrieved from ‘https://epoch.ai/data/ai-models’ [online resource]. Accessed .

BibTeX Citation

@misc{EpochAIModels2025,
  title = {Data on AI Models},
  author = {{Epoch AI}},
  year = {2025},
  month = {07},
  url = {https://epoch.ai/data/ai-models},
  note = {Accessed: }
}

Python Import

import pandas as pd

data_url = "https://epoch.ai/data/all_ai_models.csv"

models_df = pd.read_csv(data_url)

Download this data

Notable AI Models

CSV, Updated February 27, 2026

Large-Scale AI Models

CSV, Updated February 19, 2026

Frontier Models

CSV, Updated February 27, 2026

All Models

CSV, Updated February 27, 2026