The computational performance of machine learning hardware has doubled every 2.3 years

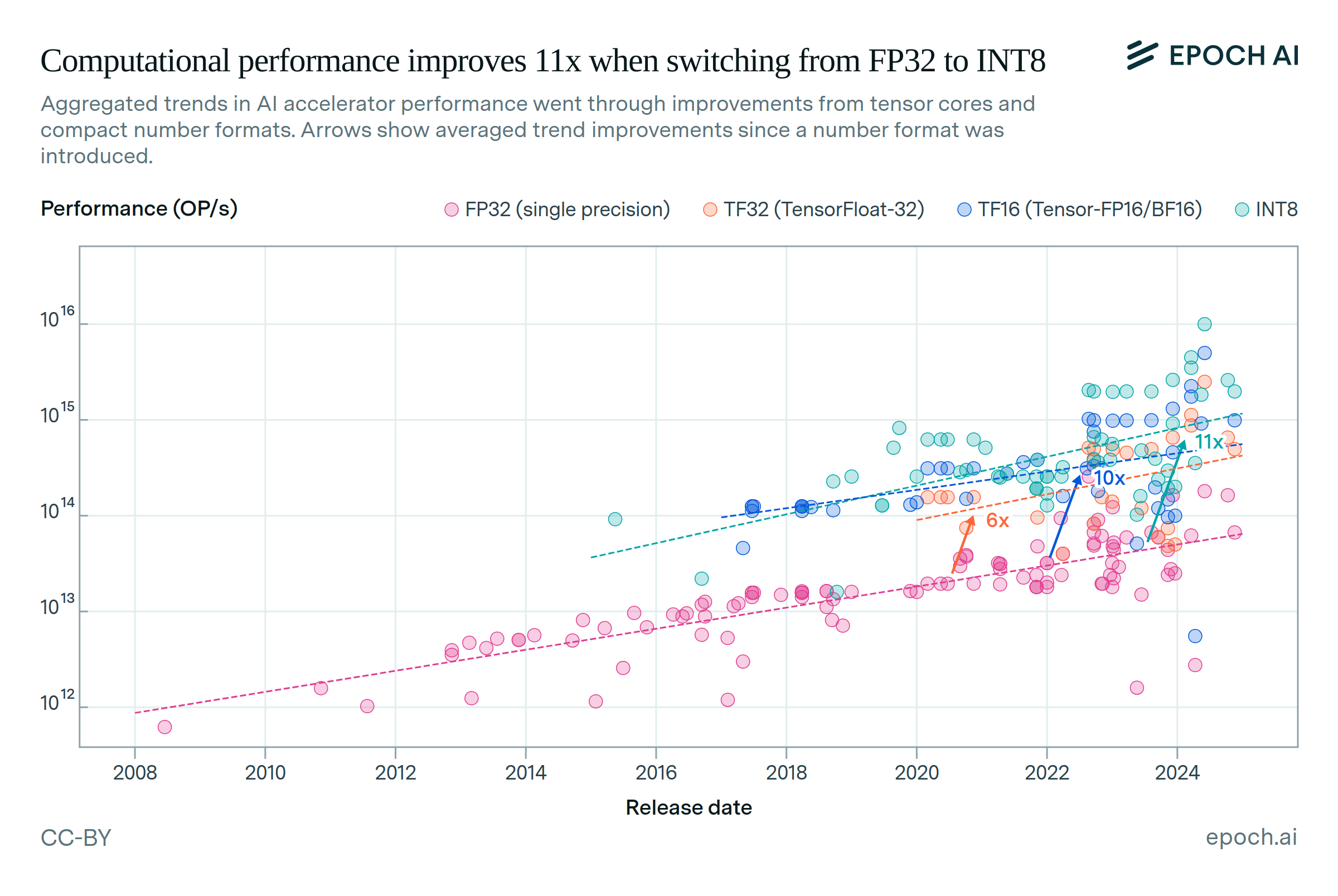

Measured in 16-bit floating-point operations per second, ML hardware performance has increased at a rate of 36% per year, doubling every 2.3 years. A similar trend exists in 32-bit performance. Number formats optimized for ML and tensor cores provided additional improvements on top of this trend.
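
The link between the 36% annual growth rate and the 2.3-year doubling time can be checked with a short calculation. The sketch below assumes constant compound growth; the function names are illustrative and are not taken from the original analysis.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def growth_factor(annual_growth_rate: float, years: float) -> float:
    """Total multiplicative improvement after `years` of compound growth."""
    return (1 + annual_growth_rate) ** years


rate = 0.36  # 36% per year, as stated in the text

# Doubling time implied by the stated rate (roughly 2.3 years).
print(f"Doubling time: {doubling_time_years(rate):.1f} years")

# Cumulative improvement implied over a decade, assuming the rate holds.
print(f"Growth over 10 years: {growth_factor(rate, 10):.1f}x")
```

Under this assumption, a decade of 36% annual growth compounds to roughly a twentyfold increase in performance.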

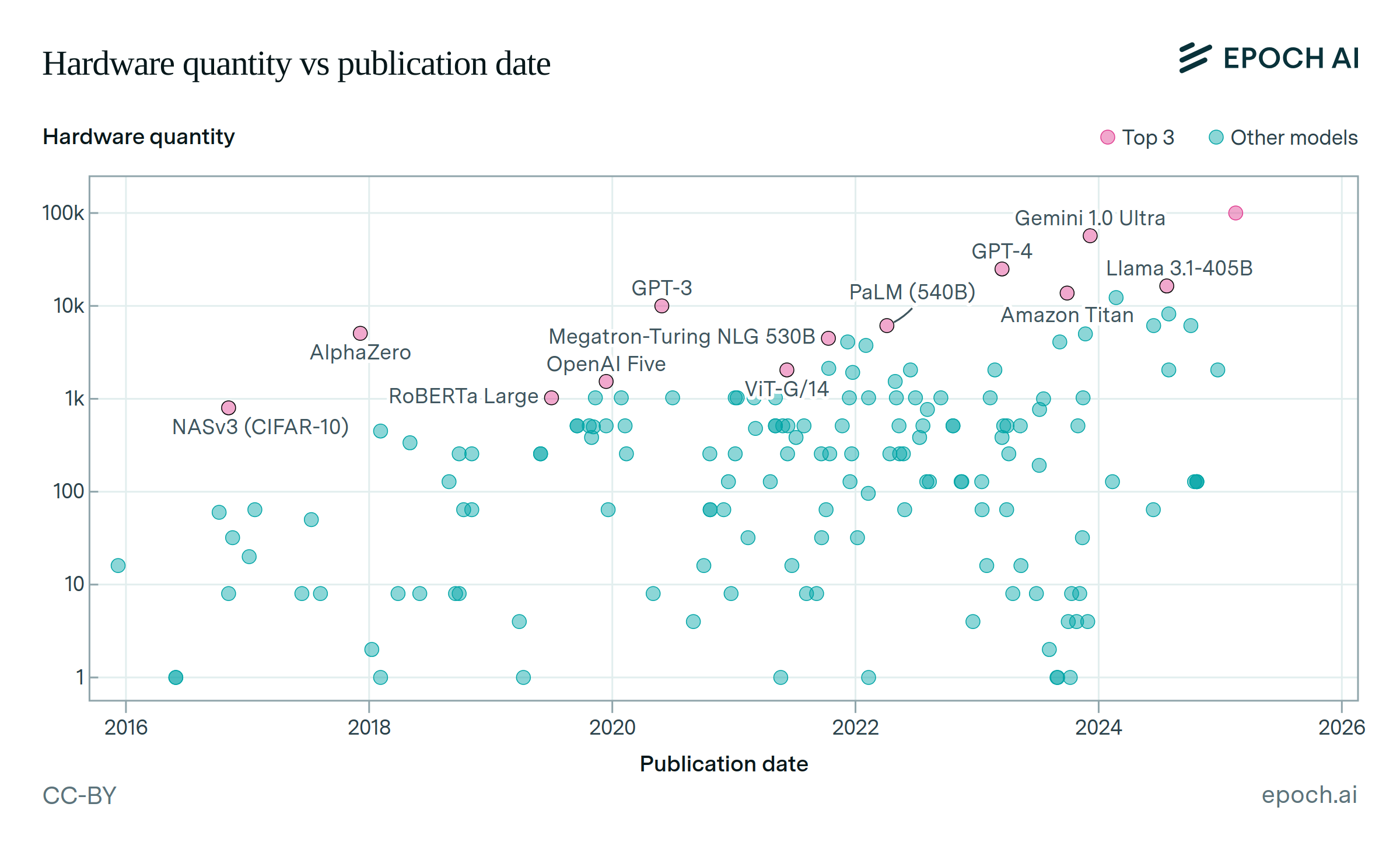

These gains were driven by rising transistor counts and other advances in semiconductor manufacturing, as well as by chip designs specialized for AI workloads. They lowered the cost per FLOP, increased energy efficiency, and enabled large-scale AI training.

Published October 23, 2024