Machine Learning Hardware

We present key data on over 170 AI accelerators, such as graphics processing units (GPUs) and tensor processing units (TPUs), used to develop and deploy machine learning models in the deep learning era.

Last updated February 11, 2026

Data insights

Selected insights from this dataset.

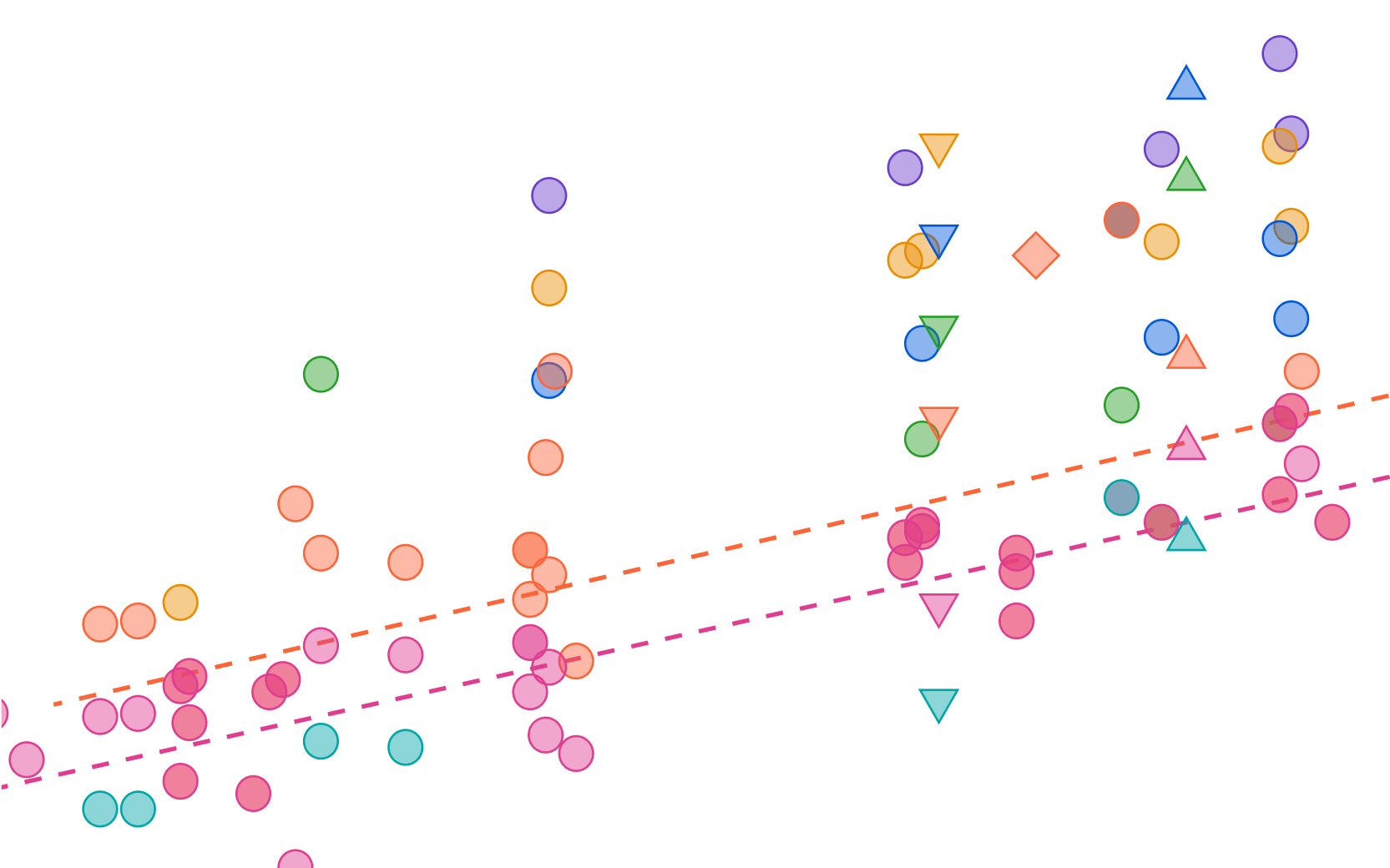

The computational performance of machine learning hardware has doubled every 2.3 years

Measured in 16-bit floating point operations, ML hardware performance has increased at a rate of 36% per year, doubling every 2.3 years. A similar trend exists in 32-bit performance. Optimized ML number formats and tensor cores provided additional improvements.
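The 36% annual rate and the 2.3-year doubling time describe the same exponential trend. If performance grows by a factor of (1 + r) each year, it doubles after ln 2 / ln(1 + r) years. Here is a minimal Python sketch of the conversion in both directions, assuming smooth exponential growth:

```python
import math


def doubling_time(annual_growth: float) -> float:
    """Years needed to double, given a constant fractional growth rate per year."""
    return math.log(2) / math.log(1 + annual_growth)


def annual_growth(doubling_years: float) -> float:
    """Constant yearly growth rate implied by a given doubling time."""
    return 2 ** (1 / doubling_years) - 1


print(f"36% per year -> doubles every {doubling_time(0.36):.2f} years")
print(f"doubling every 2.3 years -> {annual_growth(2.3):.1%} per year")
```

Plugging in 36% gives about 2.25 years, which agrees with the rounded 2.3-year figure. Going the other way, a 2.3-year doubling time implies roughly 35% growth per year.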

The improvement was driven by increasing transistor counts and other semiconductor manufacturing improvements, as well as specialized design for AI workloads. This improvement lowered cost per FLOP, increased energy efficiency, and enabled large-scale AI training.

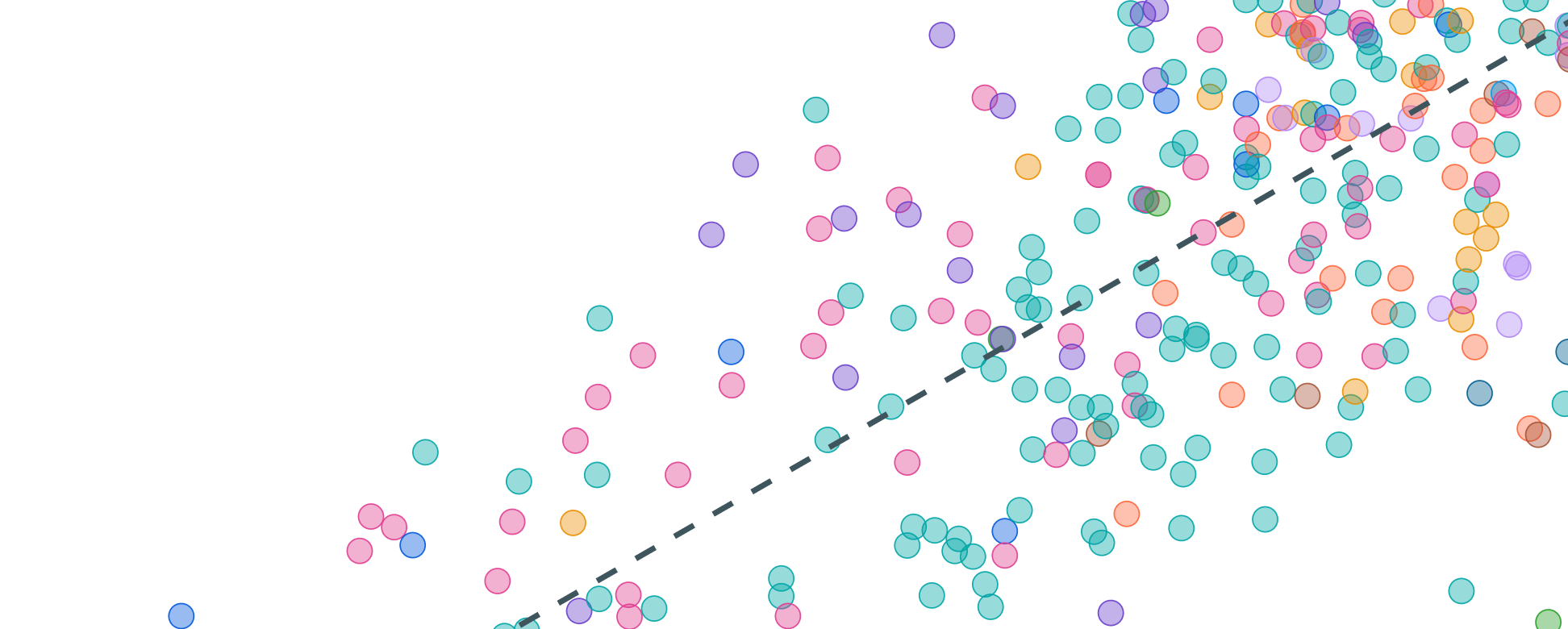

Performance per dollar improves around 30% each year

Performance per dollar has improved rapidly, and hardware at any given precision and fixed performance level becomes 30% cheaper each year. At the same time, manufacturers continue to introduce more powerful and expensive hardware.
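A 30% yearly price decline compounds quickly. This short Python sketch gives the price of a fixed level of performance relative to today. It assumes the decline stays constant at 30% every year, which is a simplification of the trend described above:

```python
def relative_cost(years: float, annual_decline: float = 0.30) -> float:
    """Price of a fixed level of performance relative to today, assuming it
    falls by the same fraction every year (a smooth exponential trend)."""
    return (1 - annual_decline) ** years


for years in (1, 2, 5, 10):
    print(f"after {years:>2} years: {relative_cost(years):.3f} of today's price")
```

Under this assumption, the same performance costs about 17% of its original price after five years (0.7 to the fifth power is roughly 0.168).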

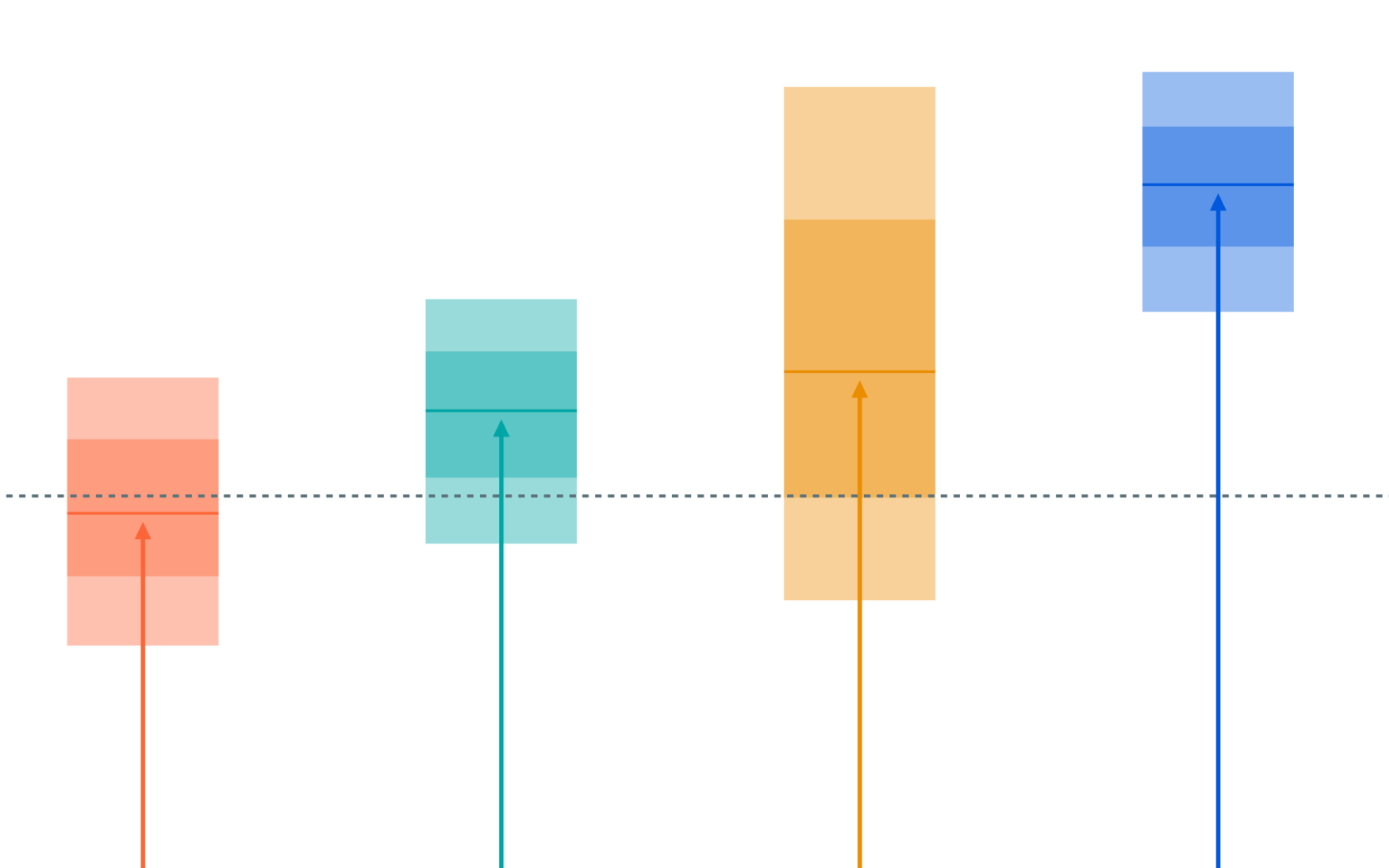

Performance improves 13x when switching from FP32 to tensor-INT8

GPUs are typically faster when using tensor cores and number formats optimized for AI computing. Compared with non-tensor FP32, the TF32, tensor-FP16, and tensor-INT8 formats provide around 6x, 11x, and 13x greater performance, respectively, on average in the aggregate performance trends. Some chips achieve even larger speedups. For example, the H100 is 59x faster in INT8 than in FP32.

Since their introduction, these formats have accounted for about half of the overall improvement in the performance trend. Models trained with lower-precision formats have become common, especially tensor-FP16, as developers take advantage of this boost in performance.
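The average multipliers can be used for a back-of-the-envelope estimate of a chip's throughput in each format, starting from its non-tensor FP32 figure. This Python sketch uses a hypothetical chip rated at 50 trillion FP32 operations per second. Because the multipliers are averages over the aggregate trend, any individual chip may deviate substantially, as the H100's 59x INT8 speedup shows:

```python
# Average speedups relative to non-tensor FP32, as quoted in the text.
# Individual chips can deviate a lot (the H100 reaches 59x in INT8).
AVG_SPEEDUP_VS_FP32 = {
    "FP32": 1.0,
    "TF32": 6.0,
    "tensor-FP16": 11.0,
    "tensor-INT8": 13.0,
}


def estimated_throughput(fp32_ops_per_s: float, fmt: str) -> float:
    """Rough throughput in a given format, scaled from a chip's FP32 figure."""
    return fp32_ops_per_s * AVG_SPEEDUP_VS_FP32[fmt]


fp32 = 50e12  # hypothetical chip: 50 trillion FP32 operations per second
for fmt in AVG_SPEEDUP_VS_FP32:
    print(f"{fmt:<12} ~{estimated_throughput(fp32, fmt) / 1e12:.0f} trillion ops/s")
```

For the hypothetical chip, this gives about 300, 550, and 650 trillion operations per second in TF32, tensor-FP16, and tensor-INT8. These figures are integer operations in the INT8 case, not FLOP.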

Leading ML hardware becomes 40% more energy-efficient each year

Historically, the energy efficiency of leading GPUs and TPUs has doubled every 2 years. In tensor-FP16 format, the most efficient accelerators are Meta’s MTIA, at up to 2.1 × 10¹² FLOP/s per watt, and the NVIDIA H100, at up to 1.4 × 10¹² FLOP/s per watt. The upcoming Blackwell series of processors may be even more efficient, depending on their power consumption.
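Efficiency figures in FLOP/s per watt convert directly into energy per operation, because one watt is one joule per second. The Python sketch below applies this to the tensor-FP16 figures quoted above. These are peak datasheet-derived values rather than measured energy use. The sketch also checks that 40% annual gains compound to roughly a doubling every two years:

```python
def joules_per_flop(flops_per_watt: float) -> float:
    # 1 W = 1 J/s, so (FLOP/s)/W = FLOP/J; invert to get joules per FLOP.
    return 1.0 / flops_per_watt


for name, eff in [("MTIA (tensor-FP16)", 2.1e12), ("H100 (tensor-FP16)", 1.4e12)]:
    print(f"{name}: {joules_per_flop(eff) * 1e12:.2f} pJ per FLOP")

# 40% yearly gains compound to 1.4 ** 2 = 1.96x over two years.
print(f"{1.4 ** 2:.2f}x after 2 years")
```

At these peak figures, the MTIA works out to roughly 0.48 picojoules per FLOP and the H100 to roughly 0.71 picojoules per FLOP.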

FAQ

Which processors count as machine learning hardware?

GPUs and TPUs are identified as machine learning hardware if they are used to train a notable ML model, or are offered on a rental basis for ML workloads by major cloud compute providers. CPUs are not included in this dataset.

What do number formats like FP32, BF16, and INT8 mean?

Numerical values used in computing are represented in several different formats, and these formats vary in the number of bits (0s or 1s) required to represent one number. Higher-bit formats are more precise, but require more storage and more compute to process, and AI developers have been moving to lower-precision formats over time using new hardware optimized for those formats (more details here).
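To make the trade-off between precision and storage concrete, this small Python sketch stores the same value as a 64-, 32-, and 16-bit IEEE 754 float using the standard `struct` module, then reads it back. The 16-bit case uses IEEE half precision (FP16) because `struct` supports that format directly. BF16 is not available there.

```python
import struct

# struct format codes: 'd' = 64-bit, 'f' = 32-bit, 'e' = 16-bit IEEE 754 floats.
FORMATS = {"FP64": "<d", "FP32": "<f", "FP16": "<e"}


def round_trip(x: float, code: str) -> float:
    """Store x in the given binary format and read it back as a Python float."""
    return struct.unpack(code, struct.pack(code, x))[0]


x = 0.1
for name, code in FORMATS.items():
    y = round_trip(x, code)
    print(f"{name}: {struct.calcsize(code)} bytes, stored as {y!r}, error {abs(y - x):.1e}")
```

Each halving of the storage size increases the rounding error. In FP16, 0.1 comes back as 0.0999755859375.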

Commonly used formats include FP64, FP32/TF32, BF16, and INT8:

- FP64 is a 64-bit floating-point format, also known as double-precision floating-point. It typically consists of 1 sign bit, 11 exponent bits, and 52 mantissa (or significand) bits.

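- FP32 is a 32-bit floating-point format, also known as single-precision floating-point, that is commonly used in computing. It consists of 1 sign bit, 8 exponent bits, and 23 mantissa bits. TF32 is an NVIDIA format used primarily for AI and ML workloads on tensor cores. It keeps FP32’s 8 exponent bits but only 10 mantissa bits (19 bits in total), allowing faster computation while maintaining adequate precision for deep learning.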

- BF16 is a 16-bit floating-point format designed for AI and machine learning applications. It uses 1 sign bit, 8 exponent bits (like FP32), and 7 mantissa bits, and offers roughly 2 to 3 decimal digits of precision. Despite its reduced precision, BF16 covers about the same range as FP32 because it has the same number of exponent bits. It is widely used in AI/ML for training and inference, particularly in neural networks, where high precision in the mantissa is less critical. On this page we group together BF16 and NVIDIA tensor operations at FP16 precision as “tensor-FP16”.

- INT8 is an 8-bit integer format. It represents values in a fixed range, typically -128 to 127 for signed integers or 0 to 255 for unsigned integers. It’s used in AI/ML for quantizing neural networks, reducing model size, and speeding up inference by lowering the precision of weights and activations.
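The bit layouts in the list above can be illustrated with a minimal Python sketch. It emulates BF16 and TF32 by zeroing the low mantissa bits of an FP32 value. This is a simplification, because hardware conversions typically round (for example, to nearest even) rather than truncate. The sketch also shows a simple symmetric per-tensor INT8 quantization, which is one common scheme among several. All function names are illustrative and do not come from any library.

```python
import struct


def fp32_bits(x: float) -> int:
    """Raw 32-bit pattern of x stored as FP32."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_fp32(bits: int) -> float:
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def truncate_mantissa(x: float, kept_mantissa_bits: int) -> float:
    """Keep FP32's sign and 8 exponent bits, zero all but the top mantissa bits.

    kept_mantissa_bits=7 mimics BF16 and 10 mimics TF32 (truncation, not rounding).
    """
    drop = 23 - kept_mantissa_bits
    return bits_to_fp32((fp32_bits(x) >> drop) << drop)


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric INT8 quantization: q = round(x / scale), scale = max|x| / 127."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    q = [max(-128, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    return [qi * scale for qi in q]


print(truncate_mantissa(1 / 3, 7))   # BF16-like
print(truncate_mantissa(1 / 3, 10))  # TF32-like
print(truncate_mantissa(1e38, 7))    # still finite: FP32's exponent range is kept
try:
    struct.pack("<e", 1e5)  # IEEE FP16 tops out at 65504
except OverflowError:
    print("1e5 overflows FP16")

values = [0.5, -1.0, 0.25]
q, scale = quantize_int8(values)
print(q, dequantize_int8(q, scale))
```

For example, FP32 stores 1/3 as about 0.33333334. With only 7 mantissa bits kept, the value becomes 0.33203125. In contrast, 1e38 stays finite in the BF16-like format while overflowing IEEE FP16.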

How is the data licensed?

Epoch AI’s data is free to use, distribute, and reproduce provided the source and authors are credited under the Creative Commons Attribution license. Complete citations can be found here.

How can I access this data?

Download the data in CSV format.

Explore the data using our interactive tools.

View the data directly in a table format.
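Python's standard `csv` module is enough for a first look at the downloaded CSV file. The following is a minimal sketch that assumes the file has a header row. The column names used below ("Hardware name", "FP32 (FLOP/s)") are hypothetical placeholders, so check them against the actual header of the file. Empty cells are skipped, since many columns are left blank when a figure is unavailable.

```python
import csv
import io


def load_column(f, name_column: str, value_column: str) -> list[tuple[str, float]]:
    """Read (name, value) pairs from a CSV file object, skipping empty cells.

    Column names are parameters because the real header names are not assumed.
    """
    pairs = []
    for row in csv.DictReader(f):
        raw = (row.get(value_column) or "").strip()
        if raw:  # cells are often empty when a figure is unavailable or not applicable
            pairs.append((row[name_column], float(raw)))
    return pairs


# Made-up two-row sample standing in for the downloaded file.
sample = io.StringIO("Hardware name,FP32 (FLOP/s)\nChip A,1e13\nChip B,\n")
print(load_column(sample, "Hardware name", "FP32 (FLOP/s)"))
```

For the real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object in place of `sample`. Here, `path` is wherever you saved the download.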

Who can I contact with questions or comments about the data?

Feedback can be directed to the data team at data@epoch.ai.

Documentation

To identify ML hardware, we annotated the chips used for ML training in our database of Notable AI Models. We also added ML hardware that is not documented as having been used to train those systems but is clearly designed for ML, based on its description, its supported numerical formats, or its membership in the same family as other ML hardware.
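The inclusion criteria from this section and from the FAQ can be summarized as a simple predicate. This is a simplified Python sketch. The `Chip` fields are hypothetical and stand in for judgments that, in practice, are made by annotators:

```python
from dataclasses import dataclass


@dataclass
class Chip:
    # Hypothetical fields summarizing the criteria described in the text.
    is_cpu: bool
    trained_notable_model: bool
    rented_for_ml_by_major_cloud: bool
    clearly_designed_for_ml: bool  # from description, numeric formats, or product family


def counts_as_ml_hardware(chip: Chip) -> bool:
    if chip.is_cpu:
        return False  # CPUs are excluded from the dataset
    return (
        chip.trained_notable_model
        or chip.rented_for_ml_by_major_cloud
        or chip.clearly_designed_for_ml
    )
```

Meeting any one criterion is enough for inclusion, while the CPU exclusion overrides all of them.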

We use hardware datasheets, documented for each chip in the dataset, to fill in key information such as computing performance, die size, etc. Not all information is available, or even applicable, for all hardware, so columns are often left empty. We additionally use other sources, such as news coverage or hardware price archives, to fill in the release price.

Use this work


Citation

Epoch AI, ‘Data on Machine Learning Hardware’. Published online at epoch.ai. Retrieved from ‘https://epoch.ai/data/machine-learning-hardware’ [online resource]. Accessed .

BibTeX Citation

@misc{EpochMachineLearningHardware2024,
  title = {Data on Machine Learning Hardware},
  author = {{Epoch AI}},
  year = {2024},
  month = {10},
  url = {https://epoch.ai/data/machine-learning-hardware},
  note = {Accessed: }
}

Download this data

Machine Learning Hardware

CSV, Updated February 11, 2026