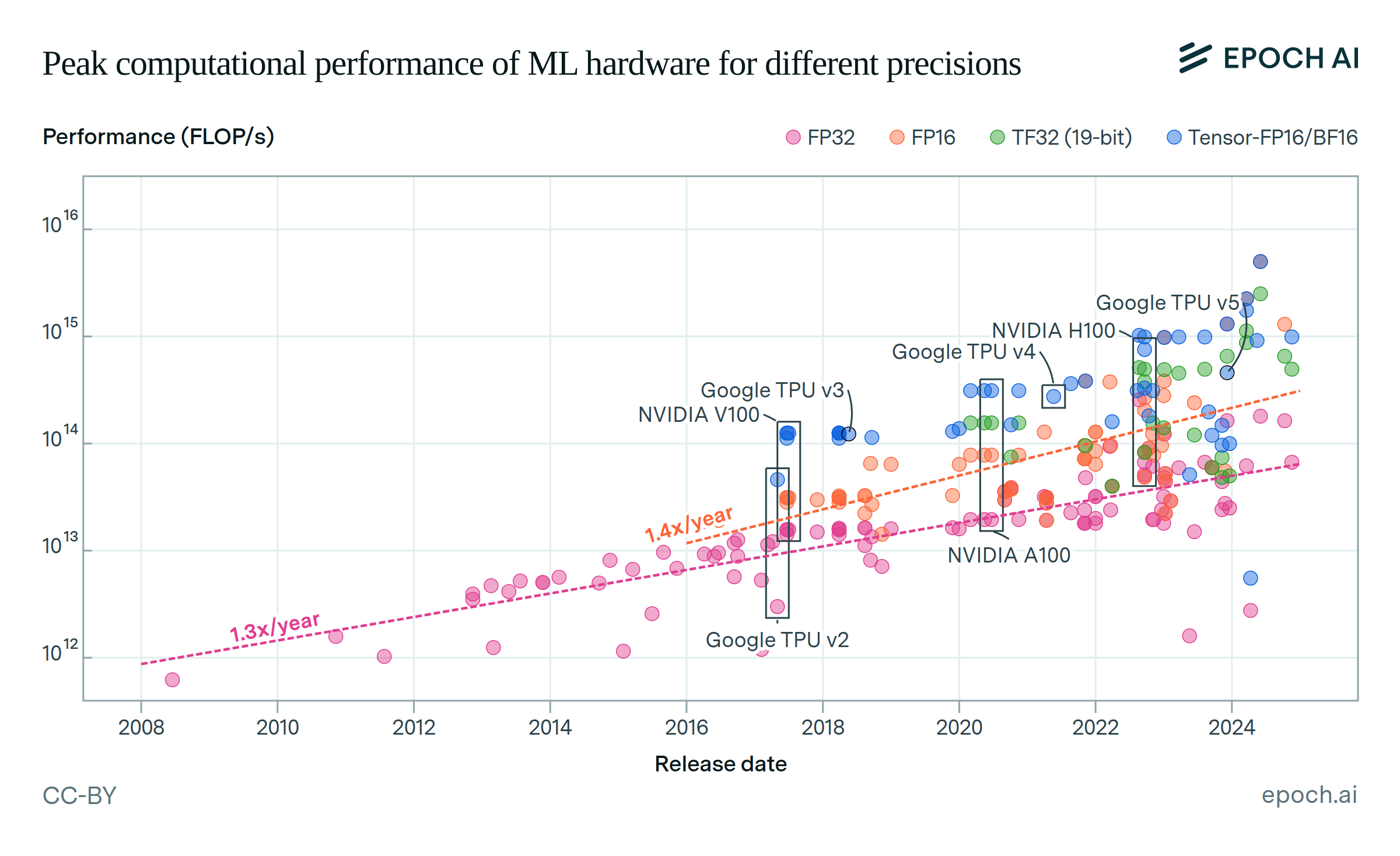

Performance improves 13x when switching from FP32 to tensor-INT8

GPUs are typically faster when using tensor cores and number formats optimized for AI computing. Relative to non-tensor FP32, the aggregate performance trends show average speedups of around 6x for TF32, 11x for tensor-FP16, and 13x for tensor-INT8. Some chips achieve even larger speedups; for example, the H100 is 59x faster in INT8 than in FP32.

These improvements account for about half of the overall improvement in the performance trend since these formats were introduced. Training models in lower-precision formats, especially tensor-FP16, has become common as developers take advantage of this performance boost.


Published

October 23, 2024


Overview

We estimate a separate trend for each number format, then use these trends to estimate the average performance improvement relative to the non-tensor FP32 trend. This average is evaluated only over the date range covered by the shorter of the two data series, so the window typically begins around 2016. Note that individual chips can vary significantly in how much they benefit from different number formats.

Analysis

For each number format, we fit a separate log-linear regression of computational performance on release date. The results of these regressions are used to estimate the average performance improvement relative to FP32. Confidence intervals for this difference are derived from the standard errors of the regression averages.
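The procedure above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: the function names `fit_log_linear` and `average_improvement` are hypothetical, the comparison window is assumed to be the overlap of the two series' release dates, the average is assumed to be the mean gap in log space (a geometric mean of the ratio), and confidence intervals are omitted. The example data are synthetic, not drawn from the dataset.

```python
import math
from statistics import mean


def fit_log_linear(dates, perf):
    """Ordinary least squares fit of log10(perf) = intercept + slope * date."""
    ys = [math.log10(p) for p in perf]
    x_bar, y_bar = mean(dates), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in dates)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(dates, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def average_improvement(fp32, other, steps=100):
    """Average ratio of the `other` trend to the FP32 trend over the shared date range.

    Assumption: the window is the overlap of both series, and the ratio is
    averaged in log space (i.e. a geometric mean).
    """
    (d32, p32), (d_o, p_o) = fp32, other
    a32, b32 = fit_log_linear(d32, p32)
    a_o, b_o = fit_log_linear(d_o, p_o)
    start, end = max(min(d32), min(d_o)), min(max(d32), max(d_o))
    if start >= end:
        raise ValueError("the two series do not overlap in time")
    grid = [start + (end - start) * i / steps for i in range(steps + 1)]
    # Gap between the two fitted lines in log10 units at each date on the grid.
    gaps = [(a_o + b_o * t) - (a32 + b32 * t) for t in grid]
    return 10 ** mean(gaps)


if __name__ == "__main__":
    # Hypothetical, noise-free data: both trends grow 10x per decade, and the
    # tensor-format series sits exactly 10x above FP32. Not real accelerator data.
    fp32_dates = [2012, 2014, 2016, 2018, 2020, 2022]
    fp32_perf = [10 ** (12 + 0.1 * (d - 2012)) for d in fp32_dates]
    fp16_dates = [2016, 2018, 2020, 2022]
    fp16_perf = [10 ** (13 + 0.1 * (d - 2012)) for d in fp16_dates]
    ratio = average_improvement((fp32_dates, fp32_perf), (fp16_dates, fp16_perf))
    print(f"{ratio:.1f}x")  # prints "10.0x"
```

Because the log-gap between two fitted lines is linear in time, averaging it over a uniform grid gives the same result as evaluating the gap at the midpoint of the window.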

Assumptions

We assume that the aggregate performance trends across the ML accelerators in our dataset are representative of hardware used for ML. This assumption is imperfect, because a few leading hardware models are used much more often than the rest. We believe that including other ML hardware is nevertheless useful for estimating performance trends, but if flagship ML hardware follows different trends from ML hardware more broadly, these results will be less accurate.

For the average improvement to reflect the improvement at any given time, we must assume that the per-format trends are close to parallel, i.e. that they grow at similar rates. So far, this appears to have held true.
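To illustrate why the parallel-trends assumption matters, the sketch below measures how much the speedup drifts across an evaluation window when two fitted log-linear trends have different slopes. The helper names and the `(intercept, slope)` fits are hypothetical, with dates expressed in years since 2016; they are not estimates from the dataset.

```python
def log_gap(fit_fp32, fit_other, t):
    """log10 of (other trend / FP32 trend) at date t; fits are (intercept, slope) pairs."""
    (a32, b32), (a_o, b_o) = fit_fp32, fit_other
    return (a_o + b_o * t) - (a32 + b32 * t)


def drift_factor(fit_fp32, fit_other, start, end):
    """Factor by which the speedup changes from `start` to `end` (1.0 means parallel trends)."""
    return 10 ** (log_gap(fit_fp32, fit_other, end) - log_gap(fit_fp32, fit_other, start))


if __name__ == "__main__":
    fp32 = (12.0, 0.10)       # hypothetical fit, t in years since 2016
    parallel = (13.0, 0.10)   # same slope: the speedup is 10x at every date
    steeper = (13.0, 0.15)    # steeper slope: the speedup grows over time
    print(f"{drift_factor(fp32, parallel, 0, 8):.2f}")  # prints "1.00"
    print(f"{drift_factor(fp32, steeper, 0, 8):.2f}")   # prints "2.51"
```

With parallel fits a single average speedup describes every date in the window; with the steeper hypothetical fit, the speedup at the end of an 8-year window is about 2.5x its value at the start, so one average would understate the late gap and overstate the early one.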