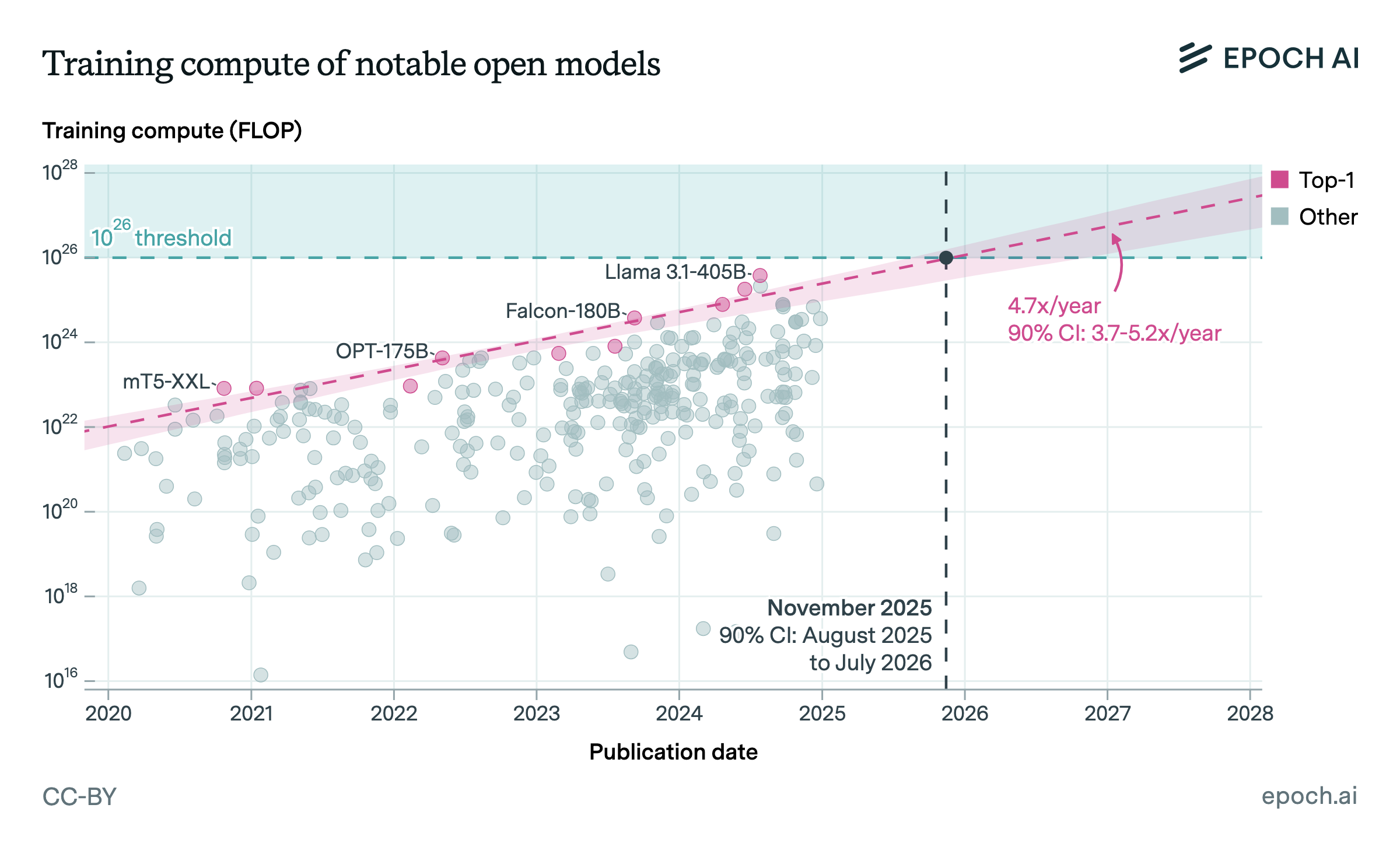

The training compute of notable AI models has been doubling roughly every six months

Since 2010, the training compute used to create AI models has been growing at a rate of 4.4x per year. Most of this growth comes from increased spending, although improvements in hardware have also played a role.

Published: June 19, 2024

Data

Data come from Epoch AI’s AI Models database, which contains information on over 2700 models trained since 1950. We begin by filtering to models meeting one of our notability criteria, and to models trained after 2010, in order to focus on recent trends in AI. We additionally filter out any models missing values for either publication date or training compute, leaving us with a final dataset of 428 observations.
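The filtering steps above can be sketched in a few lines. This is a minimal illustration, not the code used for the analysis: the field names (`notable`, `publication_date`, `training_compute_flop`) and the sample records are hypothetical, and "after 2010" is assumed here to mean a publication date on or after January 1, 2010.

```python
from datetime import date

def filter_models(records, cutoff=date(2010, 1, 1)):
    """Keep notable models published on/after `cutoff` that have both
    a publication date and a training compute estimate.

    Field names are hypothetical; they are not the database's actual schema.
    """
    kept = []
    for r in records:
        pub = r.get("publication_date")
        flop = r.get("training_compute_flop")
        if not r.get("notable"):
            continue  # fails the notability criteria
        if pub is None or flop is None:
            continue  # missing publication date or training compute
        if pub < cutoff:
            continue  # published before the cutoff (assumed Jan 1, 2010)
        kept.append(r)
    return kept

# Made-up records, for illustration only.
records = [
    {"name": "A", "notable": True, "publication_date": date(2012, 6, 1), "training_compute_flop": 1e17},
    {"name": "B", "notable": True, "publication_date": date(2005, 1, 1), "training_compute_flop": 1e14},
    {"name": "C", "notable": False, "publication_date": date(2020, 1, 1), "training_compute_flop": 1e22},
    {"name": "D", "notable": True, "publication_date": None, "training_compute_flop": 1e20},
    {"name": "E", "notable": True, "publication_date": date(2023, 3, 1), "training_compute_flop": None},
    {"name": "F", "notable": True, "publication_date": date(2023, 3, 1), "training_compute_flop": 1e25},
]

kept = filter_models(records)
print([r["name"] for r in kept])  # ['A', 'F']
```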

Analysis

We fit a simple log-linear regression of training compute on publication date to obtain a trend over time, and use the standard error of the slope coefficient to construct a 90% confidence interval. For a more extensive justification of the log-linear trend, see Appendix 1: Compute-Trend Model Selection in our full report.
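As a rough sketch of this kind of fit, the snippet below regresses log10 of training compute on publication year by ordinary least squares and converts the slope and its standard error into an annual growth factor with a 90% interval. The function name `fit_log_linear` and the synthetic data are illustrative assumptions. The interval uses a normal quantile (z = 1.645), which is also an assumption: the source does not say whether a normal or t quantile was used, though with several hundred observations the two are nearly identical.

```python
import math

def fit_log_linear(years, flops, z=1.645):
    """OLS fit of log10(compute) on year.

    Returns R^2, the annual growth factor (10 ** slope), and an
    approximate 90% interval based on the slope's standard error.
    z = 1.645 is the normal-approximation quantile (an assumption).
    """
    n = len(years)
    y = [math.log10(f) for f in flops]
    x_mean = sum(years) / n
    y_mean = sum(y) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (yi - y_mean) for x, yi in zip(years, y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    sse = sum((yi - (intercept + slope * x)) ** 2 for x, yi in zip(years, y))
    sst = sum((yi - y_mean) ** 2 for yi in y)
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    return {
        "r2": 1 - sse / sst,
        "growth": 10 ** slope,
        "ci": (10 ** (slope - z * se_slope), 10 ** (slope + z * se_slope)),
    }

# Synthetic data: compute grows 4x per year, with alternating 3x / (1/3)x noise.
years = [2010.0 + i for i in range(15)]
flops = [1e15 * 4 ** i * (3 if i % 2 == 0 else 1 / 3) for i in range(15)]

fit = fit_log_linear(years, flops)
print(f"R^2 = {fit['r2']:.2f}, growth = {fit['growth']:.2f}x / year")
print(f"90% CI: {fit['ci'][0]:.2f}x to {fit['ci'][1]:.2f}x")
```

Working in log10 space means the slope is in orders of magnitude per year, so raising 10 to the slope (and to the interval endpoints) gives multiplicative growth per year.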

Results are presented in the table below.

| R² | Annual growth | 90% confidence interval |
|---|---|---|
| 0.60 | 4.7x / year | (4.3x to 5.2x) |
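To relate an annual growth factor to a doubling time, the standard conversion is 12 · ln 2 / ln g months for a growth factor of g per year. The short sketch below applies it to the point estimate and interval endpoints from the table; the helper name `doubling_time_months` is ours, for illustration.

```python
import math

def doubling_time_months(annual_growth):
    """Months needed to double, given a multiplicative growth factor per year."""
    return 12 * math.log(2) / math.log(annual_growth)

# Point estimate and 90% interval endpoints from the table.
for g in (4.3, 4.7, 5.2):
    print(f"{g}x / year -> doubles every {doubling_time_months(g):.1f} months")
```

All three values fall between five and six months, consistent with the headline figure of a doubling roughly every six months.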

Assumptions and limitations

- We present results for all notable models. Subsets of the data, such as frontier models or language models, show somewhat different trends.

- Our training compute figures are estimated with some uncertainty. We assume our estimates are unbiased, and that measurement noise does not substantially invalidate our findings.