The pace of large-scale model releases is accelerating

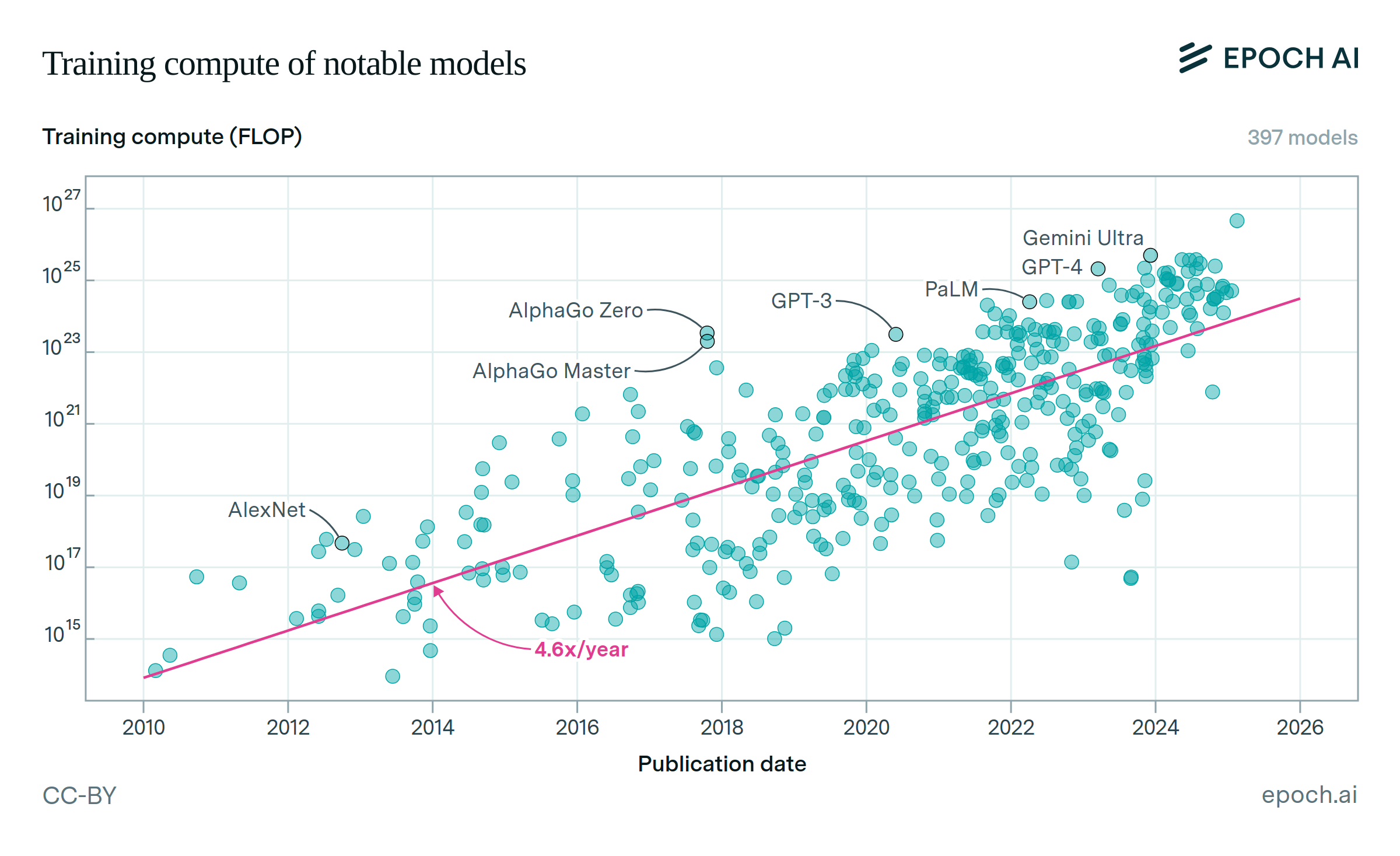

In 2017, no models exceeded 10²³ FLOP in training compute. By 2020, this had grown to three models; by 2022, there were 36; and by 2024, there were 205 models in our database known to exceed 10²³ FLOP, plus 126 more with unconfirmed training compute that likely exceed 10²³ FLOP. As AI investment increases and training hardware becomes more cost-effective, models at this scale come within reach of more and more developers.

Published

June 19, 2024