Frontier open models may surpass 1e26 FLOP of training compute before 2026

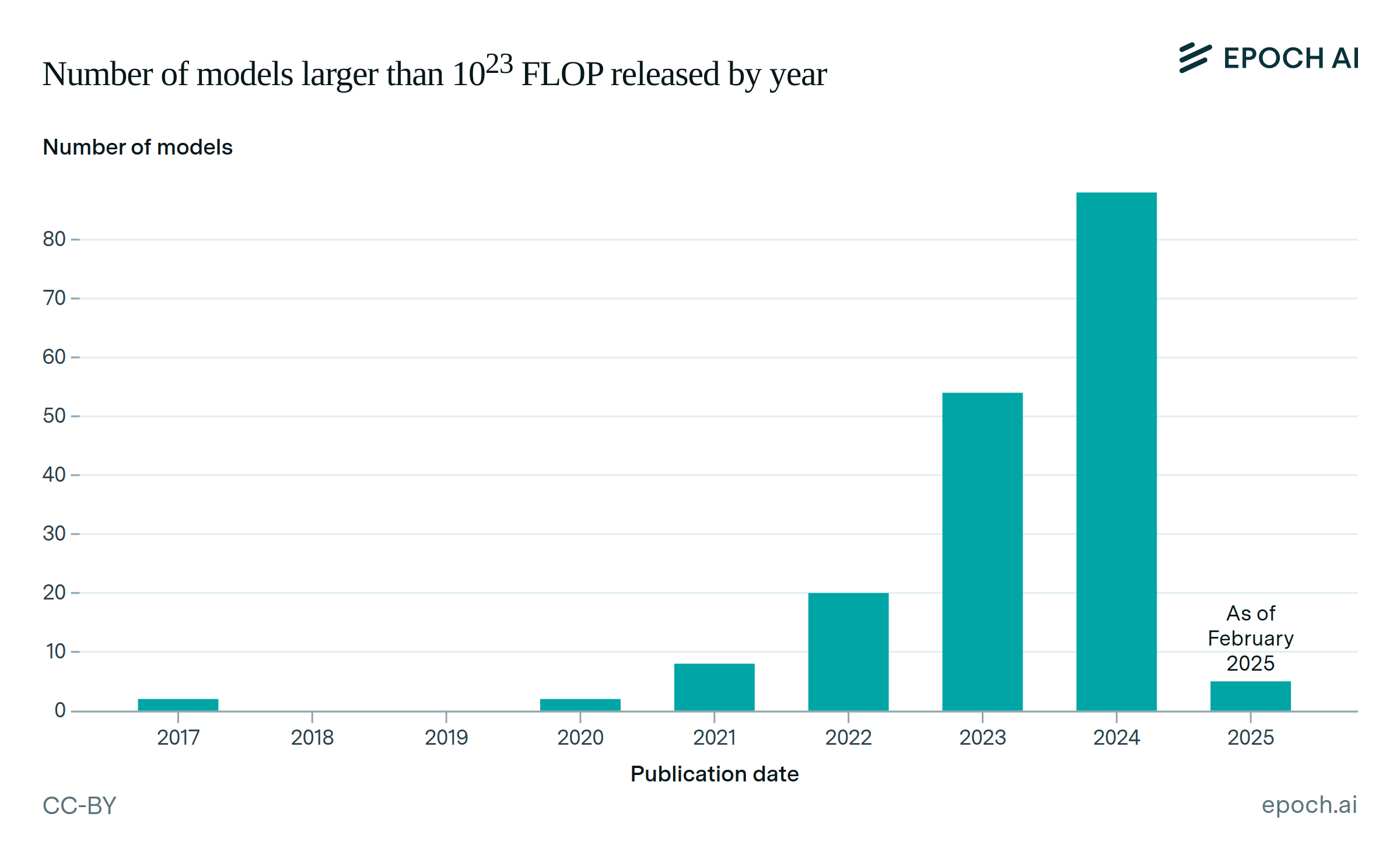

The Biden Administration’s diffusion framework places restrictions on closed-weight models whose training compute exceeds the greater of 1e26 FLOP and the training compute of the largest open-weight model. Historical trends suggest that the largest open-weight model will surpass 1e26 FLOP by November 2025, and grow at close to 5x per year thereafter.
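The threshold rule can be sketched in a few lines. This is a minimal illustration under our reading of the framework: a closed-weight model is restricted only if it exceeds both 1e26 FLOP and the largest open-weight model. The function names are hypothetical. The post-crossing projection simply applies the roughly 5x/year growth mentioned above.

```python
# Minimal sketch of the compute threshold described above (hypothetical names).
# Assumption: a closed-weight model is restricted only if its training compute
# exceeds BOTH the fixed 1e26 FLOP threshold and the largest open-weight model.
FIXED_THRESHOLD_FLOP = 1e26


def effective_threshold(largest_open_model_flop: float) -> float:
    """Compute level above which a closed-weight model would be restricted."""
    return max(FIXED_THRESHOLD_FLOP, largest_open_model_flop)


def is_restricted(closed_model_flop: float, largest_open_model_flop: float) -> bool:
    return closed_model_flop > effective_threshold(largest_open_model_flop)


def projected_threshold(years_after_crossing: float, growth_per_year: float = 5.0) -> float:
    """Effective threshold if the largest open model reaches 1e26 FLOP and then
    grows by `growth_per_year` each year (an assumption based on the trend above)."""
    return FIXED_THRESHOLD_FLOP * growth_per_year ** max(0.0, years_after_crossing)


print(is_restricted(3e26, largest_open_model_flop=5e25))  # True: above both
print(is_restricted(3e26, largest_open_model_flop=4e26))  # False: below the open frontier
print(f"{projected_threshold(2.0):.1e}")                  # 2.5e+27
```

Once the open frontier passes 1e26 FLOP, the binding threshold is no longer fixed. It tracks the largest open model.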

There is an additional reason to expect a large open model before 2026. In October 2024, Mark Zuckerberg indicated that Llama 4 models were already being trained on a cluster “larger than 100k H100s”. In the same statement, he strongly implied that these models will continue to be released with open weights. Models trained at this scale are very likely to surpass 1e26 FLOP, and they appear to be planned for release in 2025.
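As a rough check on this claim, the arithmetic below estimates how long a cluster of that size would need to train to reach 1e26 FLOP. The per-GPU throughput is NVIDIA's dense BF16 peak for the H100 SXM, about 989 TFLOP/s. Two further inputs are our own assumptions, not figures from Meta:

- 40% utilization.
- All 100,000 GPUs serving a single run.

```python
# Back-of-envelope: training time for a 100k-H100 cluster to reach a FLOP target.
# Assumptions (ours, not Meta's): dense BF16 peak of ~989e12 FLOP/s per H100 SXM,
# 40% model FLOP utilization, and all 100,000 GPUs used for one training run.
NUM_GPUS = 100_000
PEAK_FLOP_PER_SEC = 989e12   # H100 SXM, dense BF16 (no sparsity)
UTILIZATION = 0.40           # assumed model FLOP utilization
SECONDS_PER_DAY = 86_400


def effective_rate() -> float:
    """Sustained cluster throughput in FLOP/s under the assumptions above."""
    return NUM_GPUS * PEAK_FLOP_PER_SEC * UTILIZATION


def days_to_reach(target_flop: float) -> float:
    return target_flop / effective_rate() / SECONDS_PER_DAY


def flop_after(days: float) -> float:
    return effective_rate() * days * SECONDS_PER_DAY


print(f"{days_to_reach(1e26):.1f} days to reach 1e26 FLOP")  # 29.3 days to reach 1e26 FLOP
print(f"{flop_after(90):.2e} FLOP after 90 days")            # 3.08e+26 FLOP after 90 days
```

Under these assumptions, about a month of training crosses the threshold, and a 90-day run would reach roughly 3e26 FLOP.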

Published January 15, 2025

Overview

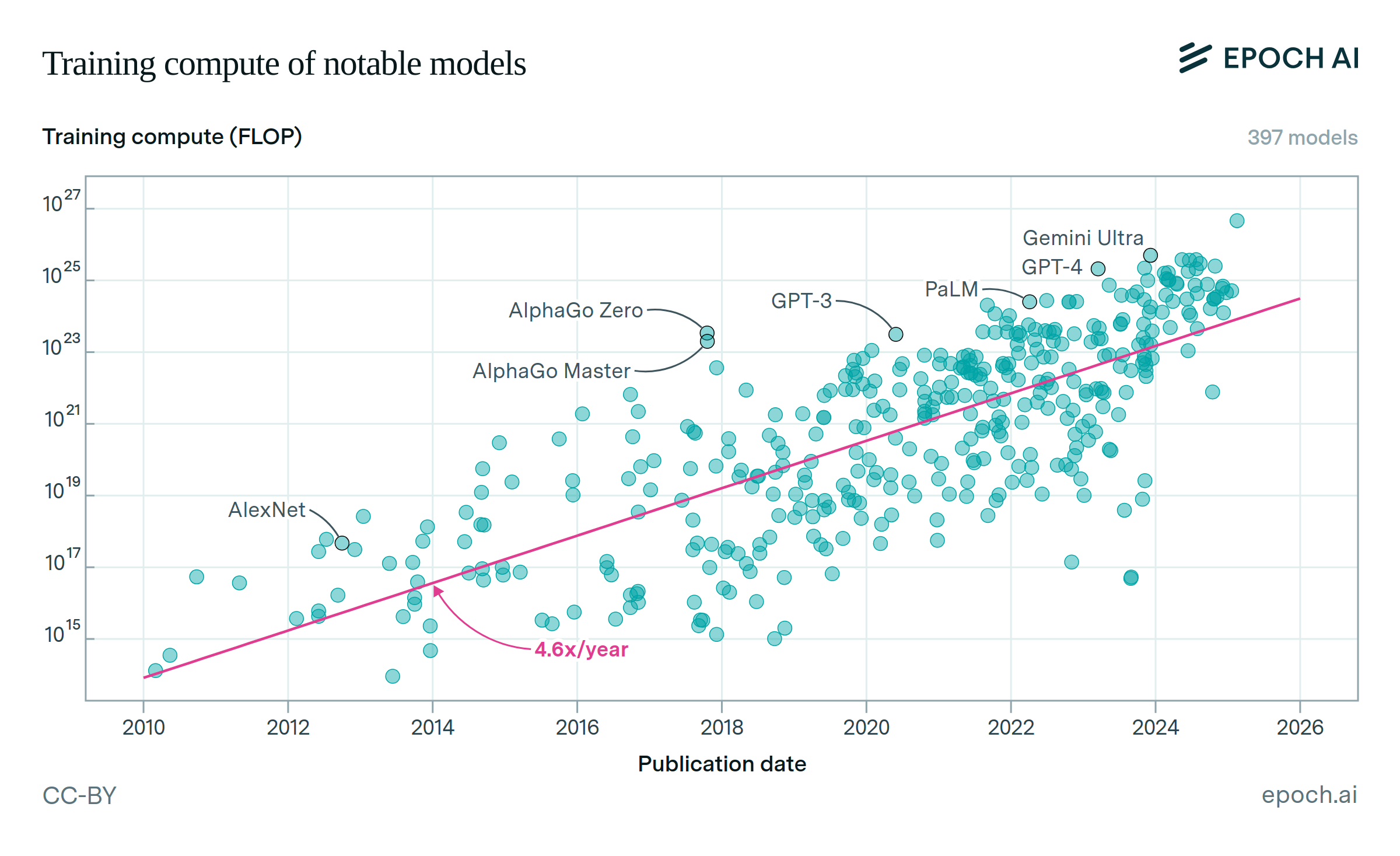

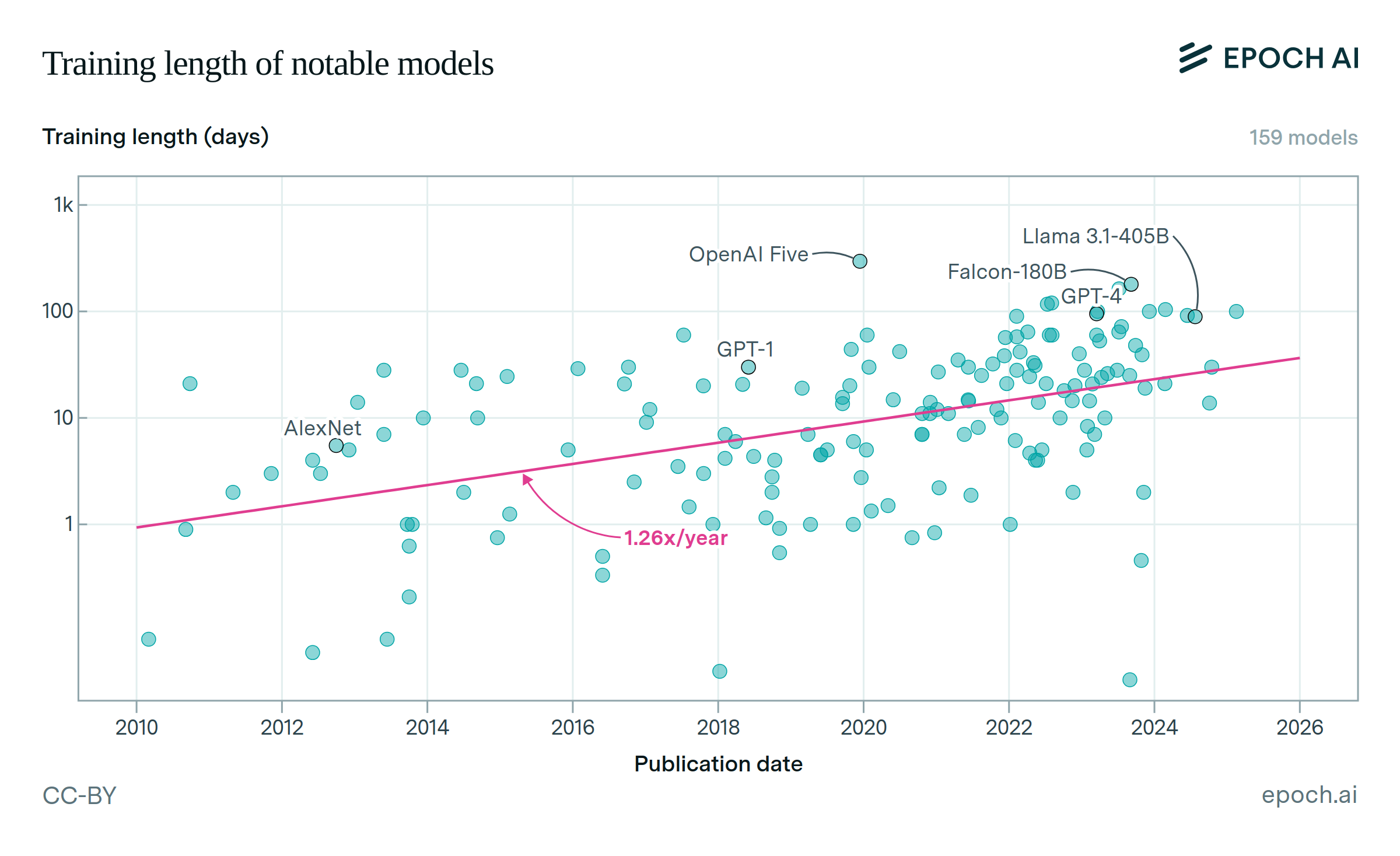

We estimate the release date of the first open-weight model trained with over 1e26 FLOP of training compute by extrapolating historical trends. Our methodology suggests that the training compute of the frontier open-weight model grows by around 4.7x per year (90% confidence interval: 3.6–6.1). We project that this trend will surpass 1e26 FLOP in November 2025 (90% confidence interval: August 2025 – November 2026). This analysis does not incorporate specific information about the timing of expected large open models, such as Llama 4.
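The conversion between an annual growth factor and a crossing date is simple arithmetic. Assume the trend is a straight line in log space. Let $C(t)$ be the training compute of the frontier open model at time $t$ (in years), and let $a$ and $b$ be hypothetical fit parameters:

$$
\begin{aligned}
\log_{10} C(t) &= a + b\,t, \qquad g = 10^{b} \ \text{(growth factor per year)} \\
t^{*} &= \frac{26 - a}{b} \quad \text{(so that } C(t^{*}) = 10^{26}\ \text{FLOP)} \\
g = 4.7 &\;\Rightarrow\; b = \log_{10} 4.7 \approx 0.67\ \text{OOM/year}, \qquad T_{2} = \frac{\ln 2}{\ln 4.7} \approx 0.45\ \text{years} \approx 5.4\ \text{months}
\end{aligned}
$$

At 4.7x per year, each additional order of magnitude of compute takes about $1/b \approx 1.5$ years.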

Data

Our data come from Epoch’s AI Models dataset, a superset of our Notable Models dataset that drops the notability requirement. The ‘Model accessibility’ column in this data assigns fine-grained classifications describing the degree to which model weights are accessible. We classify a model as open-weight if it is assigned any of the following:

- ‘Open weights (unrestricted)’
- ‘Open weights (restricted use)’
- ‘Open weights (non-commercial)’

Note that the accessibility of model weights is distinct from the accessibility of inference and training code. These features are also recorded in our data, but are omitted from this analysis.

Before filtering, there are 1998 models in our data. We apply the following steps:

1. We filter out models that are missing a publication date or training compute.
2. We drop models classified as closed-weight or unknown under model accessibility.
3. We identify the open-weight models that were the largest by training compute at the time of publication, which we dub “top-1 frontier models”.
4. We drop models published before January 1, 2020, to focus on recent trends.

After filtering, we are left with 309 open-weight models published on or after January 1, 2020, of which 10 are top-1 frontier models.
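The filtering steps above can be sketched as follows. This is a minimal illustration, not our analysis code. The column names other than ‘Model accessibility’ are assumptions, and the toy rows are made up. One detail follows the order of steps described: frontier status is judged against all earlier open models, before pre-2020 models are dropped.

```python
from datetime import date

# Hypothetical column names: only 'Model accessibility' is named in the text above.
DATE_COL = "Publication date"
FLOP_COL = "Training compute (FLOP)"
ACCESS_COL = "Model accessibility"
OPEN_WEIGHT_LABELS = {
    "Open weights (unrestricted)",
    "Open weights (restricted use)",
    "Open weights (non-commercial)",
}


def open_models_with_data(rows):
    """Keep open-weight rows that have both a publication date and a compute value."""
    return [
        r for r in rows
        if r.get(DATE_COL) is not None
        and r.get(FLOP_COL) is not None
        and r.get(ACCESS_COL) in OPEN_WEIGHT_LABELS
    ]


def mark_top1_frontier(rows):
    """Flag models larger than every earlier open model at publication time."""
    best_so_far = 0.0
    marked = []
    for r in sorted(rows, key=lambda row: row[DATE_COL]):
        marked.append({**r, "top1_frontier": r[FLOP_COL] > best_so_far})
        best_so_far = max(best_so_far, r[FLOP_COL])
    return marked


def prepare(rows, cutoff=date(2020, 1, 1)):
    """Judge frontier status on the full history, then drop pre-cutoff models."""
    marked = mark_top1_frontier(open_models_with_data(rows))
    return [r for r in marked if r[DATE_COL] >= cutoff]


# Toy rows (made up for illustration, not real models; "API access" is a stand-in label).
rows = [
    {DATE_COL: date(2019, 6, 1), FLOP_COL: 1e23, ACCESS_COL: "Open weights (unrestricted)"},
    {DATE_COL: date(2020, 3, 1), FLOP_COL: 5e22, ACCESS_COL: "Open weights (non-commercial)"},
    {DATE_COL: date(2021, 1, 1), FLOP_COL: 2e23, ACCESS_COL: "Open weights (restricted use)"},
    {DATE_COL: date(2022, 1, 1), FLOP_COL: 9e23, ACCESS_COL: "API access"},
    {DATE_COL: date(2022, 5, 1), FLOP_COL: None, ACCESS_COL: "Open weights (unrestricted)"},
]
for r in prepare(rows):
    print(r[DATE_COL], r[FLOP_COL], r["top1_frontier"])
# 2020-03-01 5e+22 False   (smaller than the 2019 model, which is later dropped)
# 2021-01-01 2e+23 True
```

Marking frontier models before applying the date cutoff matters. Otherwise the first post-2020 model would always count as a frontier model, even if a larger open model already existed.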

Analysis

We fit an exponential trend to training compute over time among top-1 frontier models. A direct fit yields a slope of 4.7x per year and crosses 1e26 FLOP in November 2025.

To establish confidence intervals, we run a bootstrap (n=500), re-identifying top-1 frontier models in each sample. The intervals cover two quantities: the slope of the fit, and the time at which we expect open models to surpass the 1e26 FLOP threshold.

- **Slope:** median 4.6x per year, 90% confidence interval (3.6, 6.1).
- **Crossing date:** the median time at which the top-1 frontier trend surpasses 1e26 FLOP is January 2026, with a 90% confidence interval of (Aug 2025 – Nov 2026).

Our main analysis relies on regressions fit to a small sample of top-1 open-weight models. To validate our results, we repeat the bootstrap analysis above using the top-10 open-weight models. To estimate when the first of these top-10 models will surpass the 1e26 FLOP threshold, we proceed in two steps. First, we find the 90th percentile residual in each sample. Then we offset the fitted line so that it passes through this residual. With this methodology, we obtain:

- **Slope:** median 4.3x per year (90% confidence interval: 3.7–5.2).
- **Arrival date:** median January 2026 (90% confidence interval: August 2025 – July 2026).

Finally, we test the robustness of our results to the filtering cutoff date. We test cutoffs of January 1 of each year from 2018 through 2022, and run an n=100 bootstrap for each. The results are as follows:

| Cutoff date (Jan 1) | Open models after filtering | Slope, x/year (90% CI) | Date crossing 1e26 FLOP (90% CI) |
|---|---|---|---|
| 2018 | 347 | 3.7 (3.1, 5.9) | June 2026 (Jul 2025 – Mar 2027) |
| 2019 | 334 | 4.0 (3.3, 4.7) | May 2026 (Dec 2025 – Mar 2027) |
| 2020 | 309 | 4.5 (3.4, 6.3) | January 2026 (Aug 2025 – Dec 2026) |
| 2021 | 288 | 5.6 (4.1, 8.2) | October 2025 (May 2025 – Jun 2026) |
| 2022 | 238 | 8.1 (5.5, 9.6) | May 2025 (Feb 2025 – Jan 2026) |

Notably, the slope monotonically increases as our cutoff date moves forward in time, suggesting that the pace of compute scaling for frontier open models may have accelerated in recent years.
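To make these slopes easier to compare, the sketch below converts the median slopes from the robustness table into compute doubling times. It also checks that the slopes increase strictly with the cutoff year. The slope values are copied from the table. The helper names are illustrative.

```python
import math

# Median slopes (growth factor per year) from the robustness table, by cutoff year.
MEDIAN_SLOPES = {2018: 3.7, 2019: 4.0, 2020: 4.5, 2021: 5.6, 2022: 8.1}


def doubling_time_months(growth_per_year: float) -> float:
    """Months for compute to double at a constant annual growth factor."""
    return 12 * math.log(2) / math.log(growth_per_year)


def strictly_increasing(values) -> bool:
    return all(a < b for a, b in zip(values, values[1:]))


cutoffs = sorted(MEDIAN_SLOPES)
print(strictly_increasing([MEDIAN_SLOPES[y] for y in cutoffs]))  # True
for year in cutoffs:
    print(year, f"{doubling_time_months(MEDIAN_SLOPES[year]):.1f} months")
```

The implied doubling time shortens from about 6.4 months at the 2018 cutoff to about 4.0 months at the 2022 cutoff. These figures inherit the wide intervals of the n=100 bootstraps.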

Code for our analysis is available here.