Over 30 AI models have been trained at the scale of GPT-4

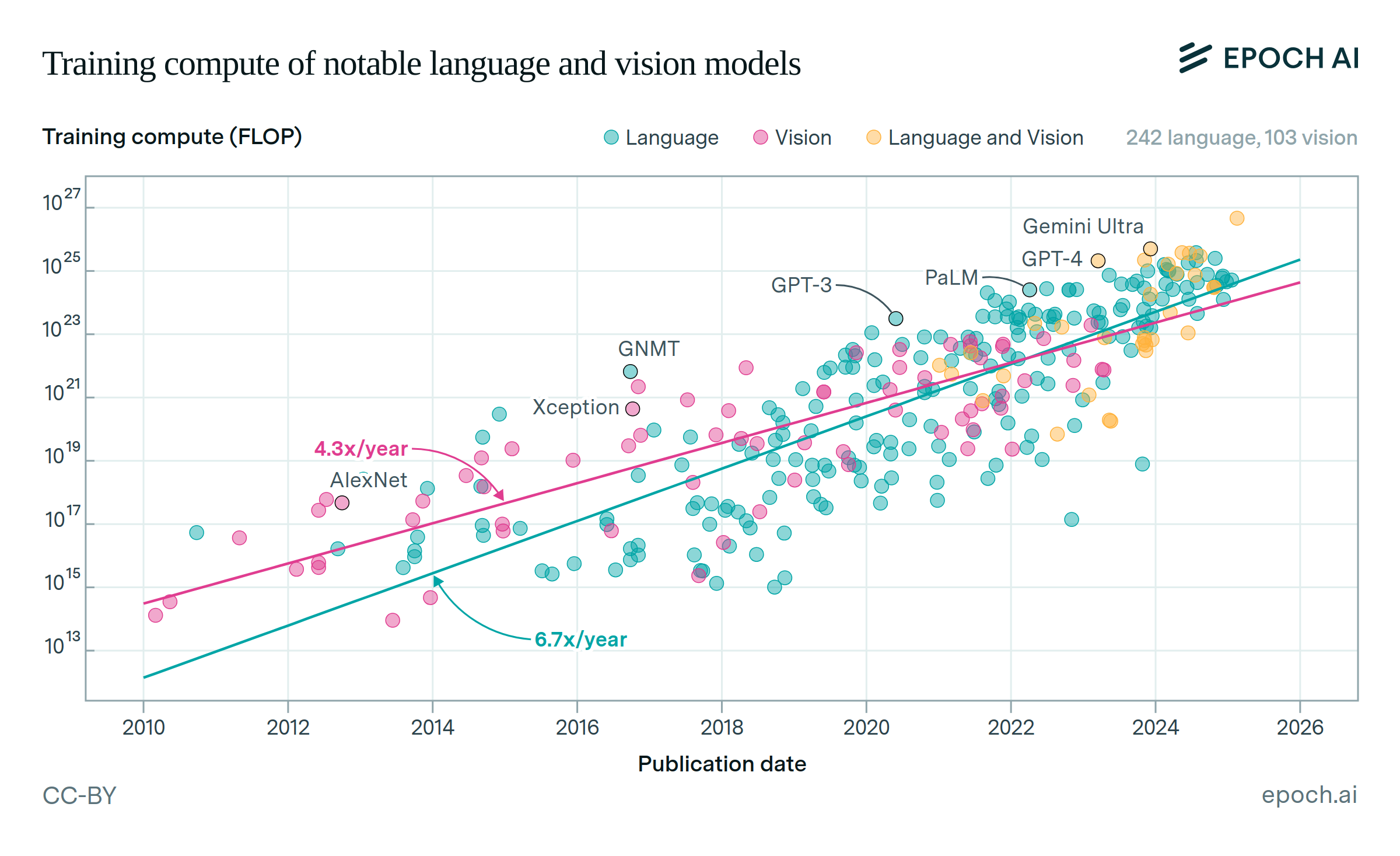

The largest AI models today are trained with over 10^25 floating-point operations (FLOP) of compute. The first model trained at this scale was GPT-4, released in March 2023. As of June 2025, we have identified over 30 publicly announced AI models from 12 different AI developers that we believe to be over the 10^25 FLOP training compute threshold.

Training a model of this scale costs tens of millions of dollars with current hardware. Despite the high cost, we expect a proliferation of such models—we saw an average of roughly two models over this threshold announced every month during 2024. Models trained at this scale will be subject to additional requirements under the EU AI Act, coming into force in August 2025.
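As a rough sanity check on the "tens of millions of dollars" figure, the sketch below converts 10^25 FLOP into GPU-hours and then into a rental cost. The chip throughput (roughly an H100's peak dense BF16 rate), the 40% utilization, and the $2 per GPU-hour price are illustrative assumptions, not figures from this article.

```python
# Back-of-the-envelope training cost for a 1e25 FLOP run.
# All three constants below are assumptions chosen for illustration.
TRAINING_FLOP = 1e25
PEAK_FLOP_PER_SECOND = 989e12   # assumed peak dense BF16 throughput per GPU
UTILIZATION = 0.40              # assumed fraction of peak actually achieved
PRICE_PER_GPU_HOUR = 2.00       # assumed rental price in US dollars

effective_flop_per_second = PEAK_FLOP_PER_SECOND * UTILIZATION
gpu_seconds = TRAINING_FLOP / effective_flop_per_second
gpu_hours = gpu_seconds / 3600
cost_usd = gpu_hours * PRICE_PER_GPU_HOUR

print(f"GPU-hours: {gpu_hours:.3g}")
print(f"Estimated cost: ${cost_usd / 1e6:.1f} million")
```

Under these assumptions the result lands in the low tens of millions of dollars. Different hardware, utilization, or pricing shifts the figure, and models well above the threshold cost proportionally more.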

Published: January 30, 2025

Last major update: June 6, 2025

Overview

To search for AI models exceeding 10^25 FLOP, we examined three sources:

- Recent releases from leading AI labs

- Top-scoring models on AI benchmarks and leaderboards

- The most-downloaded large models on the open model repository, Hugging Face

We performed an exhaustive review of recent model releases from AI developers known to have access to enough AI hardware for large-scale training: Google (including Google DeepMind), Meta, Microsoft, OpenAI, xAI, NVIDIA, and ByteDance. Other organizations that have developed models in the Epoch database of notable AI models were examined briefly for recent, large-scale model releases.

To identify large models developed by lesser-known labs, we examined common language benchmarks such as MMLU, MATH, and GPQA, as well as the Chatbot Arena and HELM leaderboards. We searched for any scores that matched or exceeded the performance of the lowest-performing models known to be trained with over 10^25 FLOP (i.e. the minimum score across the original GPT-4, Inflection-2, and Gemini Ultra). We also searched for large models from Chinese developers by examining the China-focused CompassRank and CompassArena leaderboards. There, we looked at all models scoring above models known to be trained with just under 10^25 FLOP, such as Yi Lightning and Llama-3 70B.

Models that met these criteria were added to our wider database of AI models. We then estimated their training compute, and whether it might exceed 10^25 FLOP. For several AI models, developers provided insufficient information for us to directly estimate training compute. In this case, we estimated training compute from model performance. For language models, we did this using imputation based on benchmark scores. For image and video models, where fewer standardized benchmark scores were available, we relied on other measures of output quality, such as user preference testing.

There are no standardized benchmarks for frontier image and video models, meaning we could not use benchmark scores to search for these models. For example, recent video models such as Veo 2 were evaluated in their release materials by human preference for their outputs over competitors' outputs. To address this, we individually examined the leading video and image generation services. We used user preferences and developers’ compute resources to assess whether such models might exceed the 10^25 FLOP threshold.

Data

We have identified 33 publicly disclosed models estimated to have been trained with at least 10^25 FLOP. The documentation for our database of Notable AI Models describes how we estimate training compute. Where possible, we use details such as parameter count and dataset size, or training hardware quantity and duration. For models without reported details, we instead estimate training compute based on benchmark performance. We discuss this further under Assumptions.
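The two direct approaches mentioned above can be sketched as follows. This is a minimal illustration rather than the exact procedure in the database documentation: it assumes the common approximation of 6 FLOP per parameter per training token for dense transformers, and a simple chips × time × throughput × utilization product for the hardware method. The token count for Llama 3.1-405B and all values in the hardware example are assumptions supplied for illustration.

```python
SECONDS_PER_DAY = 86_400

def flop_from_params_and_tokens(n_params: float, n_tokens: float) -> float:
    """Approximate dense-transformer training compute as 6 * N * D."""
    return 6.0 * n_params * n_tokens

def flop_from_hardware(n_chips: int, peak_flop_per_second: float,
                       utilization: float, days: float) -> float:
    """Approximate training compute from cluster size, duration and utilization."""
    return n_chips * peak_flop_per_second * utilization * days * SECONDS_PER_DAY

# Parameter-count method: 405e9 parameters and an assumed ~15.6e12 training tokens.
llama_flop = flop_from_params_and_tokens(405e9, 15.6e12)
print(f"Llama 3.1-405B (6ND approximation): {llama_flop:.2e} FLOP")

# Hardware method: a purely hypothetical cluster, not any specific model.
cluster_flop = flop_from_hardware(
    n_chips=10_000,
    peak_flop_per_second=989e12,  # assumed per-chip peak throughput
    utilization=0.40,             # assumed achieved fraction of peak
    days=90,
)
print(f"Hypothetical cluster run: {cluster_flop:.2e} FLOP")
```

With these inputs the parameter-count method gives a value close to the 3.8e25 FLOP listed for Llama 3.1-405B in the table below.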

We do not separately count different releases built on top of models trained with 10^25 FLOP. For example, we do not count different Llama-3.1 405B variants and finetunes as separate 10^25 FLOP models. Implementation of the EU AI Act is ongoing, but current discussions suggest regulation of 10^25 FLOP models will not separately cover finetunes, so long as the resulting model does not “significantly modify” the risk level of the base system (see Section 1.1 of Novelli et al., 2024).

Models included in this dataset:

| Model | Training FLOP estimate | Confidence | Inclusion justification |
|---|---|---|---|
| Aramco Metabrain AI | 1.1e25 | Low-precision | Compute estimated using parameter count and dataset size. More information is available for each model in their respective database entries. |
| Claude 3 Opus | 1.6e25 | Low-precision | Compute imputed from benchmark scores. Known to be similar in training compute to GPT-4. |
| Claude 3.5 Sonnet | 3.6e25 | Low-precision | Compute imputed from benchmark scores. According to Dario Amodei, this model cost “a few $10M’s to train” and “was not trained in any way that involved a larger or more expensive model”. |
| Claude 3.7 Sonnet | 3.4e25 | Low-precision | Comments from Anthropic suggest the model cost “a few tens of millions of dollars” to train. Based on this, we estimate that training used between 1.1e25 and 1.0e26 FLOP. |
| Claude Opus 4 | 1.5e26 | Speculative | Sonnet 3.5 was likely trained on around 1-4e25 FLOP, similar to GPT-4. Sonnet 4 may be scaled up from Sonnet 3.5. Opus 4 was probably trained on between 5e25 and 2e26 FLOP. As a larger model, it was almost certainly trained on a larger scale than Sonnet 3.5. Its compute scale could be comparable to that of “next-generation” models such as GPT-4.5 and Grok-3. |
| Claude Sonnet 4 | 5.0e25 | Speculative | |
| Doubao-pro | 2.5e25 | High-precision | Compute estimated using parameter count and dataset size. More information is available for each model in their respective database entries. |
| Gemini 1.0 Ultra | 5.0e25 | Low-precision | Aggregation of benchmark imputation and estimates based on known hardware details. Details are available in this notebook. |
| GLM-4 (0116) | 1.2e25 | High-precision | Compute estimated using parameter count and dataset size. More information is available for each model in their respective database entries. |
| GPT-4 | 2.1e25 | Low-precision | Compute estimated using training hardware and training duration. Details are available in this notebook. |
| Inflection-2 | 1.0e25 | High-precision | Directly reported in the announcement blog post. |
| Inflection-2.5 | 1.0e25 | Low-precision | Authors report using “40% of the compute of GPT-4”. Our estimate of GPT-4 training compute would put this at 8.4e24 FLOP, but we assume they’ve used at least as much as the 1e25 FLOP reported for Inflection-2, since 2.5 outperforms that model across a range of benchmarks. |
| Llama 3.1-405B | 3.8e25 | High-precision | Compute estimated using parameter count and dataset size. More information is available for each model in their respective database entries. |
| Llama 4 Behemoth | 5.2e25 | High-precision | Compute estimated using parameter count and dataset size. Model is still in training; this estimate is preliminary. |
| Mistral Large | 1.1e25 | Low-precision | Compute estimated using known cost of training, combined with pricing rate from GPU provider Scaleway. |
| Mistral Large 2 | 2.1e25 | Low-precision | Compute estimated using a combination of Mistral Large details, training cluster details, and imputation via benchmark. Details are available in this document. |
| Nemotron-4 340B | 1.8e25 | High-precision | Compute estimated using parameter count and dataset size. More information is available for each model in their respective database entries. |
| Pangu Ultra | 1.1e25 | High-precision | Compute estimated using parameter count and dataset size. The developers also report a comparison of their compute usage to other models. Details are included in the database entry. |
| Gemini 1.5 Pro | 1.6e25 | Low-precision | Compute imputed from benchmark scores. See “Assumptions” for more details. |
| GLM-4-Plus | 3.6e25 | Low-precision | |
| GPT-4 Turbo | 2.2e25 | Low-precision | |
| GPT-4o | 3.8e25 | Low-precision | |
| GPT-4.5 | 6.4e25 | Low-precision | |
| GPT-4.1 | | Speculative | Training compute estimated to be over 10^25 FLOP due to superior performance compared to GPT-4.5 and more hardware available to OpenAI at the time of its release. |
| Grok-2 | 3.0e25 | High-precision | Estimates are based on training time, available hardware, and published materials and statements from xAI and Elon Musk. Details are available in this document. |
| Grok-3 | 4.6e26 | High-precision | |
| o1 | | Speculative | We cannot estimate the training compute of these reasoning models from their benchmark performance due to their augmented compute usage for inference, but they are likely built upon a frontier model with capabilities at least equivalent to GPT-4o. Additionally, the compute resources available to OpenAI suggest training on this scale. The corresponding mini models (o1-mini, o3-mini, o4-mini) are likely below 10^25 FLOP, given their small size. |
| o3 | | Speculative | |
| Gemini 2.0 Pro | | Speculative | We did not impute training compute from Gemini 2.0 Pro’s benchmark scores since this model was released later than others whose compute was imputed, meaning that true training compute would likely be lower than it appears based on imputation due to algorithmic progress. However, due to its benchmark performance equal to or greater than Gemini 1.5 Pro, and the compute resources available to Google DeepMind, training was likely on this scale. |
| Gemini 2.5 Pro | | Speculative | We cannot estimate the training compute from Gemini 2.5 Pro's benchmark performance due to its augmented compute usage for inference, but Gemini 2.5 Pro is likely built upon a frontier model with capabilities at least equivalent to Gemini 1.5 Pro. Additionally, the compute resources available to Google DeepMind suggest training on this scale. |
| Sora | | Speculative | Training compute estimated to be over 10^25 FLOP due to superior performance compared to Meta Movie Gen and greater compute resources available to OpenAI and Google DeepMind. |
| Veo 2 | | Speculative | |
| Veo 3 | | Speculative | |

Additionally, we identified 22 models that we believe to be under 10^25 FLOP, but cannot confidently rule out being over the threshold. These include models where our estimates’ confidence intervals overlap the 10^25 FLOP threshold, and models where we had insufficient details to make a compute estimate, but suspect the model might exceed 10^25 FLOP based on its leaderboard ranking and developer’s resources.

Assumptions

Estimating compute from benchmark performance is more speculative than direct calculation from training details. However, benchmark performance and training compute are correlated, so performance can provide valuable evidence when developers don’t provide enough information to apply other approaches. For the candidate language models we considered, we followed a straightforward methodology:

- Collect reported performance on benchmarks such as MMLU, GPQA, and the SEAL Leaderboards. We collected this for several AI models with known compute, in addition to the ones we were trying to estimate.

- Fit a per-benchmark sigmoid curve for the relationship between training compute and benchmark performance. Algorithmic efficiency improves over time, so we limited the “ground truth” datapoints to models that were at least as compute-efficient as the Llama-3 family, i.e. with higher benchmark performance versus compute than the fit for Llama-3 models only. The models for which we were estimating compute (e.g. Claude 3.5 Sonnet, Gemini 1.5 Pro, GPT-4o) were likely to be more compute-efficient than the Llama-3 series.

- Estimate training compute given benchmark performance for the models without known training compute. For each model, our estimate is the median compute estimate across all available benchmarks.

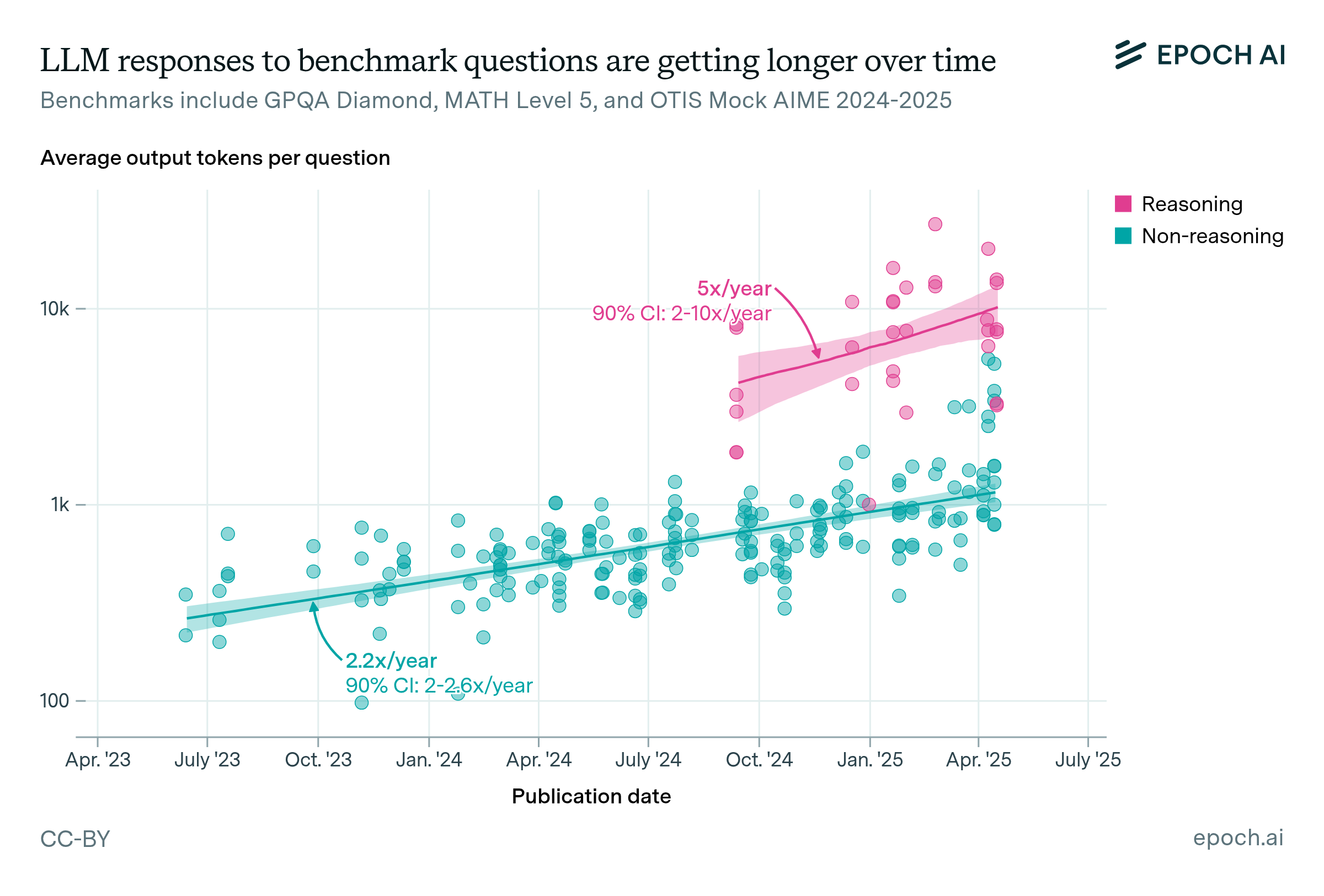

- We did not use this approach to estimate compute for “reasoning” models such as o1, as they may show a different relationship between training compute and benchmark performance.
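The fit, invert, and take-the-median steps above can be sketched as follows. This is a simplified illustration, not the code behind the estimates in this article: it assumes scores are fractions strictly between 0 and 1, fits each sigmoid by ordinary least squares in logit space (which fixes the curve's asymptotes at 0 and 1), and omits the filtering of reference models by compute efficiency. The benchmark names and all data points are hypothetical.

```python
import math
from statistics import median

def logit(p: float) -> float:
    """Inverse of the logistic function; p must lie strictly between 0 and 1."""
    return math.log(p / (1.0 - p))

def fit_sigmoid(log10_compute, scores):
    """Fit score = sigmoid(a + b * log10(compute)) by least squares on logit(score)."""
    ys = [logit(s) for s in scores]
    n = len(ys)
    mean_x = sum(log10_compute) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in log10_compute)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log10_compute, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def invert_sigmoid(intercept: float, slope: float, score: float) -> float:
    """Return the log10(compute) at which the fitted curve reaches `score`."""
    return (logit(score) - intercept) / slope

def impute_log10_compute(reference, target_scores) -> float:
    """Median of the per-benchmark compute estimates for one target model."""
    estimates = []
    for benchmark, score in target_scores.items():
        xs = [x for x, _ in reference[benchmark]]
        ys = [y for _, y in reference[benchmark]]
        intercept, slope = fit_sigmoid(xs, ys)
        estimates.append(invert_sigmoid(intercept, slope, score))
    return median(estimates)

# Hypothetical reference models: (log10 training FLOP, benchmark score).
reference = {
    "benchmark_a": [(23.9, 0.62), (24.8, 0.79), (25.6, 0.88)],
    "benchmark_b": [(23.9, 0.30), (24.8, 0.42), (25.6, 0.51)],
    "benchmark_c": [(23.9, 0.35), (24.8, 0.52), (25.6, 0.74)],
}
# Hypothetical scores for a model whose training compute is unknown.
target_scores = {"benchmark_a": 0.86, "benchmark_b": 0.50, "benchmark_c": 0.70}

estimate = impute_log10_compute(reference, target_scores)
print(f"Imputed training compute: about 10^{estimate:.2f} FLOP")
```

Taking the median across benchmarks limits the influence of any single benchmark on which the model scores unusually high or low relative to its compute.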

To assess this method’s accuracy, we performed leave-one-out cross-validation across several different AI models with known training compute. Benchmark-derived estimates of compute are, on average, within a factor of 2.2x of ground truth. Hence they should be treated as fairly speculative, but nevertheless provide useful information for estimating training compute.

| Held out | True compute (FLOP) | Estimated compute (FLOP) |
|---|---|---|
| DeepSeek-v2 | 1.0e24 | 4.6e24 |
| Qwen-2 | 3.0e24 | 3.4e24 |
| Qwen-2.5 72B | 7.9e24 | 1.5e25 |
| Llama-3 405B | 3.8e25 | 2.6e25 |
| Llama-3 family | 7.2e23 | 3.5e23 |
| Llama-3 family | 6.3e24 | 2.0e25 |
| Llama-3 family | 3.8e25 | 4.6e25 |
| LOO over all | Over all datapoints, the mean absolute error is a factor of 2.2x (90% CI: 1.1x-5.0x). | |
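To make the "factor of" error concrete, the sketch below computes a fold error for each held-out row in the table above, defined here as the larger of estimate/true and true/estimate. The article does not specify how the errors were averaged or whether the table lists every datapoint, so both an arithmetic and a geometric mean are shown as possible readings; the row labels with model sizes are added only for readability.

```python
import math

# (label, true compute, estimated compute) copied from the table above.
held_out = [
    ("DeepSeek-v2", 1.0e24, 4.6e24),
    ("Qwen-2", 3.0e24, 3.4e24),
    ("Qwen-2.5 72B", 7.9e24, 1.5e25),
    ("Llama-3 405B", 3.8e25, 2.6e25),
    ("Llama-3 family, row 1", 7.2e23, 3.5e23),
    ("Llama-3 family, row 2", 6.3e24, 2.0e25),
    ("Llama-3 family, row 3", 3.8e25, 4.6e25),
]

def fold_error(true_flop: float, estimated_flop: float) -> float:
    """Multiplicative error, always >= 1 regardless of direction."""
    return max(estimated_flop / true_flop, true_flop / estimated_flop)

fold_errors = [fold_error(true, est) for _, true, est in held_out]

arithmetic_mean = sum(fold_errors) / len(fold_errors)
geometric_mean = math.exp(sum(math.log(f) for f in fold_errors) / len(fold_errors))

for (label, _, _), error in zip(held_out, fold_errors):
    print(f"{label:24s} off by {error:.2f}x")
print(f"Arithmetic mean fold error: {arithmetic_mean:.2f}x")
print(f"Geometric mean fold error:  {geometric_mean:.2f}x")
```

For the rows listed here, the arithmetic mean comes out at roughly 2.2x, in line with the figure reported in the table, while the geometric mean is slightly lower.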

Image and video generation models at this scale could not be identified using benchmark scores, as developers often do not report benchmark scores, or different models report different benchmarks. Instead, we examined the qualitative performance of leading AI image and video products since 2023 to find models likely to be above 10^25 FLOP.

To guess the compute scale of image generation models, we compared against Stable Diffusion 3, whose reported training compute is less than 10^23 FLOP. Image generation models released within a year, with worse performance, are likely to have used less training compute, and are therefore not included in this list. As of mid-2025, image generation models such as Recraft V3 are near the top of leaderboards, despite developers’ resources suggesting they are unlikely to exceed 10^25 FLOP. Based on this evidence, we do not identify any image models as over 10^25 FLOP.

To guess the compute scale of video generation models, we compared against Meta Movie Gen, whose reported training compute is slightly under 10^25 FLOP. Video generation models released within a year, with worse performance, are likely to have used less training compute and are therefore not included in this list.

OpenAI’s Sora and Google DeepMind’s Veo 2 have shown superior qualitative performance to Meta Movie Gen, including in user preference testing and leaderboards. Along with the compute resources available to OpenAI and Google DeepMind, their performance suggests the models are likely to have been trained with more than 10^25 FLOP. Based on available GPU resources and performance, we believe that other leading video generation models were not trained with more than 10^25 FLOP. However, models such as Kling and Seedance perform well, so we include them in the table of models that we cannot confidently rule out.

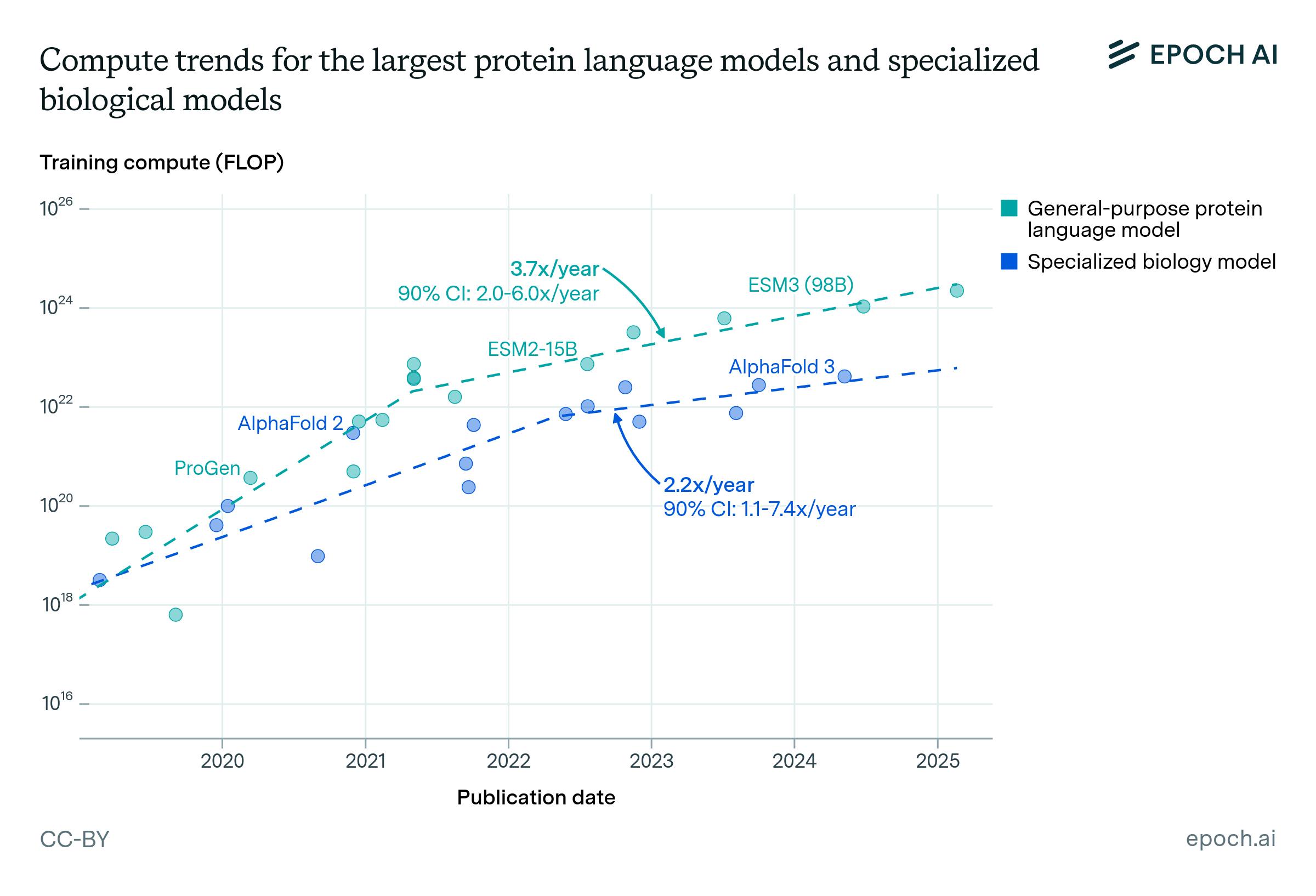

We searched for models over 10^25 FLOP in other domains, including audio generation, biology, and game-playing AI. However, we didn’t identify any publicly disclosed models at this scale of training compute.