Training compute has scaled up faster for language than for vision

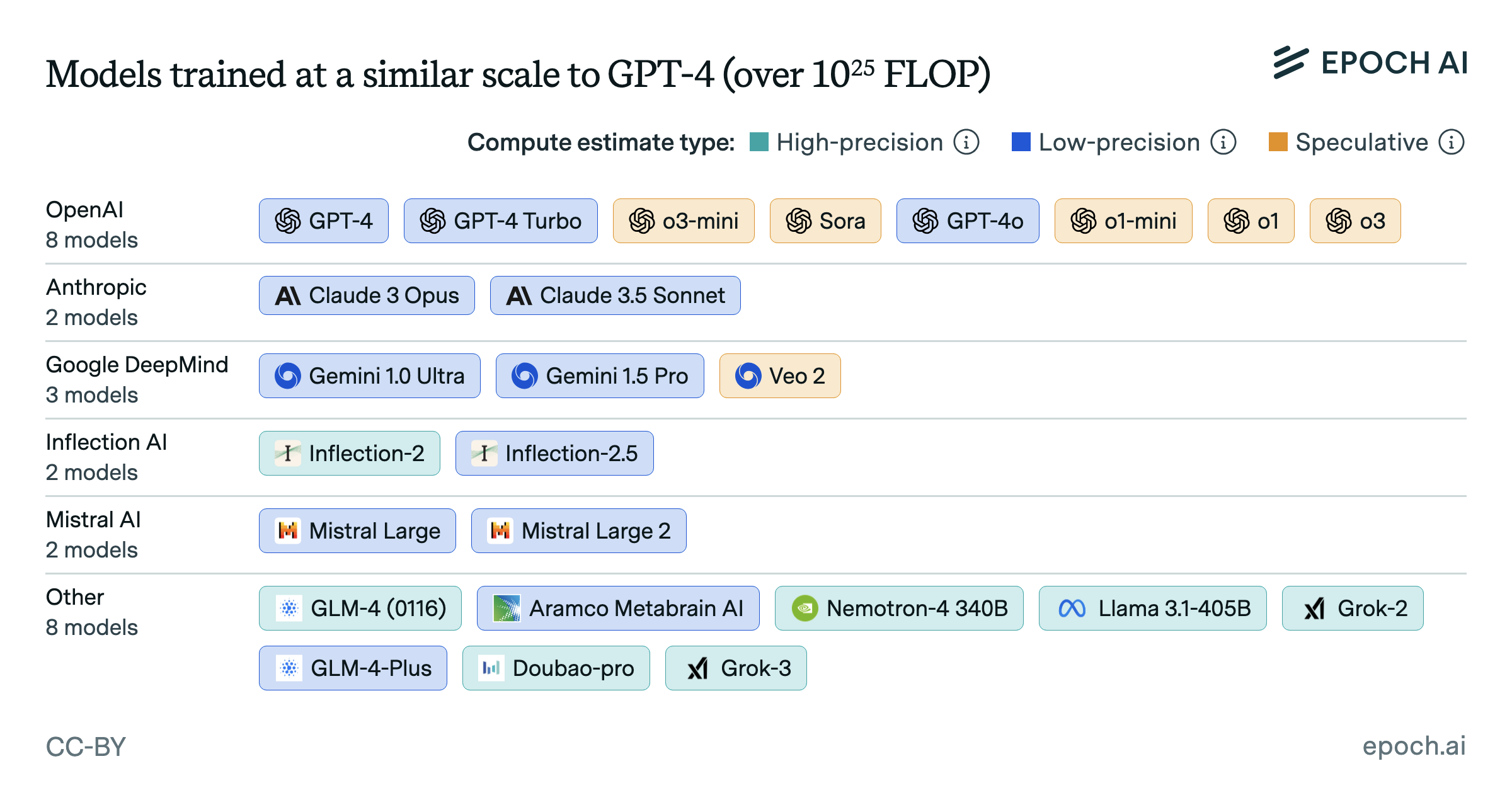

Before 2020, the largest vision and language models used similar amounts of training compute. After that, language models scaled up rapidly, driven by the success of transformer-based architectures, and standalone vision models never caught up. Instead, the largest models have recently become multimodal: systems such as GPT-4 and Gemini integrate vision and other modalities into a single large model.

Published June 19, 2024