Frontier Data Centers

Our open database of large AI data centers, using satellite and permit data to track compute, power use, and construction timelines.

Last updated February 12, 2026

Data insights

Selected insights from this dataset.

Build times for gigawatt-scale data centers can be 2 years or less

Gigawatt-scale AI data centers are massive undertakings, requiring extensive permitting, construction, and power infrastructure. Nevertheless, many hyperscalers have concrete plans to build data centers at this scale in 2 years or less.

Among the facilities we track that broke ground in the past 3 years, the time from starting construction to achieving 1 GW of total facility power ranges from 1 to 3.6 years, with xAI projecting just 12 months to build Colossus 2. We expect the first GW-scale data centers to come online in early 2026.

The largest AI data center campuses will soon be a fifth the size of Manhattan

AI data centers have a rapidly growing compute and energy demand, and their physical footprint is expanding to match. While the buildings that house IT equipment form the core of these facilities, data center campuses also need land for power and cooling infrastructure, parking areas, and access roads.

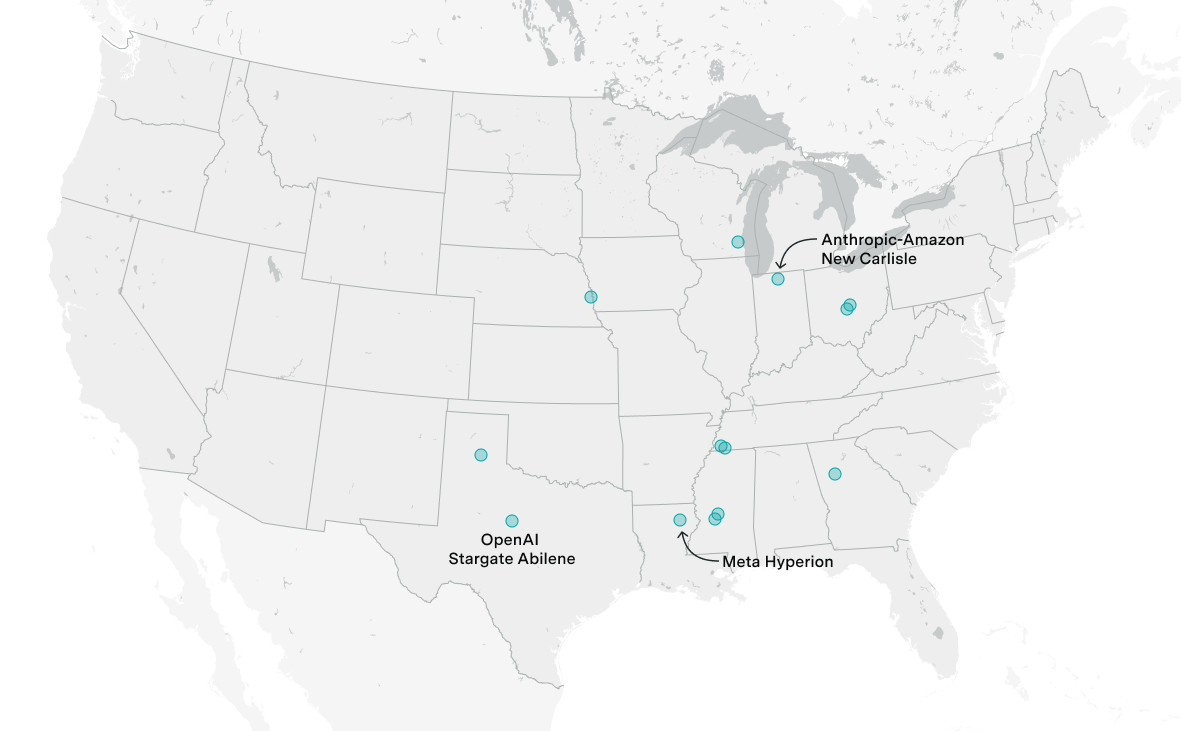

The scale of these developments is striking. The campus for xAI’s Colossus 1, which trained Grok 4, spans several Manhattan blocks. OpenAI’s Stargate campus in Abilene will dwarf this, reaching the size of Central Park once eight buildings are completed in mid-2026. Larger still is Meta’s Holly Ridge campus, reaching almost four times the size of Central Park by 2030.

Microsoft’s Fairwater datacenter will use more power than Los Angeles

Microsoft’s Fairwater multi-building datacenter in Mount Pleasant, Wisconsin, is projected to consume 3.3 GW of power by late 2027, when its fourth building becomes operational. In comparison, the city of Los Angeles used around 2.4 GW of power on average in 2023.

Microsoft’s planned datacenter will require the equivalent output of 3-4 large nuclear reactors, with each building drawing around a gigawatt of power. Note that our estimates of Fairwater Wisconsin’s power usage are somewhat more speculative than our other estimates – see details here.

FAQ

What is an AI data center?

We define an AI data center as a collection of one or more buildings which are located near each other and which run hardware specialized for AI. This hardware includes GPUs or custom chips like Google’s TPUs. These data centers may be used to experiment on, train, and deploy AI models.

We don’t use a hard limit for how close together the data center buildings have to be, but a rule of thumb is less than 10 km apart. The buildings either need to be networked together, or have a shared owner or user.

We do not distinguish between campuses of multiple buildings and individual buildings in what we classify as a data center.

How did you choose what data centers to cover?

We aimed for initial coverage of two to three of the largest data centers for each frontier AI lab in the United States, namely Anthropic, Google DeepMind, OpenAI, Meta and xAI.

Why do you mostly track data centers in the United States?

Based on our prior work, we believe most, but not all, of the largest data centers are in the United States. Additionally, focusing on one country allowed us to become familiar with its permitting standards, giving us an additional source for validating our methodology. We will continue expanding coverage to other countries.

What information do you collect about AI data centers?

We collect general information about each data center, such as the location, owner and users. We also collect satellite or aerial images, and track key metrics over time as each data center evolves. Every data center we track has a timeline of total power capacity, compute capacity, and capital cost. We will increasingly cover other metrics such as building area and water use. See our methodology for details on how we obtain this information.

How comprehensive is the data?

The database is currently a selection of the largest existing or planned data centers globally, most of which are in the US. Our database covers an estimated 15% of AI compute that has been delivered by chip manufacturers globally as of November 2025. We are expanding this coverage in the US and other countries.

The estimated 15% coverage is based on the following reasoning. Nvidia recently disclosed they’ve shipped 4M Hopper GPUs and 6M Blackwell GPUs as of October, excluding China. The “6M Blackwell GPUs” means 3M Blackwell GPUs as traditionally understood. This is the compute equivalent of ~7.5M H100e, for a total of 11.5M Nvidia H100e, plus a minor older stock of A100s, etc. We provisionally estimate, with higher uncertainty, that TPU, AMD, and Amazon Trainium chips are around 30-40% of the Nvidia total, increasing the total stock to 15-17M H100e. Since Nvidia certainly makes up the large majority of the total AI compute stock, uncertainty here does not dramatically affect the total. This means that the 2.5M H100e of operational capacity that we track in this data hub make up 15-17% of the shipped total. We will update these estimates in the coming months.
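The arithmetic behind this estimate can be reproduced in a few lines. The sketch below uses only the figures quoted above. The variable names are ours, the one-H100e-per-Hopper equivalence is an assumption implied by the 11.5M total, and the small stock of older chips such as A100s is left out, so the totals come out slightly below the rounded 15-17M range.

```python
# Figures quoted in the text, in millions of units.
hopper_h100e = 4.0      # 4M Hopper GPUs, assumed here to count as ~1 H100e each
blackwell_gpus = 3.0    # the "6M Blackwell GPUs" read as 3M GPUs
blackwell_h100e = 7.5   # stated H100e equivalent of those Blackwell GPUs
tracked_h100e = 2.5     # operational capacity tracked in the hub

# H100e per Blackwell GPU implied by the two figures above (derived, not quoted).
h100e_per_blackwell = blackwell_h100e / blackwell_gpus

nvidia_total = hopper_h100e + blackwell_h100e


def coverage(non_nvidia_share: float) -> tuple[float, float]:
    """Return (total stock in M H100e, tracked share of that stock).

    non_nvidia_share is the TPU/AMD/Trainium stock as a fraction
    of the Nvidia total (0.30 to 0.40 in the text).
    """
    total = nvidia_total * (1.0 + non_nvidia_share)
    return total, tracked_h100e / total


# A smaller total stock means the tracked capacity is a larger share of it.
total_low, share_high = coverage(0.30)
total_high, share_low = coverage(0.40)

print(f"Nvidia total: {nvidia_total:.1f}M H100e")
print(f"Total stock: {total_low:.2f}M to {total_high:.2f}M H100e")
print(f"Coverage: {share_low:.1%} to {share_high:.1%}")
```

With these inputs the tracked share lands between roughly 15% and 17%, consistent with the range stated above.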

How is this database different from the GPU Clusters database?

The GPU Clusters database has broad historical coverage of computing clusters used for AI and other applications. These clusters may make up just part of the total compute present in one building or campus. In contrast, this database looks at AI data centers at a project level, with a focus on the largest current and upcoming data centers. It also relies more heavily on primary sources, including permitting documents and satellite imagery, to get greater detail and accuracy on individual data centers. Both databases will be maintained going forward.

Do you adjust the power/compute/cost estimates per data center?

Power is estimated for each data center based on the available evidence, which may include permitting documents, cooling equipment, and statements from companies. Compute estimates are based on the specific type and quantity of chips if reported. Otherwise, compute is calculated from power, based on the energy efficiency of chips that we believe are most likely to be used. Cost estimates are entirely calculated from power, based on a general cost-per-watt model. For details, see the methodology.
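The fallback order described above can be sketched as follows. This is an illustration of the logic only, not the methodology's actual model: the function names, parameters, and the numbers in the usage lines are all hypothetical placeholders, and the real efficiency and cost-per-watt figures are in the methodology.

```python
from typing import Optional


def estimate_compute(
    power_w: float,
    ops_per_watt: float,
    chip_count: Optional[int] = None,
    ops_per_chip: Optional[float] = None,
) -> float:
    """Estimate compute capacity in OP/s.

    Prefer the reported chip type and quantity when both are known;
    otherwise fall back to power times an assumed chip efficiency.
    """
    if chip_count is not None and ops_per_chip is not None:
        return chip_count * ops_per_chip
    return power_w * ops_per_watt


def estimate_cost(power_w: float, cost_per_watt: float) -> float:
    """Estimate capital cost purely from power, via a cost-per-watt figure."""
    return power_w * cost_per_watt


# Hypothetical inputs for illustration only (not values from the database).
from_power = estimate_compute(power_w=1e9, ops_per_watt=2e12)
from_chips = estimate_compute(
    power_w=1e9, ops_per_watt=2e12, chip_count=1000, ops_per_chip=1e15
)
cost = estimate_cost(power_w=1e9, cost_per_watt=40.0)
```

Keeping the chip-based path separate from the power-based path makes it explicit which estimates rest on reported hardware and which rest on an assumed efficiency.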

How confident are you in the data?

We are 80% confident that any given metric is accurate within a factor of 1.5. That is, we expect that 80% of the time, our estimate will be between 67% and 150% of the actual value (or the planned value, if it occurs in the future).
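A "factor of 1.5" bound is multiplicative, so the lower edge is 1/1.5 (about 66.7%) of the actual value and the upper edge is 150%. A minimal sketch of that check, with a function name of our own choosing:

```python
def within_factor(estimate: float, actual: float, factor: float = 1.5) -> bool:
    """Return True if estimate lies within a multiplicative factor of actual."""
    if estimate <= 0 or actual <= 0 or factor < 1:
        raise ValueError("estimate and actual must be positive, factor >= 1")
    return actual / factor <= estimate <= actual * factor


# Bounds for an actual value of 100 (arbitrary units): about 66.7 to 150.
print(within_factor(150, 100))  # upper edge, inside the bound
print(within_factor(66, 100))   # just below 100 / 1.5, outside the bound
```

The check is symmetric on a ratio scale: an estimate that is 1.5 times too high and one that is 1.5 times too low are treated as equally far off.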

Our confidence level is informed by analysis of how our estimates differ from each other and from the most credible numbers. For example, we used six different methods to estimate the power capacity of OpenAI’s Stargate data center in Abilene. The highest estimate was 37% larger than the lowest. Similarly, we had three estimates for xAI’s Colossus 1 data center, and the highest estimate was 16% larger than the lowest. Thirdly, our calculation for the number of Amazon Trainium2 chips online in Indiana in October 2025 was 44% higher than claimed by the CEO of AWS (however, if we had moved our timeline of operation just one month later, it would be consistent with that claim).

Over time we expect to refine our model as we track more data centers, allowing us to make more accurate assessments.

What do "Speculative" and "Likely" mean next to data center users?

By default, if we list a user, owner, or other affiliate of a data center, we have strong evidence for it. However, sometimes we are uncertain about these affiliations, especially the data center users. We indicate this uncertainty with “Speculative” and “Likely” tags.

“Speculative” means that we have no record of an affiliation, but we have some reason to believe it. For example, we speculate that Anthropic will use two Amazon data centers in Mississippi because the New York Times reported that at least one of the Anthropic-Amazon Project Rainier data centers is located there, but they didn’t specify which ones.

“Likely” means we have some record of an affiliation, but we aren’t confident. For example, OpenAI is a “Likely” user for Microsoft Fairwater because a Microsoft spokesperson stated it “initially will be used to train OpenAI models”, but Microsoft’s partnership with OpenAI has been weakening since 2023.

Can an entire data center be used to train one AI model?

This is possible, but does not always happen in practice. The total capacity of a data center is more like a cap on the size of a training run in that data center. Data centers often run multiple jobs in parallel. Even if the entire data center is deployed on a single job, hardware failures will slightly reduce the capacity. Relatedly, when we report the total compute capacity of a data center in 8-bit OP/s, this is the theoretical peak capacity for number formats of 8 bits or above. The computational performance in practice is typically about one third of that, due to inefficiencies.
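To make the gap between peak and practical performance concrete, the sketch below applies the roughly one-third figure from the answer above to a peak capacity. The function names and the example peak value are hypothetical, and one third is a typical figure rather than a measured value for any particular data center.

```python
SECONDS_PER_DAY = 86_400


def effective_ops_per_s(peak_ops_per_s: float, utilization: float = 1 / 3) -> float:
    """Scale theoretical peak OP/s by an assumed utilization fraction."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return peak_ops_per_s * utilization


def training_run_ops(
    peak_ops_per_s: float, days: float, utilization: float = 1 / 3
) -> float:
    """Total operations for a run that uses the whole data center for `days`."""
    return effective_ops_per_s(peak_ops_per_s, utilization) * days * SECONDS_PER_DAY


# Hypothetical peak capacity of 3e21 8-bit OP/s, used for a 100-day run.
peak = 3e21
print(f"Effective throughput: {effective_ops_per_s(peak):.2e} OP/s")
print(f"100-day run: {training_run_ops(peak, days=100):.2e} OP")
```

This treats the data center's total capacity as an upper bound: parallel jobs and hardware failures would reduce the result further.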

Can any of these data centers be networked together to do bigger training runs?

Documentation

Epoch’s Frontier Data Centers Hub is an independent database tracking the construction timelines of major US AI data centers through high-resolution satellite imagery, permits, and public documents.

Use this work

Licensing

Epoch AI’s data is free to use, distribute, and reproduce provided the source and authors are credited under the Creative Commons Attribution license.

Citation

Epoch AI, ‘Frontier Data Centers’. Published online at epoch.ai. Retrieved from ‘https://epoch.ai/data/data-centers’ [online resource]. Accessed .

BibTeX Citation

@misc{EpochAIDataCenters2025,
  title = {“Frontier Data Centers”},
  author = {{Epoch AI}},
  year = {2025},
  month = {11},
  url = {https://epoch.ai/data/data-centers},
  note = {Accessed: }
}

Download this data

Frontier Data Centers: All Datasets

ZIP, Updated February 12, 2026

Frontier Data Centers

CSV, Updated February 12, 2026

Frontier Data Center Timelines

CSV, Updated February 11, 2026

Data Center Cooling: Chillers

CSV, Updated January 22, 2026

Data Center Cooling: Cooling Towers

CSV, Updated January 20, 2026