Overview

Epoch’s Frontier Data Centers Hub is an independent database tracking the construction timelines of major AI data centers through high-resolution satellite imagery, permits, and public documents.

This documentation describes which data centers are included in the dataset, along with its records, methodology, data fields, definitions, and acknowledgements.

The data is available on our website as a visualization or table, and is available for download as a CSV file.

If you would like to ask any questions about the data, or suggest companies that should be added, feel free to contact us at data@epoch.ai.

If this dataset is useful for you, please cite it.

Use This Work

Epoch’s data is free to use, distribute, and reproduce, provided the source and authors are credited under the Creative Commons Attribution license.

Citation


Methodology

The Frontier Data Centers hub is built on detailed analysis of reliable sources, most of which are publicly available. Each data center goes through a pipeline of discovery, research and analysis. Discovery is based on news, satellite imagery, and our previous research. We then use a combination of permitting documents, company documents and satellite images to research key details about a data center. Finally, we apply well-vetted models to estimate key metrics such as power capacity, computational performance and capital costs. The following sections explain each of these three phases in detail.

Discovery

We discover data centers through Epoch AI’s existing work on GPU Clusters, social media posts, news outlets, and company announcements. For example, OpenAI’s Stargate facility in Abilene was already in the GPU Clusters database, while Crusoe’s Goodnight Data Center was reported by Aterio, and the New York Times first alerted us to Amazon data centers in Mississippi.

We also discover data centers while researching other data centers. For example, while looking into Meta’s Prometheus data center in the New Albany Business Park, we discovered new AWS and Microsoft sites.

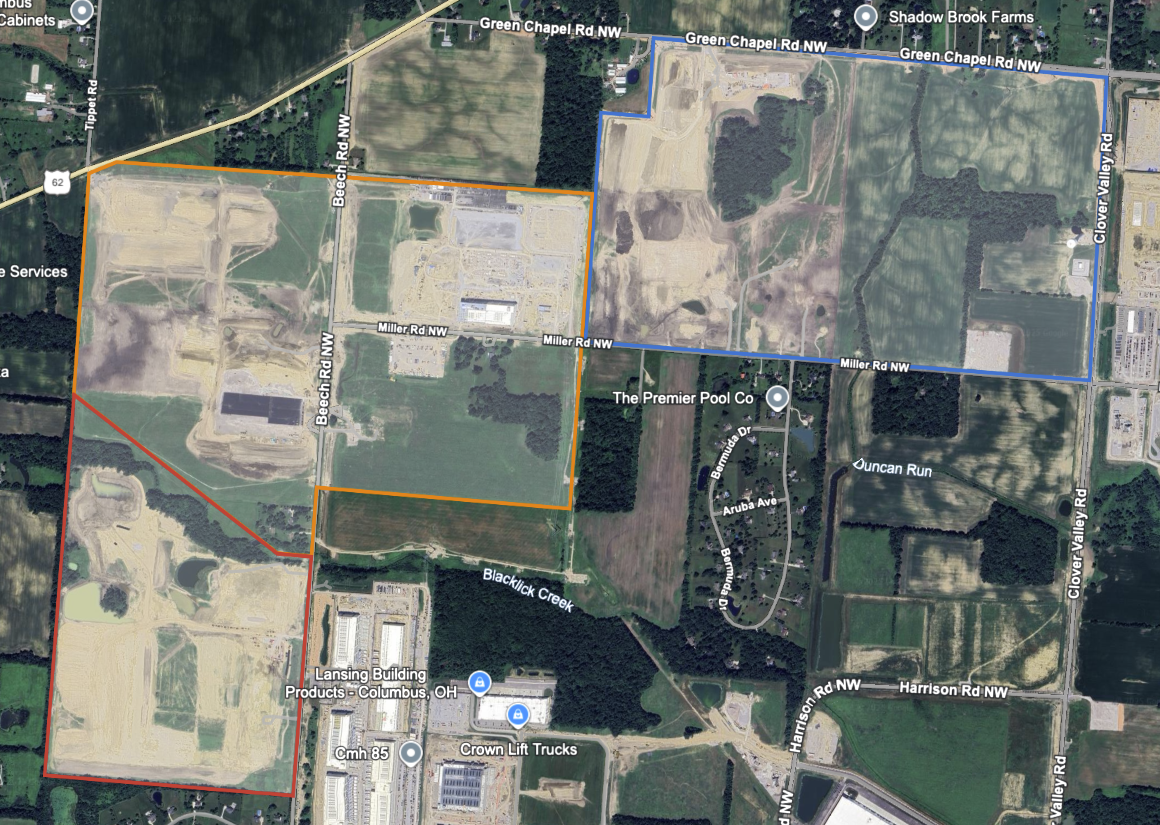

While searching for one of the sites of Meta’s Prometheus data center (outlined in blue), we discovered sites for AWS (orange) and Microsoft (red). Source: Google Earth

Currently, the database covers a subset of the largest AI data centers being built in the United States. As of November 2025, this subset accounts for an estimated 15% of the AI compute that chip manufacturers have delivered globally.[1] We are expanding our search to find the largest data centers worldwide, using satellite imagery and other data sources.
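The share quoted above comes from the arithmetic in the first entry under Notes. The Python sketch below reproduces it using only the figures stated in that note, in millions of H100-equivalents (H100e). Treating each Hopper GPU as one H100e is our simplifying assumption, not a figure from the note.

```python
# Figures from the first entry under Notes, in millions of H100-equivalents (H100e).
HOPPER_H100E = 4.0      # 4M Hopper GPUs; assumes 1 Hopper GPU ~ 1 H100e (simplification)
BLACKWELL_H100E = 7.5   # Blackwell shipments, stated as ~7.5M H100e
TRACKED_H100E = 2.5     # operational capacity tracked in this hub

nvidia_total = HOPPER_H100E + BLACKWELL_H100E  # 11.5M H100e

# Non-Nvidia chips (TPU, AMD, Trainium) estimated at 30-40% of the Nvidia total.
global_low = nvidia_total * 1.30
global_high = nvidia_total * 1.40

# The note rounds the global stock to 15-17M H100e.
share_at_17m = TRACKED_H100E / 17.0
share_at_15m = TRACKED_H100E / 15.0

print(f"Nvidia stock: {nvidia_total:.1f}M H100e")
print(f"Global stock before rounding: {global_low:.2f}-{global_high:.2f}M H100e")
print(f"Tracked share: {share_at_17m:.1%}-{share_at_15m:.1%}")
```

The printed share comes to about 14.7-16.7%, which the note rounds to 15-17%. The multiplier alone gives roughly 15-16M H100e; the note's wider 15-17M range presumably reflects the older A100 stock and the higher uncertainty it mentions.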

Research

Once we know about a data center, we use several data sources and tools to learn more about it. The most common and useful sources are satellite imagery, permitting documents, and company statements.

Satellite imagery

Data centers have a large physical footprint, which makes satellite and aerial imagery a great resource for learning about them. We have used SkyFi to purchase existing high-resolution aerial imagery from Vexcel, and to task satellites from Siwei to take new images for us. We also worked with Apollo Mapping to buy existing imagery from Airbus and Vantor. In addition, we use Google Earth and freely available images from the Sentinel-2 satellite via Copernicus.

If we only have an approximate location for a data center (such as a city or county), then we sometimes go to Google Earth and Copernicus to manually search the area. We might also use prior knowledge of what a company’s data centers look like based on previous examples. For instance, after we read that Amazon data centers were in Madison County, Mississippi, we quickly recognized Amazon buildings in the area.

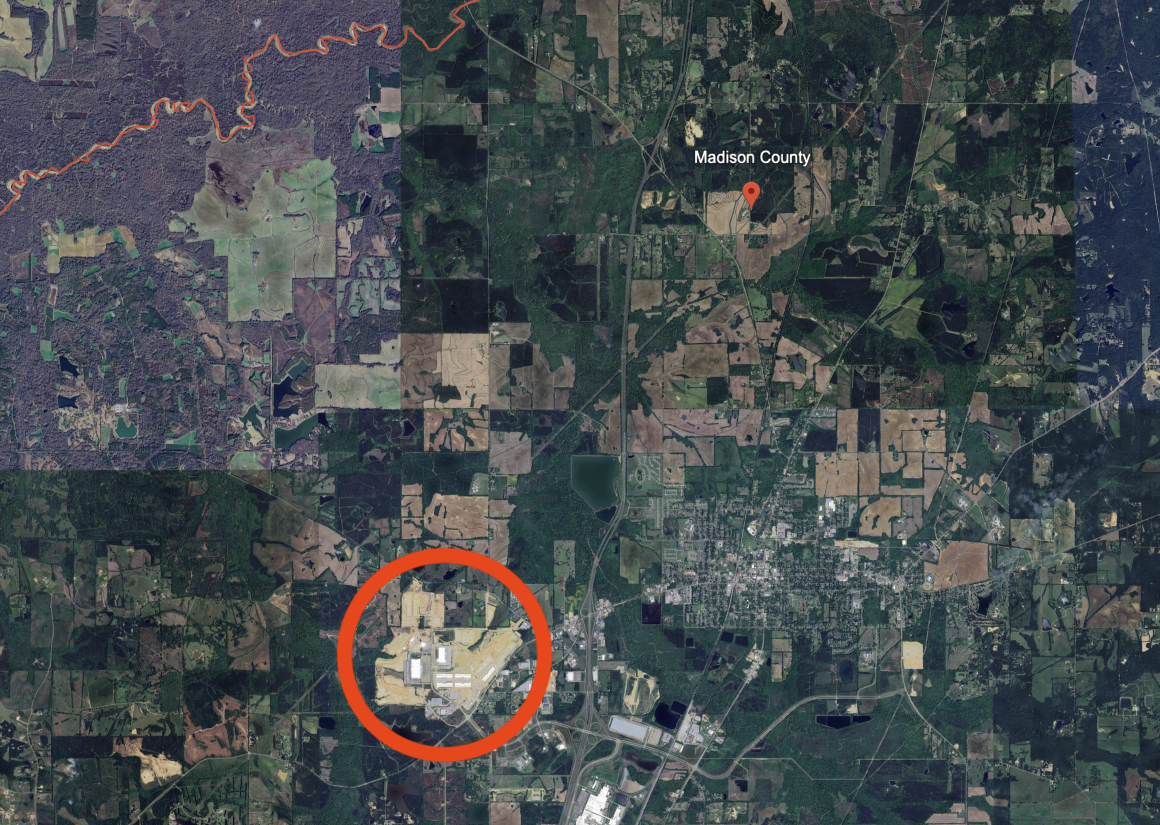

Viewing satellite imagery of a large portion of Madison County, Mississippi, we see a large area of freshly cleared land (indicated by the red circle). Source: Google Earth

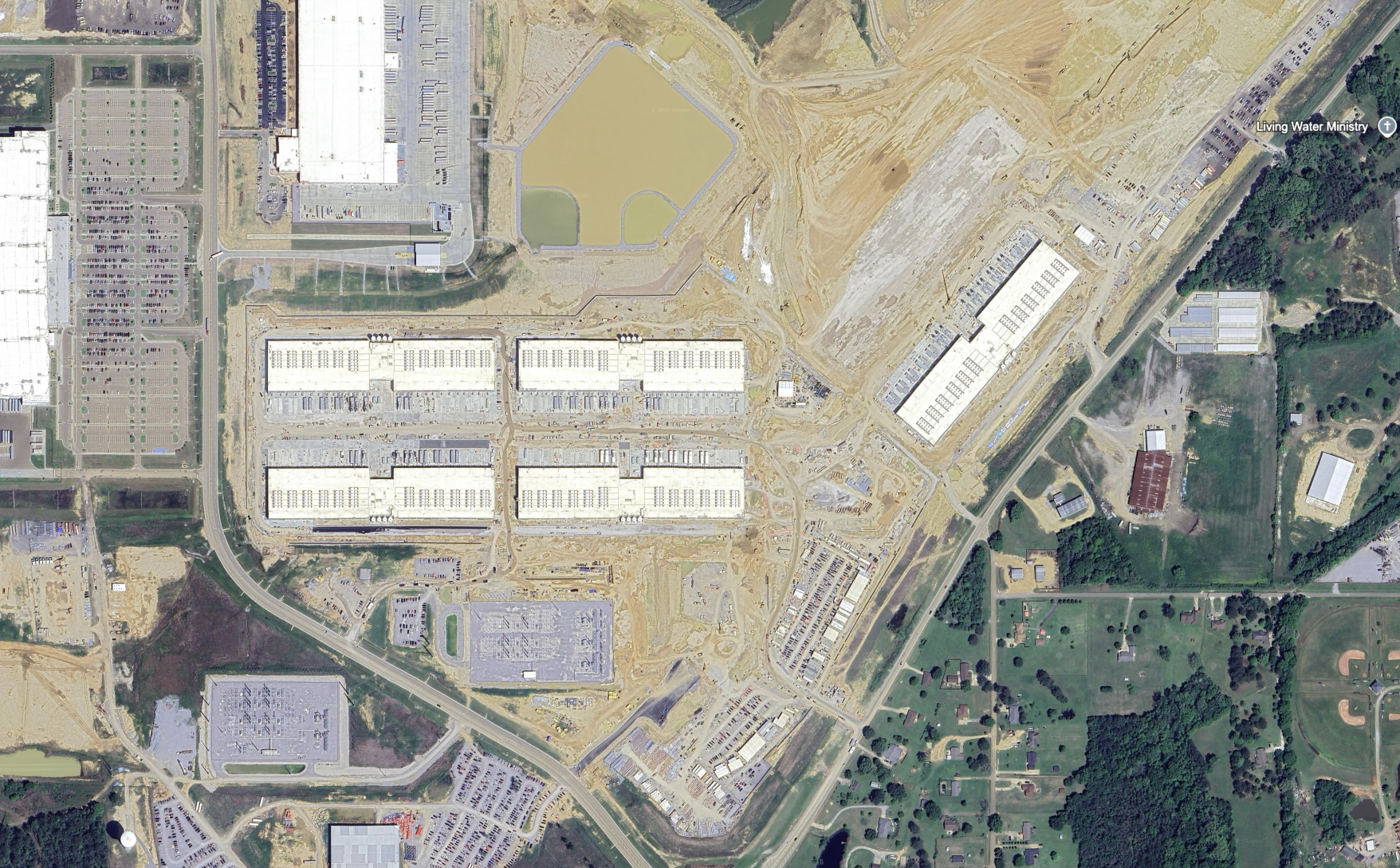

Zooming in, we find buildings that could be data centers. Source: Google Earth

Comparing the buildings in Madison County to an Amazon data center we previously identified in Indiana, we can confirm the discovery of a new Amazon data center. Source: Vexcel, delivered by SkyFi

If searching satellite images doesn’t work, we use ChatGPT Agent to search for text sources, and this often turns up a more precise address (in a permitting document, for example).

Once we locate the data center precisely, we use satellite imagery to learn several things.

Construction timeline: Data centers come online in stages over the course of months or years, so it’s useful to track each data center over time. Satellite imagery tells us when land clearing begins, when roofs go on buildings, and when overall construction is finished. Sentinel-2 images are useful to detect land clearing and buildings: though the images are low-resolution, they are free, frequent and up-to-date.

In this timelapse of OpenAI’s Stargate facility in Abilene, Texas, we can identify the approximate time when land clearing begins, new buildings and facilities start construction, and building roofs are completed. Source: Sentinel-2 via Copernicus.

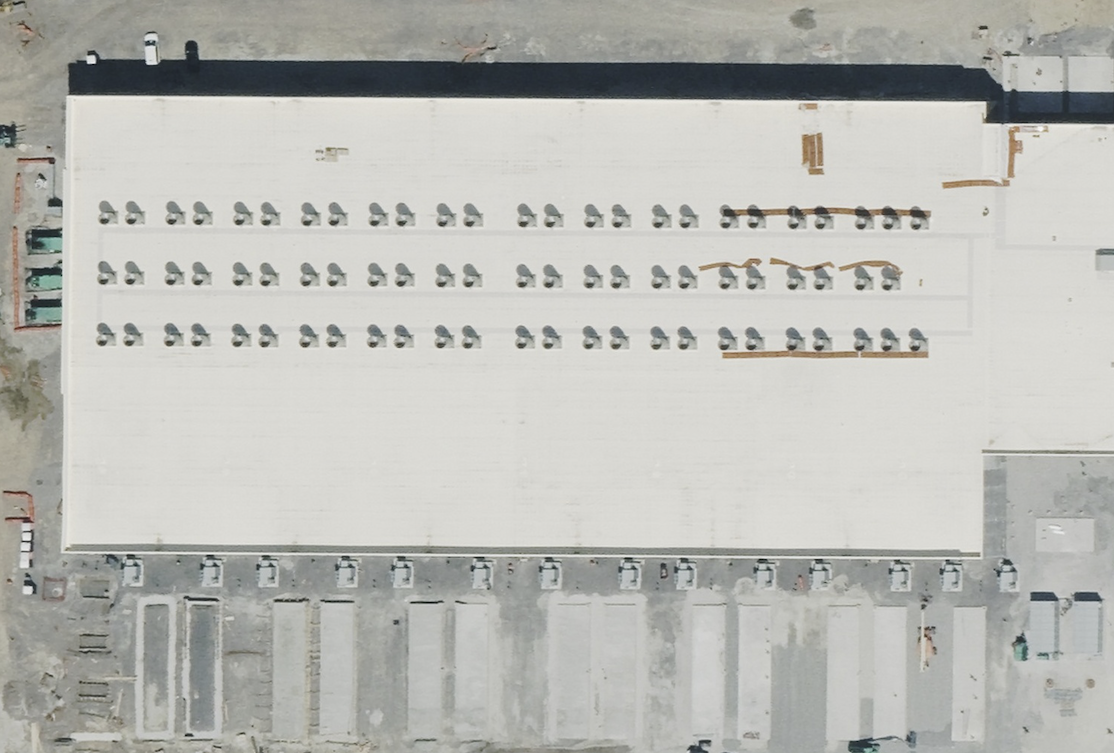

Cooling equipment: Modern AI data centers generate so much heat that the cooling equipment extends outside the buildings, usually around them or on the roof. Satellite imagery lets us identify the type of cooling, the number of cooling units, and (if applicable) the number of fans on each unit. Later we will discuss how we plug this data into our cooling model to estimate the power capacity of data centers. High-resolution images are helpful to identify cooling: though they are costly, they show key details such as the number of fans per cooling unit.

In this close-up of OpenAI’s Stargate data center in Abilene, Texas, we see a row of rectangular units each with 24 fans. Based on our prior knowledge of how cooling equipment looks, we conclude these are air-cooled chillers. Source: Vexcel, delivered by SkyFi

Satellite and aerial imagery tells us a lot about data centers, but can leave many things uncertain. Some cooling methods do not have a clear footprint outside of the buildings (though this is rare for modern AI data centers). The owner of the data center may be unclear. We may also be uncertain of just how many buildings a site will expand to, if the land isn’t cleared yet. Permitting documents can help address these unknowns.

Permits and other legal documents

Data center construction is regulated, requiring companies to file permits with local authorities to build their data center. Typical requirements include air quality, water, and building permits.

Local governments typically have online permit databases, with many documents available to the public. We often use ChatGPT Agent to locate and search these databases for permits related to a data center (example), though some manual searching and inspection is usually needed to get the exact information we’re looking for.

Data centers normally require air quality permits because their backup diesel generators pollute the air when operating. So if an air quality permit document is available, we can at least learn the number of backup generators and the power capacity of each generator. Backup generators are often sized to support the peak power capacity of the data center under normal operation. However, the backup capacity is sometimes much lower than the peak power capacity indicated by other sources, so it’s important to cross-check.
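As a rough illustration of that cross-check, the sketch below totals generator capacity from a permit and compares it with an independent estimate of peak facility power. The generator count, per-unit rating, independent estimate, and the 0.7 flag threshold are all hypothetical values chosen for illustration, not figures from any real permit.

```python
def backup_capacity_mw(num_generators: int, mw_per_generator: float) -> float:
    """Total nameplate backup capacity listed in an air quality permit."""
    return num_generators * mw_per_generator


def backup_coverage(backup_mw: float, independent_peak_mw: float) -> float:
    """Ratio of backup capacity to a peak-power estimate from another source."""
    return backup_mw / independent_peak_mw


# Hypothetical permit: 60 diesel generators rated at 2.5 MW each.
backup = backup_capacity_mw(60, 2.5)        # 150 MW
# Hypothetical independent estimate (e.g. from the cooling model).
coverage = backup_coverage(backup, 200.0)   # 0.75

# Hypothetical threshold: a low ratio means the permit understates peak load.
if coverage < 0.7:
    print(f"Backup covers only {coverage:.0%} of peak; check other sources.")
else:
    print(f"Backup covers {coverage:.0%} of the independent peak estimate.")
```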

An air quality permit is also required if natural gas turbines are used as a main or backup power source. Permit application documents are often rich with other information, including blueprints, the number of buildings planned, the address, and the owner (example). Other legal documents are sometimes useful, such as this tax abatement agreement for Crusoe’s Goodnight Data Center.

Company documents and statements

In some cases, organizations voluntarily reveal information about various aspects of a data center. For example, Crusoe’s 2024 impact report gives information on the power capacity and the number of GPUs for Stargate Abilene. Mortensen discussed its involvement in Meta’s Hyperion data center in Richland Parish. The grid operator MISO reported new load announcements in 2024, many of which are large data centers. Finally, Elon Musk has posted on X several times about xAI’s two Colossus data centers. Searching the web with LLMs can help find these publications.

Analysis

After collecting information about a data center, we analyze key metrics such as power capacity, performance, and capital cost for different buildings and points in time. We use two main models to accomplish this, depending on the available information.

The first model is for cooling equipment: based on the type, quantity and size of equipment, we can estimate the amount of power consumed by the data center. The second model is for compute: based on trends and specific data points in the Epoch AI hardware database, we estimate computational performance from power and vice versa. We validate the outputs of these models by comparing them to each other, and to primary sources if possible.

Cooling model

We built a model to estimate the cooling capacity of various data center cooling equipment. This model is based on the type of cooling and physical features like the number of fans, the diameter of the fans, and how much floorspace the full cooling unit takes up.[2] We found strong empirical relationships between these characteristics, based on specifications for hundreds of cooling products.

The regression model we used to estimate the cooling capacity of an air-cooled chiller based on the number of fans on the chiller. We use a different model for cooling towers.

After using this model to estimate the cooling capacity per unit, we use the following formula to estimate the total facility power of the data center:

Total facility power = (capacity per cooling unit x number of cooling units / cooling overhead) x peak PUE

The total cooling capacity lies somewhere between peak IT power and total facility power, because heat is generated not only by IT equipment but also by power supplies and lighting. This is why we divide cooling capacity by a “cooling overhead” to get IT power, and then multiply the result by the peak power usage effectiveness (PUE) to get total facility power. By peak PUE, we mean the total facility power capacity divided by the IT power capacity. This is in contrast to regular PUE, which is the average facility energy consumption divided by IT energy consumption over some time period. We default to a peak PUE of 1.2 for hyperscalers such as Google and Amazon, and 1.4 otherwise, unless we have evidence suggesting a significantly different value.[3]
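A minimal sketch of the cooling-based facility power formula. The per-unit capacity and unit count are hypothetical inputs (in practice the per-unit figure would come from the regression model), and the cooling overhead of 1.1 is a placeholder, since this page does not state the value used. The peak PUE defaults follow the text: 1.2 for hyperscalers and 1.4 otherwise.

```python
HYPERSCALER_PEAK_PUE = 1.2
DEFAULT_PEAK_PUE = 1.4


def facility_power_from_cooling_mw(
    capacity_per_unit_mw: float,
    num_units: int,
    cooling_overhead: float,
    peak_pue: float,
) -> float:
    """Total facility power = (capacity per unit x units / cooling overhead) x peak PUE."""
    total_cooling_mw = capacity_per_unit_mw * num_units
    # Remove heat from non-IT sources (power supplies, lighting) to get IT power.
    it_power_mw = total_cooling_mw / cooling_overhead
    return it_power_mw * peak_pue


# Hypothetical site: 40 air-cooled chillers at 2.5 MW each, non-hyperscaler owner.
# cooling_overhead=1.1 is a placeholder assumption, not the hub's value.
power = facility_power_from_cooling_mw(
    2.5, 40, cooling_overhead=1.1, peak_pue=DEFAULT_PEAK_PUE
)
print(f"Estimated total facility power: {power:.1f} MW")
```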

The cooling model still has significant uncertainty. Specification data suggests that the actual cooling capacity can be as much as 2× higher or lower than our model estimates, depending on the chosen fan speed. However, we have not seen an error that high in practice. In the two cases where we have a ground-truth cooling capacity, our estimates are near-perfect (1% error). In cases where we have some other reference point, e.g. an estimate of IT power based on chip quantity, our estimates differ from that by 50% or less. (See this spreadsheet for the error calculations.)

In this close-up of a building from Google’s data center in Omaha, Nebraska, we see 7 cooling towers, each with two 5.5 m diameter fans. Based on that, our cooling model outputs a total cooling capacity of 210.5 MW. This almost exactly matches the nominal capacity of 144,480 gallons per minute (gpm) stated in a permit document, using industry-standard conversions (3 gpm : 1 cooling tower ton : 15,000 BTU/hr : 4,396 W). Source: Vexcel, delivered by SkyFi
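The permit comparison for the Omaha building relies on the conversion chain stated in the caption (3 gpm per cooling tower ton, and 1 ton = 15,000 BTU/hr = 4,396 W). A small Python check of that arithmetic:

```python
GPM_PER_TON = 3.0               # gallons per minute per cooling tower ton
WATTS_PER_TON = 4396.0          # 15,000 BTU/hr expressed in watts
WATTS_PER_BTU_HR = 0.29307107   # standard BTU/hr-to-watt factor, for a sanity check


def cooling_mw_from_gpm(gpm: float) -> float:
    """Convert cooling tower flow rate (gpm) to heat rejection capacity in MW."""
    tons = gpm / GPM_PER_TON
    return tons * WATTS_PER_TON / 1e6


permit_mw = cooling_mw_from_gpm(144_480)  # capacity stated in the permit
model_mw = 210.5                          # cooling model estimate for the 7 towers

print(f"Permit: {permit_mw:.1f} MW, model: {model_mw} MW")
print(f"Relative difference: {abs(model_mw - permit_mw) / permit_mw:.1%}")
```

The permit figure works out to about 211.7 MW, so the two estimates differ by well under 1%.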

AI chip model

Sometimes we know the type and quantity of chips used in a data center, and use this to estimate the data center’s power capacity. We estimate peak data center power from chip quantity using the formula

Total facility power = Chip quantity x Server power per chip x IT overhead x Peak PUE

where IT overhead is the ratio of IT power to total AI server power. We set this overhead at 1.14 for all chip types, based on expert consultation and the reference design for the NVIDIA GB200 NVL72 server. The chip-based model of power is more trustworthy than the cooling-based model, but its inputs are harder to obtain. So this model helps validate the cooling model and other results.
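A sketch of the chip-based power formula. The 1.14 IT overhead and the 1.4 default peak PUE come from the text; the chip count and the 1,800 W of server power per chip are hypothetical placeholders, not data-sheet values.

```python
IT_OVERHEAD = 1.14  # ratio of IT power to total AI server power (from the text)


def facility_power_from_chips_mw(
    chip_quantity: int,
    server_power_per_chip_w: float,
    peak_pue: float,
    it_overhead: float = IT_OVERHEAD,
) -> float:
    """Total facility power = chips x server power per chip x IT overhead x peak PUE."""
    watts = chip_quantity * server_power_per_chip_w * it_overhead * peak_pue
    return watts / 1e6


# Hypothetical: 50,000 accelerators drawing 1,800 W of server power each.
estimate = facility_power_from_chips_mw(50_000, 1_800.0, peak_pue=1.4)
print(f"Chip-based facility power: {estimate:.1f} MW")
```

Comparing this value with a cooling-based estimate for the same building is the kind of validation described above.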

The type and quantity of chips also lets us estimate the computational performance of an AI data center. This is the key measure of how capable the data center is. We measure performance as the theoretical peak operations per second in 8-bit integer or floating-point format. This is simple to calculate from public hardware data sheets,[4] using the following formula:

Performance = Chip quantity x Performance per chip
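A minimal sketch of the chip-count formula, including the adjustment described in the fourth entry under Notes: NVIDIA data sheets often quote OP/s “with sparsity”, which is double the dense figure. The per-chip value and chip count are hypothetical placeholders, not real data-sheet numbers.

```python
def dense_ops_per_chip(datasheet_ops: float, with_sparsity: bool) -> float:
    """Return dense 8-bit OP/s per chip, halving figures quoted with sparsity."""
    return datasheet_ops / 2 if with_sparsity else datasheet_ops


def cluster_performance(chip_quantity: int, ops_per_chip: float) -> float:
    """Performance = chip quantity x performance per chip (peak 8-bit OP/s)."""
    return chip_quantity * ops_per_chip


# Hypothetical data-sheet value: 10 petaOP/s per chip, quoted with sparsity.
per_chip = dense_ops_per_chip(10e15, with_sparsity=True)  # 5e15 dense OP/s
total = cluster_performance(50_000, per_chip)
print(f"Peak 8-bit performance: {total:.2e} OP/s")
```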

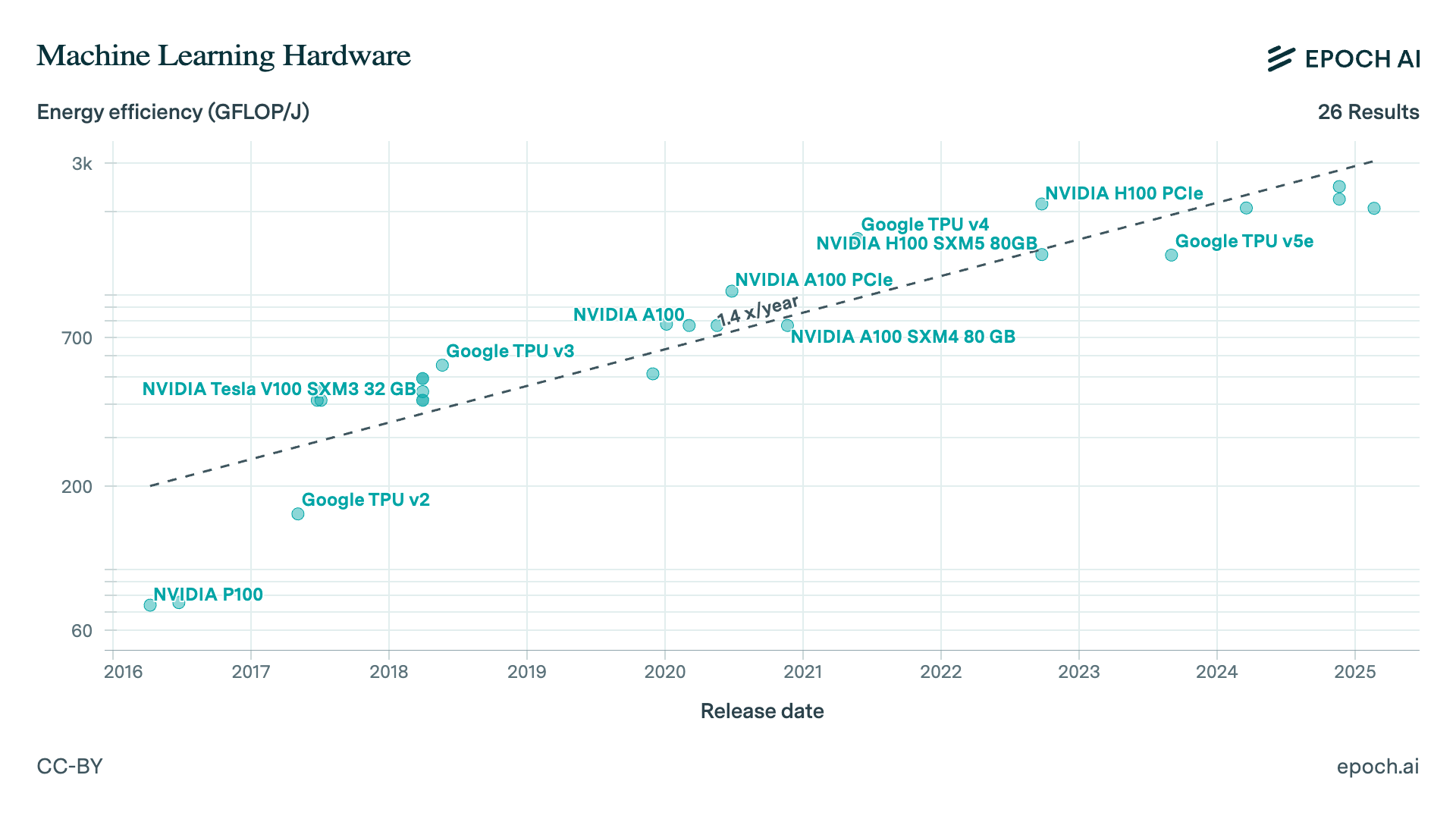

However, in most cases we lack specific hardware details for a data center. In those cases we leverage the strong relationship between the performance and the power draw of leading hardware over time, which is evident empirically:

Trend in the energy efficiency of leading machine learning hardware. The units are gigaFLOP per Joule, or equivalently gigaFLOP/s per Watt.

First, we infer the type of AI chip used based on the owner and the operational date of each data center building. For example, for a Google data center that’s operational by 2026, we assume TPUv7; for an Amazon data center that’s operational by 2025, we assume Trainium2; in other cases, we assume the latest and greatest NVIDIA AI accelerator that’s available. We then calculate the performance for each data center building using the formula

Performance = FLOP/s per Watt for the given AI chip x Total facility power / (Server overhead x IT overhead x Peak PUE).

Since we calculate FLOP/s per Watt of chip power, we have to divide by a “Server overhead”: the ratio of total AI server power to total power for the AI chips alone (servers include other hardware like CPUs, memory and networking). For NVIDIA chips, we calculate this overhead from data sheets. For other servers, we use a combined factor of 1.74× for Server overhead and IT overhead, based on specifications for the NVIDIA GB200 NVL72.
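A sketch of the power-to-performance formula for a non-NVIDIA building, using the combined 1.74× server-and-IT overhead and the 1.2 hyperscaler peak PUE from the text. The 100 MW building and the energy-efficiency value of 2,000 GFLOP/s per Watt are hypothetical; in practice the efficiency would come from the hardware trend or chip data sheets.

```python
COMBINED_SERVER_IT_OVERHEAD = 1.74  # Server overhead x IT overhead (from the text)


def performance_from_power(
    flops_per_watt: float,
    facility_power_w: float,
    peak_pue: float,
    combined_overhead: float = COMBINED_SERVER_IT_OVERHEAD,
) -> float:
    """Performance = FLOP/s per W x facility power / (server x IT overhead x peak PUE)."""
    # Power drawn by the AI chips alone, after removing all overheads.
    chip_power_w = facility_power_w / (combined_overhead * peak_pue)
    return flops_per_watt * chip_power_w


# Hypothetical: 100 MW hyperscaler building, chips at 2,000 GFLOP/s per W.
perf = performance_from_power(2_000e9, 100e6, peak_pue=1.2)
print(f"Estimated peak performance: {perf:.2e} FLOP/s")
```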

Capital cost model

AI data centers require immense capital investment. Our data hub makes this easy to track, with estimates of the total capital cost of every data center. This includes the cost of IT hardware, mechanical and electrical equipment, building shell and white space, construction labor, and land acquisition. To estimate this, we use a cost-per-Watt model, broken down into “IT hardware” and “other” costs. We arrived at the final cost-per-Watt values by aggregating several industry sources, resulting in $44B per gigawatt of server power, with $30B going to IT hardware and $14B for the rest. The cost per gigawatt of total facility power is lower, typically $29B, because overheads decrease the number of servers that can be supported.

IT hardware makes up about two thirds of this cost, and empirically there is a flat trend in AI chip cost per Watt over time. For that reason, we use the same cost-per-Watt values regardless of a data center’s hardware type or operation date. In reality, data center costs vary significantly with the exact hardware used, the negotiated price of that hardware, the local labor market, tax abatements, and many other factors.
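A minimal sketch of the cost-per-Watt model using the per-gigawatt figures above ($30B for IT hardware and $14B for everything else, per GW of server power). Converting facility power to server power by dividing by the IT overhead and peak PUE is our reading of how the overheads enter, not a formula the text states; the 1 GW input is hypothetical.

```python
IT_HARDWARE_USD_PER_GW = 30e9  # per GW of server power (from the text)
OTHER_USD_PER_GW = 14e9        # shell, mechanical/electrical, labor, land (from the text)
IT_OVERHEAD = 1.14             # IT power / AI server power (from the text)


def capital_cost_usd(facility_power_gw: float, peak_pue: float) -> dict[str, float]:
    """Split capital cost into IT hardware and other costs."""
    # Assumption: server power = facility power / (IT overhead x peak PUE).
    server_power_gw = facility_power_gw / (IT_OVERHEAD * peak_pue)
    it_cost = server_power_gw * IT_HARDWARE_USD_PER_GW
    other_cost = server_power_gw * OTHER_USD_PER_GW
    return {"it_hardware": it_cost, "other": other_cost, "total": it_cost + other_cost}


# Hypothetical: 1 GW non-hyperscaler campus (peak PUE 1.4).
costs = capital_cost_usd(1.0, peak_pue=1.4)
for name, usd in costs.items():
    print(f"{name}: ${usd / 1e9:.1f}B")
```

Under these assumptions, 1 GW of facility power costs roughly $28B at a peak PUE of 1.4 and roughly $32B at 1.2, which brackets the typical $29B per gigawatt quoted above.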

Verification

For many data centers, we find multiple sources to corroborate our estimates of power capacity. Each source has strengths and weaknesses. Air quality permits are a trustworthy source for how much backup power is planned, but plans can get outdated. If a new satellite image shows cooling equipment installed on-site, that indicates how much power is really being used today, but with wide error bars. The more independent sources we have, the better the final estimates are.

For example, we have six sources on the total power capacity of Stargate Abilene. Some are based on direct reports of power capacity, while others are based on our cooling and AI chip models. These estimates fall in a relatively narrow range of 139 to 190 MW per building (including overhead for cooling, lighting, etc.). After comparing these six values, we settled on 147 MW as the most trustworthy and consistent one.

Our intel on the OpenAI Stargate data center in Abilene, Texas provides several estimates of power capacity, from the substations at the bottom left, to cooling equipment around the buildings, to natural gas turbines at the bottom right. Source: Airbus, delivered by Apollo Mapping

Limitations

Our approach to finding and analyzing AI data centers is not foolproof. In the discovery phase, some data centers will be so obscure that we won’t find news, rumors, or existing databases mentioning them. While larger data centers are more likely to be reported due to their significance and physical footprint, there are many smaller data centers (<100 MW) that could add up to significant levels of AI compute. As we mentioned above, we’re expanding our use of satellite images and other types of data to help address this limitation.

In the research phase, permitting is one of the most reliable sources about future plans, but plans sometimes change. Schedules can slow down or speed up; the size of the data center may shrink or expand. Worse, there may not be any permitting documents available online for a data center. Permit publication practices vary by local government, and regulations vary across the US and even more across the world. Without regulatory documents, we can’t see as clearly into the future of a data center, especially if no buildings are under construction yet.

The second phase of the Microsoft Fairwater data center in Wisconsin paused construction in January 2025, which suggests it will take longer than initially planned. Source: Airbus, delivered by Apollo Mapping

If some buildings are under construction or already completed, then satellite imagery helps a great deal, but this has limitations too. Despite the growing power density of AI data centers, some of them are still managing without obvious external cooling infrastructure. For example, the standard AWS data center, which has no obvious cooling fans outside, can still house tens of thousands of Trainium chips. However, we expect this design to become less and less viable as power densities continue to rise.

The cooling system for standard AWS buildings is harder to analyze from a top-down view, and is outside the current scope of our cooling model. Source: Vexcel, delivered by SkyFi

Even if we have a perfect analysis of a data center, we may still be in the dark about who uses it, and how much they use. AI companies like OpenAI and Anthropic make deals with hyperscalers such as Oracle and Amazon to rent compute, but the arrangement for any given data center is sometimes secret.

As we’ve researched more data centers, we’ve refined our models of construction time, power, cooling, and compute. For example, we’ve found that it’s important to identify the correct type of cooling infrastructure. When we used our cooling tower model to analyze the Microsoft Fairwater data center in Wisconsin, we got 1.5 GW, which is unrealistic for a building of its size. We learn from mistakes like this to continuously improve our analysis of AI data centers.

Records

The Frontier Data Centers hub contains two record types:

Data Centers: Core site/campus information—location, ownership/tenancy, power capacity, modeled compute performance, capital costs, and metadata (sources, notes, confidence).

Timelines: Dated milestones and time-series figures for each site—construction status by date, power/capacity and performance snapshots, costs, and other progress indicators.

Fields

We provide a comprehensive guide to the hub’s fields below. Examples are taken from a representative site (e.g., OpenAI Stargate Abilene) unless otherwise indicated. For each Data Center, time-varying metrics (power/capacity, performance, costs, construction progress) are stored as individual Timelines records.

If you would like to ask any questions about the database, or request a field that should be added, contact us at data@epoch.ai.

AI data centers

| Column | Type | Definition | Example value | Coverage |
|---|---|---|---|---|

Timelines

| Column | Type | Definition | Example value | Coverage |
|---|---|---|---|---|

Downloads

Acknowledgements

This data and analysis was collected by Epoch AI researchers Ben Cottier and Yafah Edelman. We would also like to acknowledge the help of Epoch AI’s employees and collaborators, including Maria de la Lama, Caroline Falkman Olsson, Aidan Hendrickson, Edu Infante Roldan, Christina Krawec, Jeremy Self, and Josh You.

We would also like to thank the following people for their feedback and suggestions on our research: Isabel Juniewicz, Joanna Lee, Anish Tondwalkar and others.

Notes

1. Nvidia recently disclosed that it has shipped 4M Hopper GPUs and 6M Blackwell GPUs as of October, excluding China. The “6M Blackwell GPUs” refers to 3M Blackwell GPUs as traditionally understood. These Blackwell shipments are the compute equivalent of ~7.5M H100e, for a total of 11.5M Nvidia H100e including Hopper, plus a minor older stock of A100s, etc. We provisionally estimate, with higher uncertainty, that TPU, AMD, and Amazon Trainium chips are around 30-40% of the Nvidia total, increasing the total stock to 15-17M H100e. Since Nvidia certainly makes up the large majority of the total AI compute stock, uncertainty here does not dramatically affect the total. This means that the 2.5M H100e of operational capacity that we track in this data hub makes up 15-17% of the shipped total. We will update these estimates in the coming months.

2. There is similar work focusing on urban locations rather than data centers: https://www.sciencedirect.com/science/article/abs/pii/S030626192300925X

3. These values are based on multiple sources and factors. Uptime Institute reports an average PUE of 1.44 for data centers of 30 MW or more. A public SemiAnalysis post uses a PUE of 1.35 for a “typical colocation data center” hosting H100 or GB200 (https://newsletter.semianalysis.com/p/h100-vs-gb200-nvl72-training-benchmarks). We expect peak PUE to be slightly higher than average PUE, because more cooling is needed on hot summer days. On the other hand, hyperscalers tend to have lower PUEs; for example, Google averaged 1.09 across its data centers in 2025.

4. For example, the NVIDIA GB200 NVL72 data sheet. One thing to be careful of here is how hardware data sheets report performance and the number of chips per server. NVIDIA normally reports OP/s “with sparsity”, which is double the number that applies to most use cases. There is also a confusing relationship between Grace-Blackwell “superchips” like the GB200, which comprise two B200 GPUs, and the GB200 NVL72 server, which is equivalent to 36 GB200s and contains 72 B200 GPUs. In our experience, when industry sources report something like “50,000 GB200 NVL72 chips”, they usually mean 50,000 B200 GPUs. We cross-check sources to confirm the most likely meaning in each case.