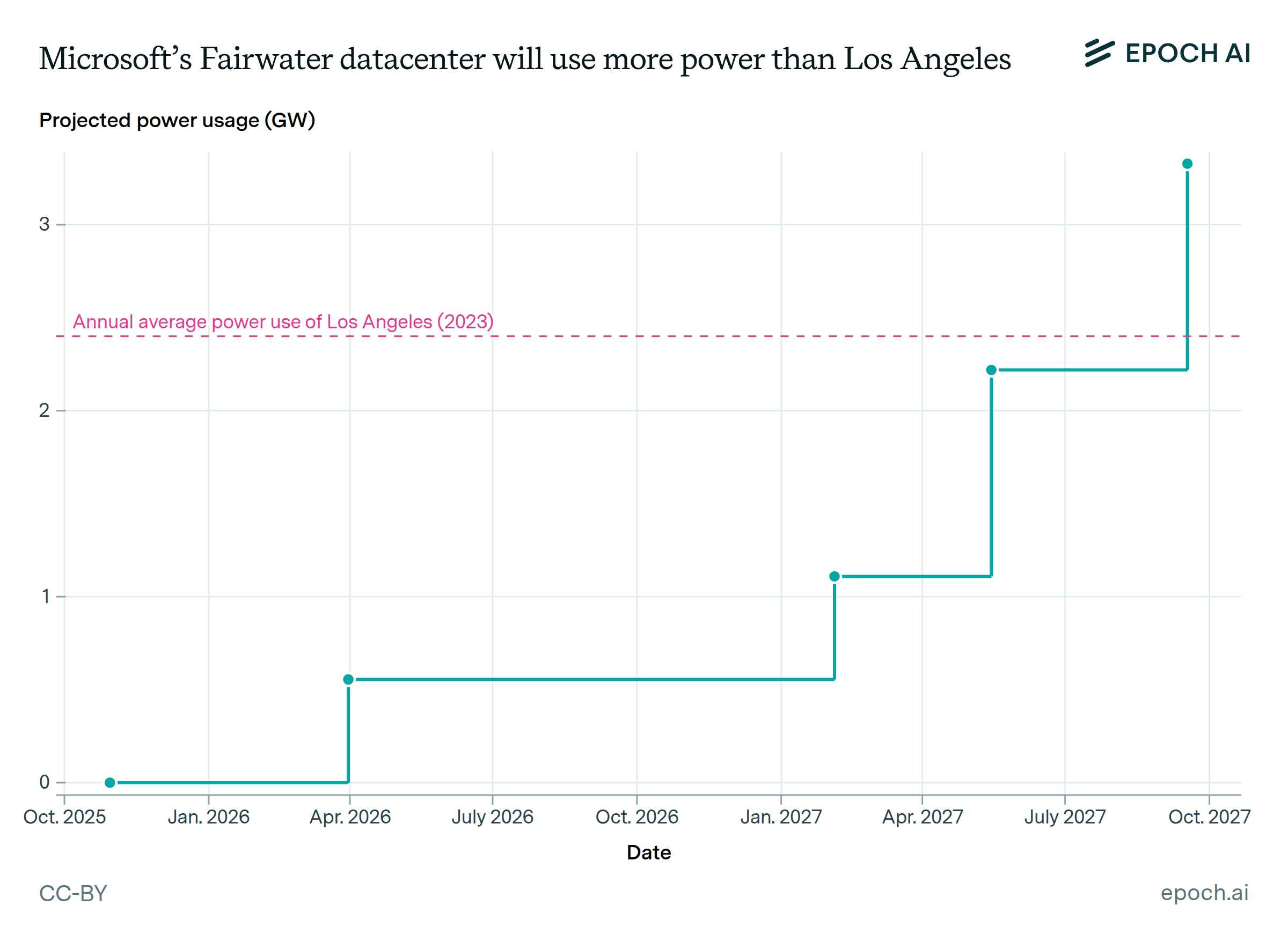

The power required to train frontier AI models is doubling annually

Training frontier models requires a large and growing amount of power for GPUs, servers, cooling and other equipment. This growth is driven primarily by an increase in GPU count; power draw per GPU is also growing, but by only a few percent per year.

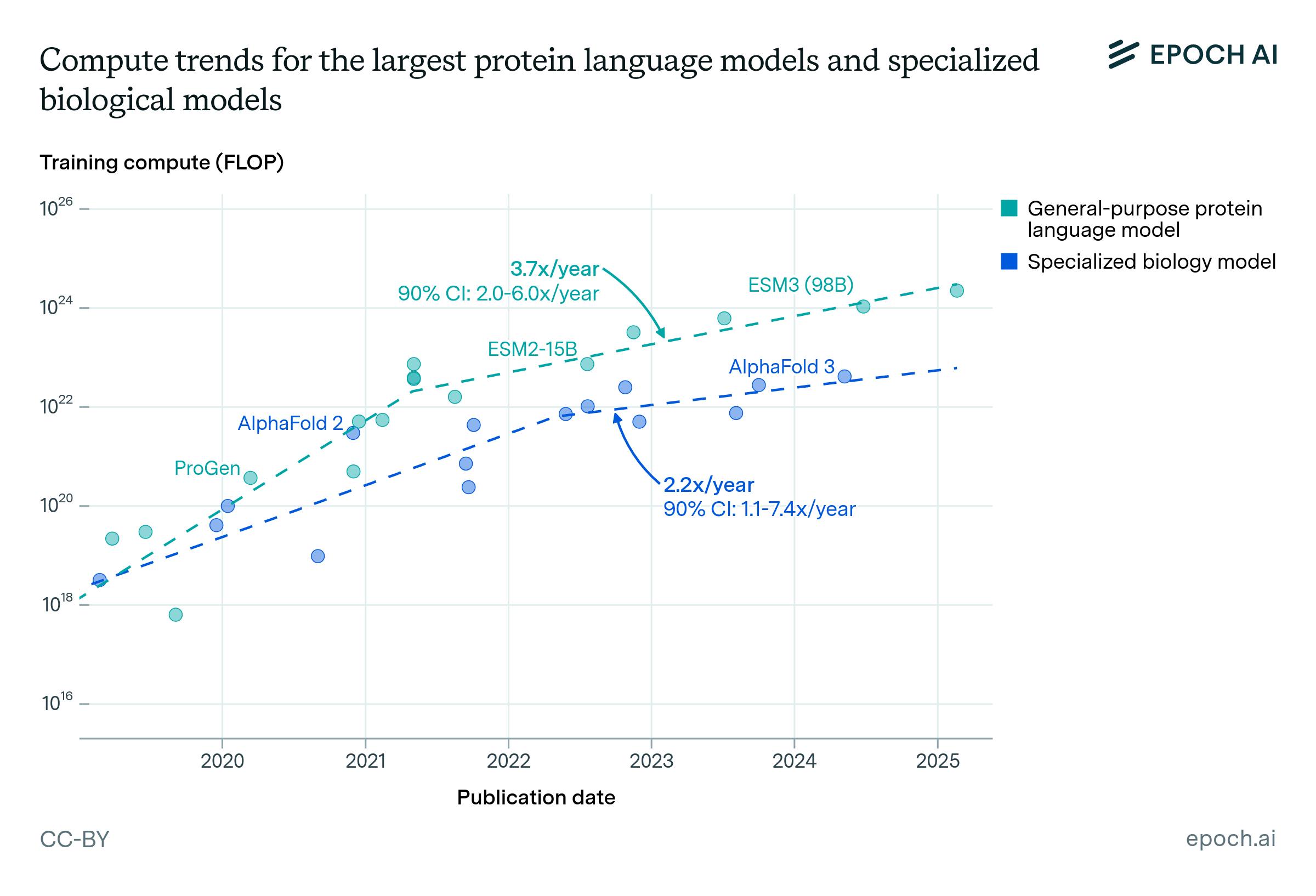

Training compute has grown even faster, at around 4x per year. However, hardware efficiency (a 12x improvement in the last ten years), the adoption of lower precision formats (an 8x improvement) and longer training runs (a 4x increase) together account for a roughly 2x per year decrease in power requirements relative to training compute.
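The arithmetic behind the "roughly 2x per year" figure can be checked with a short sketch. It assumes that all three factors apply over the same ten-year window; the text states this only for hardware efficiency, so the annualized number is an approximation rather than the authors' own calculation.

```python
# Efficiency factors quoted in the text. Treating all three as spanning the
# same ten-year window is an assumption made for this illustration.
hardware_efficiency = 12   # x improvement over the last ten years
lower_precision = 8        # x improvement from lower precision formats
longer_runs = 4            # x increase in training run duration
years = 10

# The factors multiply, so the combined offset is their product.
combined = hardware_efficiency * lower_precision * longer_runs

# Annualize the combined factor by taking the tenth root.
per_year_offset = combined ** (1 / years)

# Power growth is compute growth divided by the annualized offset.
compute_growth = 4.0  # x per year, from the text
implied_power_growth = compute_growth / per_year_offset

print(f"combined offset over {years} years: {combined}x")
print(f"annualized offset: {per_year_offset:.2f}x per year")
print(f"implied power growth: {implied_power_growth:.2f}x per year")
```

Under these assumptions the annualized offset comes out a little below 2x, which leaves power growing at a little above 2x per year, in line with the headline estimate.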

Our methodology for calculating or estimating a model’s power draw during training can be found here.

Published September 19, 2024

Learn more

Data

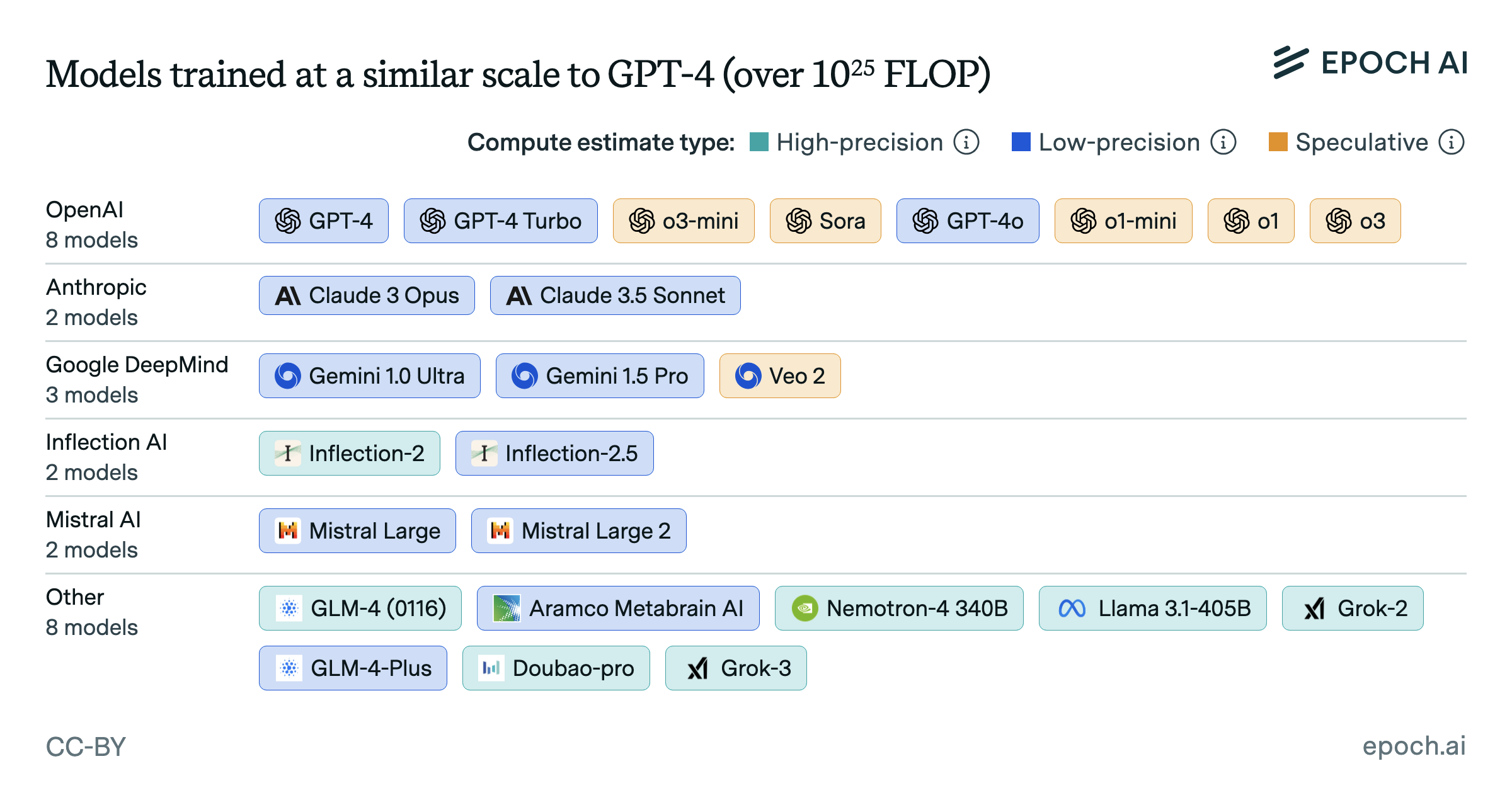

We make use of two datasets: Epoch’s AI Models dataset, which collects information on over 1000 notable AI models, as well as our Machine Learning Hardware dataset, which records information on 161 AI accelerators.

After filtering our AI models data to the subset with values for both `Publication date` and `Training power draw (W)`, we are left with 211 AI models. Our methodology for estimating training power draw can be found here.
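A minimal sketch of this filtering step follows, using only the standard library. The two required column names come from the text; the `Model` column and every row in the toy CSV are made up for illustration and are not Epoch data.

```python
import csv
import io

# Toy rows for illustration only; names and values are invented.
toy_csv = """Model,Publication date,Training power draw (W)
model-a,2019-05-01,250000
model-b,,1200000
model-c,2022-11-15,
model-d,2023-03-10,9000000
"""

# Columns that must be populated for a model to be kept (from the text).
REQUIRED = ("Publication date", "Training power draw (W)")


def has_required_fields(row):
    """Return True when every required column holds a non-empty value."""
    return all((row.get(col) or "").strip() for col in REQUIRED)


rows = list(csv.DictReader(io.StringIO(toy_csv)))
kept = [row for row in rows if has_required_fields(row)]
print([row["Model"] for row in kept])  # ['model-a', 'model-d']
```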

Analysis

We focus our analysis on frontier models, which we define as those that were among the top 10 models by training compute at the time of their release. After filtering to frontier models released in 2010 or later, we are left with 45 observations.
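One way to implement the "top 10 at the time of release" definition is to walk through models in release order while tracking the n largest training compute values seen so far. The sketch below is an illustration, not the authors' code: the tuple layout, the function name `frontier_models`, the tie handling, and the choice to let pre-2010 models count toward the ranking are all assumptions.

```python
import heapq
from datetime import date


def frontier_models(models, n=10, start=date(2010, 1, 1)):
    """Return names of models that ranked in the top n by compute at release.

    `models` is a list of (name, release_date, training_compute) tuples.
    Models released before `start` still count toward the ranking (an
    assumption) but are excluded from the result.
    """
    top_n = []      # min-heap holding the n largest compute values so far
    frontier = []
    for name, released, compute in sorted(models, key=lambda m: m[1]):
        if len(top_n) < n:
            heapq.heappush(top_n, compute)
            in_top_n = True
        elif compute > top_n[0]:
            # Displace the smallest of the current top n.
            heapq.heapreplace(top_n, compute)
            in_top_n = True
        else:
            in_top_n = False
        if in_top_n and released >= start:
            frontier.append(name)
    return frontier


# Toy example with n=2; the values are invented for illustration.
toy = [
    ("a", date(2009, 6, 1), 1e15),
    ("b", date(2011, 1, 1), 1e17),
    ("c", date(2012, 1, 1), 1e16),
    ("d", date(2013, 1, 1), 5e15),
    ("e", date(2014, 1, 1), 1e18),
]
print(frontier_models(toy, n=2))  # ['b', 'c', 'e']
```

The min-heap keeps the check for each new model cheap: only the smallest of the current top n has to be compared and, if beaten, replaced.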

We fit a log-linear model to estimate the rate of growth for frontier model power draw, and obtain a 90% confidence interval from the coefficient’s standard error. We estimate that power draw has grown by 2.1x per year, with a 90% confidence interval of 1.9 to 2.2x.
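The fit described above can be sketched as an ordinary least squares regression of log10(power) on publication year, with the slope converted back to a yearly growth factor. The text specifies only a log-linear model and an interval derived from the coefficient's standard error; the function name, the normal approximation to the critical value, and the synthetic data below are assumptions for illustration.

```python
import math
from statistics import NormalDist


def growth_rate_with_ci(years, power_watts, level=0.90):
    """Fit log10(power) = a + b * year by OLS.

    Returns (growth, low, high) as multiplicative factors per year.
    """
    n = len(years)
    y = [math.log10(p) for p in power_watts]
    x_mean = sum(years) / n
    y_mean = sum(y) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (yi - y_mean) for x, yi in zip(years, y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    residual_ss = sum(
        (yi - (intercept + slope * x)) ** 2 for x, yi in zip(years, y)
    )
    slope_se = math.sqrt(residual_ss / (n - 2) / sxx)
    # Normal approximation to the critical value; a Student-t quantile with
    # n - 2 degrees of freedom would give a slightly wider interval.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return (
        10 ** slope,
        10 ** (slope - z * slope_se),
        10 ** (slope + z * slope_se),
    )


# Synthetic data: power doubles every year, with a small alternating wobble.
years = [2012 + 0.5 * i for i in range(20)]
power = [
    1e4 * 2 ** (yr - 2012) * (1.1 if i % 2 else 0.9)
    for i, yr in enumerate(years)
]
growth, low, high = growth_rate_with_ci(years, power)
print(f"{growth:.2f}x per year (90% CI {low:.2f} to {high:.2f})")
```

Exponentiating the slope and its interval endpoints is what turns a linear trend in log space into a "times per year" growth factor.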

Assumptions and limitations

In general, we define “frontier models” as those in the top 10 models ordered by training compute at the time they were published. We show the robustness of our results to other choices of top-n, as well as to including all notable models, in the following table:

| Inclusion criteria | Number of observations | Estimate (90% confidence interval) |
|---|---|---|
| Top 5 | 29 | 2.2x per year (1.9 – 2.4) |
| Top 10 | 45 | 2.0x per year (1.7 – 2.4) |
| Top 20 | 79 | 2.1x per year (1.9 – 2.4) |
| All notable models | 175 | 1.8x per year (1.6 – 2.1) |