Leading AI chip designs are used for around four years in frontier training

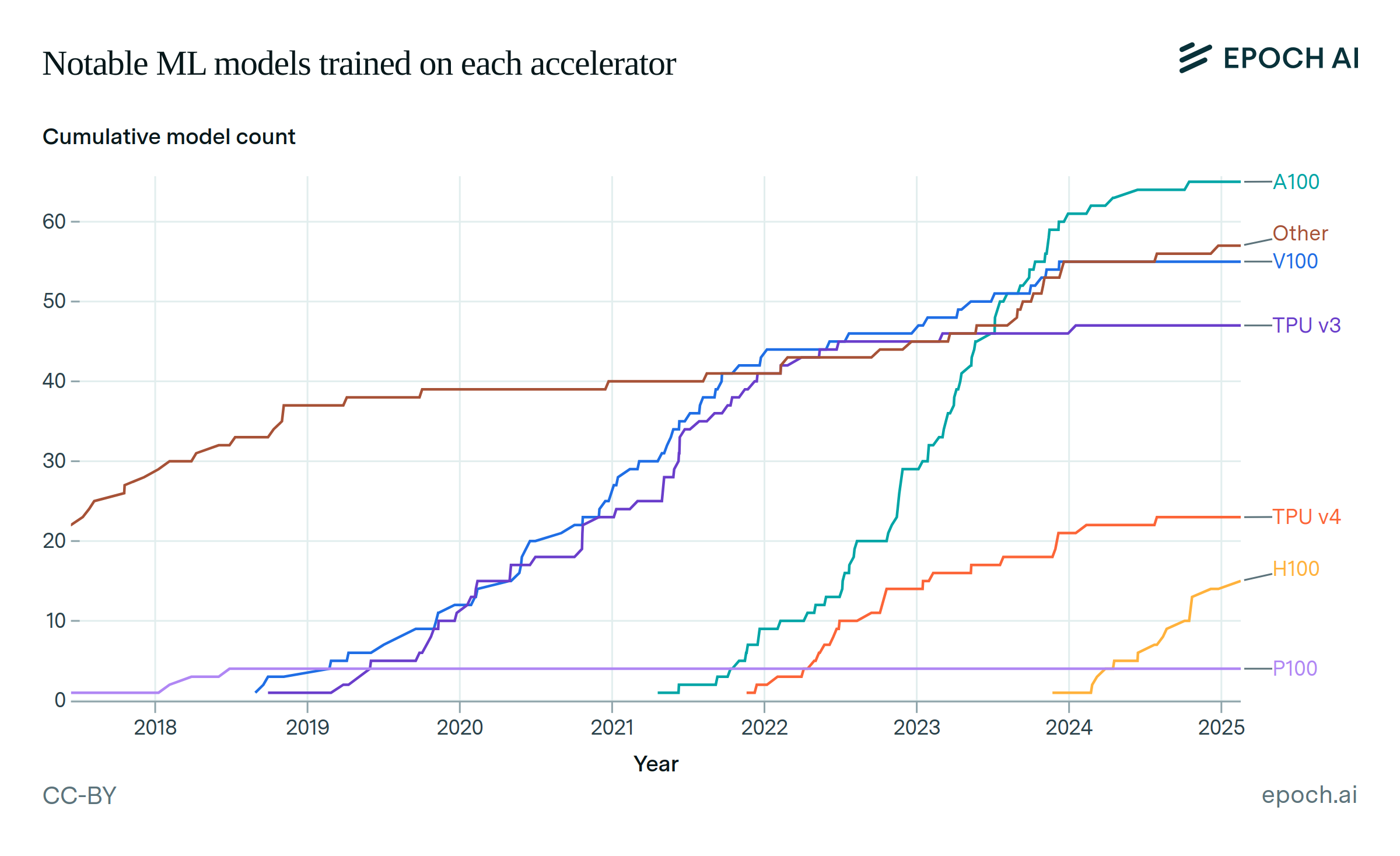

The median time from the release of a leading AI chip to the publication date of the last frontier model trained on it is 3.9 years. We focus on AI chips from the NVIDIA V100 onwards, as these chips were used for large-scale language model training similar to that of present-day frontier models.

Including chips older than the NVIDIA V100 shortens the median lifespan from release to final use for frontier training to 2.7 years.

Published March 5, 2025

Overview

We analyze the “frontier lifespan” of commercial AI chip designs: the time between a chip design's public release and the publication date of the last known “frontier AI model” trained on it. We define a frontier AI model as a model that was among the 10 largest AI models by training compute at the time of its publication. For this analysis, we combine our Notable Models dataset with our Machine Learning Hardware dataset, and identify the latest publication date of a frontier model trained on each AI chip design.

Code to reproduce our analysis is available here.

Data

We use Epoch AI’s Notable Models dataset to obtain data on the dates at which AI models are published, the amount of compute used in training, and the AI chip design the model was trained on. To define a set of “frontier AI models”, we identify models that were among the top 10 largest by training compute at their time of publication. In the 4 cases where a single AI model was trained using more than one AI chip design, we include all chip designs that were used.
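
The top-10 rule above can be sketched in a few lines of Python. This is a minimal illustration, not the code used for the analysis: the function name `frontier_models`, the `(name, publication_date, training_flop)` tuple layout, and the handling of ties are all assumptions made for the example.

```python
import heapq
from datetime import date

def frontier_models(models, k=10):
    """Return the names of models that ranked in the top k by training
    compute among all models published up to their own publication date.

    `models` is an iterable of (name, publication_date, training_flop)
    tuples. This layout is hypothetical, chosen only for illustration.
    """
    top = []       # min-heap holding the k largest compute values seen so far
    frontier = []
    for name, published, flop in sorted(models, key=lambda m: m[1]):
        if len(top) < k:
            # Fewer than k models exist so far, so this one is in the top k.
            heapq.heappush(top, flop)
            frontier.append(name)
        elif flop > top[0]:
            # Strictly larger than the current k-th largest: it displaces it.
            # (A model that merely ties the k-th largest is not counted here.)
            heapq.heapreplace(top, flop)
            frontier.append(name)
    return frontier

# Made-up example data, with k=2 to keep it short.
example = [
    ("model-a", date(2018, 1, 1), 1e20),
    ("model-b", date(2018, 6, 1), 5e19),
    ("model-c", date(2019, 1, 1), 1e19),
    ("model-d", date(2019, 6, 1), 2e20),
]
frontier_names = frontier_models(example, k=2)
```

A model counts as frontier if it entered the top k when it was published, even if later models displace it. That matches the "at their time of publication" wording.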

We join this data to Epoch AI’s Machine Learning Hardware dataset, which provides the release date of each AI chip design. We restrict our analysis to commercial chip designs, omitting consumer models like NVIDIA’s GTX/RTX series since these are less relevant for the large-scale training runs we expect for future frontier models. After joining, we identify the set of AI chip designs that were used to train at least one frontier AI model. This process leaves us with 64 frontier AI models, trained on 14 unique AI chip designs.

Analysis

Before our main analysis, we exclude any AI chip design that has been used to train a frontier model published within the last 7 months, because these chip designs may still be used in future frontier training runs. We chose this 7-month threshold because it corresponds to the 95th percentile of the interval between successive frontier models trained on the same chip design, among chips at least as new as the NVIDIA V100. In other words, historical data suggests that if no frontier model trained on a chip has been published for at least 7 months, the chip is unlikely to be used again for frontier training.
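
As a rough sketch of this exclusion step, the snippet below derives a gap threshold from per-chip publication dates and flags chips that may still be in use. The function names, the input layout, and the "inclusive" percentile method are assumptions for illustration. The text does not specify which percentile estimator was used.

```python
from datetime import date
from statistics import quantiles

def successive_gaps_days(pub_dates_by_chip):
    """Collect the gaps (in days) between consecutive frontier-model
    publication dates on the same chip design, pooled across chips."""
    gaps = []
    for dates in pub_dates_by_chip.values():
        ordered = sorted(dates)
        gaps.extend((later - earlier).days
                    for earlier, later in zip(ordered, ordered[1:]))
    return gaps

def gap_threshold_days(pub_dates_by_chip):
    """95th percentile of the pooled gaps. With n=20, the last of the 19
    cut points is the 95th percentile. Needs at least two gaps."""
    gaps = successive_gaps_days(pub_dates_by_chip)
    return quantiles(gaps, n=20, method="inclusive")[-1]

def possibly_still_in_use(pub_dates_by_chip, as_of, threshold_days):
    """Chips whose latest frontier model was published within
    `threshold_days` of `as_of`; these would be excluded from the analysis."""
    return {chip for chip, dates in pub_dates_by_chip.items()
            if (as_of - max(dates)).days <= threshold_days}

# Made-up publication dates for two hypothetical chip designs.
pubs = {
    "chip-x": [date(2020, 1, 1), date(2020, 1, 31), date(2020, 3, 31)],
    "chip-y": [date(2021, 1, 1), date(2021, 1, 11)],
}
threshold = gap_threshold_days(pubs)
excluded = possibly_still_in_use(pubs, as_of=date(2021, 2, 1),
                                 threshold_days=threshold)
```

In the analysis described above, the threshold is computed only over chips at least as new as the NVIDIA V100. The sketch pools whatever chips it is given, so that filtering would happen before the call.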

We focus primarily on modern AI chip designs (NVIDIA V100 and newer), because these chips mark a shift towards large-scale industrial AI training runs. Prior to the introduction of NVIDIA’s V100, frontier AI training was spread across a wider variety of chip designs; pre-V100 chip designs trained an average of only 2.1 frontier models each, compared to an average of 9.3 frontier models per chip among V100 and newer designs. This latter paradigm is more relevant for characterizing current and future frontier training runs.

Among the 5 AI chip designs that are at least as new as the NVIDIA V100, the median lifespan from release until final use for frontier training is 3.9 years. For these newer chip designs, lifespans range from 2.3 to 4.5 years. In contrast, older AI chip designs generally saw shorter lifespans. The 7 chip designs released prior to the NVIDIA V100 have a median frontier lifespan of 2.6 years, with individual lifespans ranging from 0.6 to 3.7 years. Considering all chip designs at once, the median frontier lifespan is 2.7 years.
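
The lifespan calculation itself is simple date arithmetic. The following sketch assumes a hypothetical input layout (a chip-to-release-date mapping and a list of frontier models with the chips they used) and converts days to years by dividing by 365.25. Both choices are illustrative assumptions, not details taken from the analysis code.

```python
from datetime import date
from statistics import median

def frontier_lifespans_years(release_dates, frontier_runs):
    """Years from each chip's release to the publication date of the last
    frontier model trained on it.

    release_dates: dict mapping chip name -> release date
    frontier_runs: list of (publication_date, [chip names]) pairs; a model
        trained on several chip designs counts toward each of them.
    """
    last_use = {}
    for published, chips in frontier_runs:
        for chip in chips:
            # Skip chips with no known release date; keep the latest date.
            if chip in release_dates and published > last_use.get(chip, date.min):
                last_use[chip] = published
    return {chip: (last - release_dates[chip]).days / 365.25
            for chip, last in last_use.items()}

# Made-up release and publication dates for two hypothetical chips.
releases = {"chip-a": date(2018, 1, 1), "chip-b": date(2020, 1, 1)}
runs = [
    (date(2019, 1, 1), ["chip-a"]),
    (date(2022, 1, 1), ["chip-a", "chip-b"]),  # multi-chip model
    (date(2021, 1, 1), ["chip-b"]),
]
lifespans = frontier_lifespans_years(releases, runs)
median_lifespan = median(lifespans.values())
```

Chips that may still be in use would be dropped before taking the median, as described earlier in this section.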

We also calculate the lifespan of AI chip designs when considering all models in the Notable Models dataset, rather than only frontier AI models. The Notable Models documentation explains how we define a notable model. We follow the same filtering process as for frontier AI models, finding that the 95th percentile of the interval between successive notable models trained on a given AI chip design is 6 months. After removing AI chip designs used to train a notable model published within the last 6 months, we are left with 17 AI chip designs used to train notable models in our dataset. Among the 9 chip designs at least as new as the NVIDIA V100, lifespans from initial release to final use range from 0.3 to 6.5 years, with a median of 2.1 years. This median is lower than the one for frontier models because non-frontier models are trained on a wider variety of chips; most of the chips that do not appear among frontier models are significantly less popular, and consequently tend to have shorter lifespans.

Finally, note that our endpoints are defined by the publication date of the last known model trained using each chip. In practice, there is often a delay of weeks or months between the completion of training and a model’s public announcement. Our results are thus a weak upper bound on the duration between AI chip release and completion of the final frontier (or notable) training run.

Assumptions

- We assume that AI chip designs such as NVIDIA A100 or Google TPU v4 will not be used to train a frontier AI model in the future. We think this is likely to be correct, because it has been more than 7 months since they were last used for a frontier training run, and the gap between successive frontier training runs on the same chip design is shorter than that in 95% of cases in our empirical data. However, it is hard to rule out the possibility that these or older chips will be used in a future frontier training run. If this assumption fails, our analysis would slightly underestimate the frontier lifespan of AI chip designs. Similar considerations apply to the AI chip design lifespans for notable AI models.

- We assume that missing data in our Notable Models database does not bias our estimate significantly. We identified the chip designs used for 64 of the 88 frontier AI models. For the 24 remaining models, we narrowed down plausible chip designs and determined that 15 of these cases would not affect our results, leaving relevant uncertainty for only 9 frontier models. These frontier models with unknown training hardware are a potential source of measurement error and bias, but since they represent only about 10% of all identified frontier models, any effect is likely to be small.