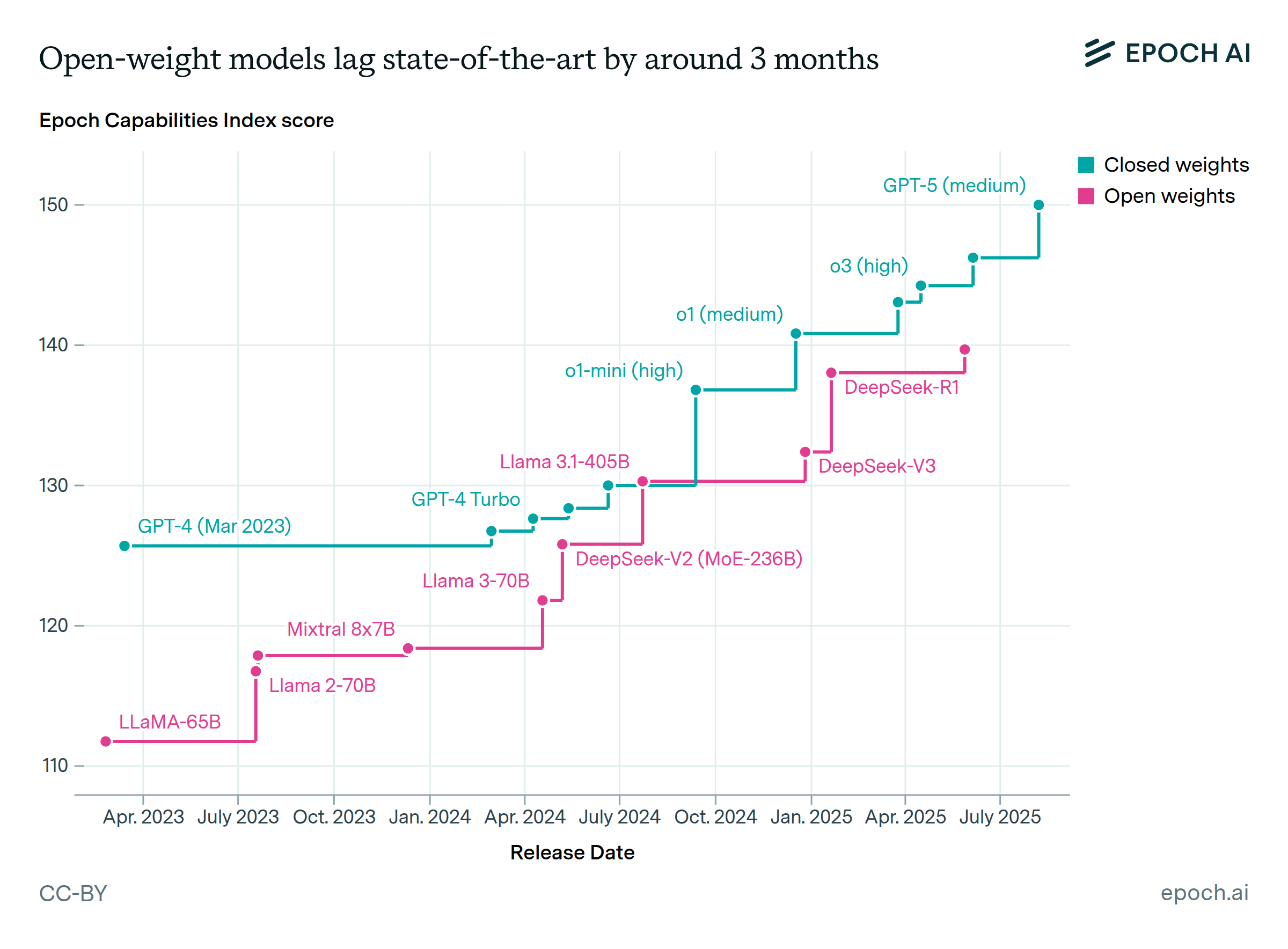

Models with downloadable weights currently lag behind the top-performing models

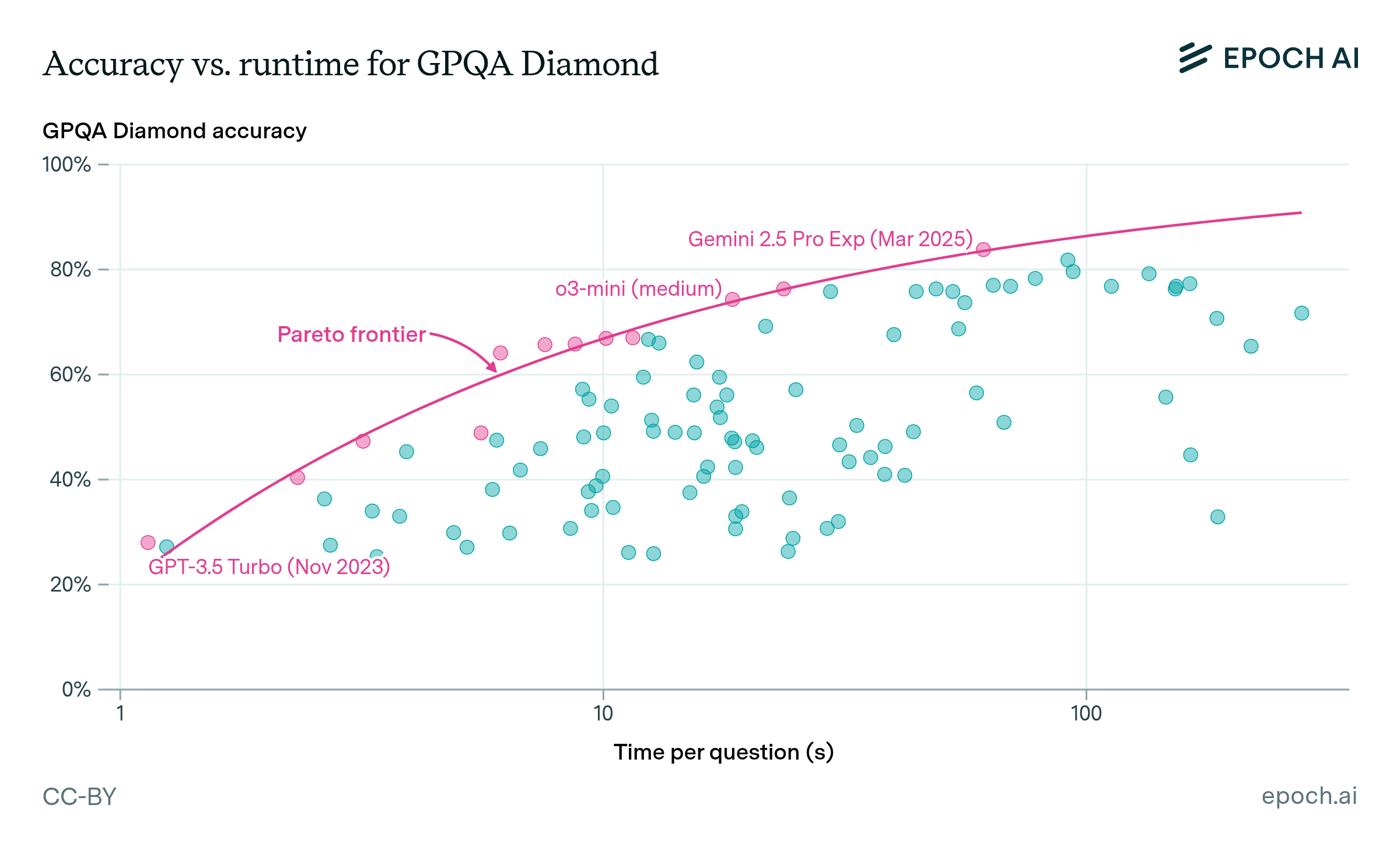

The models with the highest GPQA Diamond and MATH Level 5 accuracies tend not to have downloadable weights. For example, on GPQA Diamond, OpenAI’s o1 outperformed Phi-4, the best-performing downloadable model at the time, by 20 percentage points. Similarly, on MATH Level 5, Phi-4 lagged behind o1 by 29 percentage points.

We analyzed how far open models lag behind in more detail in this article, where we found that the best-performing open LLMs trailed the best-performing closed LLMs by anywhere from 6 months (on GPQA Diamond) to 20 months (on MMLU).

However, the release of DeepSeek-R1 in January 2025 showed that the performance gap between open-weights and closed-weights models has narrowed significantly. For example, on MATH Level 5, DeepSeek-R1 lags behind the current best-performing model, o3-mini, by only 2 percentage points.