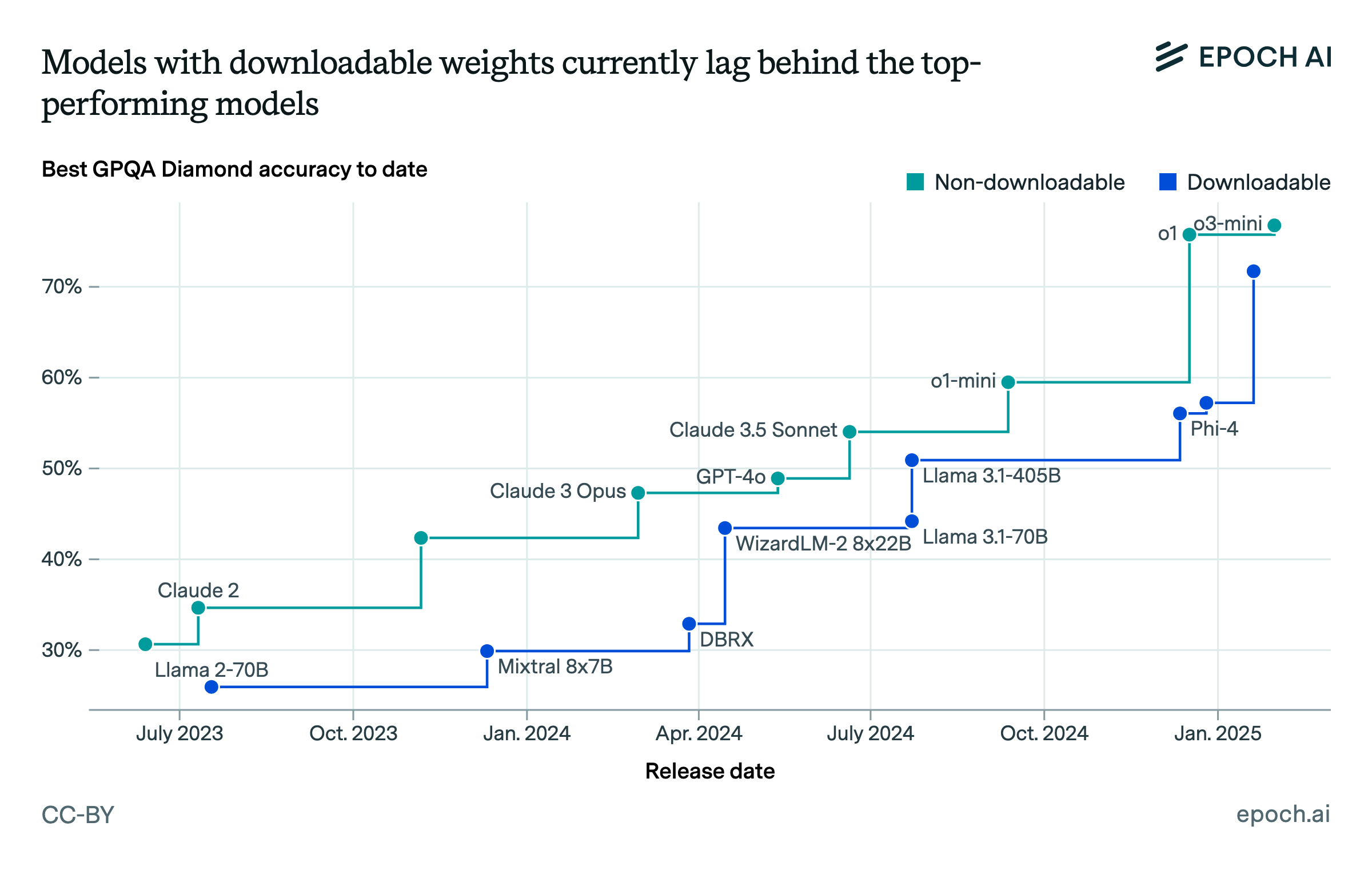

Open-weight models lag state-of-the-art by around 3 months on average

Frontier open-weight models lag behind the most capable closed-weight models by around 3 months on average, as measured by the Epoch Capabilities Index (ECI), our holistic measure of model capability. This corresponds to an average ECI gap of around 7 points, similar to the gap between o3 and GPT-5.

However, the gap varies considerably over time and sometimes closes completely. Until the release of o1-mini, Llama 3.1-405B was rated on par with the closed-weight state-of-the-art model, Claude 3.5 Sonnet.

A more detailed analysis of the gap is available in our earlier article.

Published October 30, 2025


Overview

We calculate the average gap between closed-weight and open-weight state-of-the-art performance according to our internal capability metric, the Epoch Capabilities Index (ECI). ECI is a composite measure that captures performance across many benchmarks.

Analysis

To calculate the average time gap, we measure the horizontal distance between the closed-weight and open-weight frontier lines at each ECI value where both lines can be drawn, and average across that range. Since ECI is estimated with some noise, we count an open-weight model as having “caught up” to a previous state-of-the-art model if the difference between their scores is not statistically significant. For example, DeepSeek-V2 is counted as having caught up to GPT-4 after about 14 months, despite scoring 125 on ECI vs. GPT-4’s 126.
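The time-gap procedure can be sketched in Python. This is a minimal illustration under stated assumptions, not the analysis code linked from this page: the `Model` record, the per-model standard error `se`, the one-sided z-test with `z=1.645`, the 1-point ECI grid, and the 30.44-day month are all hypothetical choices. For each ECI level, the sketch finds the first closed-weight model to reach it, then the earliest open-weight model whose score is not significantly below that model's, and averages the release-date differences over the levels where such an open-weight model exists.

```python
import math
from dataclasses import dataclass
from datetime import date

DAYS_PER_MONTH = 30.44  # assumed average month length used to convert days to months

@dataclass
class Model:
    name: str
    released: date
    eci: float
    se: float          # hypothetical standard error of the ECI estimate
    open_weight: bool

def not_significantly_below(candidate: Model, target: Model, z: float = 1.645) -> bool:
    """True unless candidate's ECI is significantly lower than target's (one-sided z-test)."""
    return target.eci - candidate.eci <= z * math.hypot(candidate.se, target.se)

def average_time_gap_months(models: list[Model], step: float = 1.0, z: float = 1.645) -> float:
    closed = [m for m in models if not m.open_weight]
    open_ = [m for m in models if m.open_weight]
    gaps = []
    level = min(m.eci for m in closed)
    top = max(m.eci for m in closed)
    while level <= top:
        # The first closed-weight model to reach this level sets the frontier here
        leader = min((m for m in closed if m.eci >= level), key=lambda m: m.released)
        # Earliest open-weight model that statistically matches the leader
        catcher = min(
            (m for m in open_ if not_significantly_below(m, leader, z)),
            key=lambda m: m.released,
            default=None,
        )
        if catcher is not None:  # skip levels that open-weight models have not reached yet
            # Assumption: an open-weight model that matched earlier counts as a gap of 0
            days = max(0, (catcher.released - leader.released).days)
            gaps.append(days / DAYS_PER_MONTH)
        level += step
    return sum(gaps) / len(gaps) if gaps else float("nan")
```

Averaging over a grid of ECI levels, rather than over individual models, weights each stretch of the frontier by how much capability it spans.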

We use a similar procedure to estimate the average ECI gap, measuring the vertical distance between the two frontier lines on every date where both lines can be drawn.

We find an average “horizontal” time gap of 3.5 months, with a 90% confidence interval of 1.1 to 5.3 months. Along the vertical dimension, we find an average gap of 7 ECI points, with a 90% confidence interval of 0 to 14 points.

We also note that the current gap likely appears larger than it really is: we do not yet have enough evaluations of frontier open-weight models such as gpt-oss-120b or MiniMax-M2 to assign them ECI scores, but they likely improve on DeepSeek R1 (May 2025).

Code for our analysis is available here.