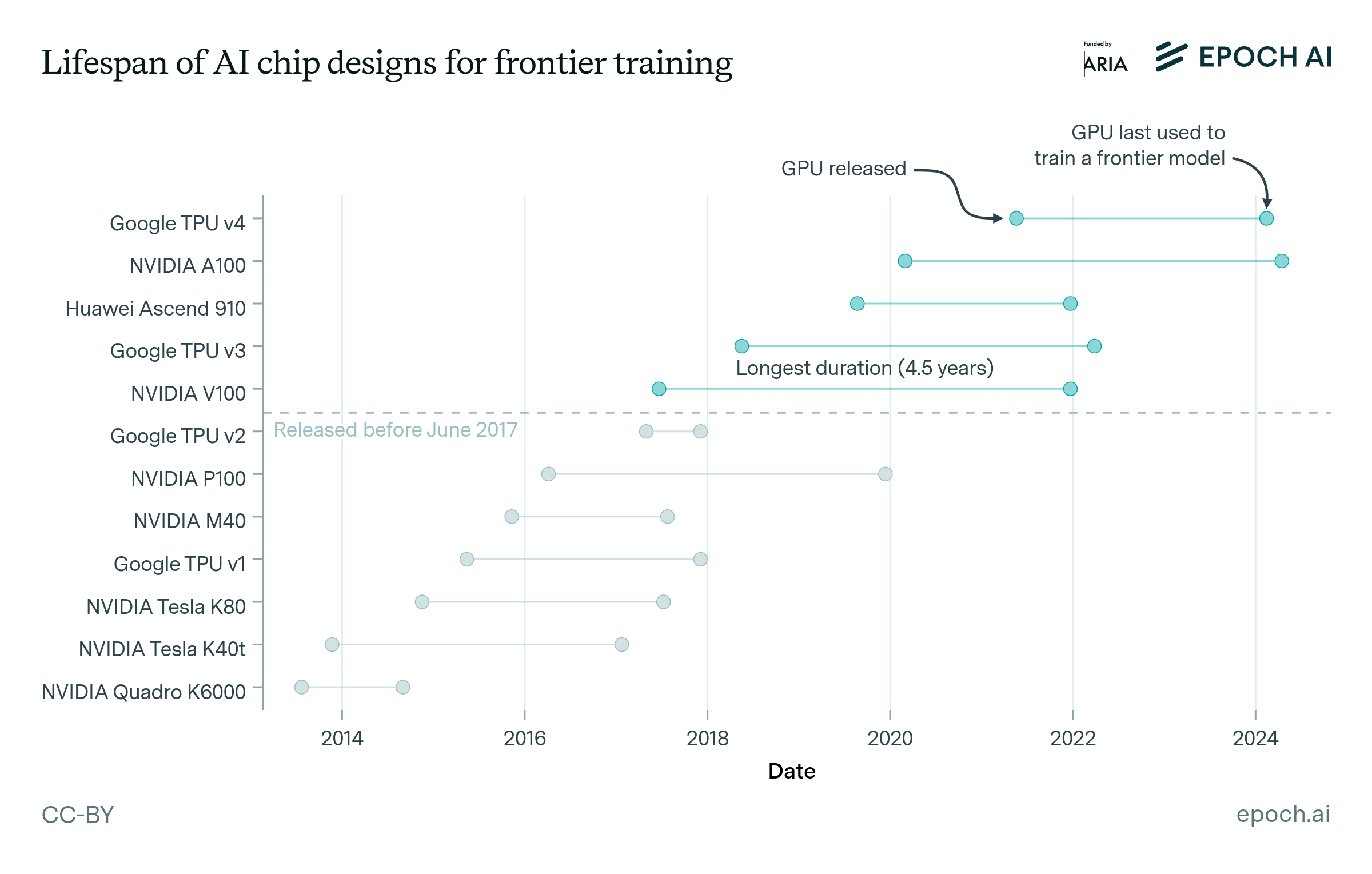

Widespread adoption of new numeric formats took 3-4 years in past cycles

In past cycles, new numerical formats have taken up to four years to reach widespread adoption in model training. Both FP16 and BF16 gained early traction within their first two years, reached 50% adoption by around year three,[1] and became the default within five years. These shifts brought gains in computational performance and training stability, but they depended on hardware support for each format.

BF16 is now late in its roughly four-year adoption cycle, and FP8 training is beginning to emerge. If past adoption patterns hold, FP8 could become the standard by around 2028. Further out, 4-bit training may follow and eventually become the primary numerical format, although training stability at such low precision remains a challenge.

Published May 28, 2025

Overview

We analyze historical adoption trends of the numerical precision formats (e.g., FP32, FP16, BF16) used in the original training of AI models. To determine these precisions, we automatically extract text and code about training configurations from each model’s GitHub repository and technical report. A two-stage LLM analysis then interprets this extracted text to assign a final precision label to each model, provide supporting evidence, and cite its sources. This process yields the dataset of training precisions we use to map adoption cycles over time.

Code for this analysis is available here.

Data

We use Epoch’s Notable AI Models dataset to analyze trends in the numerical formats used for training. We begin with 837 models released between 2008 and 2025. For each model, we compile links to its technical report and GitHub repository, where available. Open-source models are more likely to have public training code, while closed-source models often still publish detailed technical reports, which occasionally let us extract or infer training precision from the available documentation.

We identify the numerical format used during training for 272 of the 837 models. Of these, 112 are open-source models; the remaining 160 are either closed-source or lack accessibility information.

To validate our results, we also analyze trends in Epoch’s larger “All Models” dataset, which includes both notable and non-notable models from the same 2008-2025 period. Of the 1,500+ models in this expanded dataset, we identify training precision for 570. The trends from the two datasets are very similar.

Analysis

We develop a Python script that automatically extracts information on training numerical format from these sources. The script ingests and processes the collected technical reports and GitHub repository code, focusing on relevant files such as training scripts.
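As a rough illustration of the file-filtering step, the sketch below walks a cloned repository and keeps files whose names and extensions suggest training code or configuration. The suffix set, the name hints, and the function names `is_relevant` and `collect_relevant_files` are hypothetical; the original script's filters are not specified.

```python
from pathlib import Path

# Hypothetical filters: the original script's exact file-selection rules are not published.
RELEVANT_SUFFIXES = {".py", ".sh", ".yaml", ".yml", ".json", ".toml", ".cfg", ".md"}
NAME_HINTS = ("train", "config", "finetune", "pretrain", "launch", "readme")


def is_relevant(path: Path) -> bool:
    """Return True if a file looks like training code, configuration, or docs."""
    if path.suffix.lower() not in RELEVANT_SUFFIXES:
        return False
    name = path.name.lower()
    return any(hint in name for hint in NAME_HINTS)


def collect_relevant_files(repo_root: str) -> list[Path]:
    """Walk a cloned repository and return candidate files in a stable order."""
    root = Path(repo_root)
    return sorted(p for p in root.rglob("*") if p.is_file() and is_relevant(p))
```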

To collect the most relevant context for determining the numerical format used in training, we employ a ‘smart chunking’ technique. The script scans the extracted content for a predefined list of keywords (e.g., ‘fp16’, ‘dtype’, ‘mixed_precision’, ‘torch.amp’) that indicate numerical precision or training parameters. For each keyword match, it captures the 3,000 characters before and after the keyword to preserve context. Segments that are close enough to overlap are merged into a single, larger segment, so that related information is not artificially split. The segments are then concatenated, with markers indicating where intermediate text has been omitted, to form a condensed version of the original content that retains only the relevant information.
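The keyword scan, window capture, and merging of overlapping windows can be sketched as follows. This is a minimal version assuming case-insensitive substring matching; only the four example keywords and the 3,000-character window come from the text, while the omission-marker wording and the name `smart_chunk` are illustrative.

```python
import re

# Example keywords from the text; the full predefined list is not reproduced here.
KEYWORDS = ["fp16", "dtype", "mixed_precision", "torch.amp"]
WINDOW = 3000  # characters kept before and after each keyword match
OMISSION_MARKER = "\n[... omitted ...]\n"  # illustrative marker text


def smart_chunk(text: str, keywords: list[str] = KEYWORDS, window: int = WINDOW) -> str:
    """Keep context windows around keyword matches, merging windows that overlap."""
    pattern = re.compile("|".join(re.escape(k) for k in keywords), re.IGNORECASE)
    # One (start, end) window per match, clipped to the text bounds.
    # finditer yields matches left to right, so the windows are already sorted by start.
    spans = [
        (max(0, m.start() - window), min(len(text), m.end() + window))
        for m in pattern.finditer(text)
    ]
    if not spans:
        return ""
    # Merge windows that overlap or touch so related context stays together.
    merged = [spans[0]]
    for start, end in spans[1:]:
        last_start, last_end = merged[-1]
        if start <= last_end:
            merged[-1] = (last_start, max(last_end, end))
        else:
            merged.append((start, end))
    # Concatenate kept segments, marking every place where text was dropped.
    parts = []
    if merged[0][0] > 0:
        parts.append(OMISSION_MARKER)
    for i, (start, end) in enumerate(merged):
        if i > 0:
            parts.append(OMISSION_MARKER)
        parts.append(text[start:end])
    if merged[-1][1] < len(text):
        parts.append(OMISSION_MARKER)
    return "".join(parts)
```

Merging on `start <= last_end` also joins windows that merely touch, which keeps a single markerless segment when two matches are exactly one window apart.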

The script then performs a two-stage analysis using GPT-4.1. In the first stage, the chunked content from each source is sent to the model in a single LLM call, unless it exceeds size limits, in which case it is split across multiple calls. Each call returns structured findings consisting of a key (e.g., ‘training_precision’), a value (e.g., ‘bf16’), and supporting evidence. In the second stage, the script aggregates the findings across all sources and makes a final LLM call to produce a synthesized summary and determination. This summary call weighs the evidence, prioritizes higher-quality sources (e.g., technical reports over code that may have been updated since release), resolves conflicts, and assigns a concise label (e.g., “FP16 / Mixed”, “BF16”, or “No training precision info found”). The label reflects either the most likely precision used in the model’s original training or the absence of relevant precision information in the available sources.
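The first-stage fan-out can be sketched as below. The `Finding` fields mirror the key, value, and evidence described above, plus a `source` field added here so later steps can tell reports from repositories. `MAX_CHARS`, `split_for_calls`, `run_stage_one`, and the `call_llm` callable (a stand-in for a GPT-4.1 request that returns parsed findings) are all hypothetical; the actual size limit and API wrapper are not described.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    """One structured finding from a first-stage LLM call."""
    key: str       # e.g. "training_precision"
    value: str     # e.g. "bf16"
    evidence: str  # supporting text quoted from the source
    source: str    # e.g. "technical_report" or "github_repo"


# Hypothetical per-call size limit in characters; the real limit is not stated.
MAX_CHARS = 100_000


def split_for_calls(chunked: str, max_chars: int = MAX_CHARS) -> list[str]:
    """Split chunked content into pieces that each fit in one LLM call."""
    if len(chunked) <= max_chars:
        return [chunked]
    return [chunked[i:i + max_chars] for i in range(0, len(chunked), max_chars)]


def run_stage_one(
    sources: dict[str, str],
    call_llm: Callable[[str, str], list[Finding]],
) -> list[Finding]:
    """Stage 1: one call per source, or one per piece if the source is too large."""
    findings: list[Finding] = []
    for source_name, chunked in sources.items():
        for piece in split_for_calls(chunked):
            findings.extend(call_llm(source_name, piece))
    return findings
```

This sketch splits on fixed character boundaries for simplicity; splitting at the omission markers would avoid cutting a context window in half. The second stage would then pass the full list of findings to one summary call.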

The script prioritizes technical reports over repositories when resolving conflicting signals, and explicitly distinguishes between direct evidence (e.g., config files or paper statements) and high-confidence inferences (e.g., use of torch.amp suggesting mixed precision). For each model, we produce a standardized label for the original training precision (e.g., “BF16”, “FP16 / Mixed”) along with citations.
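In the pipeline itself, conflicts are resolved by the summary LLM call rather than by fixed rules. The deterministic stand-in below only illustrates the stated priorities: technical reports rank above repositories, and direct evidence ranks above inference. Ranking source type before evidence type, the rank tables, and the names `Evidence` and `resolve` are assumptions made for this example.

```python
from dataclasses import dataclass

# Lower rank = preferred. Checking source before evidence kind is an assumption.
SOURCE_RANK = {"technical_report": 0, "github_repo": 1}
EVIDENCE_RANK = {"direct": 0, "inferred": 1}
NO_INFO = "No training precision info found"


@dataclass
class Evidence:
    label: str     # e.g. "BF16" or "FP16 / Mixed"
    source: str    # "technical_report" or "github_repo"
    kind: str      # "direct" (config file, paper statement) or "inferred" (e.g. torch.amp usage)
    citation: str  # where the evidence was found


def resolve(evidence: list[Evidence]) -> tuple[str, list[str]]:
    """Return the label backed by the highest-ranked evidence, plus its citations."""
    if not evidence:
        return NO_INFO, []
    # Unknown source or evidence types rank last.
    best = min(
        evidence,
        key=lambda e: (SOURCE_RANK.get(e.source, 2), EVIDENCE_RANK.get(e.kind, 2)),
    )
    # Cite every piece of evidence that agrees with the chosen label.
    citations = [e.citation for e in evidence if e.label == best.label]
    return best.label, citations
```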

When producing the figure above, we collapse labels such as “FP16” and “FP16 / Mixed” into a single category, since most computations in mixed precision training are performed at the lower precision.
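The label collapsing used for the figure amounts to a small mapping. This sketch assumes labels follow the “FORMAT” or “FORMAT / Mixed” pattern shown above; the `KNOWN_FORMATS` tuple and the `collapse_label` name are illustrative.

```python
# Formats assumed to appear as the first part of a label such as "FP16 / Mixed".
KNOWN_FORMATS = ("FP32", "FP16", "BF16", "FP8")


def collapse_label(label: str) -> str:
    """Group 'FORMAT / Mixed' with 'FORMAT'; leave other labels unchanged."""
    head = label.split("/")[0].strip().upper()
    return head if head in KNOWN_FORMATS else label
```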

Assumptions and limitations

Our data collection relies on publicly available details about each model’s training. We are unable to determine numerical formats for many high-profile models released in recent years, since these have tended to disclose fewer training details. This may introduce some bias, especially since early adopters of new formats tend to be frontier models (often around or above the 90th percentile of training compute for their year).

We assume that the training precision formats identified in technical reports and GitHub repositories accurately reflect the precision used during each model’s original training phase. In some cases, training precision is stated explicitly. In others, it is inferred from configuration defaults, library usage (e.g., torch.amp), or other implementation details. Our script distinguishes between direct and implied evidence, but there is still a risk of misclassification, particularly when the training code in a model’s GitHub repository has been updated after its initial release or when precision details are under-specified.

To assess the accuracy of our automated data collection pipeline, we construct a hand-labeled test set of 194 rows covering a subset of models. For each row, we manually determine the training format from technical reports, blog posts, and code. Comparing these ground-truth labels to the script’s outputs, we find 8 cases where the script is incorrect. These cases typically required inferring precision from contextual clues, such as the hardware available at the time of training or the default precision of the libraries in use. In some instances, different sources provided conflicting information and the LLM failed to express appropriate uncertainty. In others, the LLM was overly eager to identify precision information, drawing confident conclusions from weak or ambiguous evidence.
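Treating each of the 194 test rows as one comparison, the reported error count corresponds to the agreement rate below. This is a derived figure computed from the counts above, not a separately reported result.

```latex
\text{accuracy} = \frac{194 - 8}{194} = \frac{186}{194} \approx 0.959 \quad (\text{error rate } \tfrac{8}{194} \approx 4.1\%)
```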

We assume that prioritizing technical reports over GitHub repositories more reliably captures the training-time configuration, rather than later modifications or fine-tuning settings. However, this prioritization may introduce errors if a report omits key training details that are specified only in the accompanying code.