GPT-5 and GPT-4 were both major leaps in benchmarks from the previous generation

At launch, GPT-4 was widely seen as a step-change over GPT-3, demonstrating the high returns to scaling up training compute. GPT-5 arguably drew a more mixed reception. Yet GPT-5 far outperforms GPT-4 on some notable capability benchmarks, much as GPT-4 improved on GPT-3 on the widely cited benchmarks of its day. While these improvements are not directly comparable, they do suggest that GPT-5 and GPT-4 were both large advances over the previous generation.

However, one major difference between these generations is release cadence. OpenAI released relatively few major updates between GPT-3 and GPT-4 (most notably GPT-3.5). By contrast, frontier AI labs released many intermediate models between GPT-4 and 5. This may have muted the sense of a single dramatic leap by spreading capability gains over many releases.

Published August 29, 2025

Overview

We contextualize the advance from GPT-4 to GPT-5 by comparing benchmark gains from GPT-4 to GPT-5 with those from GPT-3 to GPT-4. While there is no single, objective measure of long-term AI improvement, both GPT-4 and GPT-5 show dramatic gains over their predecessors on benchmarks that were salient in their respective time periods.

Data

We obtain benchmarking scores for leading OpenAI models from Epoch’s AI Benchmarking Hub, and supplement this by searching for reports of benchmark scores in model release announcements and on benchmarking leaderboards. You can find more details on these benchmark scores in this sheet.

Analysis

We use a naive approach to comparing benchmark progress: we simply measure the change in score since the previous major GPT version. To capture “perceived progress”, we aimed to select benchmarks that were widely cited in the AI community during the periods between GPT-3 and GPT-4 and between GPT-4 and GPT-5, and in particular those on which the earlier version struggled.

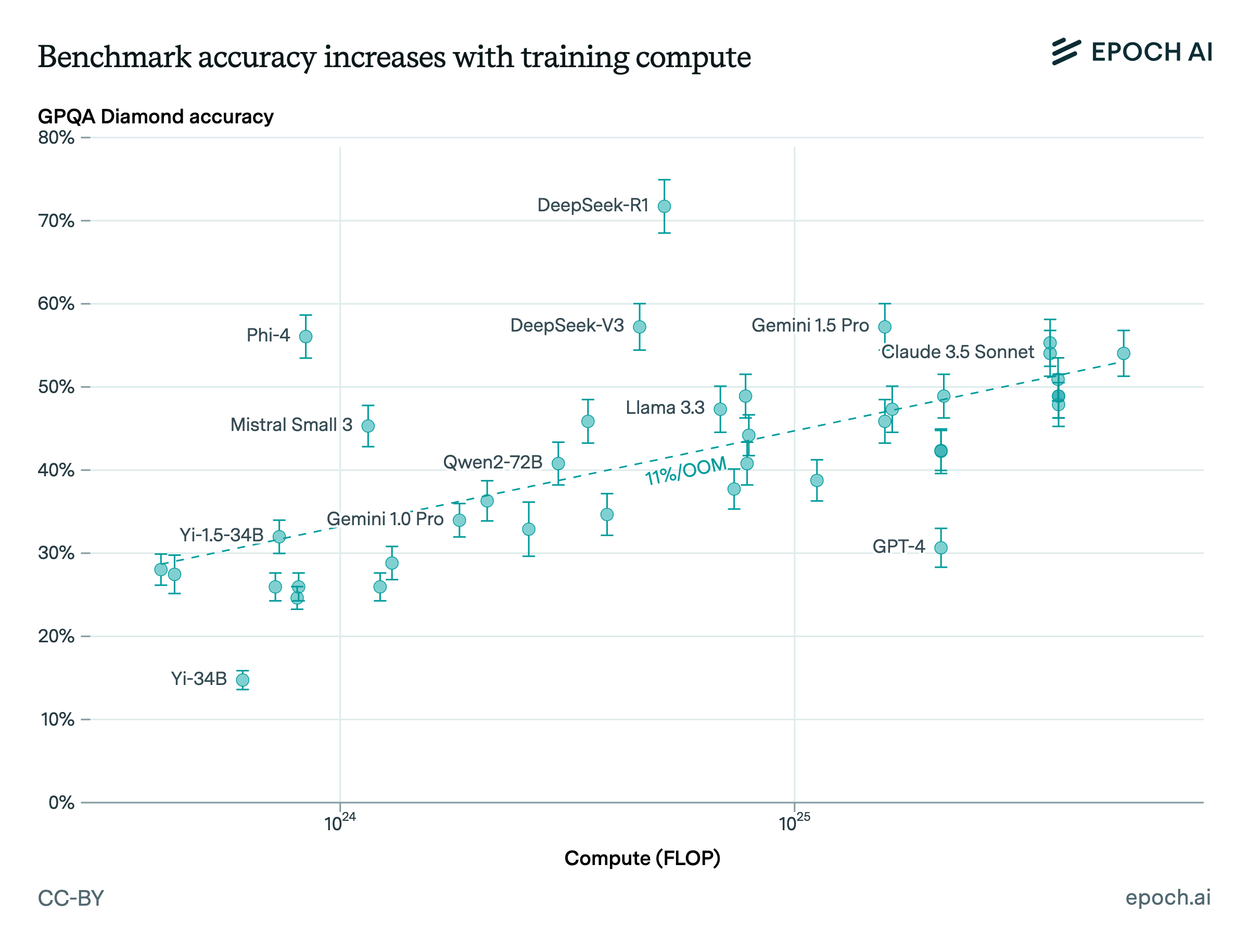

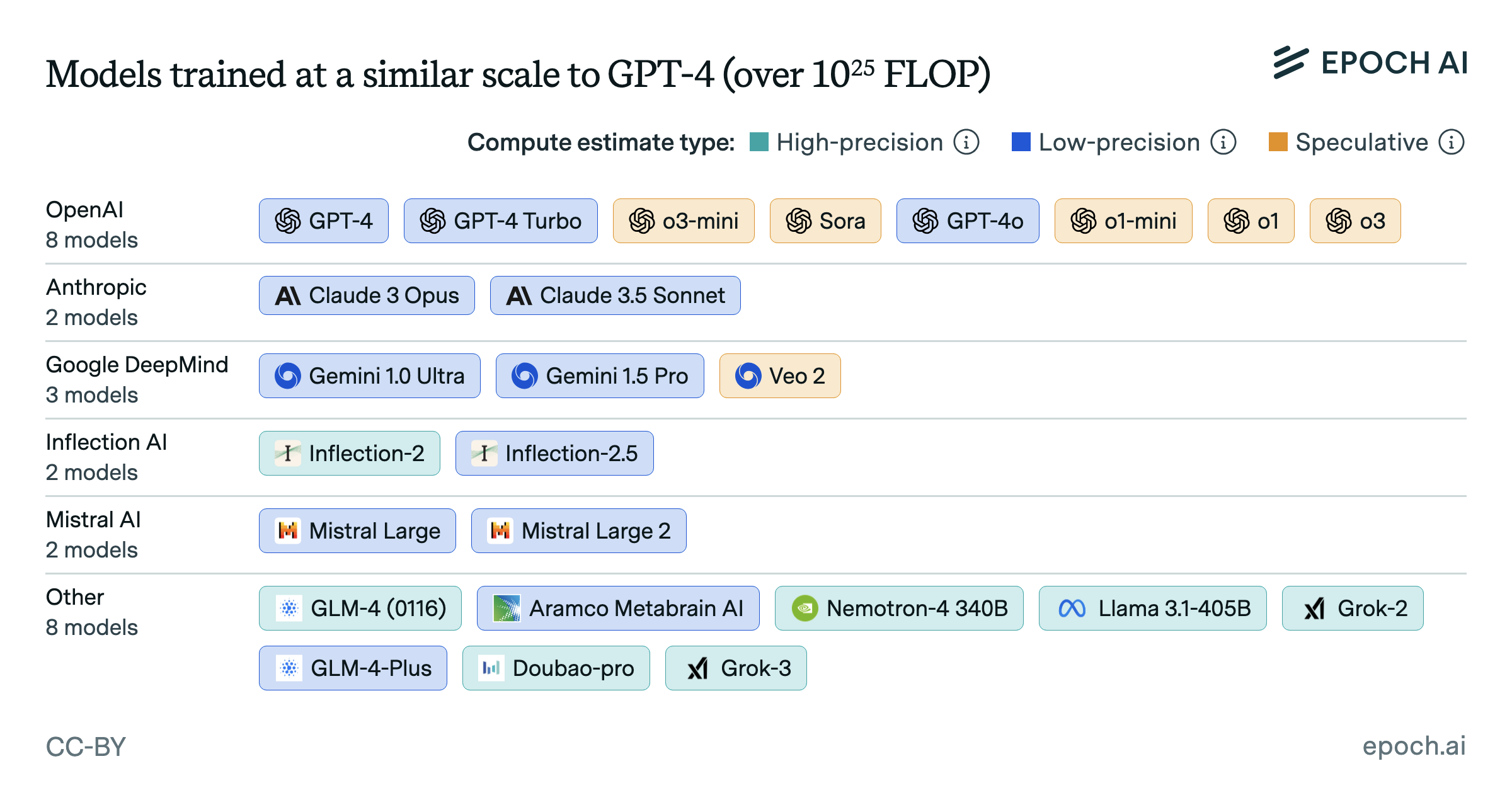

Direct benchmark comparisons between GPT-3, GPT-4, and GPT-5 are difficult because most benchmarks where GPT-3 achieved a non-trivial score were “saturated” before GPT-5’s release, with scores approaching 100% (for example, OpenAI’s o3 achieved 93% on MMLU, and o4-mini achieved 99% on HumanEval). One notable attempt to measure long-term AI progress is METR’s “time horizons” analysis, which finds exponential improvement in the complexity of tasks that AI can do, in terms of the time humans need to accomplish those tasks. GPT-5 achieved a 25x improvement in 50% time horizon from GPT-4, compared to GPT-4’s 36x improvement on GPT-3, and both improvements are consistent with the longer-term trend.
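
One way to read the two time-horizon multipliers quoted above is to convert them into doublings. The sketch below does only that arithmetic, using the 25x and 36x figures from the text. The function name `doublings` is our own illustration and is not part of METR's analysis.

```python
import math


def doublings(multiplier: float) -> float:
    """Return the number of doublings implied by a multiplicative improvement."""
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return math.log2(multiplier)


# Multipliers quoted in the text: 50% time horizon improvement per generation.
gpt3_to_gpt4 = doublings(36)
gpt4_to_gpt5 = doublings(25)

print(f"GPT-3 to GPT-4: {gpt3_to_gpt4:.2f} doublings")
print(f"GPT-4 to GPT-5: {gpt4_to_gpt5:.2f} doublings")
```

On this reading, the two generational jumps correspond to roughly 5.2 and 4.6 doublings of the 50% time horizon, respectively.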

Because we were unable to obtain evaluations of the initial March 2023 release of GPT-4 for GPQA Diamond, Mock AIME 24-25, and MATH Level 5, we compare GPT-5’s performance to the earliest available version of GPT-4. For GPQA Diamond and MATH Level 5, this was GPT-4-0613, an incremental update released in June 2023, and for Mock AIME 24-25 it was GPT-4-Turbo-2024-04-09, a more substantial update from April 2024. Since these versions were updates to the initial release, the resulting scores provide a loose lower bound on the gain between the initial GPT-4 and GPT-5.

Assumptions and limitations

We aimed to be unbiased in our benchmark selection process, but comparing a single metric of progress across multiple benchmarks is a difficult and subjective exercise without a far more thorough statistical analysis. We plan to do follow-up work to improve the objectivity of these results.

For simplicity, we highlight absolute progress in benchmark performance, measured as the raw percentage-point improvement, rather than relative progress. This metric is imperfect across some ranges. For example, improving from 90% to 95% on a given benchmark is arguably more difficult and more significant than improving from 50% to 55%, but the current analysis does not reflect this.
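
To make the distinction concrete, the sketch below contrasts the percentage-point gain used in this analysis with one possible relative metric: the share of remaining errors eliminated. That relative metric is our own illustrative choice, not one used in the analysis, and the scores are the hypothetical ones from the example above rather than measured results.

```python
def percentage_point_gain(old: float, new: float) -> float:
    """Absolute gain in percentage points (scores given in percent)."""
    return new - old


def relative_error_reduction(old: float, new: float) -> float:
    """Fraction of the remaining errors eliminated (scores given in percent).

    Illustrative alternative metric; not used in the analysis itself.
    """
    old_error = 100.0 - old
    if old_error <= 0:
        raise ValueError("old score must be below 100%")
    return (new - old) / old_error


# Hypothetical score pairs taken from the example in the text.
for old, new in [(90.0, 95.0), (50.0, 55.0)]:
    pp = percentage_point_gain(old, new)
    rer = relative_error_reduction(old, new)
    print(f"{old:.0f}% -> {new:.0f}%: +{pp:.0f} pp, "
          f"{rer:.0%} of remaining errors removed")
```

Both pairs show the same 5 percentage-point gain, but the move from 90% to 95% removes half of the remaining errors, while the move from 50% to 55% removes only a tenth.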