Frontier AI performance becomes accessible on consumer hardware within a year

Using a single top-of-the-line gaming GPU like NVIDIA’s RTX 5090 (under $2500), anyone can locally run models matching the absolute frontier of LLM performance from just 6 to 12 months ago. This lag is consistent with our previous estimate of a 5 to 22 month gap for open-weight models of any size. However, small open models are more likely to be optimized for specific benchmarks, so the “real-world” lag may be somewhat longer.

Several factors drive this democratizing trend: open-weight models are scaling at a rate comparable to the closed-source frontier, techniques like model distillation have proven successful, and continual progress in GPUs enables larger models to be run at home.

Published

August 15, 2025

Overview

We find that leading open models runnable on a single consumer GPU typically match the capabilities of frontier models with an average lag of 6 to 12 months. This relatively short and consistent lag means that the most advanced AI capabilities become widely accessible for local development and experimentation in under a year.

Data

Model information, such as release date and parameter count, is drawn from original model reports, the Epoch AI Models database, and HuggingFace model cards. Benchmark scores are aggregated from the Epoch AI Benchmarking Hub, Artificial Analysis, LM Arena, and developer-reported results.

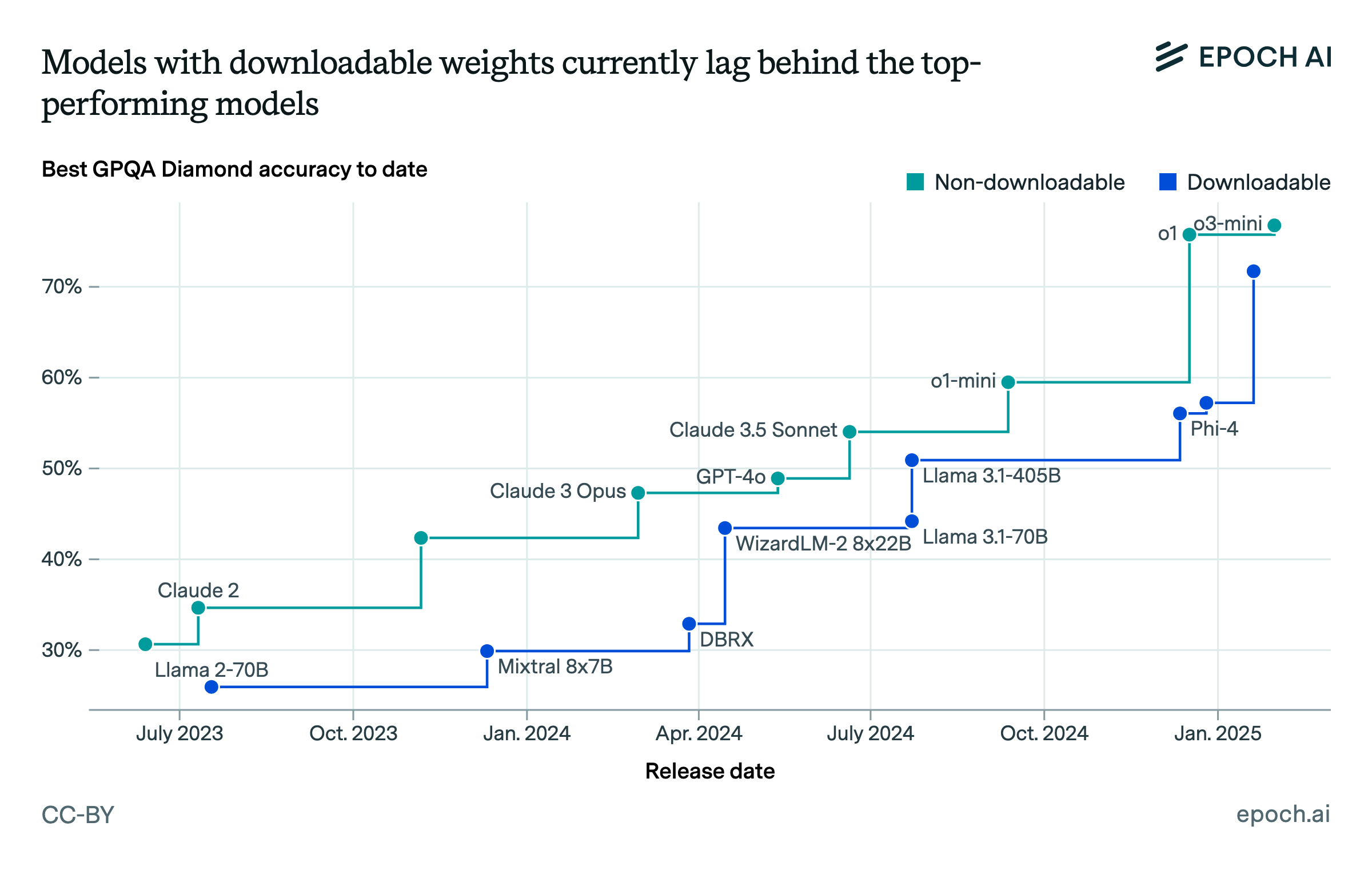

We analyze model performance across four benchmarks/metrics: GPQA-Diamond, MMLU-Pro, the Artificial Analysis Intelligence Index, and LM Arena Elo scores. We selected GPQA-Diamond and MMLU-Pro because they test a broad conception of knowledge and reasoning, have remained unsaturated over a reasonably long period, and have good data availability. We include the Artificial Analysis Intelligence Index as it provides a composite score of model performance. However, this index incorporates GPQA-Diamond and MMLU-Pro, so these metrics are correlated. Finally, we include LM Arena Elo scores to capture user preferences, though these scores have their own limitations.

Epoch’s Benchmarking Hub prioritizes models at or near the frontier of capabilities, and thus tends to underrepresent small, open models. To identify models that push the frontier of the capabilities available on a consumer GPU, we use the following steps:

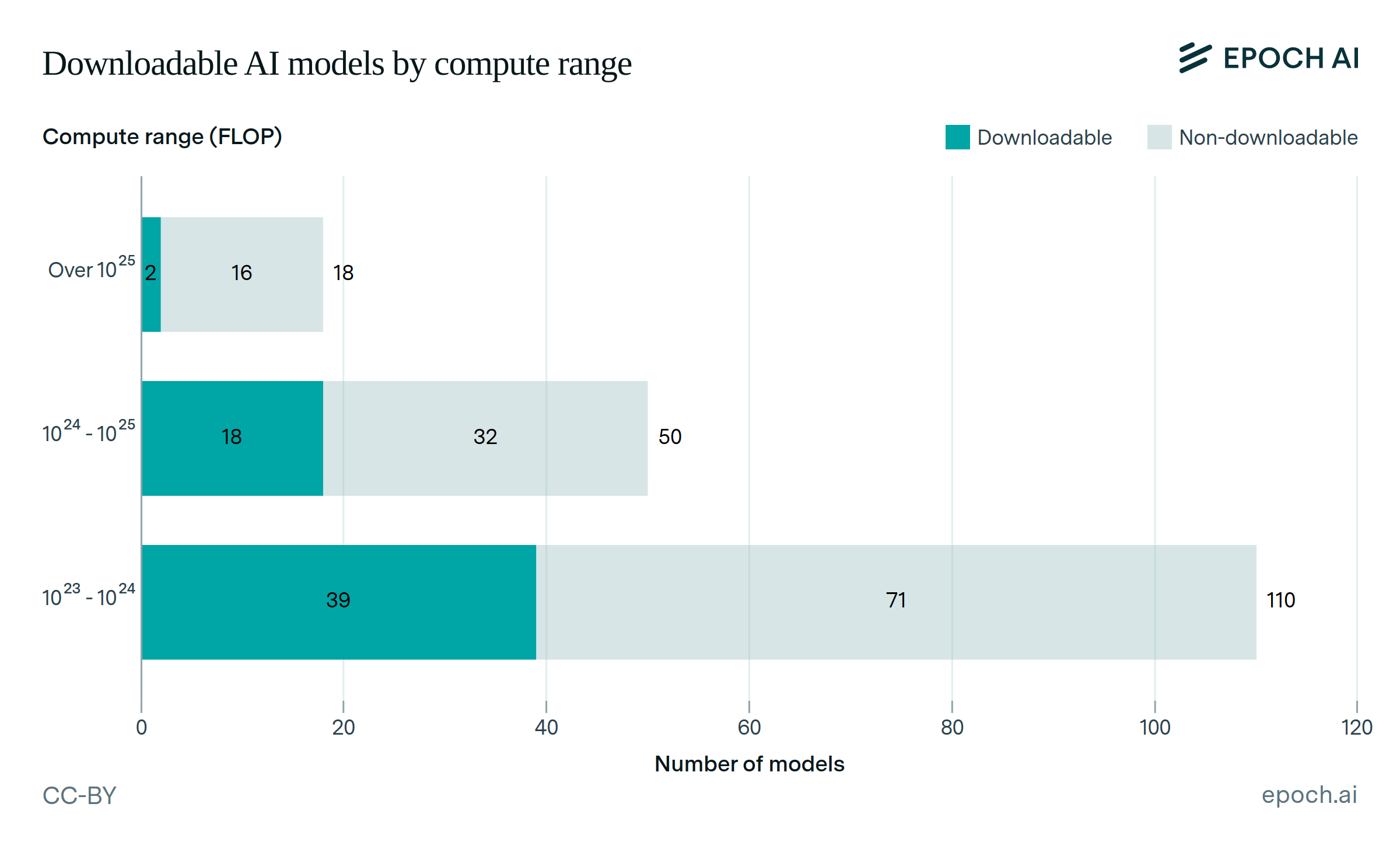

- Defining Hardware Constraints: We first establish the largest parameter size model that can be run on the NVIDIA consumer GPU at the time. Based on memory capacity and common quantization schemes, we defined this limit as ≤ 28B parameters for the NVIDIA RTX 4090 era and ≤ 40B parameters for the subsequent RTX 5090 era.

- Identifying SOTA Open Models: To create a candidate list of high-performing open models within these size constraints, we analyzed evaluation data from sources like the OpenLLM Leaderboard and Artificial Analysis. The OpenLLM Leaderboard has comprehensive coverage of open models and provides a strong signal for identifying which models were pushing the frontier of capabilities over time. However, the initiative ended in February 2025, so we supplemented and cross-checked it with data from Artificial Analysis to create a set of open models that were at or near the performance frontier for their respective time periods.

- Collecting and Prioritizing Scores: For each model, we sought a reliable benchmark score. We considered multiple sources and applied a hierarchy when collecting scores. Our prioritization was as follows: 1) Artificial Analysis benchmarking, as they have the most extensive GPQA-Diamond and MMLU-Pro coverage for small models, 2) Epoch AI Benchmarking Hub, and 3) developer-reported results (which tend to be slightly higher than third-party evaluations). For many older models, we relied exclusively on third-party evaluations. Some potentially significant open models are excluded from the analysis because no scores were available from any of the sources. For model Elo values, we pull the style-controlled scores from the LM Arena Text leaderboard.
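The source hierarchy in the last step can be sketched as a simple fallback lookup. This is a minimal illustration, not the authors' code: the source keys, the `pick_score` helper, and the example scores are all hypothetical.

```python
from typing import Optional

# Source priority as described in the text: earlier entries are preferred.
# The key names are hypothetical labels chosen for this sketch.
SOURCE_PRIORITY = [
    "artificial_analysis",
    "epoch_benchmarking_hub",
    "developer_reported",
]


def pick_score(scores_by_source: dict[str, Optional[float]]) -> Optional[tuple[str, float]]:
    """Return (source, score) from the highest-priority source that has a score."""
    for source in SOURCE_PRIORITY:
        score = scores_by_source.get(source)
        if score is not None:
            return source, score
    # No score from any source: the model would be excluded from the analysis.
    return None


# Hypothetical example values, for illustration only.
example = {
    "artificial_analysis": None,
    "epoch_benchmarking_hub": 41.0,
    "developer_reported": 44.5,
}
chosen = pick_score(example)
print(chosen)
```

Here the lookup falls through to the second source because the first has no score; a model with no entries at all returns `None`.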

We use data from Epoch AI’s Benchmarking Hub to plot the absolute frontier on GPQA-Diamond. To plot the absolute frontier on MMLU-Pro and the Artificial Analysis Intelligence Index, we use data from Artificial Analysis.

Analysis

A key component of our analysis is determining the largest model that a single RTX 4090 or RTX 5090 can run. We focus on models that fit entirely in a card’s VRAM with weights quantized to 4 bits. The total VRAM usage is modeled as:

\[ \text{VRAM Usage} = \text{Weights} + \text{KV Cache} + \text{Runtime Overhead} \]

We make the following calculations and assumptions:

- VRAM Conversion: We convert a card’s marketed VRAM from decimal gigabytes (GB) to binary gibibytes (GiB), which is standard for memory reporting. The RTX 4090’s 24 GB is ~22.35 GiB, and the RTX 5090’s 32 GB is ~29.80 GiB.

- Component Sizing: We assume typical values for each component:

  - Weights: We use an average of 0.54 bytes per parameter, which is typical of 4-bit quantized models.

  - KV Cache: We assume an 8K context length at INT8 precision for a model with Llama-class depth and width, resulting in a cache size of ~6.6 GiB.

  - Overhead: We add ~2 GiB for the CUDA context, allocator fragmentation, and other runtime needs.

Under these assumptions, the theoretical ceiling for an RTX 4090 is ~27B parameters (weights: 13.6 GiB + KV cache: 6.6 GiB + overhead: 2.0 GiB ≈22.2 GiB). For the RTX 5090, the theoretical ceiling is ~40B parameters.
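The arithmetic behind these ceilings can be reproduced with a few lines of Python. This is a rough sketch using only the values stated above (0.54 bytes per parameter, a 6.6 GiB KV cache, 2 GiB of overhead); the function names are our own.

```python
GIB = 2**30  # bytes per gibibyte


def marketed_gb_to_gib(gb: float) -> float:
    """Convert marketed decimal gigabytes to binary gibibytes."""
    return gb * 1e9 / GIB


def max_params_billions(
    vram_gb: float,
    bytes_per_param: float = 0.54,  # 4-bit quantized weights, per the text
    kv_cache_gib: float = 6.6,      # 8K context at INT8, per the text
    overhead_gib: float = 2.0,      # CUDA context, fragmentation, etc.
) -> float:
    """Largest parameter count (in billions) whose weights fit in the remaining VRAM."""
    weights_budget_gib = marketed_gb_to_gib(vram_gb) - kv_cache_gib - overhead_gib
    return weights_budget_gib * GIB / bytes_per_param / 1e9


for name, vram_gb in [("RTX 4090", 24), ("RTX 5090", 32)]:
    print(f"{name}: {marketed_gb_to_gib(vram_gb):.2f} GiB usable, "
          f"~{max_params_billions(vram_gb):.1f}B parameter ceiling")
```

With these inputs the sketch gives a ceiling of roughly 27B parameters for the RTX 4090 and roughly 42B for the RTX 5090. The latter is somewhat above the ~40B figure quoted above, which may reflect rounding or slightly different inputs.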

To estimate the performance trend, we fit a simple linear model regressing the benchmark accuracy or score of the top-1 models on release date. To generate confidence intervals, we used bootstrap sampling with replacement (n=500). We then calculated the 5th and 95th percentiles of the bootstrap slope distribution to construct 90% confidence intervals.
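The bootstrap procedure can be illustrated as follows. This is a minimal standard-library sketch under our own assumptions, not the published analysis code: the data points are made up, and details such as the percentile interpolation and the handling of degenerate resamples are our choices.

```python
import random


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def percentile(sorted_vals, q):
    """Percentile of a sorted list, with linear interpolation between ranks."""
    pos = q / 100 * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac


def bootstrap_slope_ci(xs, ys, n_boot=500, seed=0):
    """90% confidence interval for the slope from resampling (x, y) pairs with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    slopes = []
    while len(slopes) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        bx = [xs[i] for i in idx]
        if len(set(bx)) < 2:
            continue  # slope is undefined if every resampled x is identical
        by = [ys[i] for i in idx]
        slopes.append(ols_slope(bx, by))
    slopes.sort()
    return percentile(slopes, 5), percentile(slopes, 95)


# Hypothetical data: release date in years since an arbitrary origin, score in percent.
release_years = [0.2, 0.6, 1.0, 1.3, 1.7, 2.1, 2.4]
scores = [28.0, 31.0, 36.0, 40.0, 47.0, 52.0, 58.0]

low, high = bootstrap_slope_ci(release_years, scores)
print(f"slope: {ols_slope(release_years, scores):.1f} points/year, "
      f"90% CI: [{low:.1f}, {high:.1f}]")
```

Resampling whole (date, score) pairs, rather than residuals, keeps the sketch simple and avoids assuming constant noise around the trend.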

Notably, our analysis of LM Arena Elo scores finds that open models runnable on a consumer GPU have a steeper slope (+125 Elo/year) than frontier models (+80 Elo/year), suggesting a faster rate of progress for consumer-accessible models.

| Metric | Average Lag |
|---|---|
| GPQA-Diamond | 7.4 months |
| MMLU-Pro | 7.3 months |
| LM Arena Elo | 12.4 months |
| Artificial Analysis Intelligence Index | 6.3 months |

Code for our analysis and the plot is available here.

Assumptions

We rely on benchmark data from multiple sources, which introduces variance in evaluation methodologies. To mitigate this, we standardize our sourcing where possible: most of the GPQA-Diamond scores for the open models come from Artificial Analysis, and all of the data for the absolute frontier is drawn from Epoch AI’s Benchmarking Hub. We also face data gaps, as some open models lack any developer-reported or third-party evaluations on GPQA-Diamond.

We model the gap between our two sets of models (small open models, and top-1 frontier) by calculating the average distance between the trends. We use a simple linear regression for our main analysis, to simplify interpretation. For benchmarks scored between 0 and 100%, it is more appropriate to model the relationship over time with a logistic regression, but results are highly similar in either case.
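One way to read "average distance between the trends" is as a horizontal gap: for a given score, how much later the open-model trend line reaches it than the frontier trend line. The sketch below implements that reading with two linear fits. It is an assumption about the method, not a reproduction of it, and the data is synthetic.

```python
def fit_line(ts, ys):
    """Least-squares fit y = intercept + slope * t; returns (intercept, slope)."""
    n = len(ts)
    mean_t, mean_y = sum(ts) / n, sum(ys) / n
    slope = (sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
             / sum((t - mean_t) ** 2 for t in ts))
    return mean_y - slope * mean_t, slope


def average_lag_months(frontier, open_models, n_levels=50):
    """Mean horizontal gap (in months) between two linear trends.

    Each argument is a (times_in_years, scores) pair. The gap is evaluated at
    evenly spaced score levels spanning the open models' observed scores.
    """
    a_f, b_f = fit_line(*frontier)
    a_o, b_o = fit_line(*open_models)
    lo, hi = min(open_models[1]), max(open_models[1])
    levels = [lo + (hi - lo) * i / (n_levels - 1) for i in range(n_levels)]
    # Time at which each trend line crosses score s is (s - intercept) / slope.
    lags_years = [(s - a_o) / b_o - (s - a_f) / b_f for s in levels]
    return 12 * sum(lags_years) / len(lags_years)


# Synthetic data: the open trend is the frontier trend shifted by half a year.
frontier = ([0.0, 1.0, 2.0], [40.0, 50.0, 60.0])
open_models = ([0.5, 1.5, 2.5], [40.0, 50.0, 60.0])
print(f"average lag: {average_lag_months(frontier, open_models):.1f} months")
```

When the two slopes differ, the gap changes with the score level, which is why the sketch averages over a range of levels instead of reporting a single point.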

We only consider models whose full set of weights can fit in GPU memory. This creates a bias away from sparsely activated models, since these models outperform dense models with the same number of active parameters but underperform those with the same number of total parameters.

It may be possible to run larger models than our calculated limits on a single GPU, through techniques like VRAM offloading or more extreme quantizations. However, these techniques tend to require significant tradeoffs in inference speed or output quality, respectively. Accepting these tradeoffs would likely reduce the calculated gap modestly.

Our estimates for the largest model runnable on a single GPU assume a fixed context length of 8K. Our previous work has found that average output length is growing 2.2x per year for non-reasoning models and 5x per year for reasoning models. Today’s reasoning models can average 20K-token outputs, in which case the KV cache footprint would grow to roughly 15 GiB, up from roughly 6 GiB at 8K context. Our estimates are therefore on the higher end of what is runnable for long-context applications, although there are techniques to reduce the footprint of the KV cache.
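The linear growth of the KV cache with context length follows from the standard sizing formula for attention caches: two tensors (keys and values) per layer, each holding one vector per KV head per token. The sketch below applies that formula to a hypothetical architecture. The layer count, head count, and head dimension are illustrative values that land near the 8K figure above; they are not taken from the analysis.

```python
GIB = 2**30  # bytes per gibibyte


def kv_cache_gib(context_tokens, n_layers, n_kv_heads, head_dim, bytes_per_value=1.0):
    """KV cache size in GiB for a single sequence.

    The factor of 2 accounts for storing both a key and a value vector
    per token, per layer, per KV head. bytes_per_value=1.0 corresponds to INT8.
    """
    total_bytes = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * context_tokens
    return total_bytes / GIB


# Hypothetical architecture, for illustration only.
HYPOTHETICAL_MODEL = dict(n_layers=60, n_kv_heads=52, head_dim=128)

for tokens in (8_192, 20_000):
    size = kv_cache_gib(tokens, **HYPOTHETICAL_MODEL)
    print(f"{tokens:>6} tokens: {size:.1f} GiB")
```

Because every term is multiplicative, lowering `n_kv_heads` (as grouped-query attention does) or `bytes_per_value` shrinks the cache proportionally.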