Almost half of large-scale models have published, downloadable weights

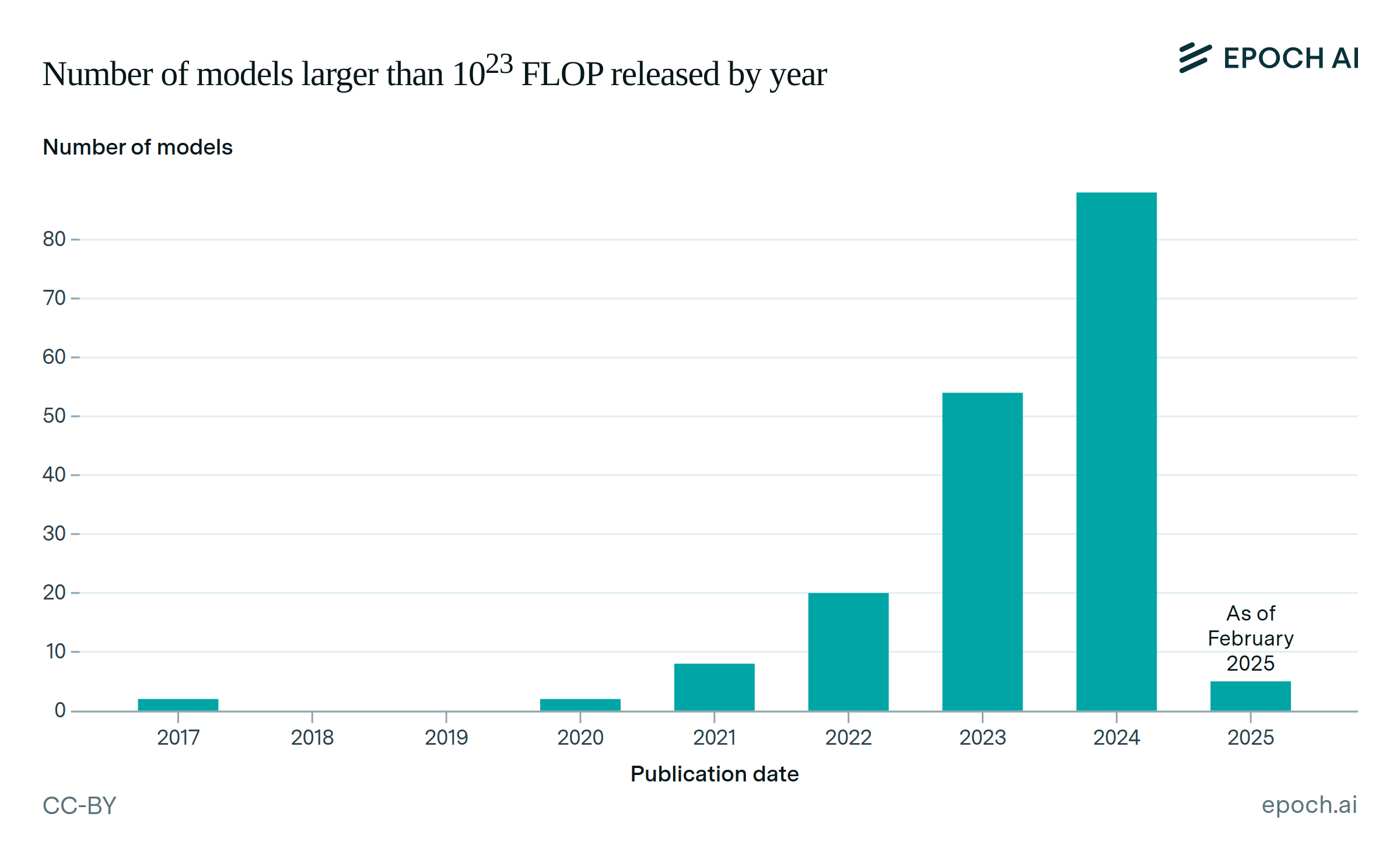

84 large-scale models with known training compute have downloadable weights. Most of these were trained with between 10^23 and 10^24 FLOP, which is less compute than was used to train the largest proprietary models. The developers that have released the largest downloadable models to date are Meta and the Technology Innovation Institute.

Published June 19, 2024