Frontier training runs will likely stop getting longer by around 2027

In “The Longest Training Run”, we argue that training runs that last too long are outclassed by runs that start later and benefit from better hardware and algorithms. Based on our latest numbers, this suggests that training runs lasting more than about 9 months may be inefficient. At the current pace, training runs will reach this length around 2027 (90% CI: Aug 2025 to Sept 2029).

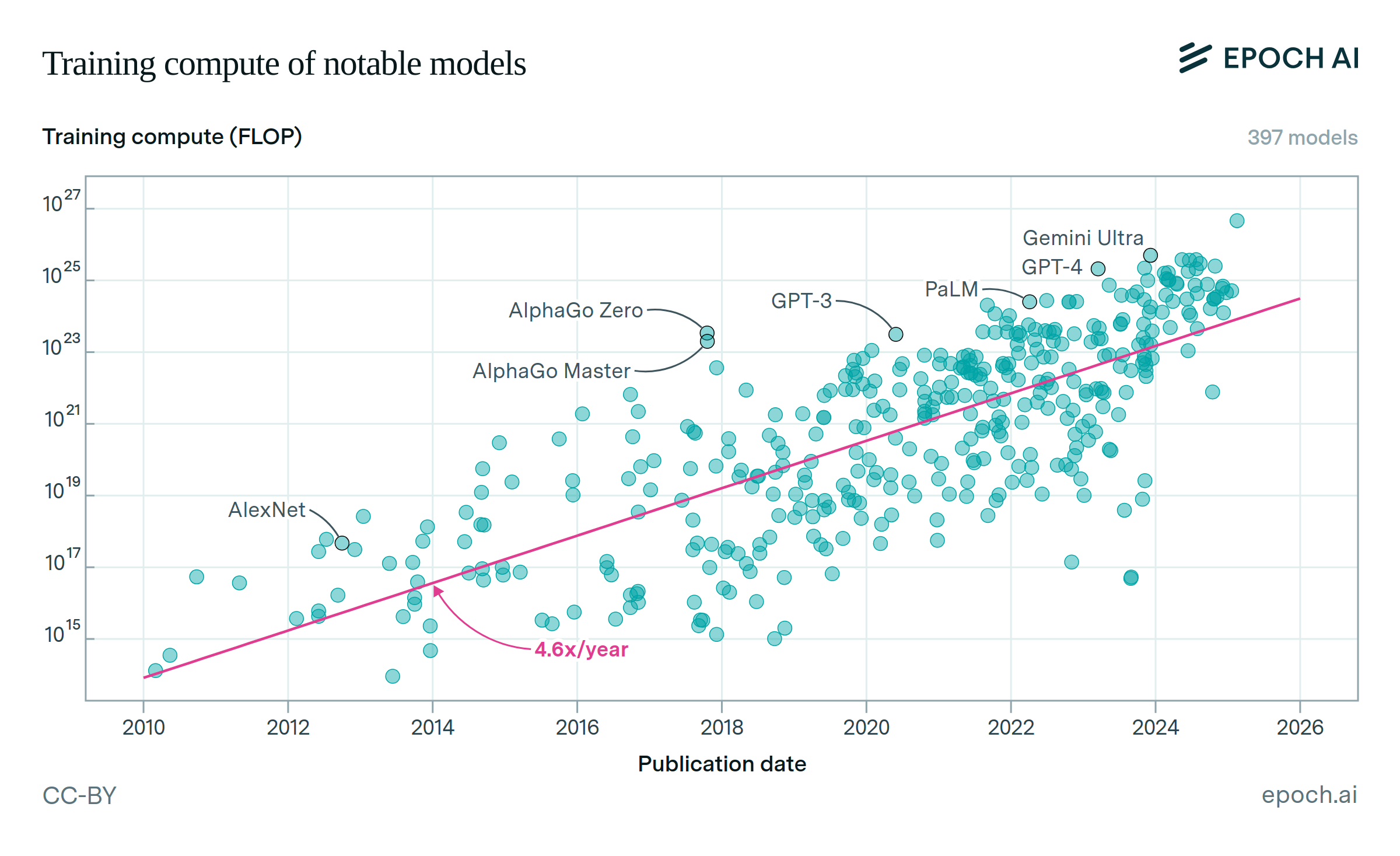

Longer training runs are a significant driver of the rapid growth seen in training compute. If training time stops increasing, training compute growth will slow – unless developers ramp up hardware scaling even faster. This could be achieved by speeding up the build-out of larger clusters, or by spreading training across multiple clusters.

Published

July 25, 2025

Overview

We show that, since 2020, the time that frontier LLMs spend training has grown by about 1.4x per year (90% CI: 1.3x to 1.5x). Separately, there are economic reasons to expect that training runs longer than about 9 months are sub-optimal. On current trends, frontier AI systems will hit this 9-month limit by around 2027 (90% CI: 2025 to 2029).

Since growth in training time has contributed about one third of total compute scaling since 2018, an end to this trend could mean slower overall compute growth after 2027. Alternatively, model developers could respond by increasing the number of chips they train on, either by speeding up their training cluster build-outs or by distributing training across more clusters.

Data

Our data come from Epoch AI’s Notable AI Models dataset. We focus our analysis on frontier LLMs, defined as those that were among the top 5 largest by training compute at the time they were published. We then filter to models released after 2020 that have estimates of training duration, leaving a dataset of 38 models.

Separately, we use previous estimates of the trends in algorithmic progress, hardware price-performance, and training budgets to estimate the longest training time under a simple model of opportunity costs. The hardware progress estimate is based on data from Epoch AI’s ML Hardware dataset, while the algorithmic progress and training budget estimates each use Epoch AI’s Notable AI Models dataset.

Analysis

To obtain an estimate for the trend in frontier LLM training time, we fit a simple model regressing the logarithm of training time on publication date. We bootstrap this regression to obtain a 90% confidence interval.
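The regression and bootstrap described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the notebook's actual code: the function names are hypothetical, and the data are synthetic stand-ins generated with a known 1.4x per year trend rather than the 38 models in the dataset.

```python
import math
import random

def log_linear_slope(dates, durations):
    """OLS slope of log(duration) on date, in log-units per year."""
    logs = [math.log(d) for d in durations]
    mean_x = sum(dates) / len(dates)
    mean_y = sum(logs) / len(logs)
    sxx = sum((x - mean_x) ** 2 for x in dates)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dates, logs))
    return sxy / sxx

def bootstrap_growth_factor(dates, durations, n_boot=2000, seed=0):
    """Resample (date, duration) pairs with replacement and return the
    5th, 50th and 95th percentiles of the implied yearly growth factor."""
    rng = random.Random(seed)
    pairs = list(zip(dates, durations))
    factors = []
    for _ in range(n_boot):
        sample = rng.choices(pairs, k=len(pairs))
        xs, ys = zip(*sample)
        # exp(slope) converts the log-scale slope into a multiplicative factor
        factors.append(math.exp(log_linear_slope(xs, ys)))
    factors.sort()
    last = n_boot - 1
    return factors[int(0.05 * last)], factors[int(0.50 * last)], factors[int(0.95 * last)]

# Synthetic stand-in data (NOT the Epoch dataset): 38 models spread over
# 2020 to 2025, durations in days growing 1.4x per year with log-normal noise.
data_rng = random.Random(1)
dates = [2020 + 5 * i / 37 for i in range(38)]
durations = [30 * 1.4 ** (t - 2020) * math.exp(data_rng.gauss(0, 0.3)) for t in dates]

low, median, high = bootstrap_growth_factor(dates, durations)
print(f"growth: {median:.2f}x/year (90% CI: {low:.2f}x to {high:.2f}x)")
```

Resampling whole (date, duration) pairs keeps each model's date attached to its duration, which is the usual way to bootstrap a regression when the residuals may not be identically distributed.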

Our main analysis of the longest training runs focuses on the “hardware and software progress” scenario of our earlier report, “The Longest Training Run”. We update the numbers from that analysis using our latest estimates for each of the underlying trends. Additionally, we propagate our uncertainty over the underlying trends through the model, to get a confidence interval on the longest training duration.
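For intuition, here is one simple reading of that scenario, stated as an assumption rather than as the report's exact formulation. Suppose a run must finish by time T and starts at time S, and that the effective compute it achieves is proportional to (T - S) * exp(g * S), where g is the combined continuous growth rate of hardware price-performance and algorithmic efficiency. Maximizing over S gives an optimal run length of T - S = 1 / g. Plugging in the median trends from the results table (1.35x and 3x per year) gives about 8.6 months, which matches the reported median. The function name below is hypothetical.

```python
import math

def longest_run_months(hardware_growth, software_growth):
    """Optimal run length in months under the simple model above.

    Growth arguments are yearly multiplicative factors (e.g. 1.35 for 1.35x
    per year). The combined continuous rate is the sum of their logs, and
    the optimal length is the reciprocal of that rate (assumed model).
    """
    rate_per_year = math.log(hardware_growth) + math.log(software_growth)
    return 12.0 / rate_per_year

median_months = longest_run_months(hardware_growth=1.35, software_growth=3.0)
print(f"{median_months:.1f} months")
```

Faster progress shortens the optimal run: doubling the combined rate halves the run length, because waiting for better hardware and algorithms becomes more valuable relative to training for longer.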

To obtain a confidence interval on the date at which training time might stop growing, we randomly sample from both the training time trend and the longest run bootstraps, calculate the implied date at which they meet, and report the 5th and 95th percentiles of these dates.
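The sampling step can be sketched as below. Everything numeric here is a placeholder: the reference duration (4 months at the start of 2025) is hypothetical, and the two sample lists are crude uniform draws over the reported intervals rather than the actual bootstrap distributions. The sketch also varies only the slope of the training time trend, whereas a full bootstrap would vary slope and intercept together.

```python
import math
import random

def crossover_year(ref_year, ref_months, growth_factor, limit_months):
    """Year at which a duration growing by growth_factor per year,
    equal to ref_months at ref_year, reaches limit_months."""
    return ref_year + math.log(limit_months / ref_months) / math.log(growth_factor)

def crossover_interval(growth_samples, limit_samples, ref_year, ref_months,
                       n_draws=10000, seed=0):
    """Pair independent draws from both sample sets and return the 5th,
    50th and 95th percentiles of the implied crossover year."""
    rng = random.Random(seed)
    years = sorted(
        crossover_year(ref_year, ref_months,
                       rng.choice(growth_samples), rng.choice(limit_samples))
        for _ in range(n_draws)
    )
    last = n_draws - 1
    return years[int(0.05 * last)], years[int(0.50 * last)], years[int(0.95 * last)]

# Placeholder inputs (assumptions, NOT the notebook's bootstrap outputs).
sample_rng = random.Random(2)
growth_samples = [sample_rng.uniform(1.3, 1.5) for _ in range(1000)]   # x per year
limit_samples = [sample_rng.uniform(6.1, 14.2) for _ in range(1000)]   # months

low_year, median_year, high_year = crossover_interval(
    growth_samples, limit_samples, ref_year=2025.0, ref_months=4.0
)
print(f"crossover: {median_year:.1f} (90% CI: {low_year:.1f} to {high_year:.1f})")
```

Because the crossover date depends on the ratio of two logarithms, uncertainty in the longest run length and in the growth rate both widen the interval, and a slower growth rate pushes the date out disproportionately.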

Key results can be found in the following table:

| Quantity | Median | Confidence interval |
|---|---|---|
| Algorithmic progress | 3x / year | (2x - 6x) |
| Hardware price-performance | 1.35x / year | (1.25x - 1.47x) |
| Training time | 1.4x / year | (1.3x - 1.5x) |
| Longest training run (hardware + software) | 8.6 months | (6.1 - 14.2 months) |
| Date of crossover | May 2027 | (Aug 2025 - Sept 2029) |

A notebook containing our analysis can be found here.

Assumptions and limitations

Our main analysis focuses on a scenario where hardware and software progress continues at historical rates. Our previous analysis of the longest training run also examined the effect of increasing training budgets. However, we think this effect may be limited in today’s environment: with engineering effort, we believe it is possible to add newly acquired chips partway through a training run. We nonetheless believe that hardware progress matters, since developers face an opportunity cost to waiting for better hardware any time they buy chips.

Our analysis of the longest training run assumes that progress in algorithms and hardware is smooth and continuous. We do not expect our conclusions to change substantially if we relaxed this assumption by accounting for discontinuous steps at the release of new GPU designs or algorithmic breakthroughs.