The computational performance of leading AI supercomputers has doubled every nine months

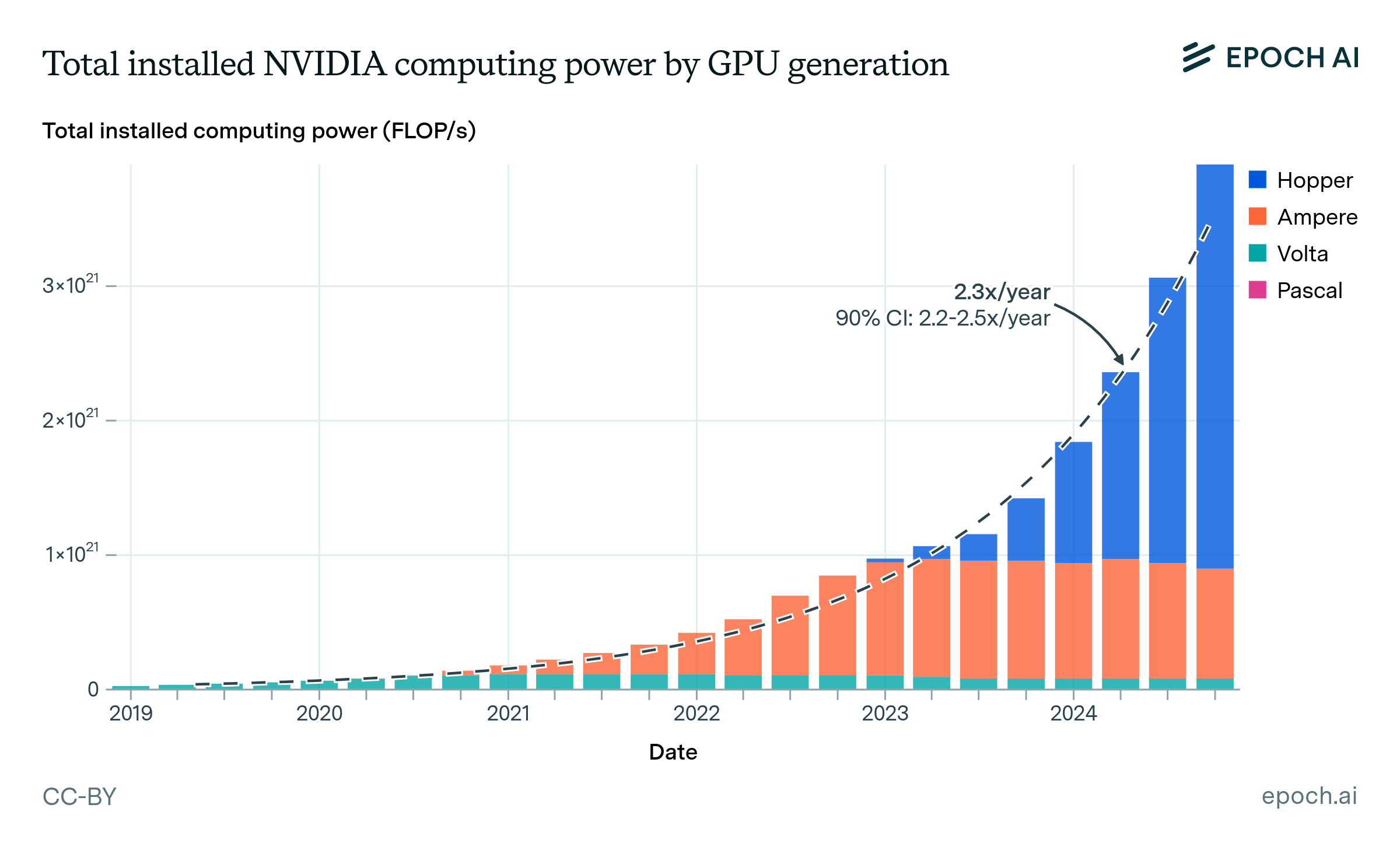

The computational performance of the leading AI supercomputers has grown by 2.5x annually since 2019. This has enabled vastly more powerful training runs: if 2020’s GPT-3 were trained on xAI’s Colossus, the original two-week training run could be completed in under 2 hours.
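The headline doubling time follows directly from the annual growth factor. A minimal sketch of the conversion, where the function name is ours and purely illustrative:

```python
import math

def doubling_time_months(annual_growth_factor: float) -> float:
    """Months needed to double, given a constant multiplicative growth per year."""
    # Solve factor ** (t / 12) == 2 for t.
    return 12.0 * math.log(2.0) / math.log(annual_growth_factor)

months = doubling_time_months(2.5)
print(f"{months:.1f} months")  # 9.1 months
```

A growth rate of 2.5x per year therefore corresponds to a doubling roughly every nine months.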

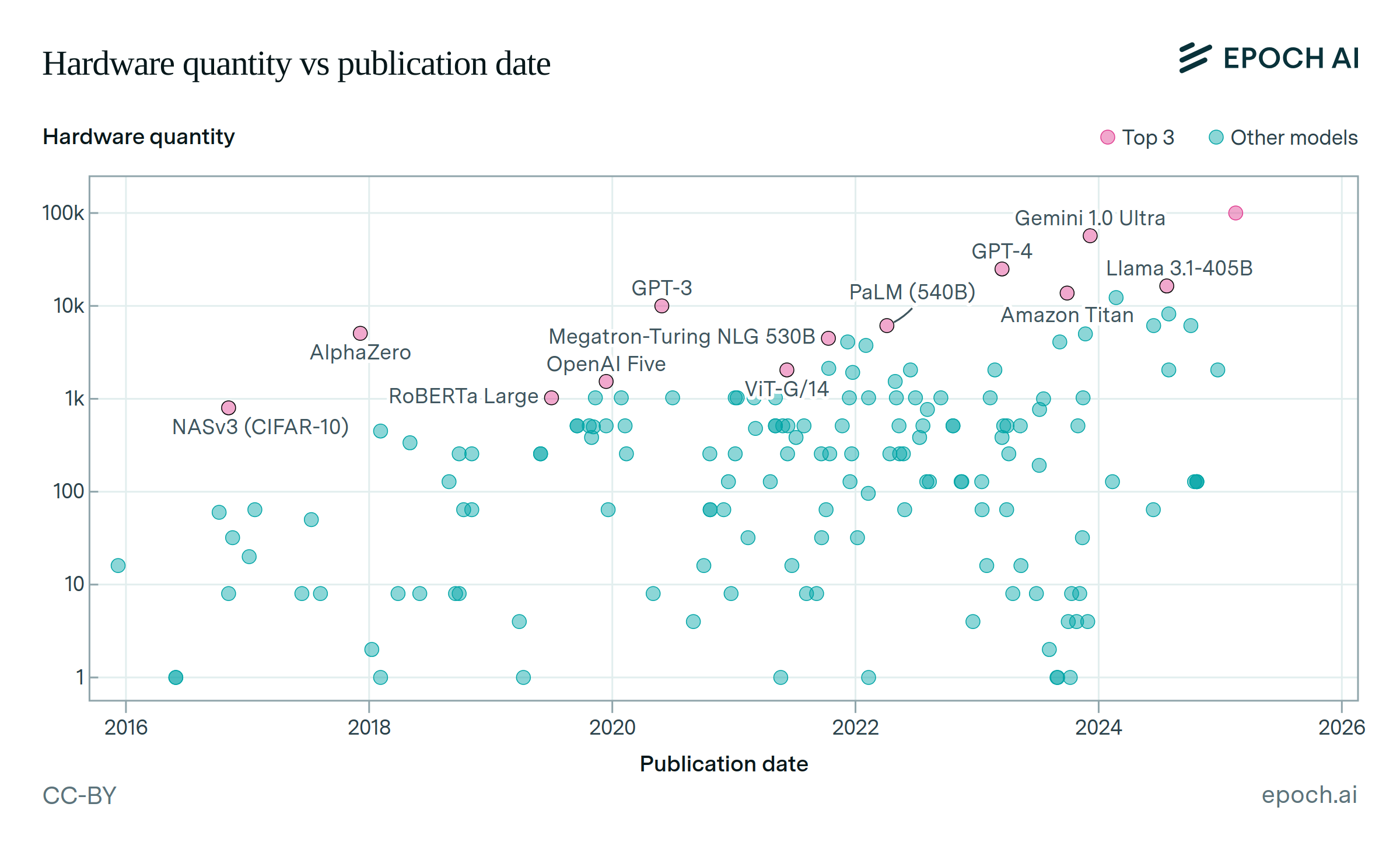

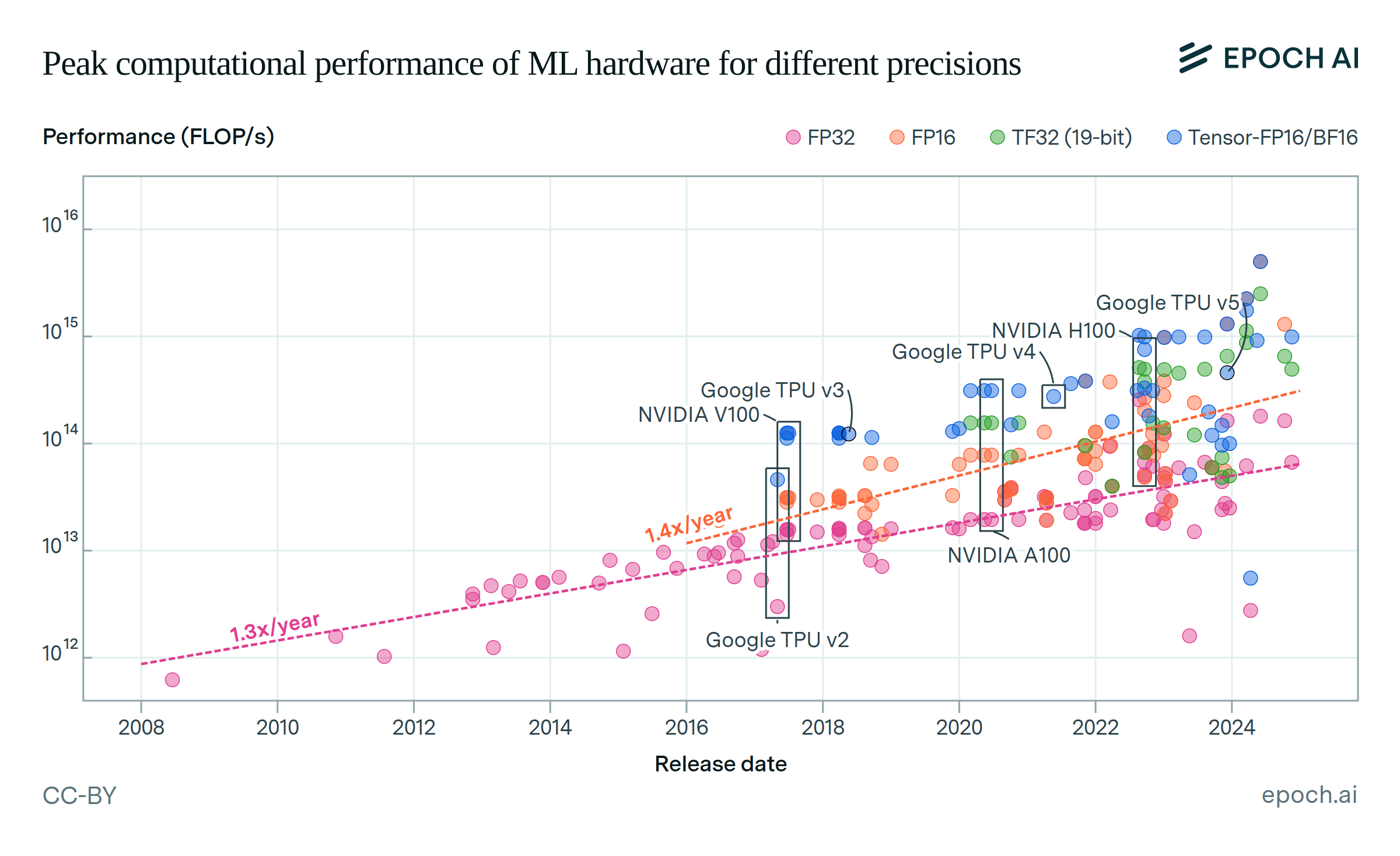

This growth was enabled by two factors: the number of chips deployed per cluster has increased by 1.6x per year, and performance per chip has also improved by 1.6x annually.
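As a rough check on this decomposition, total performance can be written as chip count times per-chip performance, so the annual growth factors multiply. The symbols below are our own notation, not taken from the underlying paper:

$$
P_{\text{total}}(t) = N_{\text{chips}}(t)\, p_{\text{chip}}(t)
\quad\Longrightarrow\quad
g_{\text{total}} = g_{\text{chips}} \cdot g_{\text{chip}} \approx 1.6 \times 1.6 \approx 2.56
$$

This is close to the reported 2.5x per year. A small gap is expected because each factor is estimated separately and reported to one decimal place.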

Published: April 30, 2025

Last major update: June 5, 2025

Learn more

Data

Data come from our AI Supercomputers dataset, which collects information on 728 supercomputers with dedicated AI accelerators, spanning from 2010 to the present. We estimate that these supercomputers represent approximately 10-20% (by performance) of all AI chips produced up to January 2025. We focus on the 501 AI supercomputers which became operational in 2019 or later, since these are most relevant to modern AI training.

For more information about the data, see Pilz et al., 2025, which describes the supercomputers dataset and analyzes key trends, and the dataset documentation.

Analysis

We measure computational performance as the peak number of 16-bit floating-point operations per second (FLOP/s) a supercomputer could theoretically perform based on reported hardware specs. Performance values were either taken directly from reported data or calculated using processor specifications and quantity. After excluding supercomputers without known 16-bit performance data, we retained 482 observations. From these, we identify 57 “leading” AI supercomputers, defined as those that ranked among the top 10 most powerful supercomputers at the time they first became operational. We then run log-linear regressions to estimate annual growth rates in computational performance, number of chips, and per-chip performance for these leading supercomputers. We estimate confidence intervals from the standard errors of each slope.
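The "leading" filter described above can be sketched as follows. This is an illustration under our own assumptions, not the authors' code: the `Cluster` record and its fields are hypothetical, earlier systems are assumed to stay in the ranking indefinitely, and ties in operational date are broken by sort order.

```python
from dataclasses import dataclass

@dataclass
class Cluster:
    name: str           # hypothetical identifier
    operational: float  # date first operational, as a decimal year
    flops: float        # peak 16-bit FLOP/s

def leading_clusters(clusters, top_n=10):
    """Keep clusters that ranked in the top_n by performance when they came online."""
    ordered = sorted(clusters, key=lambda c: c.operational)
    leading = []
    for i, cluster in enumerate(ordered):
        # Count already-operational systems that outperform this one.
        stronger_before = sum(1 for prev in ordered[:i] if prev.flops > cluster.flops)
        if stronger_before < top_n:
            leading.append(cluster)
    return leading

# Toy example with made-up numbers and top_n=2 to keep it small.
toy = [
    Cluster("A", 2019.0, 10.0),
    Cluster("B", 2020.0, 5.0),
    Cluster("C", 2021.0, 1.0),
    Cluster("D", 2022.0, 20.0),
]
print([c.name for c in leading_clusters(toy, top_n=2)])  # ['A', 'B', 'D']
```

In the toy data, C is dropped because two stronger systems were already operational when it came online.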

Our results are as follows:

| Total performance | Chip quantity | Chip performance |
|---|---|---|
| 2.5x per year (2.4 - 2.7) | 1.6x per year (1.5 - 1.8) | 1.6x per year (1.5 - 1.7) |
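Growth factors like these come from fitting a straight line to the logarithm of each quantity against time. The sketch below shows one way to do this with ordinary least squares. It runs on synthetic data, not the actual dataset, and the 90% interval (normal approximation, `z = 1.645`) is our assumption, since the confidence level is not stated here.

```python
import numpy as np

def annual_growth(years, values, z=1.645):
    """Fit log10(value) = a + b * year by OLS.

    Returns (growth factor per year, lower bound, upper bound).
    z=1.645 gives an approximate 90% interval; the level is an assumption.
    """
    x = np.asarray(years, dtype=float)
    y = np.log10(np.asarray(values, dtype=float))
    n = x.size
    x_centered = x - x.mean()
    sxx = np.sum(x_centered ** 2)
    slope = np.sum(x_centered * (y - y.mean())) / sxx
    intercept = y.mean() - slope * x.mean()
    residuals = y - (intercept + slope * x)
    # Standard error of the slope from the residual variance.
    slope_se = np.sqrt(np.sum(residuals ** 2) / (n - 2) / sxx)
    return 10 ** slope, 10 ** (slope - z * slope_se), 10 ** (slope + z * slope_se)

# Synthetic stand-in for 57 leading systems growing 2.5x per year with noise.
rng = np.random.default_rng(0)
years = rng.uniform(2019.0, 2025.0, size=57)
flops = 1e17 * 2.5 ** (years - 2019.0) * 10 ** rng.normal(0.0, 0.2, size=57)

growth, low, high = annual_growth(years, flops)
print(f"{growth:.2f}x per year ({low:.2f} - {high:.2f})")
```

Because the fit is done in log space, the slope converts directly into a multiplicative growth factor, and the interval bounds are obtained by exponentiating the slope plus or minus a multiple of its standard error.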

Note that AI training used different numerical precisions over our study period. While 32-bit was still common in 2019, most training likely happened in 16-bit formats by the early 2020s. By 2024, some AI training workloads had begun to move to 8-bit precision. When considering the highest performance available across these three precisions, we find the following trends:

| Total performance | Chip quantity | Chip performance |
|---|---|---|
| 2.6x per year (2.3 - 2.8) | 1.5x per year (1.3 - 1.6) | 1.8x per year (1.6 - 2.0) |

Find an explanation and discussion of numerical precision in Appendix B.8.
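Taking the highest performance across precisions amounts to a per-system maximum over whichever values are known. A minimal sketch, in which the function name and the example figures are hypothetical:

```python
from typing import Optional

def best_flops(fp32: Optional[float],
               fp16: Optional[float],
               fp8: Optional[float]) -> Optional[float]:
    """Highest known peak FLOP/s across 32-, 16-, and 8-bit precision."""
    known = [value for value in (fp32, fp16, fp8) if value is not None]
    return max(known) if known else None

# Made-up system with no reported 8-bit figure.
print(best_flops(fp32=1e15, fp16=2e15, fp8=None))  # 2000000000000000.0
```

Systems whose chips support 8-bit arithmetic gain the most from this definition, which is consistent with the higher per-chip growth rate in the second table.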

Assumptions

We focus on AI supercomputers that became operational in 2019 or later. We fixed this cutoff before data collection so that the analysis covers the period with the best data availability and reflects modern machine learning practices. Some large clusters built for traditional scientific research rather than AI computing existed before 2019 and would appear among the top 10 if the start date were earlier. We assume these are not indicative of current growth trends; if they were included, the estimated growth rates would likely be lower, especially in the first half of the study period.