Power requirements of leading AI supercomputers have doubled every 13 months

Leading AI supercomputers are becoming ever more energy-intensive, using more power-hungry chips in greater numbers. In January 2019, Summit at Oak Ridge National Laboratory had the highest power capacity of any AI supercomputer, at 13 MW. Today, xAI’s Colossus supercomputer has a power capacity of 280 MW, over 20 times as much.

Colossus relies on mobile generators because the local grid lacks the capacity to power so much hardware. In the future, frontier models may be trained across geographically distributed supercomputers, similar to the training setup for Gemini 1.0, to ease the difficulty of delivering enormous amounts of power to a single location.

Published June 5, 2025

Data

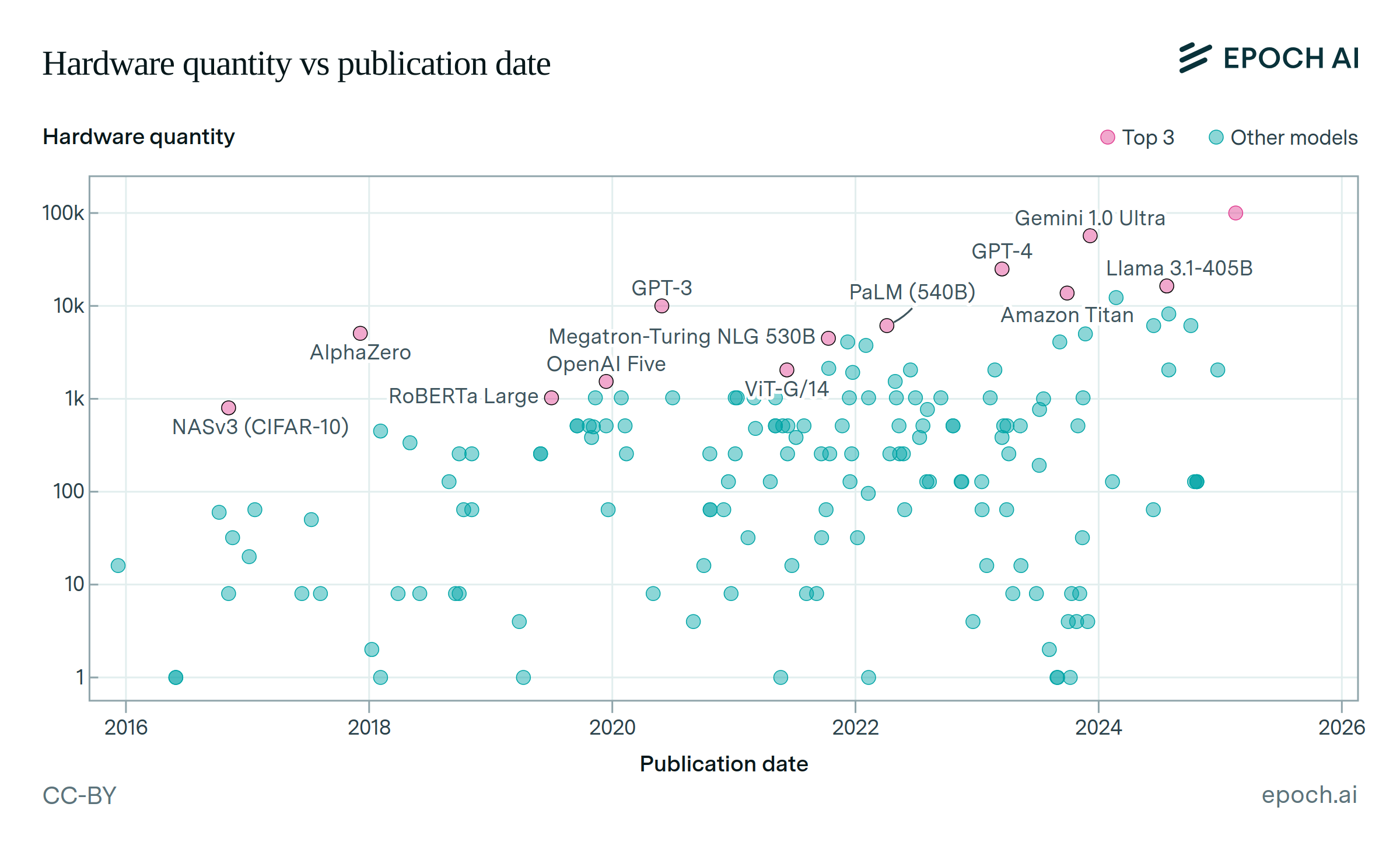

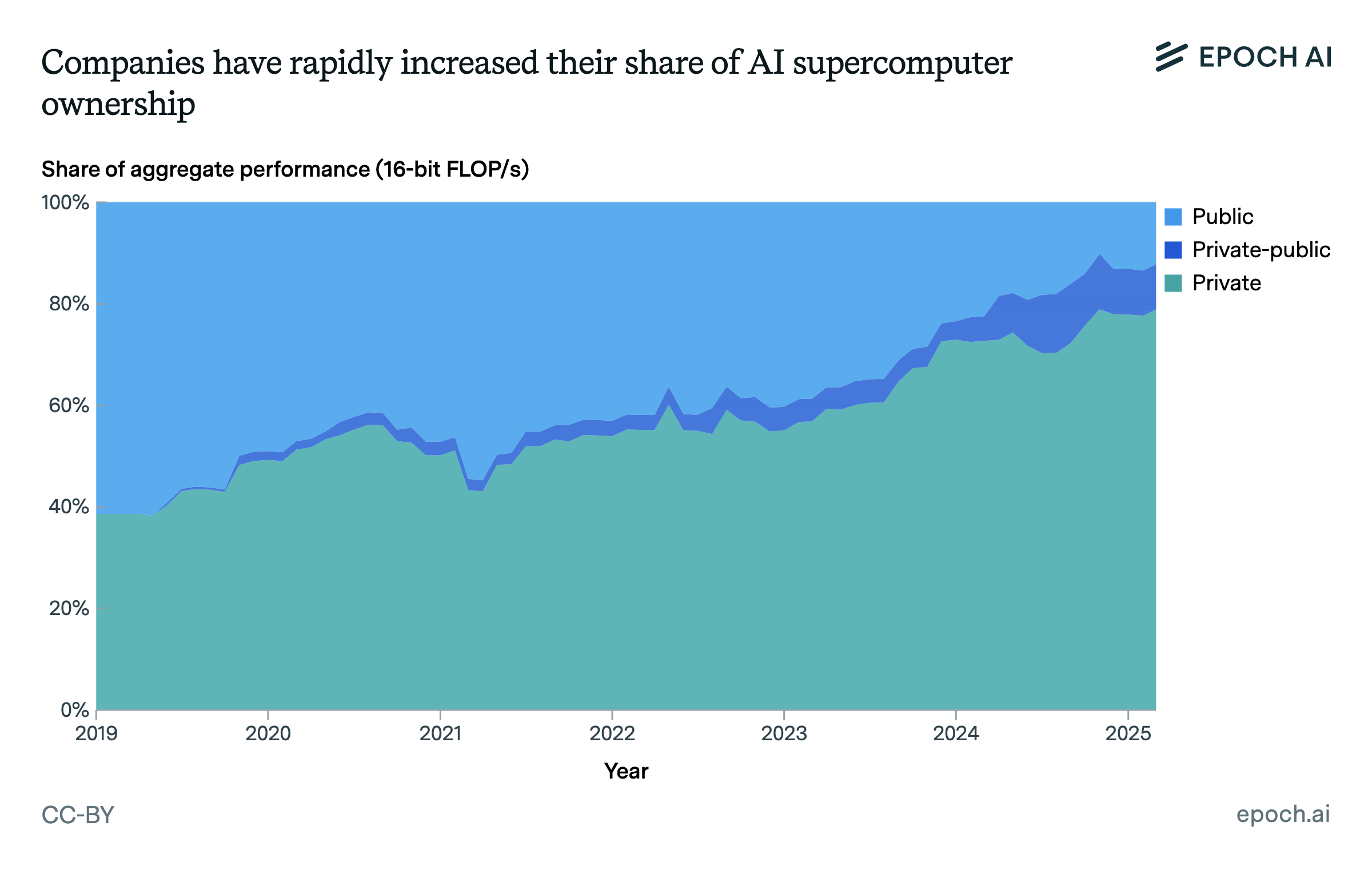

Data come from our AI Supercomputers dataset, which tracks 728 supercomputers with dedicated AI accelerators, spanning from 2010 to the present. By our estimate, these systems account for roughly 10-20% of all AI chip performance produced before 2025. We focus on the 502 AI supercomputers which became operational in 2019 or later, since these are most relevant to modern AI training.

When available, we use the power capacity reported by an AI supercomputer’s owner. When we cannot find a reported figure, we estimate the maximum theoretical power requirements of each AI supercomputer with the following formula:

Power capacity = Chip TDP × number of chips × system overhead × PUE

Chip Thermal Design Power (TDP) is the maximum power a chip is designed to operate at. TDP is public for most AI chips but unavailable for some Chinese chips and custom silicon, such as Google’s TPU v5p; we omit these systems from our analysis.

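System overhead is a multiplier covering the additional power needed to run non-GPU hardware. We apply a value of 1.82 to all systems, based on NVIDIA DGX H100 server specifications: the server's maximum power draw of about 10.2 kW is roughly 1.82 times the 5.6 kW combined TDP of its eight 700 W H100 GPUs. See the Assumptions and limitations section for more details on this figure.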

Power Usage Effectiveness (PUE) is the ratio of a facility's total power to the power delivered to its IT equipment, so it captures the overhead of non-IT equipment (e.g. cooling and power-conversion losses). PUE has declined over time as datacenters have become more efficient; we obtain annual figures from Shehabi et al. (2024). We subtract 0.29 from the overall PUE in each year, since the same report finds that specialized datacenters (like AI supercomputers) have an average PUE 0.29 lower than the overall average.
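As a minimal sketch of how the formula and the PUE adjustment combine, the Python below returns an estimate in megawatts. The function names, the hypothetical cluster of 100,000 chips at 700 W each, and the overall PUE of 1.5 are illustrative assumptions, not figures from our dataset; in practice the overall PUE would be the annual value taken from Shehabi et al. (2024).

```python
# Minimal sketch of the TDP-based power estimate.
# Assumptions: function names are hypothetical, and overall_pue is supplied by
# the caller (no annual PUE values from Shehabi et al. (2024) are hard-coded).

SYSTEM_OVERHEAD = 1.82           # non-GPU overhead, based on NVIDIA DGX H100 specs
SPECIALIZED_PUE_DISCOUNT = 0.29  # specialized datacenters sit 0.29 below average PUE


def specialized_pue(overall_pue: float) -> float:
    """Convert an annual all-datacenter PUE into a specialized-datacenter PUE."""
    return overall_pue - SPECIALIZED_PUE_DISCOUNT


def estimate_power_mw(chip_tdp_w: float, num_chips: int, overall_pue: float,
                      system_overhead: float = SYSTEM_OVERHEAD) -> float:
    """Chip TDP x number of chips x system overhead x PUE, converted to megawatts."""
    watts = chip_tdp_w * num_chips * system_overhead * specialized_pue(overall_pue)
    return watts / 1e6


if __name__ == "__main__":
    # Hypothetical cluster: 100,000 chips at 700 W TDP, overall PUE of 1.5.
    print(f"{estimate_power_mw(700, 100_000, overall_pue=1.5):.1f} MW")
```

With these hypothetical inputs the adjusted PUE is 1.21 and the script prints `154.2 MW`.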

When the TOP500 list reports a system's power, the figure is its average power draw during the benchmark run; we multiply this figure by 1.5 to approximate peak power consumption, based on cases where both peak and average values are known.
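The TOP500 adjustment can be sketched as follows. The 1.5 factor comes from the text and is roughly consistent with the ~65% average-to-peak ratio noted under Assumptions and limitations (1 / 0.65 ≈ 1.54). How the comparisons were aggregated is not stated, so using the median ratio is our assumption. The helper names and the (peak, average) pairs are hypothetical illustrations, not data from the AI Supercomputers dataset.

```python
# Sketch of converting TOP500 average power into an approximate peak.
# Assumptions: helper names are hypothetical, the median is used to aggregate
# peak/average ratios, and the example pairs are made up.
from statistics import median

TOP500_PEAK_FACTOR = 1.5  # factor stated in the text


def peak_from_top500(avg_power_mw: float, factor: float = TOP500_PEAK_FACTOR) -> float:
    """Approximate peak power from a TOP500 average (benchmark) power draw."""
    return avg_power_mw * factor


def peak_to_average_ratio(pairs: list[tuple[float, float]]) -> float:
    """Median peak/average ratio over systems where both values are known.

    Each pair is (peak_mw, average_mw).
    """
    return median(peak / avg for peak, avg in pairs)


if __name__ == "__main__":
    # Made-up (peak, average) pairs, for illustration only.
    pairs = [(30.0, 19.0), (12.0, 8.5), (6.0, 3.9)]
    print(round(peak_to_average_ratio(pairs), 2))
    print(peak_from_top500(10.0))  # 10 MW average -> 15.0 MW estimated peak
```

For these made-up pairs the median ratio prints as `1.54`, and a 10 MW TOP500 average becomes `15.0` MW.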

Analysis

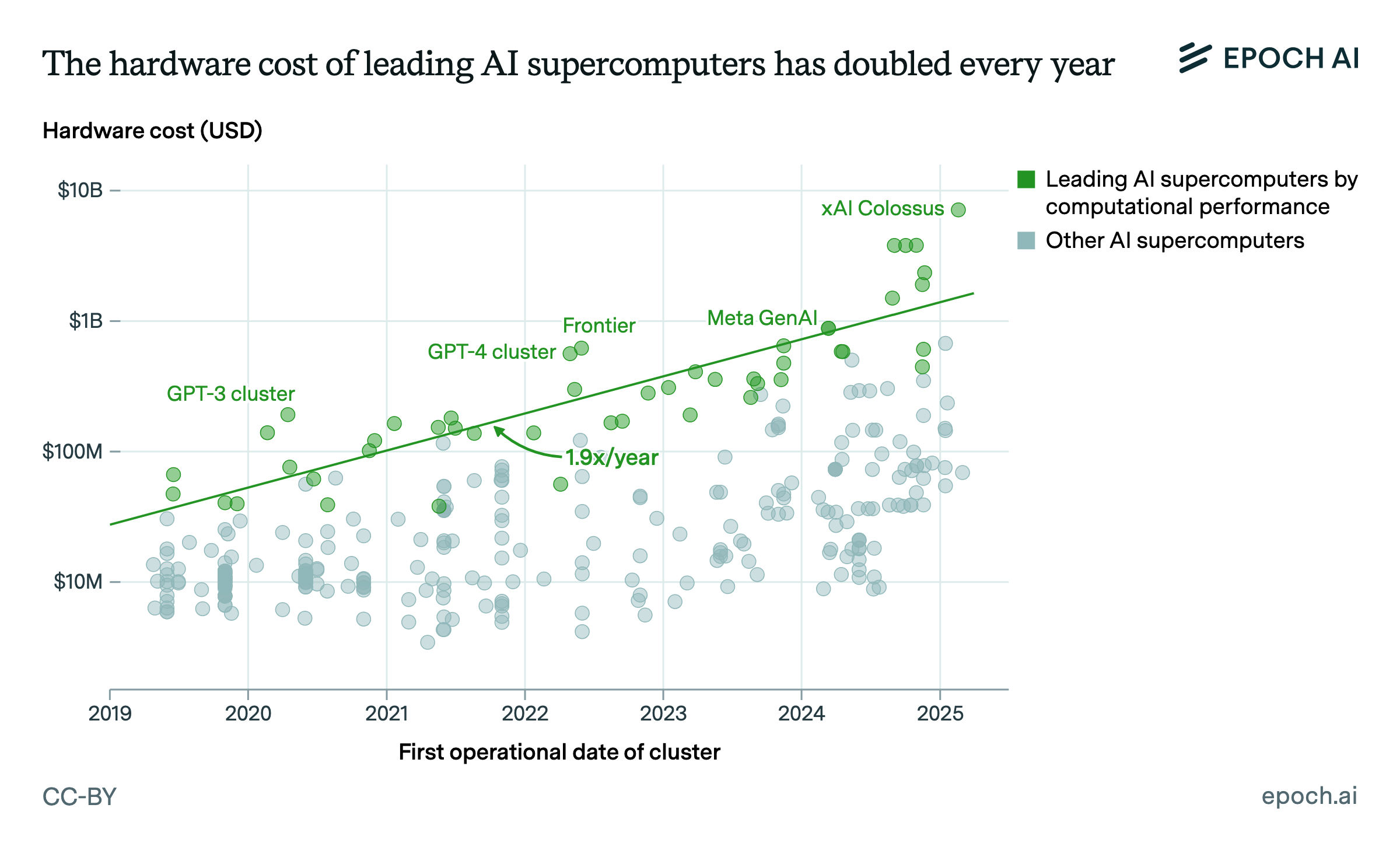

To estimate the trend in power requirements for leading AI supercomputers, we first identify 65 “frontier” AI supercomputers, defined as those that ranked among the 10 highest-performing supercomputers (by 16-bit performance) when they began operation. Of these, we were able to obtain a power estimate for 60. To measure how their power requirements have grown over time, we fit a log-linear regression of power capacity on time, regressing the logarithm of power capacity on each system's operational date.
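Here is a minimal sketch of the log-linear fit in plain Python, assuming operational dates are expressed as (fractional) years. It runs ordinary least squares of ln(power) on time and recovers the annual growth factor as exp(slope). It does not compute the 90% confidence interval. The input series is synthetic, growing exactly 1.9x per year from 13 MW, rather than the 60 systems in our dataset, and the function names are hypothetical.

```python
# Minimal sketch of a log-linear trend fit: OLS of ln(power) on time (years),
# then exp(slope) gives the growth multiplier per year.
# Assumptions: hypothetical function names, synthetic data, no confidence interval.
import math


def fit_log_linear(years: list[float], power_mw: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) for ln(power_mw) = slope * year + intercept."""
    n = len(years)
    if n != len(power_mw) or n < 2:
        raise ValueError("need at least two paired observations")
    logs = [math.log(p) for p in power_mw]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def annual_growth_factor(slope: float) -> float:
    """Growth multiplier per year implied by a slope in ln-units per year."""
    return math.exp(slope)


if __name__ == "__main__":
    # Synthetic series growing exactly 1.9x per year from 13 MW (illustration only).
    years = [2019.0 + i for i in range(6)]
    power = [13.0 * 1.9 ** i for i in range(6)]
    slope, _ = fit_log_linear(years, power)
    print(f"growth: {annual_growth_factor(slope):.2f}x per year")
```

On this synthetic series the script prints `growth: 1.90x per year`. Fitting in log space makes a constant multiplicative growth rate appear as a straight line, which is why the slope maps directly to a per-year factor.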

We find that power requirements for frontier AI supercomputers have been growing by 1.9x per year, with a 90% confidence interval of 1.7x to 2.1x.
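The 13-month doubling time in the title follows from an annual growth factor $g$ via the standard conversion below. The interval endpoints are our own conversion of the stated 90% confidence interval.

$$
T_2 = \frac{\ln 2}{\ln g}\ \text{years}, \qquad
\frac{\ln 2}{\ln 1.9} \approx \frac{0.693}{0.642} \approx 1.08\ \text{years} \approx 13\ \text{months}
$$

By the same formula, the 2.1x and 1.7x endpoints correspond to doubling times of roughly 11 and 16 months.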

Assumptions and limitations

- Our estimates represent power capacity, not power consumption. Power capacity corresponds to power usage when a supercomputer is running at maximum utilization. AI supercomputers are usually not fully utilized, so total annual energy usage is substantially less than power capacity × hours per year. For instance, we found that TOP500 supercomputers drew about 65% of their peak power on average while running the Linpack benchmark.

- Owner-reported power figures that were not obtained from TOP500 may not be well standardized. For instance, some may represent only the IT load, while others may account for PUE. We assume the noise introduced by this lack of standardization is minimal relative to the trend.

- We assume that our formula for calculating power usage based on chip TDP is appropriate and unbiased. To test the consistency of our calculations, we regressed log(calculated power) on log(reported power) for all supercomputers where both figures were available. We found a correlation of 0.98 and a power-law exponent of 1.009, indicating that our calculated figures closely match reported figures across a wide range of scales.

- Our power calculations rely on an estimate of non-GPU system overhead obtained from an NVIDIA DGX H100 system. While Hopper chips likely account for the majority of all AI computing power, system overhead varies significantly: across a sample of other systems for which we found figures, it ranged from 1.3 to 2.5. The geometric mean across all systems with available data was very close to the H100 figure.
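The consistency check and the geometric-mean comparison in the list above can be sketched in plain Python. This is an illustration under several assumptions. We take the reported correlation to be Pearson's r on log-transformed values. The function names are hypothetical. The numbers in the usage example are made up rather than drawn from our dataset. In a log-log regression, the slope is the power-law exponent, so a value near 1 means calculated power scales proportionally with reported power.

```python
# Sketch of the log-log consistency check and a geometric mean of overhead factors.
# Assumptions: Pearson r is computed on log values; names and inputs are hypothetical.
import math
import statistics


def log_log_fit(reported: list[float], calculated: list[float]) -> tuple[float, float]:
    """Return (power-law exponent, Pearson r) for ln(calculated) on ln(reported)."""
    if len(reported) != len(calculated) or len(reported) < 2:
        raise ValueError("need at least two paired observations")
    xs = [math.log(v) for v in reported]
    ys = [math.log(v) for v in calculated]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    exponent = sxy / sxx                # OLS slope in log-log space
    r = sxy / math.sqrt(sxx * syy)      # Pearson correlation of the logs
    return exponent, r


def overhead_geometric_mean(factors: list[float]) -> float:
    """Geometric mean, a natural average for multiplicative overhead factors."""
    return statistics.geometric_mean(factors)


if __name__ == "__main__":
    # Made-up reported vs. calculated power figures (MW), for illustration only.
    reported = [5.0, 20.0, 150.0]
    calculated = [5.4, 19.0, 160.0]
    exponent, r = log_log_fit(reported, calculated)
    print(f"exponent={exponent:.3f}, r={r:.3f}")
    # Made-up overhead sample spanning the 1.3 to 2.5 range mentioned above.
    print(f"geometric mean overhead: {overhead_geometric_mean([1.3, 1.82, 2.5]):.2f}")
```

A geometric mean is used for the overhead factors because they multiply into the power estimate. Averaging their logarithms keeps a factor of 2 above and a factor of 2 below symmetric.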