Leading AI companies have hundreds of thousands of cutting-edge AI chips

The world's leading tech companies—Google, Microsoft, Meta, and Amazon—own AI computing power equivalent to hundreds of thousands of NVIDIA H100s. This compute is used both for their in-house AI development and for cloud customers, including many top AI labs such as OpenAI and Anthropic. Google may have access to the equivalent of over one million H100s, mostly from their TPUs. Microsoft likely has the single largest stock of NVIDIA accelerators, with around 500k H100-equivalents.

A large share of AI computing power is collectively held by groups other than these four, including other cloud companies such as Oracle and CoreWeave, compute users such as Tesla and xAI, and national governments. We highlight Google, Microsoft, Meta, and Amazon as they are likely to have the most compute, and there is little public data for others.

Published

October 9, 2024

Overview

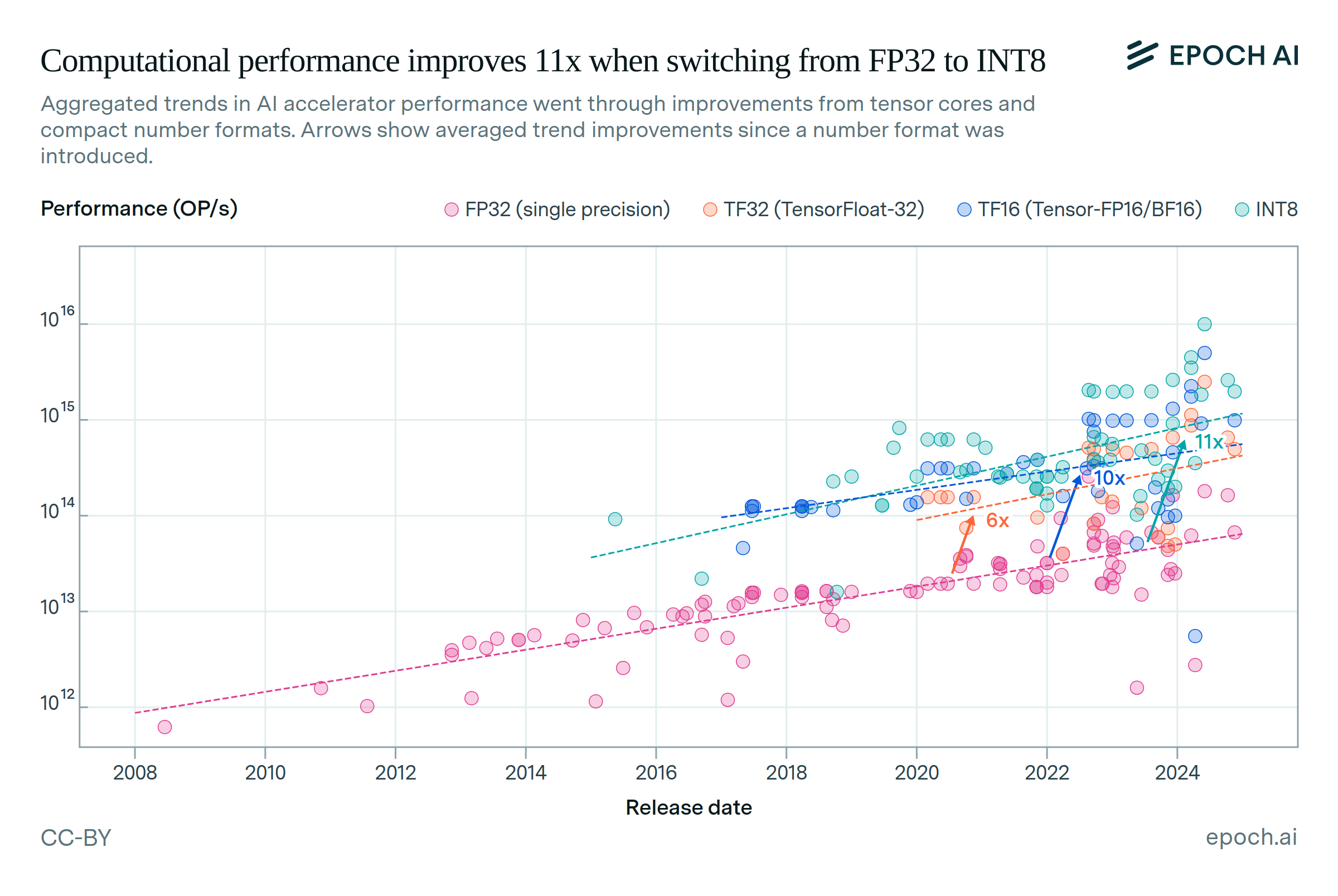

We estimate how many NVIDIA accelerators and TPUs each company owns, then express these in terms of H100-equivalent processing power, based on their tensor-FP16 FLOP/s performance. For NVIDIA, we do this by combining data on overall sales and the allocation of these sales across different customers. For TPUs, we rely on industry analysis about Google’s installed TPU capacity. Note that our “Google” estimate includes all of Alphabet.

A large portion of this compute is rented out to other parties. For example, OpenAI rents its compute from Microsoft, and Anthropic rents compute from Amazon and Google. On the flip side, these four companies may have access to compute owned by other companies; e.g. Microsoft rents at least some compute from Oracle and from smaller cloud providers.

Analysis

We estimate NVIDIA AI chip sales from their reported revenue. NVIDIA’s total data center revenue over the ten quarters from the beginning of 2022 to mid-2024 was $111 billion. We estimate that around 80-85% of this revenue comes from AI accelerators, with the rest coming from networking equipment, meaning NVIDIA sold around $90 billion in AI accelerators over this period.
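The arithmetic behind this figure can be sketched in a few lines. This is only an illustration: the quarterly values are copied from the revenue table in the Data section, the 80-85% range is the one quoted above, and the function and variable names are hypothetical.

```python
# Quarterly NVIDIA data center revenue in billions of USD,
# Q1 FY23 through Q2 FY25, copied from the revenue table in the Data section.
DATACENTER_REVENUE_BN = [3.75, 3.81, 3.83, 3.62, 4.28, 10.32, 14.51, 18.4, 22.6, 26.3]

# Assumed share of data center revenue that comes from AI accelerators
# (the remainder is attributed to networking equipment).
ACCELERATOR_SHARE_LOW = 0.80
ACCELERATOR_SHARE_HIGH = 0.85


def accelerator_revenue_range(quarters_bn, share_low, share_high):
    """Return (total, low, high) revenue in billions of USD."""
    total = sum(quarters_bn)
    return total, total * share_low, total * share_high


total_bn, low_bn, high_bn = accelerator_revenue_range(
    DATACENTER_REVENUE_BN, ACCELERATOR_SHARE_LOW, ACCELERATOR_SHARE_HIGH
)
print(f"Total data center revenue: ${total_bn:.1f}B")
print(f"AI accelerator revenue: ${low_bn:.0f}B to ${high_bn:.0f}B")
```

The lower end of the resulting range sits just under $90 billion, in line with the rounded figure used in the text.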

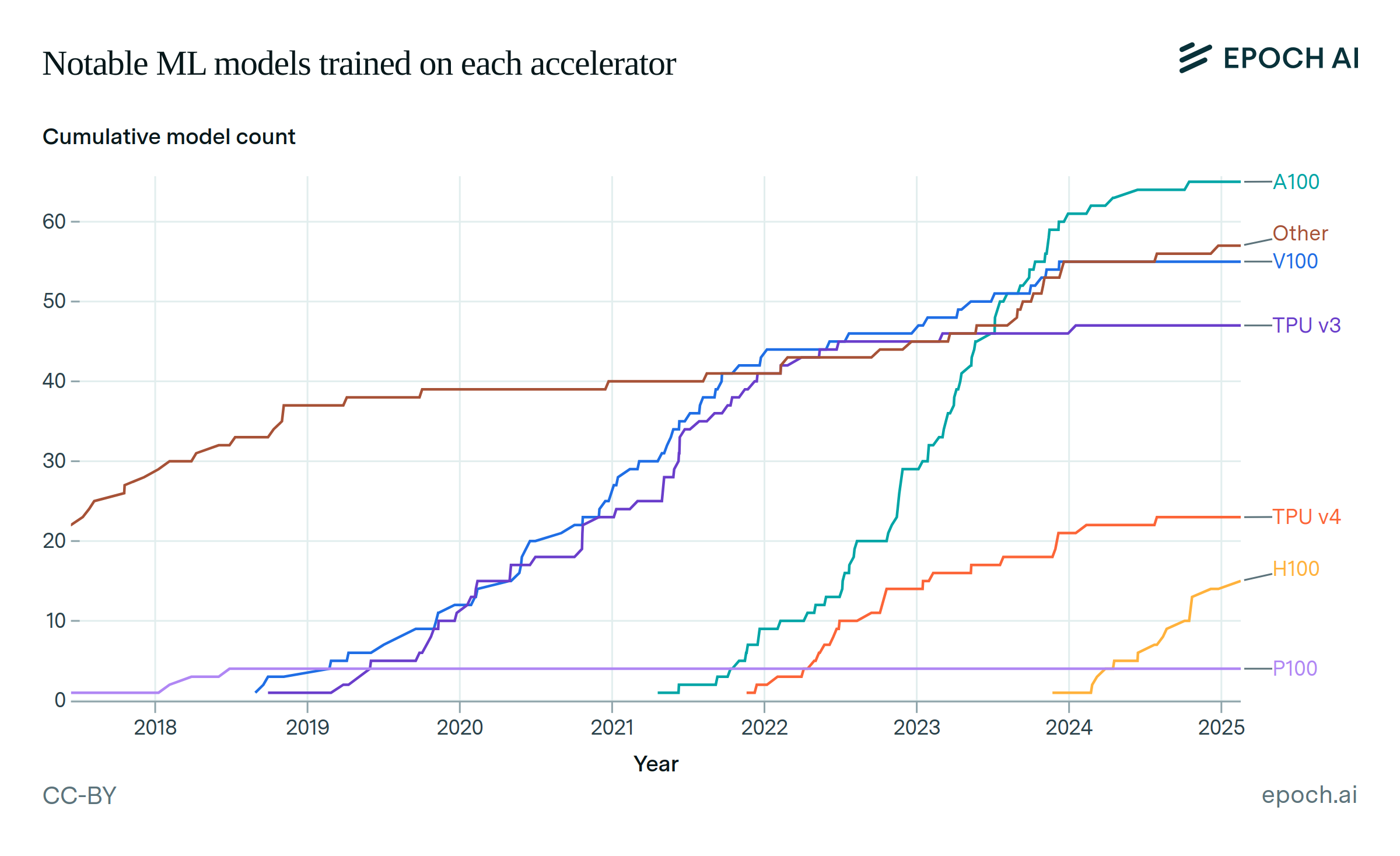

To find the number of chips sold, we divide this by the price per chip. For simplicity, we assume that all the chips sold in 2022 were A100s and all chips sold from 2023 onwards were H100s; in reality, there are other chip models and the exact split is uncertain (see Assumptions). We then estimate the average price of A100s in 2022 and H100s in 2023-2024 (likely $10-15k and $20-40k respectively).

This leads to an estimate of roughly 3 million H100 chips (or the equivalent thereof) sold between the beginning of 2022 and mid-2024. We then estimate each company’s allocation of H100-equivalents by multiplying this total by the company’s estimated share of NVIDIA’s revenue.
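A rough point-estimate version of this calculation is sketched below. It is a simplification under stated assumptions, not the full model. The revenue figures, accelerator-revenue fractions, and prices are midpoints of the ranges in the Data section. The A100 throughput of 312 TFLOP/s is taken from NVIDIA's published A100 specification rather than from this article. The 17% customer share is a purely hypothetical value, and all names are illustrative.

```python
# Tensor-FP16 dense throughput in TFLOP/s.
H100_TFLOPS = 989.0  # value listed in the data tables
A100_TFLOPS = 312.0  # assumption: NVIDIA's published A100 spec, not from this article


def h100_equivalents(datacenter_revenue_usd, accelerator_share, price_usd, chip_tflops):
    """Convert revenue into chips sold, then into H100-equivalents by FLOP/s."""
    chips_sold = datacenter_revenue_usd * accelerator_share / price_usd
    return chips_sold * chip_tflops / H100_TFLOPS


# 2022: all sales treated as A100s (midpoint assumptions).
a100_era = h100_equivalents(15.0e9, 0.765, 12_500, A100_TFLOPS)

# 2023 to mid-2024: all sales treated as H100s (midpoint assumptions).
h100_era = h100_equivalents(96.4e9, 0.835, 30_000, H100_TFLOPS)

total_h100e = a100_era + h100_era

# Allocation for a hypothetical customer with a 17% share of NVIDIA revenue.
hypothetical_share = 0.17
customer_h100e = hypothetical_share * total_h100e

print(f"Total H100-equivalents sold: {total_h100e:,.0f}")
print(f"Hypothetical customer allocation: {customer_h100e:,.0f}")
```

With these midpoint inputs the total comes out close to 3 million H100-equivalents, and a customer with a share in the high teens ends up with roughly half a million.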

We estimate confidence intervals based on differing reports of companies’ shares of NVIDIA’s revenue, and differing reports of H100 prices. We model these as log-normally distributed variables, and create a simple Monte Carlo model to estimate chips owned by each company.
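A minimal sketch of such a Monte Carlo model is shown below, using only the Python standard library. It assumes each uncertain input is log-normal with its 5th and 95th percentiles set to the bounds in the data tables. The $20,000 to $40,000 range is the H100 price, and the 0.12 to 0.25 range is treated here as a hypothetical customer's share of NVIDIA revenue. The function names, sample count, and fixed accelerator-revenue figure are illustrative choices, not details of the actual model.

```python
import math
import random
import statistics

Z_95 = 1.6448536269514722  # 95th percentile of the standard normal distribution


def lognormal_from_percentiles(p5, p95):
    """Return (mu, sigma) of a log-normal whose 5th/95th percentiles are p5/p95."""
    mu = (math.log(p5) + math.log(p95)) / 2
    sigma = (math.log(p95) - math.log(p5)) / (2 * Z_95)
    return mu, sigma


def simulate_customer_h100s(n_samples=20_000, seed=0):
    """Sample H100 counts for one hypothetical customer."""
    rng = random.Random(seed)
    price_mu, price_sigma = lognormal_from_percentiles(20_000, 40_000)
    share_mu, share_sigma = lognormal_from_percentiles(0.12, 0.25)
    # Simplification: accelerator revenue for 2023 to mid-2024 is held fixed.
    accelerator_revenue_usd = 96.4e9 * 0.835
    samples = []
    for _ in range(n_samples):
        price = rng.lognormvariate(price_mu, price_sigma)
        share = min(rng.lognormvariate(share_mu, share_sigma), 1.0)  # cap at 100%
        samples.append(accelerator_revenue_usd * share / price)
    return samples


samples = simulate_customer_h100s()
# quantiles(n=20) returns 19 cut points at 5%, 10%, ..., 95%.
cuts = statistics.quantiles(samples, n=20)
p5, median, p95 = cuts[0], cuts[9], cuts[18]
print(f"5th percentile: {p5:,.0f}")
print(f"Median:         {median:,.0f}")
print(f"95th percentile: {p95:,.0f}")
```

Because both inputs are log-normal, their ratio is also log-normal, so the simulated median lands near the ratio of the input medians (roughly half a million chips for these inputs), with a wide interval around it.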

For Google’s TPU chips, we rely on reports from two semiconductor research firms, TechInsights and SemiAnalysis. TechInsights estimated TPU manufacturing volume based on revenue from Broadcom and memory sellers (graph available here). We matched these numbers with TPU version release dates and estimated how many TPUs of each model were manufactured over time. SemiAnalysis reported that as of September 2024, Google has deployed “millions” of TPUs in data centers that draw over 1 GW in power. This is the equivalent of ~650,000 H100s, assuming that the TPU fleet overall has the same energy efficiency as the TPUv4. We again use a simple Monte Carlo model to derive our overall estimates from these sources.

Data

This insight uses several external data sources:

- NVIDIA’s overall sales from their earnings reports. We consider the period from early 2022 to the quarter ending July 2024 (most recently reported). We chose this time frame because a large boom in AI investments began in 2022, so most of these sales are for the AI industry, and these sales represent a large majority of NVIDIA’s AI chip sales to date. Note that these chips must be actually delivered for the revenue to be reported, under generally accepted accounting principles.

- NVIDIA’s disclosures in their earnings reports and their most recent regulatory filings. NVIDIA’s 10-Q form reports the portion of total revenue that came from five customers that made up around 10% of total revenue in the last two quarters. However, the breakdown is anonymized and may not reflect final ownership (as opposed to sales to chip resellers), so we also use other sources to help interpret these results.

- Large tech companies’ capital expenditure on NVIDIA products from recent Bloomberg data. Cross-referencing this against NVIDIA’s regulatory filings allows us to estimate how much revenue came from different leading AI labs. This is corroborated by recent statements from NVIDIA that 45% of data center revenue came from “large cloud providers” in Q2 FY2025. These likely include Microsoft, Amazon, Alphabet, and Oracle.

- Google’s TPU deployment from recent reports on TPU manufacturing and Google’s cluster scaling. We can express these in terms of H100-equivalents using details from the reports and TPU specs.

These data are tabulated below. We estimate uncertainties based on differing reports of NVIDIA’s revenue shares for different customers, and differing reported prices for H100s.

Chip allocation by customer

| 5% CI | 95% CI |
|---|---|
| $96,400,000,000 | $96,400,000,000 |

| 5% CI | 95% CI |
|---|---|
| 0.81 | 0.86 |

| 5% CI | 95% CI |
|---|---|
| $20,000 | $40,000 |

| 5% CI | 95% CI |
|---|---|
| $15,000,000,000 | $15,000,000,000 |

| 5% CI | 95% CI |
|---|---|
| 0.7 | 0.83 |

| 5% CI | 95% CI |
|---|---|
| $10,000 | $15,000 |

| 989 |

| 5% CI | 95% CI |
|---|---|
| 0.12 | 0.25 |

| 5% CI | 95% CI |
|---|---|
| 0.075 | 0.15 |

| 5% CI | 95% CI |
|---|---|
| 0.06 | 0.12 |

| 5% CI | 95% CI |
|---|---|
| 0.05 | 0.12 |

NVIDIA revenue

| Quarter | Data center revenue (billions USD) | Total revenue (billions USD) |
|---|---|---|
| Q2 FY25 (ending July 2024) | 26.3 | 30 |
| Q1 FY25 | 22.6 | 26 |
| Q4 FY24 | 18.4 | 22.1 |
| Q3 FY24 | 14.51 | 18.12 |
| Q2 FY24 | 10.32 | 13.51 |
| Q1 FY24 | 4.28 | 7.19 |
| Q4 FY23 | 3.62 | 6.05 |
| Q3 FY23 | 3.83 | 5.93 |
| Q2 FY23 | 3.81 | 6.7 |
| Q1 FY23 (beginning February 2022) | 3.75 | 8.29 |
| Total | 111.42 | 143.89 |

TPUs

| 20% CI | 80% CI |
|---|---|
| 1,250,000 | 1,800,000 |

| 20% CI | 80% CI |
|---|---|
| 0.2 | 0.6 |

| 20% CI | 80% CI |
|---|---|
| 0.1 | 0.9 |

| 20% CI | 80% CI |
|---|---|
| 1,670,000 | 2,400,000 |

| 20% CI | 80% CI |
|---|---|
| 0.5 | 0.8 |

| 20% CI | 80% CI |
|---|---|
| 0.5 | 0.9 |

| 20% CI | 80% CI |
|---|---|
| 1,000,000 | 1,500,000 |

Assumptions

Non-NVIDIA/Google chips: We only estimate quantities for NVIDIA AI accelerators and Google TPUs, because we assume that they make up a very large majority of AI hardware. This seems consistent with available information on other hardware manufacturers. NVIDIA is estimated to have a large majority of AI chip market share. AMD’s AI chip quarterly revenue only reached ~4% of NVIDIA’s in 2024 ($1 billion). Intel has an even smaller share, predicted to sell $500 million of its Gaudi 3 AI chips in 2024. Chinese companies such as Huawei are ramping up their AI chip efforts, but analysts generally still consider NVIDIA to be the market leader in China, despite US export controls preventing the sale of the highest-quality chips to China.

Several large tech companies, including Amazon, Meta, and Microsoft, are developing their own in-house AI chips. Amazon has said it will offer clusters of up to 100,000 of its “Trainium” chips. There currently isn’t public evidence that any of these companies have deployed their in-house chips at large scales.

Non-chip hardware: As noted above, we subtract NVIDIA’s networking revenue to find revenue from AI chips. However, the remainder may still include the value of some non-chip equipment, such as non-chip components in NVIDIA’s DGX servers. On the other hand, it is possible that some of this overhead is already included in estimates of the H100’s unit price.

Differences between NVIDIA chips: For simplicity, we assume that all NVIDIA AI chip revenue is from H100s (or, for 2022 sales, from A100s). In practice, some of these sales were other chips such as A100s (the flagship AI chip before the H100), H200s (a newer chip with the same peak compute as the H100 and improved memory and bandwidth), L40s (a lower-tier data center chip), and H800s and H20s (lower-quality chips sold to China).

There isn’t good public information breaking down NVIDIA sales by chip, but the large majority of sales have likely been H100s, consistent with projections from last year that NVIDIA would sell 2-2.5 million H100s in 2023 and 2024. The distinctions between these chips should have a small impact on our estimates if price-per-performance was similar across chip models (e.g. due to discounts on older chips like the A100). This is notably not true of the H20, but H20s are not a large share of overall sales.

Sales data vs chip ownership: NVIDIA’s sales data may not accurately reflect which end customer ultimately receives their chips, since some companies such as Supermicro are in the business of reselling NVIDIA chips/servers. This contributes to the upside uncertainty on each hyperscaler’s share of NVIDIA chips, since they may own chips that were purchased both directly and indirectly.