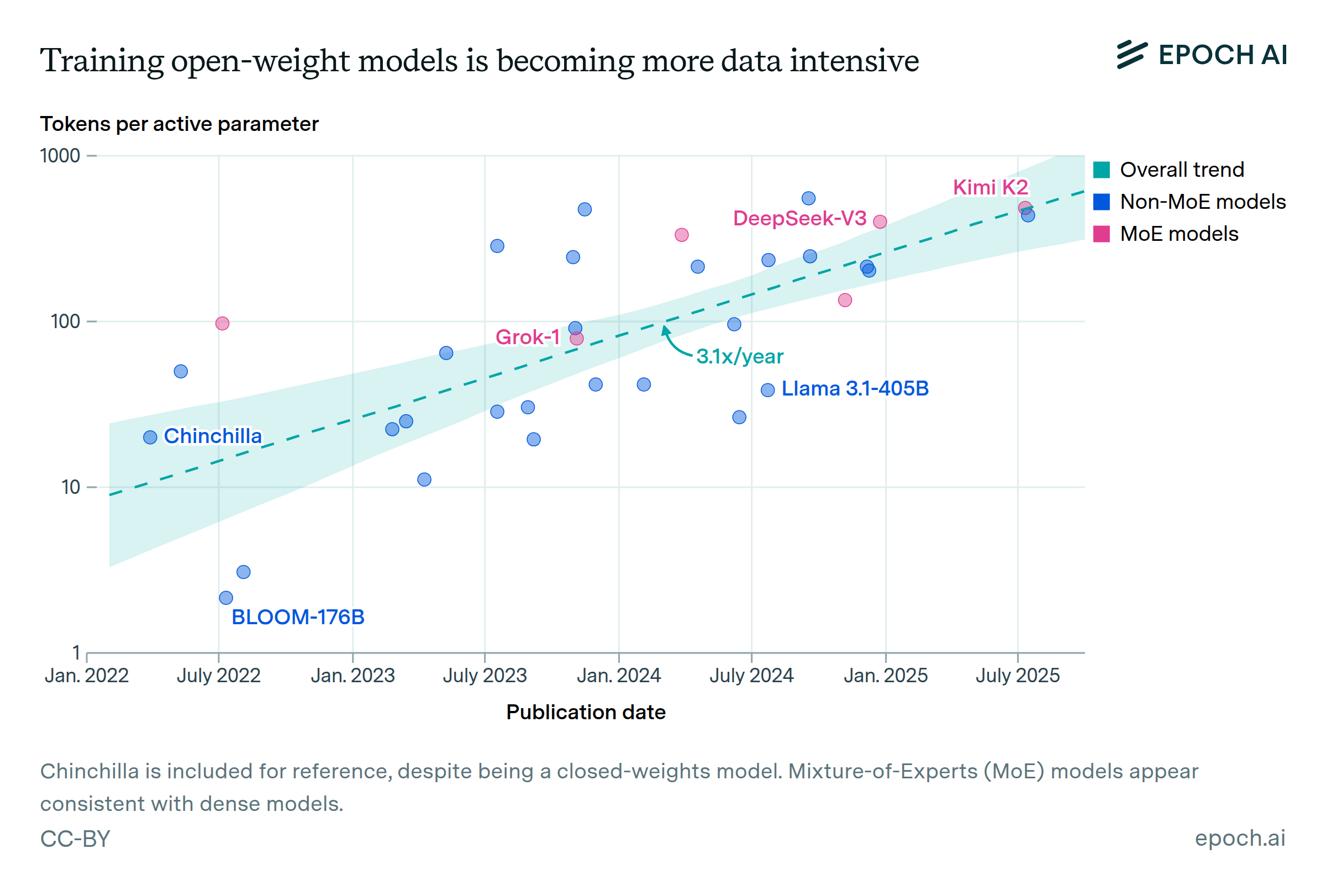

The size of datasets used to train language models doubles approximately every six months

Across all domains of machine learning, models are being trained on ever more data. In language modeling, training datasets are growing at a rate of 3.7x per year. The largest models currently use datasets with tens of trillions of words. The largest public datasets are about ten times larger than this: Common Crawl, for example, contains hundreds of trillions of words before filtering.
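As a quick check that a 3.7x annual growth rate matches the headline claim of doubling roughly every six months, the rate can be converted into a doubling time. This is a minimal sketch that assumes growth is steady and exponential over the year; the helper names `doubling_time_months` and `growth_over_months` are illustrative and not taken from the source.

```python
import math


def doubling_time_months(annual_growth_factor: float) -> float:
    """Months for a quantity to double, assuming a constant annual growth factor."""
    if annual_growth_factor <= 1:
        raise ValueError("growth factor must exceed 1 for the quantity to double")
    # Solve annual_growth_factor ** (t / 12) == 2 for t (in months).
    return 12 * math.log(2) / math.log(annual_growth_factor)


def growth_over_months(annual_growth_factor: float, months: float) -> float:
    """Multiplicative growth over `months`, assuming steady exponential growth."""
    return annual_growth_factor ** (months / 12)


# Annual growth factor of language-model training datasets quoted above.
ANNUAL_GROWTH = 3.7

print(f"Doubling time: {doubling_time_months(ANNUAL_GROWTH):.1f} months")
print(f"Growth over 6 months: {growth_over_months(ANNUAL_GROWTH, 6):.2f}x")
```

Running this prints a doubling time of about 6.4 months and a six-month growth factor of about 1.92x, consistent with datasets doubling approximately every six months.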

Published June 19, 2024