What did it take to train Grok 4?

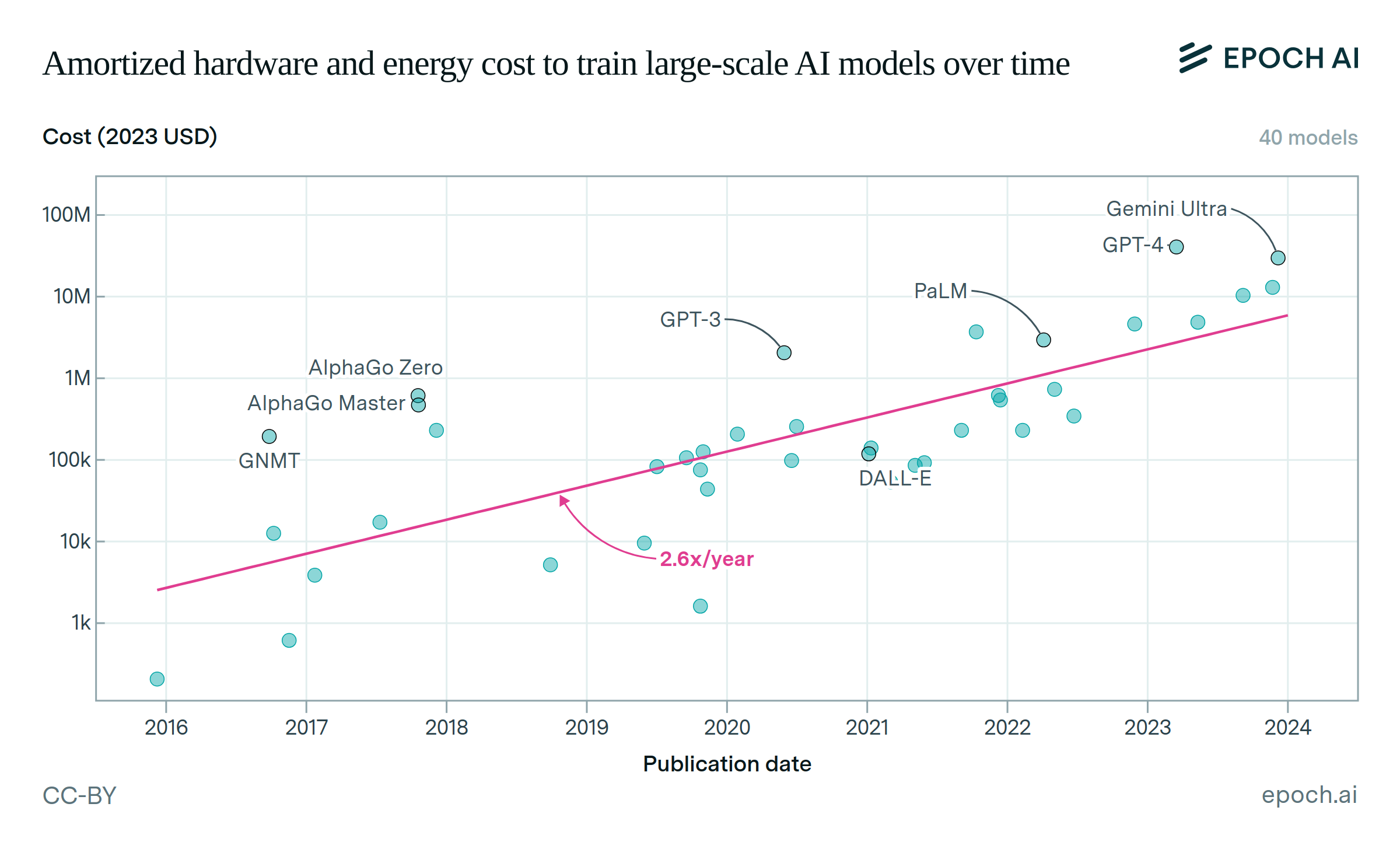

Training today’s leading AI models takes a lot of energy, emissions, water, and money. Consider Grok 4: the training compute cost about half a billion U.S. dollars and required enough energy to power a town of 4,000 Americans for a year. It also came with a large environmental footprint, emitting as much as a Boeing aircraft does over three years and requiring about 750 million liters of water for cooling and power generation. That’s enough to fill 300 Olympic-sized swimming pools.

These numbers do not even account for the cost of human labor, or the compute costs of running experiments and serving Grok 4 to users, all of which can be very significant. Needless to say, current frontier AI is a very expensive and resource-intensive endeavor.

Published

September 12, 2025

Overview

Training today’s frontier models requires substantial investments in the form of electricity, emissions, water, and money. We estimate the resources needed to train Grok 4 and contextualize them against other values. We estimate that training Grok 4 required 310 GWh of electricity, cost $490 million, used about 750 million liters of water, and emitted the equivalent of 150,000 tons of CO2.

Analysis

Power

We estimate that Grok 4 was trained using 246 million H100-hours, based on known details about Grok 3’s training resources and assumptions about how much training was scaled up for Grok 4. We assume xAI’s Colossus uses the SXM variant of the H100, which has a maximum power draw of 700 W. We also apply a factor of 2.03x for non-GPU hardware power usage (e.g. switches) and a factor of 1.2x for power usage effectiveness (PUE, which covers overheads such as cooling and power transformation). Finally, based on actual power draw figures, the average power draw during training is typically around three-quarters of peak power draw. Multiplying all of these figures gives us a final estimate of 310 GWh. See this notebook for more details.
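The multiplication described above can be reproduced in a few lines. This is a minimal sketch using only the figures quoted in this section. The function and constant names are illustrative and are not taken from the linked notebook.

```python
# Inputs quoted in the Power section; names are illustrative.
H100_HOURS = 246e6      # estimated H100-hours used for training
GPU_PEAK_WATTS = 700    # peak power draw of an H100 SXM, in watts
NON_GPU_FACTOR = 2.03   # overhead for non-GPU hardware (e.g. switches)
PUE = 1.2               # facility overhead (cooling, power transformation)
AVG_TO_PEAK = 0.75      # average draw as a fraction of peak draw


def training_energy_gwh(gpu_hours=H100_HOURS, peak_watts=GPU_PEAK_WATTS,
                        non_gpu=NON_GPU_FACTOR, pue=PUE,
                        avg_to_peak=AVG_TO_PEAK):
    """Return the total facility energy for the training run, in GWh."""
    watt_hours = gpu_hours * peak_watts * non_gpu * pue * avg_to_peak
    return watt_hours / 1e9  # Wh -> GWh


print(f"{training_energy_gwh():.1f} GWh")
```

With these inputs the product comes to roughly 315 GWh, which matches the 310 GWh headline figure when rounded to two significant figures.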

We compare this to the average yearly energy used per American, given that the United States consumes 94e15 BTU of energy per year and has 340 million residents. We also compare this to an average wind turbine, which produces 10 million kWh per year.
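As a rough check on these comparisons, the sketch below converts the national BTU figure to kWh per person and divides the training energy by it. The BTU-to-kWh conversion (about 3,412 BTU per kWh) is a standard constant rather than a figure from this article, and the variable names are illustrative.

```python
# Figures quoted in the text.
TRAINING_KWH = 310e6            # 310 GWh expressed in kWh
US_ENERGY_BTU_PER_YEAR = 94e15  # total yearly US energy consumption
US_POPULATION = 340e6
TURBINE_KWH_PER_YEAR = 10e6     # average wind turbine output

# Standard conversion factor (not from the article).
BTU_PER_KWH = 3412.14

kwh_per_american = US_ENERGY_BTU_PER_YEAR / US_POPULATION / BTU_PER_KWH
american_years = TRAINING_KWH / kwh_per_american
turbine_years = TRAINING_KWH / TURBINE_KWH_PER_YEAR

print(f"Energy per American: {kwh_per_american:,.0f} kWh/year")
print(f"Equivalent Americans for one year: {american_years:,.0f}")
print(f"Equivalent wind-turbine years: {turbine_years:.0f}")
```

Under these assumptions the training run works out to the yearly energy use of a little under 4,000 Americans, or about 31 turbine-years of wind generation.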

Cost

We calculate training cost by two methods, incorporating the cost of hardware and power.

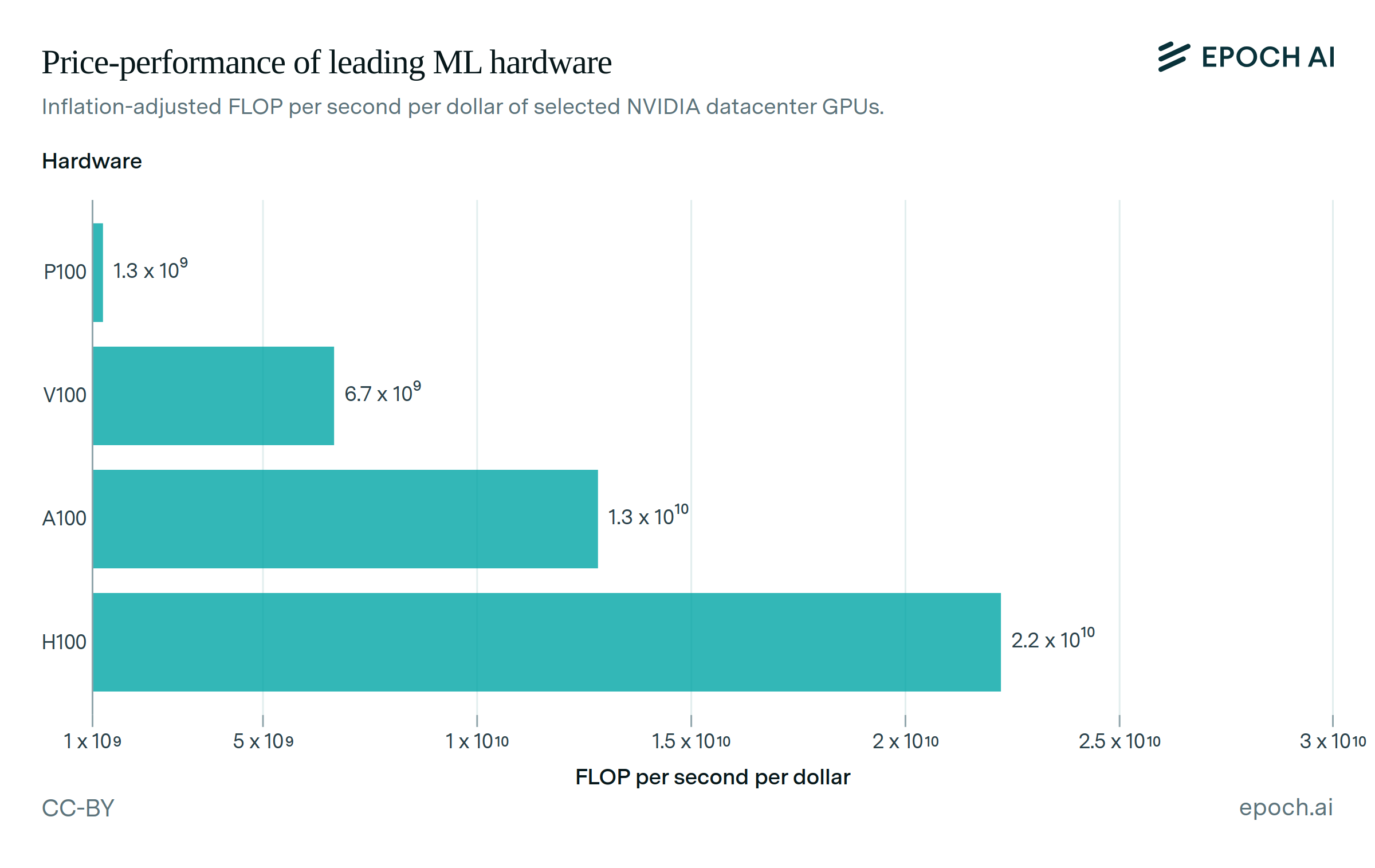

In the first, we use the estimated number of H100-hours and H100 rental costs, sampling the rental cost from a log-normal distribution with a 90% confidence interval of $1.90 to $2.20 per H100-hour. This gives a median cost estimate of $490 million.
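The rental-cost method can be sketched as a small Monte Carlo simulation. This is an illustration under stated assumptions, not the linked script: it holds the H100-hours fixed at the 246 million point estimate given in the Power section, treats the 90% interval as the 5th to 95th percentiles of the log-normal, and uses hypothetical function names.

```python
import math
import random
import statistics

H100_HOURS = 246e6  # point estimate from the Power section (held fixed here)
Z_95 = statistics.NormalDist().inv_cdf(0.95)  # about 1.645


def lognormal_params(low, high):
    """Return (mu, sigma) of a log-normal whose 5th/95th percentiles are low/high."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z_95)
    return mu, sigma


def sample_rental_costs(n=100_000, low=1.90, high=2.20, seed=0):
    """Sample total training cost in USD from the rental-price distribution."""
    rng = random.Random(seed)
    mu, sigma = lognormal_params(low, high)
    return [H100_HOURS * rng.lognormvariate(mu, sigma) for _ in range(n)]


costs = sample_rental_costs()
print(f"Median cost: ${statistics.median(costs) / 1e6:.0f}M")
```

With the hours held fixed, the median lands close to $500 million, in the same range as the $490 million reported above. The linked script may treat uncertainty in the inputs differently, which would shift the median slightly.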

In the second method, we use the rate of hardware improvements to estimate the horizon over which purchased GPUs depreciate, and prorate the hardware cost by the duration of the training run. We then add the power cost: we assume an electricity price distributed log-normally with a 90% confidence interval of $0.08 to $0.20 per kWh, and multiply it by our estimate of 310 GWh. Combining the hardware and power costs gives a median total cost estimate of $490 million. All cost calculations for Grok 4 can be found in this script.
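To give a sense of scale for the power component alone, the sketch below multiplies the electricity price range by the 310 GWh estimate. It does not attempt the hardware depreciation step, whose inputs are not stated in this section. It relies on the fact that the median of a log-normal with a symmetric quantile interval is the geometric mean of the interval's endpoints; the names are illustrative.

```python
import math

ENERGY_KWH = 310e6                   # 310 GWh expressed in kWh
PRICE_LOW, PRICE_HIGH = 0.08, 0.20   # 90% interval, USD per kWh


def lognormal_median(low, high):
    """Median of a log-normal whose symmetric quantile interval is (low, high)."""
    return math.sqrt(low * high)


def power_cost_usd(price_per_kwh, energy_kwh=ENERGY_KWH):
    """Electricity cost of the training run at a given price."""
    return price_per_kwh * energy_kwh


median_price = lognormal_median(PRICE_LOW, PRICE_HIGH)
for label, price in [("low", PRICE_LOW), ("median", median_price),
                     ("high", PRICE_HIGH)]:
    print(f"{label:>6}: ${power_cost_usd(price) / 1e6:.1f}M")
```

Under these assumptions electricity costs on the order of $25 million to $62 million, with a median near $39 million, so power is a small share of the total and hardware dominates.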

We compare this to the price of a large cruise ship ($1.2 billion).

Water

xAI’s Colossus datacenter appears to have been powered primarily by mobile natural gas turbine generators during the training of Grok 4. Across its lifecycle, natural gas power generation consumes an average of 158 gallons of water per MWh (about 0.6 liters per kWh). Additionally, datacenters consume on average 1.8 liters of water per kWh of electricity. Adding these two intensities and multiplying by our estimate of roughly 310 GWh of total electricity usage, we get an estimate of 754 million liters of water used for training.
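The water estimate can be reproduced as follows. This is a sketch with illustrative names: the gallon-to-liter conversion is the standard US gallon, and the Olympic pool volume of 2.5 million liters (50 m x 25 m x 2 m) is an assumption, since the article does not state the pool size it uses.

```python
LITERS_PER_US_GALLON = 3.785411784

GAS_WATER_GAL_PER_MWH = 158      # lifecycle water use of natural gas power
DATACENTER_WATER_L_PER_KWH = 1.8 # average datacenter water use
OLYMPIC_POOL_LITERS = 2.5e6      # assumed nominal pool volume


def water_liters(energy_kwh):
    """Total water use: power-generation water plus datacenter water."""
    gas_l_per_kwh = GAS_WATER_GAL_PER_MWH * LITERS_PER_US_GALLON / 1000
    return energy_kwh * (gas_l_per_kwh + DATACENTER_WATER_L_PER_KWH)


for label, kwh in [("rounded (310 GWh)", 310e6),
                   ("unrounded (314.6 GWh)", 314.6e6)]:
    liters = water_liters(kwh)
    pools = liters / OLYMPIC_POOL_LITERS
    print(f"{label}: {liters / 1e6:.0f} million liters, about {pools:.0f} pools")
```

Using the rounded 310 GWh gives roughly 743 million liters. The 754 million liter figure in the text appears to correspond to the unrounded energy estimate of about 315 GWh implied by the inputs in the Power section. Either way the total is close to 300 pools.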

We compare this to the average yearly amount of water used to irrigate a square mile of farmland in America, as well as to Olympic swimming pools.

Emissions

Grok 4 was trained on the xAI Memphis Colossus supercomputer, which relies on natural gas for power. Taking the number of kWh used to train Grok 4, we multiply by the emissions intensity of natural gas (0.49 kg CO2-eq per kWh) to estimate that training Grok 4 emitted the equivalent of 154,000 tons of CO2.

We compare this to the average yearly carbon footprint of 14.3 tons of CO2 per American. We also compare it to the yearly emissions of an average Boeing airplane, given lifetime emissions equivalent to one million tons of CO2 and an average lifespan of 25 years.
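The emissions estimate and both comparisons reduce to a few divisions, sketched below with the figures quoted in this section. The names are illustrative, and "tons" is taken to mean metric tons (1,000 kg), which is an assumption.

```python
ENERGY_KWH = 310e6              # 310 GWh expressed in kWh
GAS_KG_CO2E_PER_KWH = 0.49      # emissions intensity of natural gas
TONS_PER_AMERICAN_YEAR = 14.3   # average yearly footprint per American
BOEING_LIFETIME_TONS = 1e6      # lifetime emissions of an average airplane
BOEING_LIFESPAN_YEARS = 25


def training_emissions_tons(energy_kwh=ENERGY_KWH):
    """Training emissions in metric tons of CO2-equivalent."""
    return energy_kwh * GAS_KG_CO2E_PER_KWH / 1000  # kg -> metric tons


tons = training_emissions_tons()
american_years = tons / TONS_PER_AMERICAN_YEAR
boeing_years = tons / (BOEING_LIFETIME_TONS / BOEING_LIFESPAN_YEARS)

print(f"Emissions: {tons:,.0f} tons CO2-eq")
print(f"Equivalent yearly footprints of Americans: {american_years:,.0f}")
print(f"Equivalent years of one Boeing airplane: {boeing_years:.1f}")
```

With the rounded 310 GWh this gives about 152,000 tons; the 154,000 ton figure is what the same formula yields for the unrounded energy estimate of about 315 GWh. Both correspond to between three and four years of emissions from one airplane.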

All final calculations can be found here.

Assumptions

The FLOP and GPU-hour estimates for Grok 4 are largely based on public statements from xAI, which are often vague, so there is significant uncertainty around our point estimates for these quantities. We then estimate the energy consumption, water use, emissions, and cost from the estimated H100-hours used for training, via the assumptions and methods outlined in Analysis. We compare these to equivalent amounts of more easily contextualized quantities using the conversion factors cited in Analysis.