Global AI power capacity is now comparable to peak power usage of New York State

Total AI data center power capacity reached approximately 30 GW in the last quarter of 2025, comparable to peak power usage in New York State and above the peak demand of developed countries such as the Netherlands and New Zealand.

This estimate is based on the rated power capacity of leading AI chips sold over time. We apply a ~2.5x multiplier to estimate data center capacity, accounting for the added power requirements for servers, cooling, and infrastructure.

Published

January 16, 2026

Overview

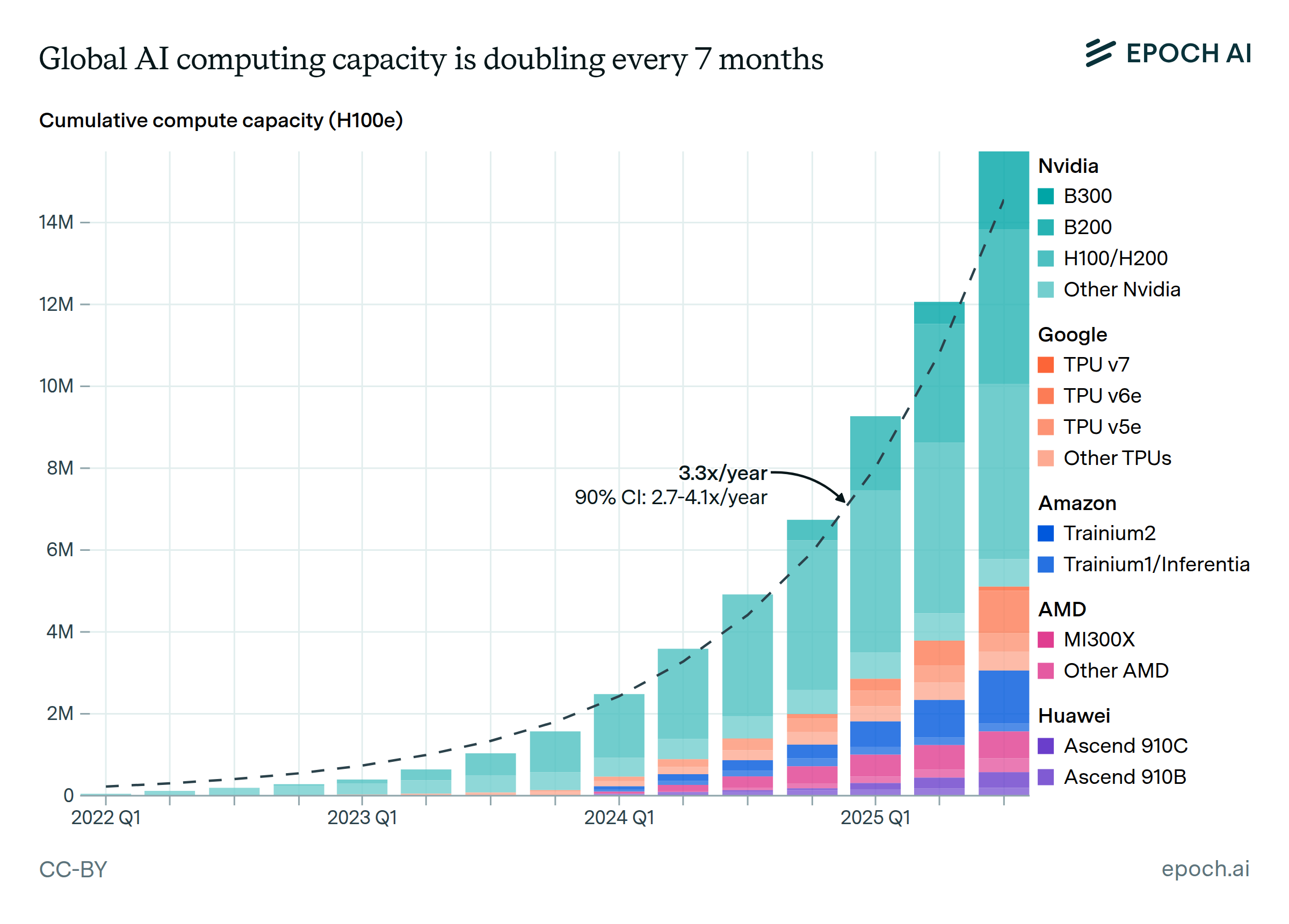

How much power do AI chips use, in total? We estimate that powering all of the AI chips sold by major manufacturers from 2022 through 2025, together with their supporting data center infrastructure, would require about 30 GW.

We arrive at this estimate from the quarterly sales volume of each chip model. We multiply each chip’s rated power capacity by the number sold, then apply a 2.5x overhead multiplier to account for non-chip power draw such as servers, networking, cooling, and other infrastructure.

Data

We use data from our AI chip sales database, which contains information on sales from major AI chip manufacturers including NVIDIA, Google, Amazon, AMD, and Huawei. We use the quantities of each type of chip sold, along with each chip’s rated thermal design power (TDP), to calculate the total power capacity of deployed AI chips.

The data spans 2022 Q1 through 2025 Q4, though we only have partial data for the earliest periods (2022 Q1–2023 Q1) and for 2025 Q4. To estimate the final value at the end of 2025 Q4, we extrapolate NVIDIA, Google, and AMD sales using their average growth rate since 2024 Q1.
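The extrapolation step can be sketched as follows. This is a minimal illustration, not the exact procedure used here: it assumes "average growth rate" means the geometric mean of quarter-over-quarter growth, and the sales figures and function names are hypothetical.

```python
def average_quarterly_growth(sales):
    """Geometric-mean quarter-over-quarter growth factor for a sales series."""
    if len(sales) < 2 or sales[0] <= 0:
        raise ValueError("need at least two quarters and a positive first value")
    periods = len(sales) - 1
    return (sales[-1] / sales[0]) ** (1 / periods)


def extrapolate_next_quarter(sales):
    """Project the next quarter by applying the average growth factor once."""
    return sales[-1] * average_quarterly_growth(sales)


# Hypothetical unit sales (thousands of chips), 2024 Q1 through 2025 Q3.
hypothetical_sales = [100, 120, 144, 172.8, 207.36, 248.832, 298.5984]
growth = average_quarterly_growth(hypothetical_sales)
projected_q4 = extrapolate_next_quarter(hypothetical_sales)
print(f"average growth factor: {growth:.3f}")
print(f"projected 2025 Q4 sales: {projected_q4:.1f}k units")
```

An arithmetic mean of the quarterly growth rates would be an equally plausible reading and would give a slightly different projection when growth is uneven.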

Analysis

Our underlying chip sales data is based on financial documents from major AI chip manufacturers. Reporting periods in these documents don’t always align with calendar quarters, so we standardize by estimating the amounts sold in each calendar quarter, assuming that sales within a reporting period are distributed uniformly.
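As a rough sketch of this standardization, the snippet below splits the units sold in one reporting period across calendar quarters in proportion to the number of days falling in each. The day-count weighting, the function names, and the example figures are assumptions for illustration.

```python
from datetime import date, timedelta


def calendar_quarter(d):
    """Return a label such as '2025Q1' for the calendar quarter containing d."""
    return f"{d.year}Q{(d.month - 1) // 3 + 1}"


def allocate_to_quarters(start, end, units):
    """Split units sold in [start, end] across calendar quarters by day count.

    Assumes sales are spread uniformly over the days of the reporting period.
    """
    total_days = (end - start).days + 1
    if total_days <= 0:
        raise ValueError("end must not precede start")
    days_per_quarter = {}
    day = start
    while day <= end:
        key = calendar_quarter(day)
        days_per_quarter[key] = days_per_quarter.get(key, 0) + 1
        day += timedelta(days=1)
    return {q: units * n / total_days for q, n in days_per_quarter.items()}


# Hypothetical fiscal quarter running from 1 February to 30 April 2025.
shares = allocate_to_quarters(date(2025, 2, 1), date(2025, 4, 30), 89_000)
for quarter, amount in shares.items():
    print(quarter, round(amount))
```

In this example, 59 of the 89 days fall in calendar Q1 and 30 fall in Q2, so the units are split in that proportion.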

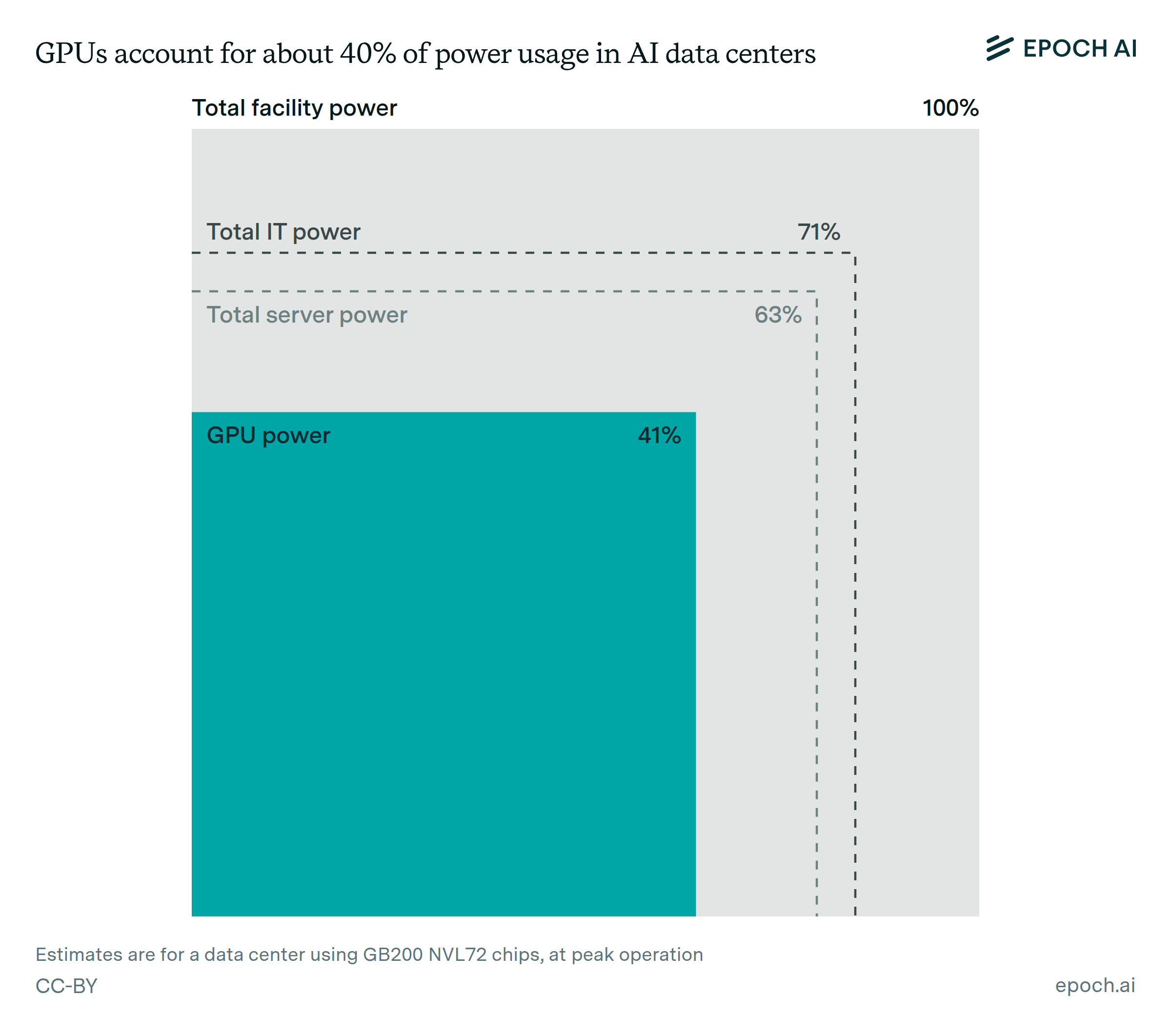

After obtaining quarterly sales quantities for each chip type, we multiply by each chip's thermal design power (TDP, the chip's maximum rated power draw) to get the total power drawn directly by AI chips. We then estimate the additional power needed to support these chips within data centers by multiplying by 2.5, giving a final quarterly total. The 2.5x multiplier accounts for networking, cooling, power distribution, and other infrastructure, so the result corresponds to the total power required by the facility (including non-IT uses).
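The calculation for a single quarter can be sketched as below. The chip names, unit counts, and TDP values are hypothetical placeholders, not figures from the dataset; only the 2.5x multiplier comes from the text.

```python
OVERHEAD_MULTIPLIER = 2.5  # facility-level multiplier stated in the text


def facility_power_gw(chip_sales, tdp_watts, overhead=OVERHEAD_MULTIPLIER):
    """Total facility power in GW for chips sold, given per-chip TDP in watts."""
    chip_watts = sum(units * tdp_watts[chip] for chip, units in chip_sales.items())
    return chip_watts * overhead / 1e9


# Hypothetical chip names, unit counts, and TDP values for illustration only.
tdp_watts = {"chip_a": 700, "chip_b": 1000}
quarterly_sales = {"chip_a": 1_000_000, "chip_b": 500_000}

added_gw = facility_power_gw(quarterly_sales, tdp_watts)
print(f"capacity added this quarter: {added_gw:.2f} GW")
```

With these placeholder inputs, the chips alone draw 1.2 GW, and the multiplier brings the facility total to 3 GW.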

We compare these values against the peak electrical demands of several jurisdictions:

- New York State, at around 31 GW (2025 estimate)

- Netherlands, at 19 GW (source)

- New Zealand, at 7 GW (2024 report)
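For a quick sense of scale, the comparison can be expressed as ratios. This sketch uses only the rounded figures quoted above; the variable names are illustrative.

```python
AI_CAPACITY_GW = 30  # approximate 2025 Q4 estimate from the text

# Peak electrical demand figures quoted in the list above, in GW.
peak_demand_gw = {
    "New York State": 31,
    "Netherlands": 19,
    "New Zealand": 7,
}

ratios = {name: AI_CAPACITY_GW / peak for name, peak in peak_demand_gw.items()}
for name, ratio in ratios.items():
    print(f"{name}: AI capacity is {ratio:.2f}x peak demand")
```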

Assumptions and limitations

- Our AI Chip Sales dataset covers the five largest AI chip manufacturers; however, we don’t have data on smaller manufacturers like Groq, Cerebras, and Intel. We do not believe the contributions from these companies would meaningfully change our analysis, and do not attempt to correct for their exclusion.

- Our cumulative series starts in 2022 Q1, so it omits chips sold before 2022 that were still part of AI data center capacity at that time. Given rapid growth and chip retirements, this has very little impact on our 2025 estimates, but it moderately understates the absolute values early in the series.

- TDP represents maximum rated power draw rather than actual consumption, which varies with workload and utilization. Our estimates therefore represent the power required if all chips ran simultaneously at full utilization.

- The 2.5x overhead multiplier used to calculate total power usage is an estimate based on a data center using GB200 NVL72 servers. Actual power usage effectiveness (PUE) will vary by facility.

- Q4 2025 figures include extrapolated values for some manufacturers, and actual totals may differ as complete data becomes available.