Introduction

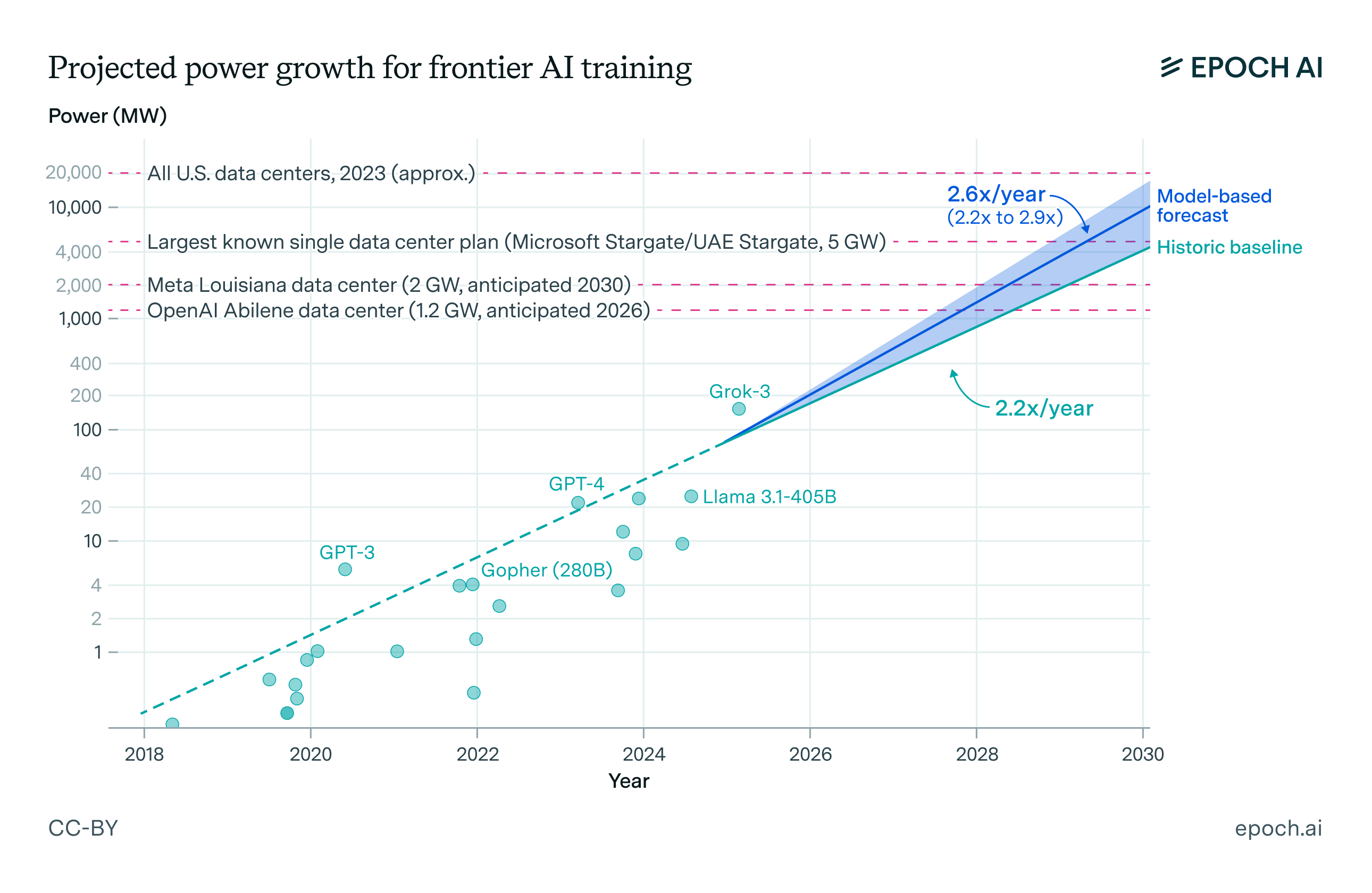

The electrical power required to train individual frontier AI models has been growing rapidly over time, driven by the growth in total training compute and the size of training clusters. Previously, we found that the power required to train a frontier model has been more than doubling every year. If trends continue, how high could these power demands become?

In a new white paper, “Scaling Intelligence: The Exponential Growth of AI’s Power Needs”, written in collaboration with EPRI, we analyze the factors driving power growth for frontier training, and forecast this growth out to 2030. We conclude that the largest individual frontier training runs in 2030 will likely draw 4-16 gigawatts (GW) of power, or enough to power millions of US homes.

Forecasting power demands using model training compute

Power demands for frontier training runs have historically grown at a rate of 2.2x per year, with the largest runs now exceeding 100 MW. This has primarily been driven by frontier training compute, which has been growing at 4-5x per year.

However, translating this compute growth trend into power demand requires dividing the compute growth rate by growth rates in two mitigating factors: hardware energy efficiency improvements, and growth in training run durations, which spreads compute costs over longer time periods and reduces power requirements.

We analyze trends in compute, efficiency, and duration and find that training compute and efficiency growth will likely continue, while duration growth will likely slow:

- Training compute scaling will likely continue at around 4-5x/year, despite recent advances in compute efficiency and the shift towards reasoning models. Scaling has historically existed alongside algorithmic efficiency improvements, and the reasoning paradigm will still incentivize further compute scaling in reinforcement learning.

  In the longer term, especially beyond 2030, compute scaling is the main source of uncertainty in training power trends, as upfront costs for frontier models reach or exceed hundreds of billions of dollars and energy requirements exceed the largest generation sources.

- Energy efficiency will likely continue on trend. The energy efficiency of leading AI GPUs has improved by 40% per year, while a broader set of GPUs used in ML has improved by 26% per year. This is broadly consistent with the decades-long trend in computing efficiency described by Koomey’s Law. The transition to training in lower-precision number formats will also likely contribute to efficiency gains.

- Training run duration growth will likely slow down. Historically, training run duration has grown by 26% to 50% per year, but with many training runs now exceeding 100 days this growth rate seems less sustainable, as the rapid pace of innovation in AI creates demand for short iteration cycles. We anticipate a slowdown to 10-20% growth in duration per year through 2030.

We combine these trends into a forecast of the power demand of the largest frontier models. However, there is some model uncertainty around the interplay of these trends: importantly, limits to training run duration could cause a slowdown in compute scaling, rather than motivating faster cluster and power growth. Due to this uncertainty, we also present an extrapolation of the historic baseline in frontier training power growth, which directly measures the power trend in training clusters, as opposed to trends in model training compute. See the paper and code for more details.
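The way these three trends combine can be illustrated with a minimal sketch. It assumes the simple relationship described earlier (power growth equals compute growth divided by efficiency growth and duration growth) and plugs in the ranges quoted in this article. The function name and the choice of corner cases are illustrative assumptions, not the paper's actual forecasting code, which models the uncertainty in more detail.

```python
def power_growth(compute_growth, efficiency_growth, duration_growth):
    """Annual power growth factor implied by three annual growth factors.

    Efficiency and duration growth both reduce the power needed for a
    given amount of training compute, so they appear in the denominator.
    """
    return compute_growth / (efficiency_growth * duration_growth)


# Illustrative corner cases built from figures quoted in this article:
# compute 4-5x/year, efficiency +26% to +40%/year, duration +10% to +20%/year.
low = power_growth(4.0, 1.40, 1.20)   # slowest compute, strongest mitigations
high = power_growth(5.0, 1.26, 1.10)  # fastest compute, weakest mitigations

print(f"Implied power growth: {low:.2f}x to {high:.2f}x per year")
```

These corner cases bracket a wider range than the forecast reported in this article, which is expected: combining every extreme at once is less likely than any single extreme, and the paper's forecast is a percentile interval rather than a worst-case envelope.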

Overall, we conclude that power demand for frontier training will likely grow by 2.2x to 2.9x per year in the coming years, implying that the largest training runs will reach 4-16 GW by 2030. The lower end of this range is consistent with already-announced plans for multi-GW data centers. Note that we do not model in detail the bottlenecks or constraints to supplying this amount of power. It is not certain that this much power growth is actually feasible by 2030, especially at the upper end of the uncertainty range (see note 1).
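As a rough consistency check on these figures, the sketch below asks how many years of constant growth it takes to get from today's largest runs to the 2030 range. The 0.1 GW baseline is an assumption that rounds the "exceeding 100 MW" figure quoted above; the helper name is hypothetical and this is not the paper's method.

```python
import math


def years_to_reach(base_gw, target_gw, annual_growth):
    """Years of constant multiplicative growth needed to go from base_gw to target_gw."""
    return math.log(target_gw / base_gw) / math.log(annual_growth)


BASE_GW = 0.1  # assumption: today's largest runs rounded to 100 MW

slow = years_to_reach(BASE_GW, 4.0, 2.2)   # lower growth rate, lower target
fast = years_to_reach(BASE_GW, 16.0, 2.9)  # higher growth rate, higher target

print(f"2.2x/year to 4 GW:  {slow:.1f} years")
print(f"2.9x/year to 16 GW: {fast:.1f} years")
```

Under these assumptions both cases work out to a little under five years of sustained growth, which is in line with a forecast horizon ending in 2030.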

Figure 1: Historic trend and forecast for the power demand of the largest individual frontier training runs. The shaded interval spans the 10th to 90th percentiles of our forecast based on trends in training compute, efficiency, and training run duration growth. The main source of upside uncertainty relative to the historic trend is whether limits to training duration motivate accelerated scaling in training hardware.

Implications for the energy sector

Frontier training is of special interest to the energy sector because it is a key driver of the scale of the largest data centers, which create large, localized power demands that are challenging to supply. However, there is evidence that distributed training across multiple geographic locations is becoming increasingly technically feasible: for example, Google DeepMind has already trained models across multiple data center campuses. This may enable training runs to grow beyond the power constraints of single sites. In any case, companies are still planning to build several multi-gigawatt data centers by 2030.

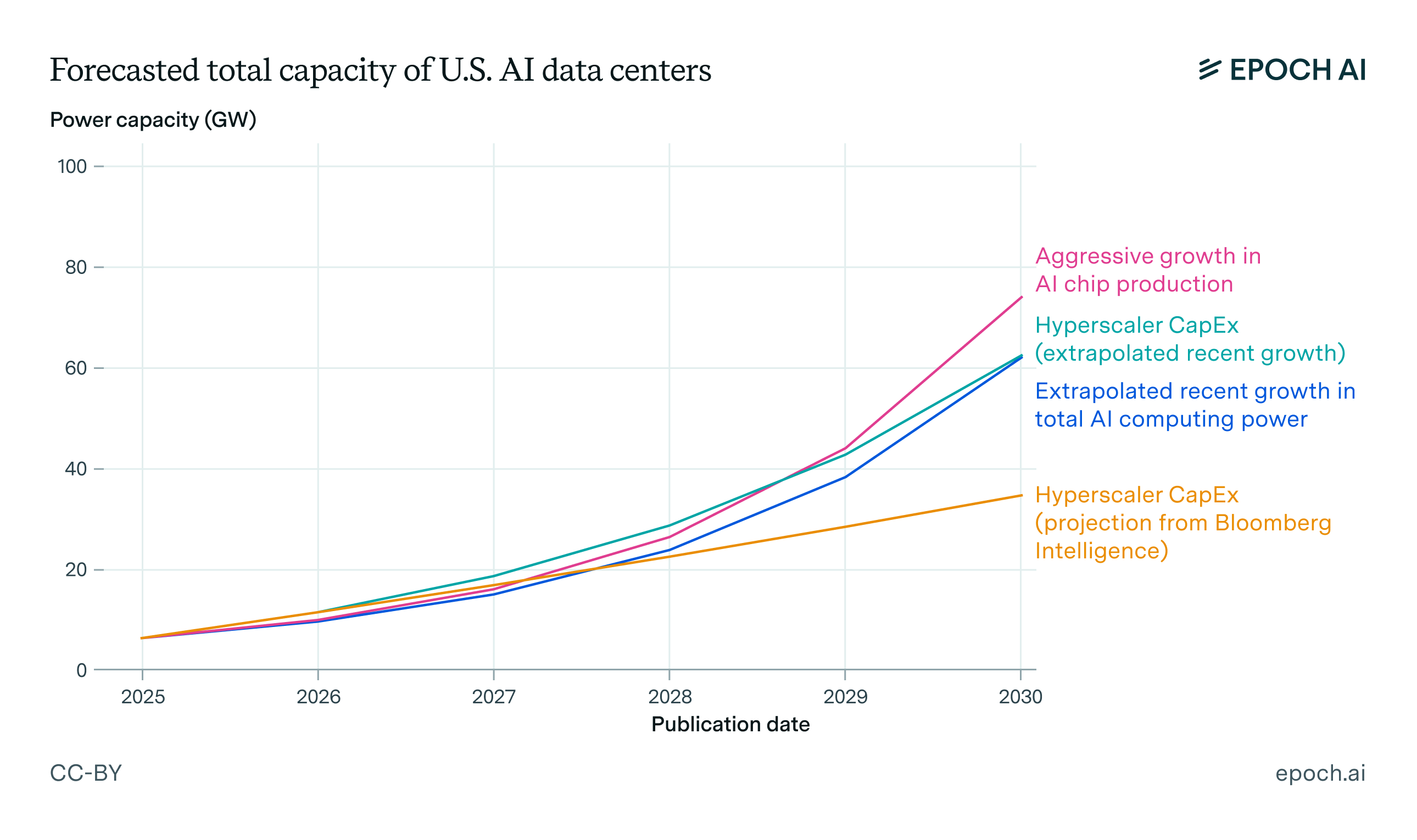

Additionally, we review evidence on how overall AI power capacity will grow based on growth rates in chip production and AI capital expenditures, as well as third-party estimates on data center growth. This places frontier training demands in context: individual gigawatt-scale training runs imply a much higher level of total AI data center capacity, given multiple frontier AI companies and compute demands for inference and experiments as well as training.

Overall, we find that >100 GW of total AI capacity worldwide and >50 GW in the US is plausible by 2030, which would approach 5% of the US’s total power generation capacity.

Figure 2: Projections of growth in total US AI data center capacity, based on several estimates or extrapolations from current trends, assuming the US maintains a 50% share of worldwide AI capacity. The current US baseline of 5 GW is an estimate. See the full report for details.
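To put the capacity figures above in terms of growth rates, here is a minimal sketch using the article's numbers: 100 GW worldwide, a 50% US share, and a 5 GW US baseline. The five-year horizon is an assumption, since the article does not state the baseline year, and the article's figures are lower bounds (">100 GW"), so the result should be read as a floor. The helper name is hypothetical.

```python
def implied_annual_growth(start_gw, end_gw, years):
    """Constant annual growth factor that takes start_gw to end_gw in `years` years."""
    return (end_gw / start_gw) ** (1.0 / years)


WORLD_GW_2030 = 100.0  # from the article (stated as a lower bound)
US_SHARE = 0.5         # from the Figure 2 caption
US_BASELINE_GW = 5.0   # from the Figure 2 caption (itself an estimate)
YEARS = 5              # assumption: baseline is roughly five years before 2030

us_gw_2030 = WORLD_GW_2030 * US_SHARE
growth = implied_annual_growth(US_BASELINE_GW, us_gw_2030, YEARS)

print(f"US AI capacity in 2030: {us_gw_2030:.0f} GW")
print(f"Implied growth: {growth:.2f}x per year")
```

A tenfold increase over five years corresponds to a growth factor of about 1.6x per year, well below the 2.2x to 2.9x per year forecast for the largest individual training runs.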

Currently, AI power demand is split roughly equally between training, experiments, and inference, with inference being naturally suited to distributed power demands. It is not clear how this split will evolve over time: this will depend on growth in inference demand, and on how inference demand affects the returns to further scaling up training.

To learn more, you can read the full paper here.

Notes

1. See “Can AI Scaling Continue Through 2030?” for an analysis of power bottlenecks for frontier training, where we conclude that 4x/year compute growth is possible through 2030, requiring over 5 GW of power.
