Today’s largest data center can do more than 20 GPT-4-scale training runs each month

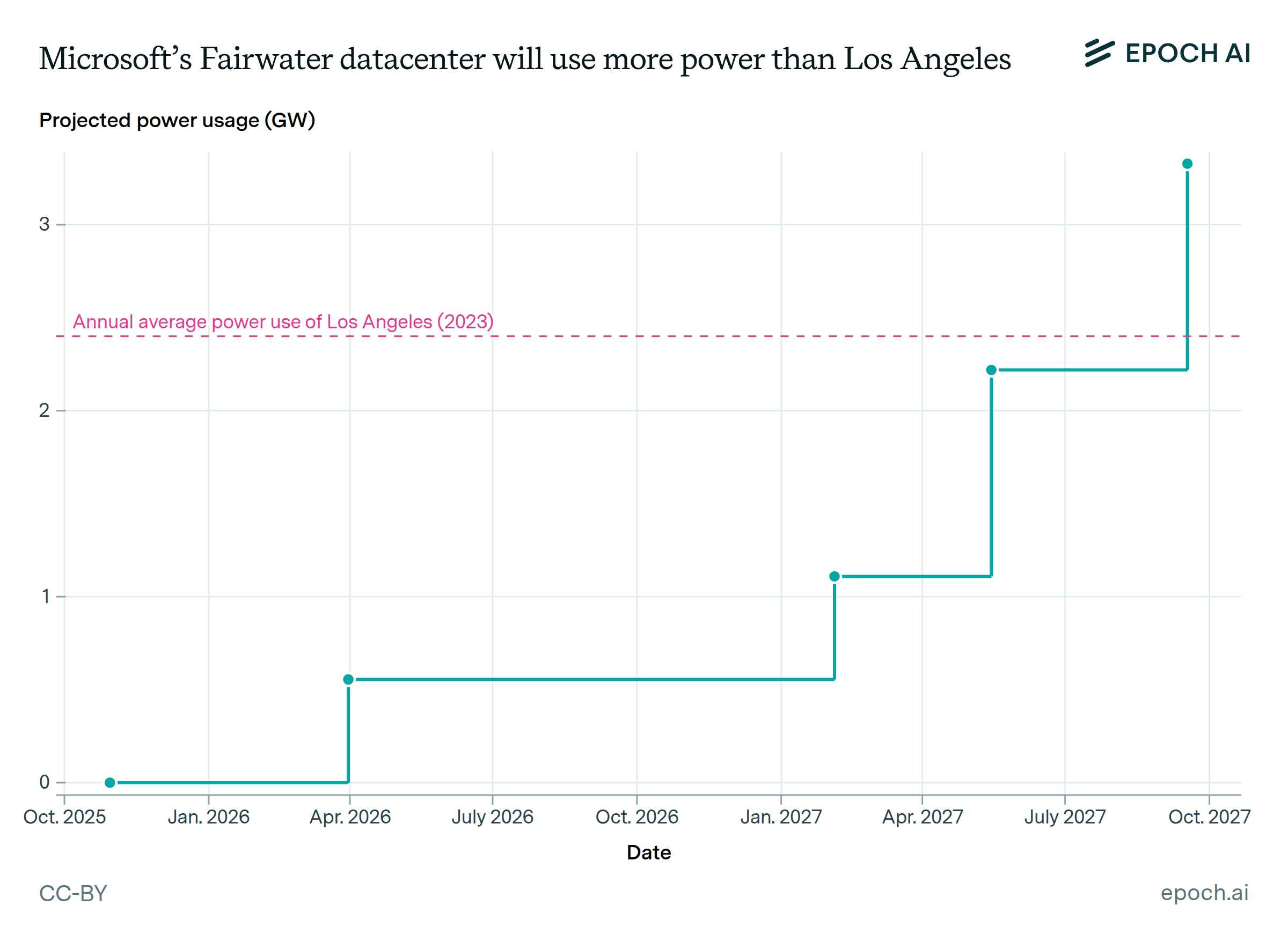

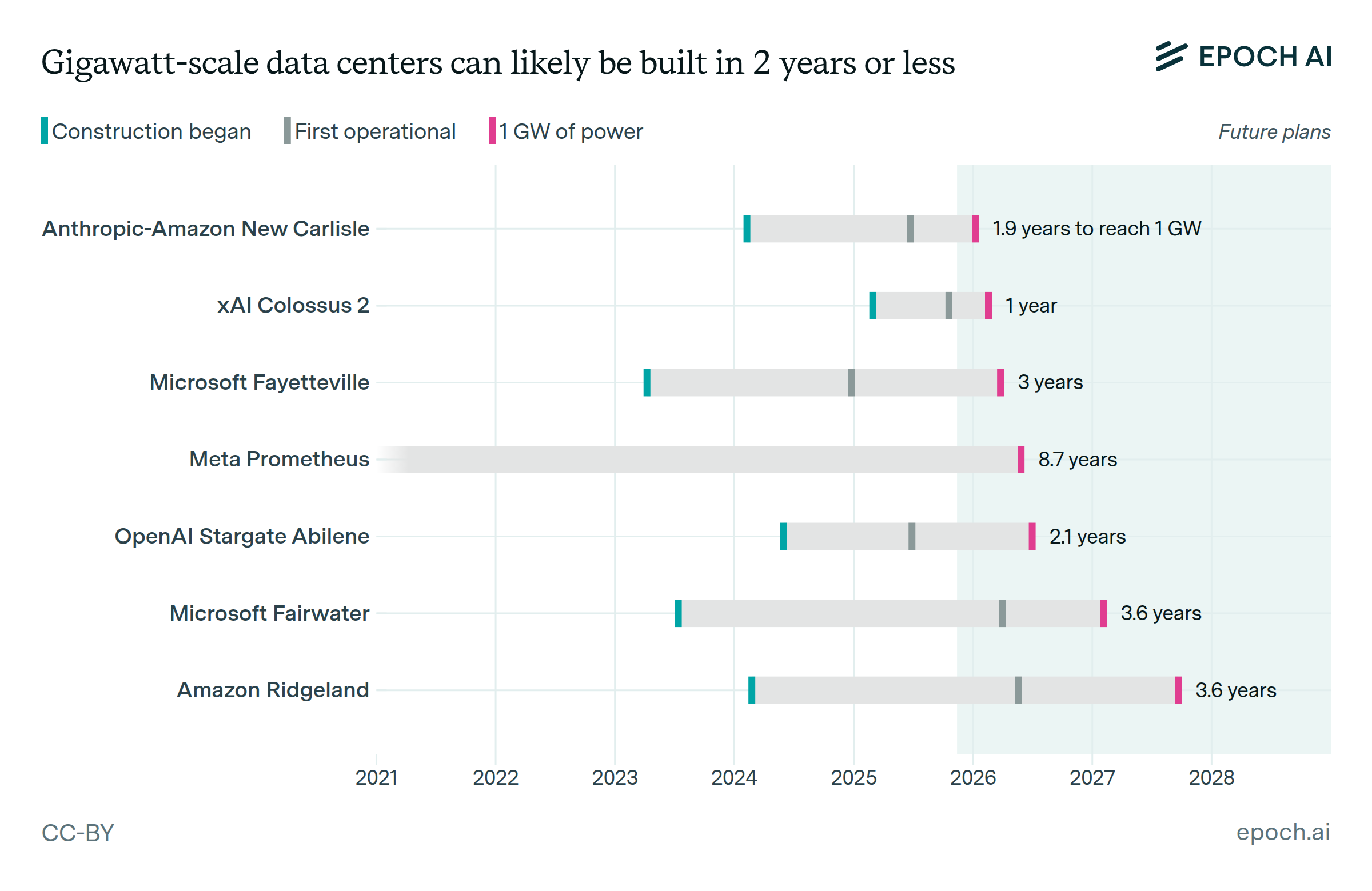

Microsoft’s Fairwater Atlanta data center is already capable of doing 23 training runs as large as GPT-4’s each month, and could do around 70 in the 90 to 100 days it took to train the original GPT-4. Larger data centers allow labs to significantly increase the scale and number of both experiments and final training runs.

xAI’s Colossus 2 will likely have more than doubled these numbers by the end of 2026. By the end of 2027, we expect Microsoft’s Fairwater Wisconsin site will be able to do over 225 GPT-4-scale training runs each month.
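The figures above can be cross-checked with simple arithmetic. The sketch below rescales the monthly rate to the 90 to 100 day window and compares the 2027 projection to today's rate. The 30-day month and the helper name `runs_in_window` are assumptions made for illustration, not definitions taken from the analysis.

```python
# Figures quoted in the text.
RUNS_PER_MONTH_ATLANTA = 23
RUNS_PER_MONTH_WISCONSIN_2027 = 225  # stated as "over 225", so a lower bound

# Assumption: a 30-day month; the text does not define the month length.
DAYS_PER_MONTH = 30.0


def runs_in_window(runs_per_month, window_days, days_per_month=DAYS_PER_MONTH):
    """Rescale a monthly run count to a window of `window_days` days."""
    return runs_per_month * window_days / days_per_month


# GPT-4's original training took roughly 90 to 100 days.
low = runs_in_window(RUNS_PER_MONTH_ATLANTA, 90)
high = runs_in_window(RUNS_PER_MONTH_ATLANTA, 100)

# How much larger the 2027 projection is than today's monthly rate.
growth = RUNS_PER_MONTH_WISCONSIN_2027 / RUNS_PER_MONTH_ATLANTA

print(f"Runs in 90 to 100 days: {low:.0f} to {high:.0f}")
print(f"Projected growth factor by end of 2027: at least {growth:.1f}x")
```

Under these assumptions the window holds roughly 69 to 77 runs, in line with the "around 70" quoted above, and the 2027 projection is just under ten times today's monthly rate.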

Published: December 4, 2025

Learn more

Methodology

We combine training compute and training duration estimates for GPT-4 from our AI Models database with computational power estimates from our Frontier AI Data Centers database to calculate how many parallel GPT-4-sized runs could be completed in one month, using the largest data center at each date. To compare directly against GPT-4’s training setting, we calculate our numbers using 16-bit precision performance.
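As a rough illustration of this kind of calculation, the sketch below divides the compute a data center can deliver in a month by the compute of one training run. It is a simplified model under stated assumptions, not the exact procedure used here: the function names, the `utilization` parameter, the 30-day month, and all example numbers are hypothetical placeholders rather than values from either database.

```python
import math

SECONDS_PER_DAY = 86_400


def runs_per_month(datacenter_flop_per_s, run_training_flop,
                   utilization=1.0, days_per_month=30.0):
    """Training runs of a given size that fit into one month of compute.

    datacenter_flop_per_s: 16-bit computational power of the data center.
    run_training_flop: total training compute of one run, in FLOP.
    utilization: fraction of peak performance actually achieved (assumption).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    usable_flop = (datacenter_flop_per_s * utilization
                   * days_per_month * SECONDS_PER_DAY)
    return usable_flop / run_training_flop


def runs_per_month_via_parallel(datacenter_flop_per_s, run_training_flop,
                                run_duration_days, utilization=1.0,
                                days_per_month=30.0):
    """Same quantity, counted as parallel runs that each take the full duration."""
    # Average throughput one run needs to finish in run_duration_days.
    per_run_flop_per_s = run_training_flop / (run_duration_days * SECONDS_PER_DAY)
    parallel_runs = datacenter_flop_per_s * utilization / per_run_flop_per_s
    # Each parallel slot completes days_per_month / run_duration_days runs per month.
    return parallel_runs * days_per_month / run_duration_days


# Hypothetical placeholder inputs, chosen only to exercise the functions.
example_power = 1e20      # FLOP/s, placeholder
example_run_flop = 2e25   # FLOP, placeholder
example_utilization = 0.3 # placeholder

direct = runs_per_month(example_power, example_run_flop, example_utilization)
parallel = runs_per_month_via_parallel(example_power, example_run_flop,
                                       run_duration_days=95,
                                       utilization=example_utilization)
print(direct, parallel, math.isclose(direct, parallel))
```

In this simplified model the run duration cancels out of the monthly count: running many runs in parallel for 90 to 100 days each yields the same runs per month as dividing total monthly compute by the compute of one run.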

You can learn more about how we estimate current and future data center specs on our Frontier AI Data Centers documentation page.