AI and explosive growth redux

The debate around the macroeconomic effects of AI has shown no sign of convergence.

On the one hand, renowned economists like Daron Acemoglu estimate that AI will increase US GDP by less than 2% over ten years. On the other hand, others argue that AI could plausibly drive “explosive growth”, with gross world product (GWP) growth rates north of 30% per year.

So who’s right? To shed light on this debate, we recently released the Growth and AI Transition Endogenous (GATE) model, an integrated assessment model of AI automation designed to bridge the gap between economists and AI practitioners. But while we discussed how the model is laid out on a technical level, we’ve yet to detail how to interpret the model’s predictions, and our most substantial takeaways from the model.

As such, in this post we’ll explain our two biggest updates from our work on GATE. Importantly, these are qualitative updates – the model was designed to provide high-level qualitative insights, not make precise quantitative predictions:

- Significant AI-driven growth accelerations happen more easily than we thought: Skeptics of explosive growth often point to Baumol effects as a major constraint on growth, but GATE simulations suggest that these effects are even weaker than anticipated. Furthermore, introducing additional bottlenecks (e.g. labor frictions, investor uncertainty and R&D externalities leading to underinvestment) did less than we expected to dampen these growth accelerations.

- Massive AI investments could be justified, even compared to today’s level: Forget $500B investments in AI infrastructure – GATE simulations suggest that much higher investments in AI development could easily be justified, given the massive increases in consumption associated with explosive growth.

With these considerations in mind, we find it hard to rationalize views that place high confidence in explosive growth being impossible. The same is true for views that consider explosive growth essentially a certainty. The goal of this post is to articulate and argue for a “third way” position: be optimistic about the economic growth effects of AI, but keep your confidence level moderate.

1. Significant growth accelerations more plausible than we thought, as Baumol effects are overrated

If we could only draw one lesson from the literature on explosive growth, it would be this: AI labor is much more accumulable than human labor, and the increase in the size of the labor force associated with widespread AI deployment is what drives explosive growth across a range of standard economic growth models.1
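To make the accumulability point concrete, here is a minimal toy sketch. It is not the GATE model: it uses a plain Cobb-Douglas economy with constant returns to scale, and every parameter value (savings rate, capital share, depreciation, the split of investment between capital and AI labor) is an assumption chosen only for illustration. It compares a world where labor is fixed with one where investment can also expand the labor force.

```python
def simulate_growth(years, labor_accumulable, savings_rate=0.3, alpha=0.35,
                    depreciation=0.05, labor_investment_share=0.5):
    """Return yearly output growth rates for a toy Cobb-Douglas economy.

    Hypothetical illustration only; all parameter values are assumptions
    and none of them come from GATE.
    """
    capital, labor = 1.0, 1.0
    outputs = []
    for _ in range(years + 1):
        # Constant returns to scale in capital and labor.
        output = capital ** alpha * labor ** (1.0 - alpha)
        outputs.append(output)
        investment = savings_rate * output
        if labor_accumulable:
            # "AI labor" can be built up through investment, like capital.
            capital += (1.0 - labor_investment_share) * investment - depreciation * capital
            labor += labor_investment_share * investment - depreciation * labor
        else:
            # Human labor is fixed, so only capital accumulates.
            capital += investment - depreciation * capital
    return [outputs[t + 1] / outputs[t] - 1.0 for t in range(years)]


fixed_labor_growth = simulate_growth(200, labor_accumulable=False)
accumulable_labor_growth = simulate_growth(200, labor_accumulable=True)
print(f"Final-year growth, fixed labor:       {fixed_labor_growth[-1]:.4%}")
print(f"Final-year growth, accumulable labor: {accumulable_labor_growth[-1]:.4%}")
```

With labor fixed, diminishing returns to capital push growth towards zero. Once both factors can be accumulated, the same economy sustains a constant positive growth rate, even without increasing returns to scale. How high that rate is depends entirely on the assumed parameters.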

Since GATE also captures this dynamic, we expected that it would predict explosive growth due to AI automation.2 But we were surprised by just how quickly explosive growth seems to arise in the model, and by how robust the finding of explosive growth is.

This surprising observation is driven by the fact that the fraction of labor tasks automated by AI doesn’t need to be very high to yield very large growth impacts. Consider the following plot, based on our default simulation parameters3:

Here we see that even when AIs have only automated 30% of all economically useful tasks, economic growth rates may exceed 20%. Achieving the strict bar for explosive growth (>30%) is harder, however, requiring roughly 50-70% of tasks to be automated. Nevertheless, we were surprised to see growth effects this large well before full automation.4

Of course, how soon this major growth acceleration occurs depends on the extent to which different labor tasks bottleneck each other, via standard “Baumol effects”. In GATE, the strength of this bottlenecking effect is controlled by the “substitution parameter” across tasks, ρ < 0, and becomes progressively stronger as this parameter decreases further below zero. As we saw earlier, our default estimate of ρ = -0.65 results in massive growth accelerations in the first five years of simulation, but even introducing much more stringent bottlenecks typically fails to preclude this.5
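As a rough illustration of how a negative substitution parameter creates bottlenecks, the sketch below uses a generic constant-elasticity-of-substitution (CES) aggregate over a unit mass of tasks. This is a simplified stand-in, not GATE's actual production function: the function name `ces_output`, the equal task weights, and the assumption that human labor reallocates evenly across the remaining tasks are all ours.

```python
def ces_output(automated_share, ai_input, rho=-0.65, human_labor=1.0):
    """Toy CES aggregate over a unit mass of equally weighted tasks.

    Assumptions (ours, not GATE's): automated tasks each receive `ai_input`
    units of AI labor, and human labor is spread evenly over the tasks that
    are not yet automated. Requires rho < 0 and ai_input > 0.
    """
    if not 0.0 <= automated_share < 1.0:
        raise ValueError("automated_share must be in [0, 1)")
    human_share = 1.0 - automated_share
    human_per_task = human_labor / human_share
    total = human_share * human_per_task ** rho
    if automated_share > 0.0:
        total += automated_share * ai_input ** rho
    return total ** (1.0 / rho)


def output_ceiling(automated_share, rho=-0.65):
    """Limit of ces_output as ai_input grows without bound (human_labor = 1)."""
    return (1.0 - automated_share) ** (1.0 / rho - 1.0)


for rho in (-0.65, -2.0):
    for share in (0.3, 0.5, 0.7):
        print(f"rho={rho:+.2f}, automated share={share:.0%}: "
              f"output ceiling = {output_ceiling(share, rho):.2f}x baseline")
```

In this toy setup, output stays capped by the non-automated tasks no matter how much AI labor is added, and a more negative `rho` lowers that cap. The cap still rises steeply as the automated share grows, which matches the intuition that partial automation can have large effects even with bottlenecks in place.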

These default dynamics are of course far too aggressive to be plausible. For example, we consider it extremely unlikely that the model’s default prediction of 23%/year growth in 2027 will turn out to be correct. So when developing GATE we considered what important dynamics we were missing that might “tame” the model’s predictions.

As a result, we introduced three add-ons (not activated in the default model simulations) that account for forces that may temper our findings:

- R&D wedge: This accounts for positive externalities in AI development, where AI developers might not capture all the value of their R&D efforts, and hence choose to invest less than in our baseline scenario.

- Investor uncertainty: This captures how uncertainty about what degrees of AI automation are attainable may result in uncertainty about the returns to investment, dampening AI investments.

- Labor reallocation frictions: This accounts for job switching costs due to automation, pessimistically assuming that no human labor reallocation across tasks occurs, thus boosting the strength of bottlenecks emerging from yet to be automated tasks.

But even after jointly activating all three add-ons, our simulations still predict explosive growth around the point of full automation of all economically valuable tasks. Moreover, while these add-ons make the dynamics less aggressive, the result is still far from a “business as usual” scenario – at the point that 40% of tasks are automated, GATE still predicts 12% GWP growth rates, on par with peak GDP growth rates observed in East Asian economies during the 20th century.

GATE A is the result of default simulations, whereas GATE B is the default but modified to account for positive externalities in AI development, investor uncertainty, and labor reallocation frictions.6

Of course, one could still argue that the model misses core economic dynamics, and this is no doubt true – there’s simply no way that a single integrated assessment model will capture all potential bottlenecks. But the more we introduce bottlenecks to tame the model, the more there’s a question of whether we’re forcing the model to produce a particular desired outcome.7

All in all, we’ve updated towards a view that explosive growth may occur in a wider range of scenarios than we’d previously anticipated.

2. We underestimated just how much the world could be underinvesting in AI today

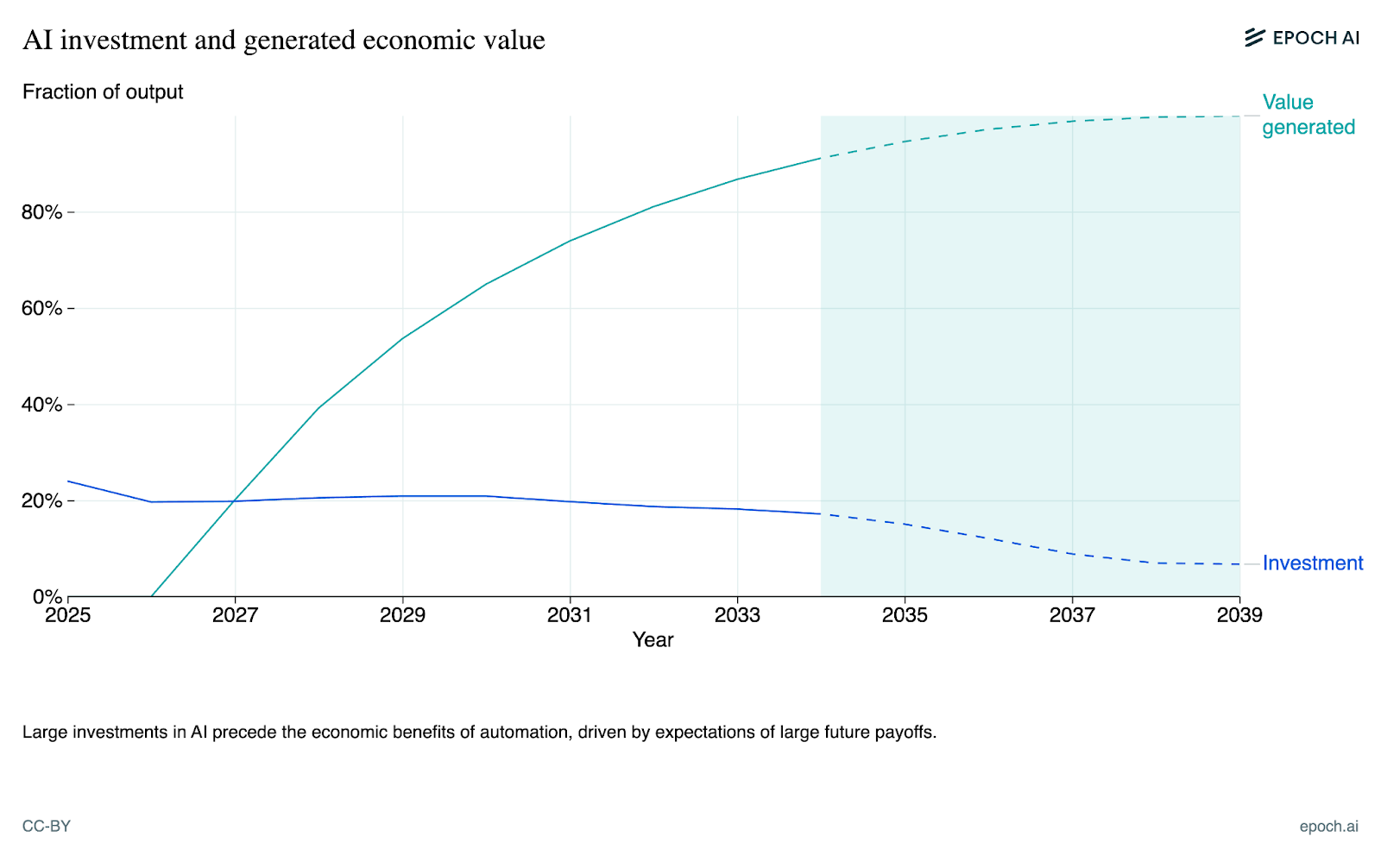

One driver of GATE’s predictions of explosive growth is that the model envisions truly vast amounts of AI investment. For instance, simulation results suggest that optimal investment in AI for the year 2025 amounts to $25 trillion! This makes existing $500 billion investment commitments such as OpenAI’s Stargate project seem puny.

Needless to say, this raises several puzzles. For starters, how does GATE arrive at such an aggressive allocation of investments? A big part of the story boils down to the huge amounts of value that could be generated from increased AI automation. In particular, current worldwide labor compensation is on the order of $50T, much of which would be captured by AI via full automation. For such an enormous amount of value, it could be economically justified to make huge upfront investments, speeding up the arrival of full automation.8 If this is even close to being right, current AI investments are too conservative rather than being driven by a “bubble”, as is often proclaimed.
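A back-of-the-envelope sketch can show why large upfront investments might pay off. It is not the calculation GATE performs. It treats the roughly $50T of annual labor compensation as a constant value flow that becomes available once full automation arrives, and asks how much bringing that arrival forward is worth in present-value terms. The 4% discount rate and the arrival dates are purely illustrative assumptions, and the sketch ignores growth, partial automation, and how much of the value investors could actually capture.

```python
import math


def value_of_earlier_arrival(annual_flow, early_years, late_years, discount_rate=0.04):
    """Present value of receiving `annual_flow` from `early_years` instead of `late_years`.

    Uses continuous discounting of a constant flow. All inputs other than the
    $50T figure used below are hypothetical assumptions for illustration.
    """
    if not 0.0 <= early_years <= late_years:
        raise ValueError("need 0 <= early_years <= late_years")
    discount_early = math.exp(-discount_rate * early_years)
    discount_late = math.exp(-discount_rate * late_years)
    # Integral of annual_flow * exp(-r * t) over t from early_years to late_years.
    return annual_flow * (discount_early - discount_late) / discount_rate


LABOR_COMPENSATION_TRILLIONS = 50.0  # order-of-magnitude figure from the text
gain = value_of_earlier_arrival(LABOR_COMPENSATION_TRILLIONS, early_years=10, late_years=15)
print(f"Value of arriving 5 years sooner: about ${gain:.0f}T in present value")
```

Under these assumptions, bringing full automation forward from 15 years out to 10 years out is worth on the order of $150T in present value, several times the $25T investment figure. The result is sensitive to the assumed discount rate and timelines, so it should be read as an illustration of the logic rather than an estimate.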

But skeptics might ask: If this is right, why aren’t people actually investing $25T in AI this year? Several reasons come to mind. One may be the presence of positive externalities – individual AI firms may only be able to capture a small fraction of AI’s economic benefits. But this doesn’t seem like the full story – for one, when we introduce the aforementioned “R&D wedge”, the initial 2025 investment barely changes. Introducing investor uncertainty on top of this does make a substantial difference, but the resulting investment still vastly exceeds the levels observed today.9

Other reasons might include expectations about future regulatory barriers to AI adoption or punitive taxation of excess returns to AI investment. There is also uncertainty about the cost of automating certain tasks (e.g. physical tasks that require robotics), as well as behavioral mechanisms such as ambiguity aversion, normalcy bias or conformism. Of course, it is also possible that GATE is misspecified in important ways that are observable to investors.

Overall, it’s not entirely clear to us why there is such a large difference between GATE’s investment predictions and currently observed levels. There are many potential explanations for the discrepancy (since the model does not fully adhere to reality), but it is hard to pin down the main culprit. Nonetheless, we think that the results of our GATE simulations should prompt at least some update towards current investments being suboptimally low (though this needs to be weighed against the negative externalities from AI development as well).

A blow against the skeptics, many blows against the overconfident

Of course, there are many factors that the GATE model doesn’t fully capture. This raises valid concerns about how much we can trust the model’s predictions and how much we should update in light of them. However, we think there are several considerations that push in the direction of a meaningful update based on our simulation results.

The first is that criticism of GATE comes from both directions. Skeptics have pointed out that we don’t explicitly model bottlenecks like the diffusion of technologies into the economy, as well as robotics that are required for the automation of manual tasks. The model also omits considerations of market power and imperfect competition, which may overstate the scale-up of the digital labor force and hence the expansion of output.

On the other hand, some AI researchers argue that the model is far too conservative. The model of AI R&D could be much more aggressive, allowing for a “software intelligence explosion”, where AI systems recursively self-improve without needing a continued expansion of physical compute or rapid economic growth.10 Moreover, GATE does not model growth in total factor productivity; including it would presumably make the predicted growth impacts more substantial still.

Overall, these updates suggest to us that those who are confidently either extremely skeptical or extremely bullish about an unprecedented growth acceleration due to AI are likely miscalibrated.11 On both the inside and outside views, credible cases can be made both for and against explosive growth. Existing models, GATE included, are likely to be misspecified and make at least some predictions that deviate substantially from real-world observations, as GATE does with investment. Moreover, their predictions are sensitive, albeit less than we originally believed, to the values of key parameters.

That said, we are increasingly puzzled by the views of highly confident AI skeptics, currently dominant in the economics profession. We have taken a standard macroeconomic model, expanded it to include key AI engineering features and calibrated it using the available evidence and expert opinion. We then employed this machinery to perform simulations, and more often than not we find significant growth accelerations due to AI up to and including explosive growth. This leaves us finding the positions of confident skeptics very difficult to rationalize.

Moreover, in our work we have tried to account for many of the objections raised by the skeptics (e.g. positive externalities in AI development, Baumol effects) and our bullish growth findings seem quite robust. All in all, while GATE still has several limitations, we believe there should still be at least some update towards significant growth accelerations due to AI. We thus would welcome engagement with our work from professional economists, as we believe homing in on our key disagreements will help advance what has become a stagnant and entrenched debate.

Notes

1. In the context of Erdil and Besiroglu 2023, having a much more accumulable labor force due to AI enables you to take advantage of increasing returns to scale in production. Even with constant returns to scale, the rate at which AI labor can be grown could be sufficient to hit the bar of explosive economic growth.

2. More specifically, increases in the joint AI-human labor force in GATE largely arise due to scale-ups in AI chip production, improvements in chip performance, and improvements in AI algorithms.

3. These “default” parameter values were chosen based on the available empirical evidence and expert opinion. For more details see the GATE technical paper.

4. Note that this refers to the point where AI systems are capable enough to perform any labor task, not when they actually perform all of them.

5. The specific calculation for this is in Appendix D.1 of the GATE technical paper.

6. In particular, the GATE B scenario has the following parameter settings: R&D wedge = 10, investor uncertainty (with a 50% chance of at most 30% automation, and a 50% chance of at most 100% automation being attainable), and labor reallocation frictions.

7. This is also why we only include these more conservative dynamics as optional add-ons to the model, rather than present in default simulations. We initially set out to analyze the high-level predictions of this endogenous growth model, rather than force it to produce specific quantitative results.

8. See Sevilla et al. 2024 for an example calculation that illustrates this point more mathematically.

9. We determine this by modifying default simulations to include both the R&D wedge (with a value of 10) and investor uncertainty (with 50% chance of at most 30% automation, and 50% chance of at most 100% automation being attainable). Including both of these add-ons changes the initial AI investment from 24% of GWP to around 15%, which is a substantial decrease but still far higher than the levels observed today.

10. Note that the term “software intelligence explosion” is often taken to mean a range of different things. This can be mathematically operationalized in GATE by allowing for multiple orders of magnitude of software efficiency increase over a year, which is technically possible but requires allowing the entire economy to grow at absurdly high rates (e.g. >1000% per year). As a result, we say that the software intelligence explosion is not possible in GATE, at least not as conceived in popular descriptions (where AI systems become rapidly better, without necessarily involving much of an increase in economic growth rates).

11. For example, the extremely skeptical position could refer to something like <1% chance on >30% growth per year being possible by the end of the century. The extremely bullish position could refer to something like >99% chance for the same outcome.