Explosive growth from AI: A review of the arguments

Our new article examines why we might (or might not) expect growth on the order of ten-fold the growth rates common in today’s frontier economies once advanced AI systems are widely deployed.

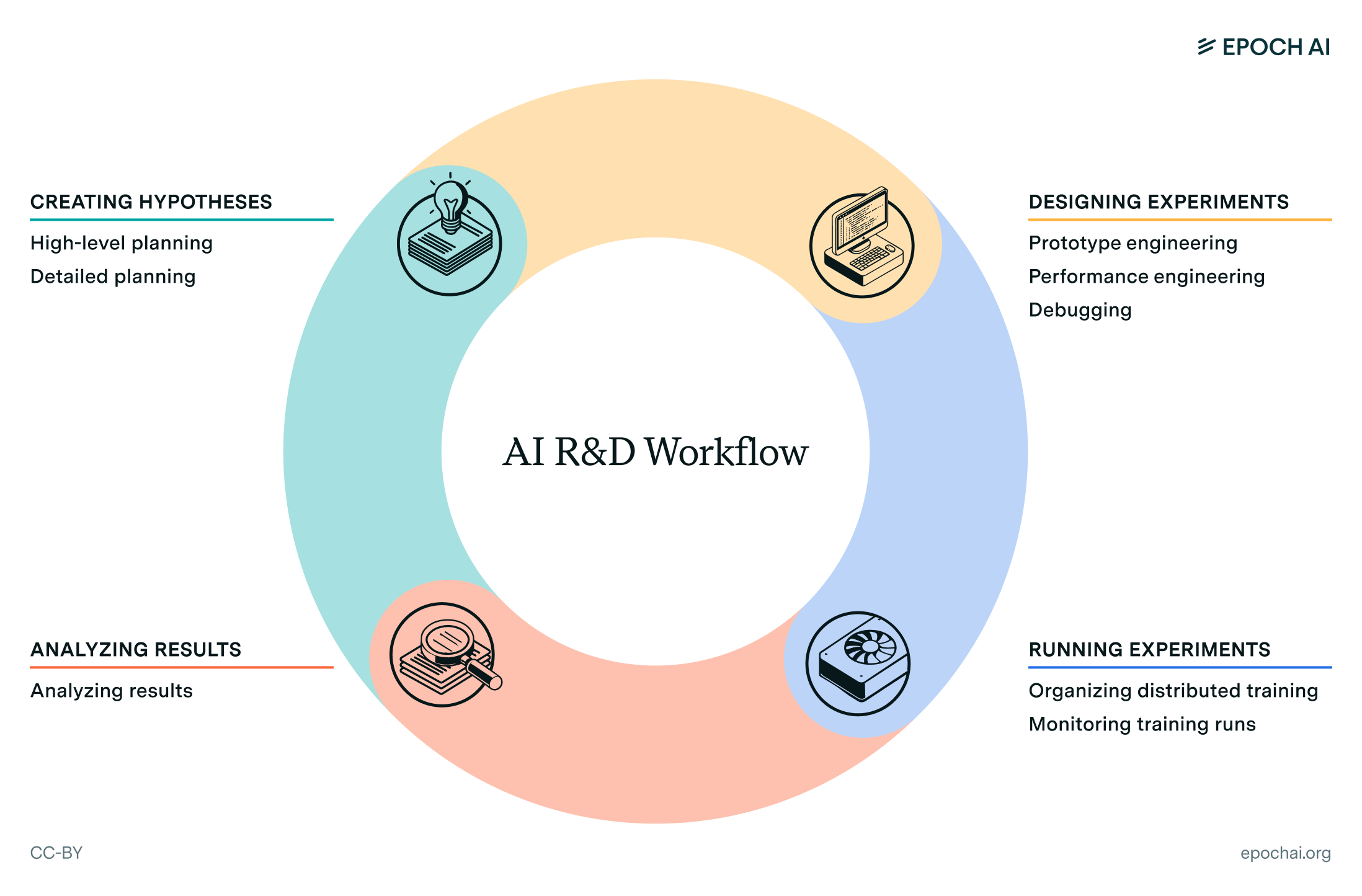

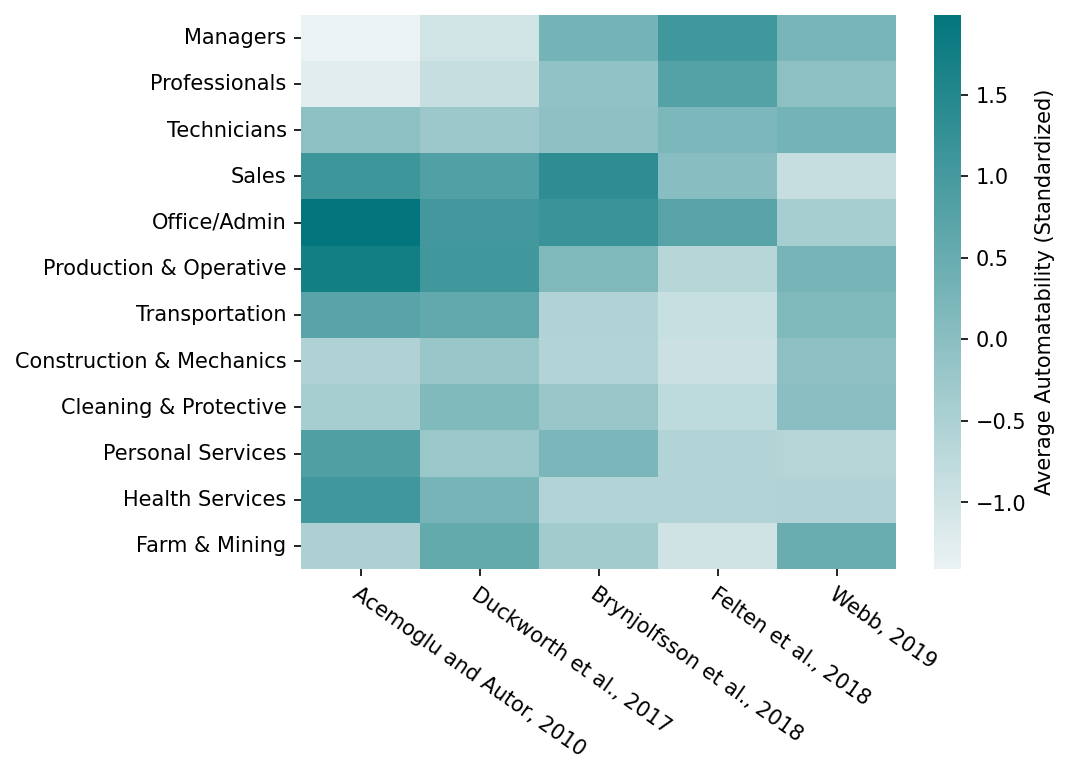

The extent to which AI can automate economically valuable tasks is perhaps the most important measure of the capabilities of AI systems. As we have previously investigated, the rapid automation of such tasks has the potential to accelerate economic growth and technological development. We think the potential for explosive growth is a critical factor underlying AI’s transformative impact on society. Yet questions remain about whether and why such extreme accelerations could occur and, if they do, how long they could last.

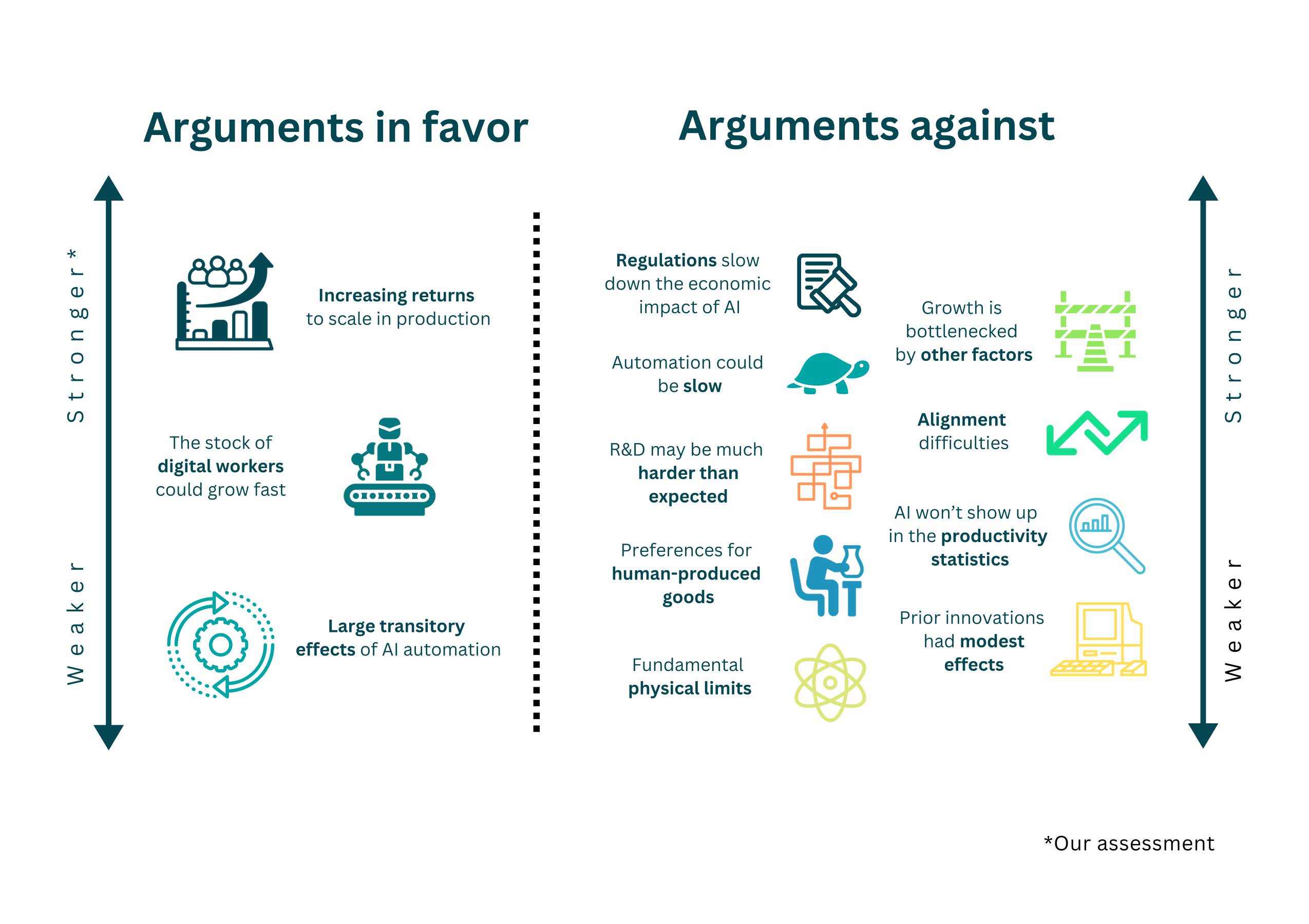

In our new article, available as a preprint on arXiv, we take stock of the key arguments for why we might or might not expect growth that is on the order of ten-fold the growth rates common in today’s frontier economies once advanced AI systems are widely deployed. We spell out these arguments in detail and tentatively assess their force. We aim to elucidate why certain mechanisms—such as regulation, the R&D challenges with developing capable AI, or constraints on other inputs—could influence growth rates in specific directions. While we don’t provide explicit growth rate estimates for each argument, we analyze how extreme several parameters must be for certain bottlenecks to apply.

Our work highlights a few key themes:

Growth theory models predict explosive growth. Standard and well-established growth theory models often predict explosive growth by default when AI can substitute for human labor on most or all tasks in the economy.

Regulation could restrict AI-driven growth. Intense regulation of AI and various restraints (arising from political economy or risk-related concerns) could be sufficient to prevent massive accelerations in economic growth and technological advancement. Still, such restraints might be unlikely to hold in light of the formidable incentives for development and deployment among diffuse actors, along with the decreasing costs of AI development due to algorithmic advances.

Many arguments against explosive growth are weak. Many arguments opposing AI-driven explosive growth lack robust evidence. For example, claims about physical limits or non-scalable factors bottlenecking growth often don’t withstand scrutiny, given that current resource usage is far from these theoretical limits.

It is difficult to rule out explosive growth from AI, but that this should happen is far from certain. We think that the odds of widespread automation and subsequent explosive growth by the end of this century are about even. Yet, high confidence in explosive growth still seems unwarranted, given numerous plausible counterarguments, and the lack of watertight models of economic growth.

Why we might see explosive growth from AI

Currently, labor is likely the only key economic input that cannot readily be scaled up in line with the growth of the economy. Capital (machines, buildings) and the quality of technology can be scaled up proportionally in this way (with the caveat that technology requires labor, which, again, is hard to scale). It is partly because of this that fast economic growth is unlikely without automation: we are bottlenecked by the population growth rate. Automating human labor with ‘digital workers’, then, effectively means that all key economic inputs can be accumulated readily and continuously with additional spending.

Moreover, standard R&D-based growth models further predict that the economy exhibits increasing returns to scale: doubling all inputs more than doubles output. This is because scaling up the economy results in innovation, which raises the level of productivity across the board.

So, what happens when labor is automated? If automated labor can contribute even partially to improving technology, all key inputs to the economy (labor, capital and ‘ideas’) will be able to grow in line with the economy itself. Given increasing returns to scale, this accelerates growth: inputs are scaled up in proportion to output, but each proportional increase in inputs yields a more-than-proportional increase in output. Such basic economic models therefore predict accelerating growth if labor is fully automated.
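The acceleration mechanism can be illustrated with a deliberately minimal simulation. This is a toy sketch, not the model used in the article: it assumes output is `Y = A * L`, omits capital, lets ideas accumulate with diminishing returns (`phi < 1`), and uses parameter values that are hypothetical and chosen only for illustration. With human labor, `L` grows at a fixed population rate `n`; with automated labor, new ‘digital workers’ are bought with a fixed share `s` of output.

```python
def simulate(years, ai_labor, s=0.01, n=0.01, nu=0.02, lam=1.0, phi=0.5):
    """Return yearly output growth rates for a toy economy with Y = A * L.

    All parameter values are hypothetical and purely illustrative.
    """
    A, L = 1.0, 1.0  # technology level and labor stock, both normalized to 1
    growth = []
    for _ in range(years):
        Y = A * L
        # Ideas: more workers produce more ideas, with diminishing returns in A.
        new_A = A + nu * (L ** lam) * (A ** phi)
        if ai_labor:
            # Labor is accumulable: a share s of output buys new digital workers.
            new_L = L + s * Y
        else:
            # Labor is tied to an exogenous population growth rate.
            new_L = L * (1.0 + n)
        growth.append(new_A * new_L / Y - 1.0)
        A, L = new_A, new_L
    return growth


human = simulate(80, ai_labor=False)
automated = simulate(80, ai_labor=True)
print(f"human labor:     {human[0]:.1%} -> {human[-1]:.1%}")
print(f"automated labor: {automated[0]:.1%} -> {automated[-1]:.1%}")
```

Under these assumed parameters, the human-labor economy stays at roughly 3% yearly growth, while the growth rate of the automated-labor economy keeps rising, because the labor stock now expands with output instead of with population.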

While this is a robust implication of one of the most compelling theories of economic growth, the argument provides strong but not conclusive evidence. It is plausible, for example, that current growth rates are shaped by additional bottlenecks beyond the fact that the labor stock is not currently ‘accumulable’, or that new bottlenecks may emerge shortly after AI substitutes for human labor. Other factors, such as institutions, also matter for growth, and this story does not take them into account.

The stock of AI systems that substitute for human workers could grow very fast once such systems have become technically feasible. We show that if the future price of computation is even just on par with today’s prices, and the computational costs of running AI are similar to the computational cost of the human brain, an economy would see a fast expansion of the stock of digital workers. The key intuition is that if AI workers are cheap, additional income is spent disproportionately on expanding the stock of digital workers, pushing the growth rate of the stock of ‘effective workers’ in frontier economies into double-digit percentages.

However, there is substantial uncertainty about the computational costs of running AI systems that are suitable substitutes for human labor, because the requirements for computational throughput are highly uncertain, and the extent of additional costs, such as robotic hardware, is unclear. Moreover, price effects from an expansion in demand for computing hardware could drive prices up, though technological improvements in computing hardware are very likely to deliver substantial price reductions too.
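The intuition about cheap digital workers, and its sensitivity to cost, can be made concrete with a back-of-the-envelope calculation. The sketch below is not the article’s calculation: the function name and all dollar figures are hypothetical placeholders, and it ignores hardware depreciation and price effects. It only shows how the growth rate of the worker stock depends on the ratio between what a worker produces and what an additional digital worker costs.

```python
def digital_worker_growth(output_per_worker, reinvest_share, cost_per_worker):
    """Yearly growth rate of the digital-worker stock.

    Each worker produces `output_per_worker` per year; a fraction
    `reinvest_share` of that output is spent on new digital workers,
    each costing `cost_per_worker`. All inputs are hypothetical.
    """
    return reinvest_share * output_per_worker / cost_per_worker


# Hypothetical scenario: adding a digital worker is cheap relative to output.
cheap = digital_worker_growth(100_000, 0.05, 25_000)
# Hypothetical scenario: compute plus robotics make each worker expensive.
expensive = digital_worker_growth(100_000, 0.05, 500_000)

print(f"cheap digital workers:     {cheap:.0%} per year")
print(f"expensive digital workers: {expensive:.0%} per year")
```

With these placeholder numbers the stock grows by about 20% per year in the cheap case and about 1% per year in the expensive case, which is why the uncertainty about running costs matters so much for this argument.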

AI automation has the potential to significantly boost economic output, even if it doesn’t automate 100% of tasks. This is particularly true if the automation occurs rapidly, within a short time frame such as less than ten years. Even with substantial ‘bottlenecks,’ where human input is still required, simple models suggest that rapid automation could lead to a short-term surge in growth, termed “explosive growth.”

It’s unclear how strong this argument is. It is quite uncertain how much more productive AI might be in the tasks that it automates. AI systems could be superhuman along many dimensions in the tasks where they displace labor (such as access to information, processing speed and learning abilities), but the economic gains from such advantages are currently unknown. Additionally, automation could unfold over a longer timeline, spreading out its impacts. Finally, the actual fraction of tasks that AI can automate may not be large enough to induce explosive levels of growth.

Why we might not see explosive growth from AI

Regulations could include restrictions on large training runs, prohibitions on uncertified AI systems performing certain tasks, limits on data access, and so on. The objection that regulations could impede AI’s economic impact gains much of its credence from the role of scaling laws in AI development. These laws indicate that AI performance scales with the amount of training data and computational resources, and restrictions on large training runs could be enforceable given their physical footprint. Intellectual property laws, if interpreted restrictively, could curb data access and incentives for AI development. Overall, the large scale of resources needed makes regulating AI development more practical than regulating many other technologies. This lends credibility to the notion that the right regulatory regime could substantially slow or even halt rapid advances in AI systems.

However, while regulations could slow the pace of AI development and deployment, they are unlikely to halt it indefinitely. The potential economic and strategic benefits of AI provide governments strong and lasting incentives to promote its advancement. Continued algorithmic progress and hardware efficiency gains will also make training AI systems more affordable over time, and widen the set of actors capable of developing and deploying AI, undermining regulatory restrictions. Though regulatory roadblocks may emerge, the forces propelling AI forward will be difficult to stop entirely.

The historical record of regulating technologies that could substantially boost output is limited, because few such transformational technologies have previously been developed. The closest possible analogues are the cluster of technologies introduced during the Neolithic and Industrial Revolutions. There is little compelling evidence to suggest that these technologies could have been effectively regulated in a way that would not just delay but substantially dampen their effects. So, while regulations may delay AI’s economic impact, it’s unlikely these would spread out its effects sufficiently to prevent major growth accelerations from AI automation.

Developing increasingly capable AI systems today often requires increasing computational resources and data. Developing advanced AI systems capable of automating a large number of tasks in the economy might require a truly vast amount of computational resources and data, which can only be amassed gradually. If so, full automation of the economy would take a considerable amount of time, spreading out its economic impact and reducing the likelihood of a sudden acceleration in growth and technological change.

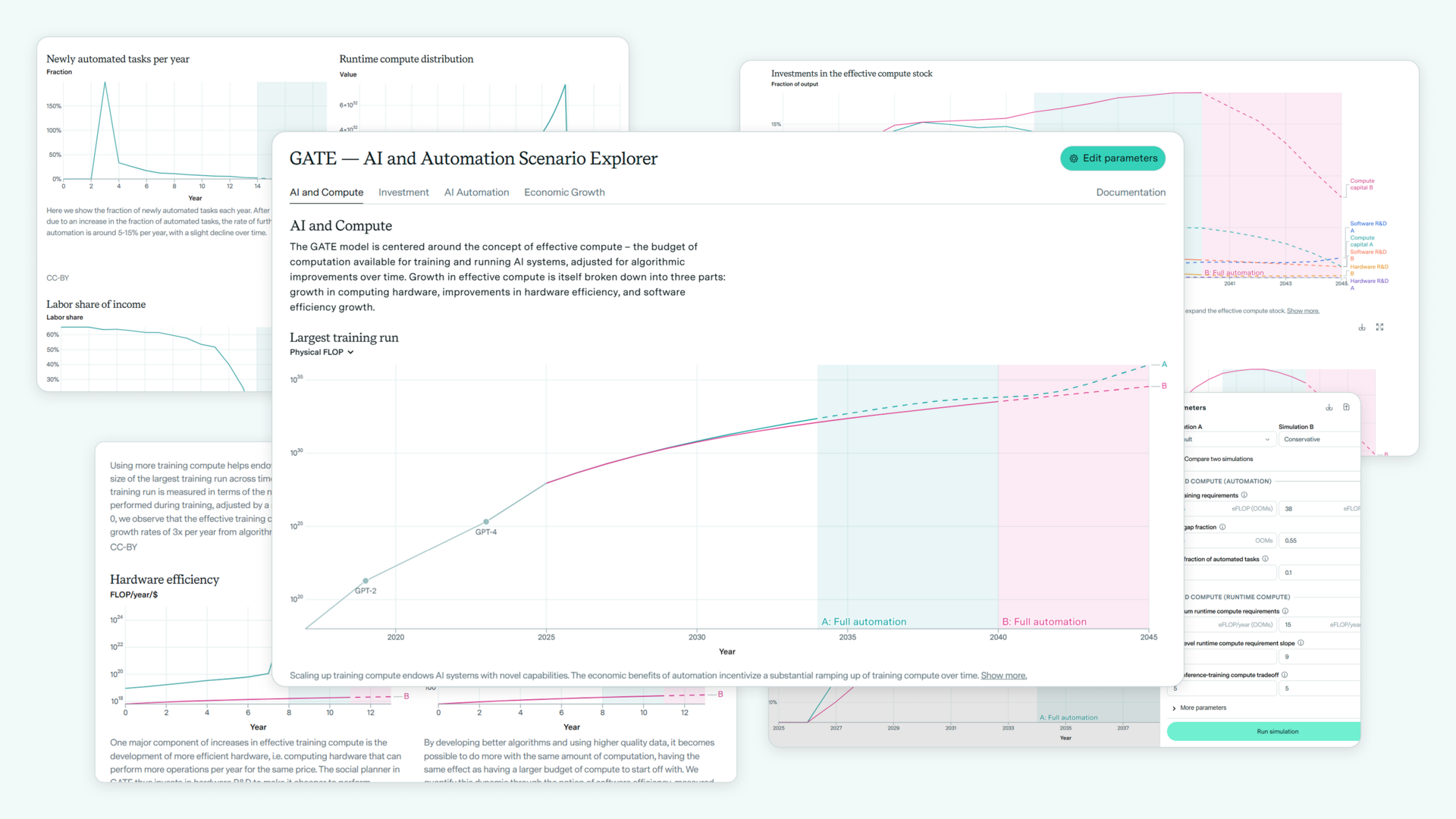

However, existing analyses do not support the notion of large computational or data gaps between the point where AI starts to have an economic impact and full automation. Existing estimates suggest that the largest plausible gap would be around ten orders of magnitude in computation. While vast, such a gap could be crossed in less than forty years through hardware scaling and efficiency improvements, and simple models suggest that substantial automation over forty years would likely produce highly accelerated growth.

Moreover, even if automation takes longer than forty years, in a world governed by substantial economic bottlenecks in production, the last tasks to be automated would significantly boost economic output. Therefore, it’s difficult to conceive of a scenario where full automation is possible and yet doesn’t result in explosive growth. In summary, while the argument that technological progress could be slow is coherent and compelling, we consider it unlikely to prevent explosive economic growth due to AI.
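To get a feel for what crossing ten orders of magnitude in forty years involves, the implied yearly scaling can be computed directly. This is simple arithmetic on the two figures quoted above; the function names are illustrative, and ‘computation’ is assumed here to mean effective compute, combining hardware scaling and efficiency improvements.

```python
import math


def required_annual_factor(orders_of_magnitude, years):
    """Constant yearly multiplier needed to cover the gap in the given time."""
    return 10 ** (orders_of_magnitude / years)


def doubling_time_years(annual_factor):
    """Years needed to double at a constant yearly multiplier."""
    return math.log(2) / math.log(annual_factor)


factor = required_annual_factor(10, 40)
print(f"required scaling: {factor:.2f}x per year")
print(f"doubling time:    {doubling_time_years(factor):.2f} years")
```

Covering the gap in exactly forty years corresponds to effective compute growing by roughly 1.78x per year, or doubling about every 1.2 years.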

Difficulties in steering and controlling AI systems and avoiding harmful behaviors could suppress growth effects from AI. If alignment is particularly challenging, there might be less incentive for private actors to deploy AI systems at scale, which would limit AI’s economic impact. Misalignment could necessitate human involvement in decision-making. AI systems providing incorrect or hallucinated facts or being deliberately deceptive could require human supervision.

A simple way to think about AI misalignment, then, is that it effectively caps the fraction of tasks that it is economically worthwhile to automate without human oversight. Whether this cap could sufficiently suppress growth effects from AI depends on the precise fraction of tasks that AI systems end up being used to automate.

If a large fraction of tasks, say 25%, cannot be automated due to alignment issues, and if there’s a high degree of complementarity between tasks—so that non-automated tasks bottleneck the added-value from automated tasks—this could plausibly prevent explosive growth. It is currently unclear whether such alignment problems will be sufficiently difficult for AI systems to play such a small economic role. Moreover, our current difficulties aligning AI don’t necessarily imply that aligning AI will be infeasible, as formidable efforts will likely be dedicated to resolving such alignment issues in a world where such issues curtail our ability to derive economic value from AI systems.
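The role of complementarity can be illustrated with a constant-elasticity-of-substitution (CES) aggregate over two groups of tasks. The CES form, the parameter `rho`, and the function names are assumptions made for this sketch rather than details taken from the text above; only the 25% share comes from the example. With `rho < 0` the two groups are complements, so the non-automated tasks cap total output no matter how much the automated tasks are scaled up.

```python
def ces_output(automated_input, human_input, human_share=0.25, rho=-1.0):
    """CES aggregate of automated and non-automated task inputs.

    rho < 0 means the two task groups are complements; rho = -1
    corresponds to an elasticity of substitution of 0.5 (assumed).
    """
    automated_share = 1.0 - human_share
    aggregate = (automated_share * automated_input ** rho
                 + human_share * human_input ** rho)
    return aggregate ** (1.0 / rho)


def output_ceiling(human_input, human_share=0.25, rho=-1.0):
    """Limit of ces_output as automated_input grows without bound (rho < 0)."""
    return human_share ** (1.0 / rho) * human_input


baseline = ces_output(1.0, 1.0)
for scale in (10.0, 1_000.0, 1e12):
    print(f"automated input x{scale:g}: output x{ces_output(scale, 1.0) / baseline:.3f}")
print(f"ceiling: x{output_ceiling(1.0) / baseline:.1f}")
```

Under these assumptions, output can rise to at most 4 times its baseline level however far the automated tasks are scaled. Weaker complementarity (`rho` closer to zero) or a smaller non-automated share raises the ceiling sharply, which is why the precise fraction of tasks matters.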

Even if AI relieves our current labor bottlenecks, other factors of production (like energy or land) could limit output growth. If these constraints kick in quickly, AI-driven growth could be short-circuited before reaching explosive levels. Perhaps these could kick in relatively quickly: historical growth accelerations, such as during the agricultural revolution, were likely not very long-lived either. Perhaps, then, we should expect an AI-driven growth acceleration to grind to a halt almost immediately after it gets started.

Some version of the argument is theoretically sound, as resource constraints must eventually bind. The key question is whether they bind quickly enough to prevent explosive growth, and severely enough to offset increasing returns to scale.

But if we look at key examples, such as land or energy constraints or limits stemming from the rate at which capital can be built up, the arguments do not seem to pan out. For instance, energy consumption could expand by 1,000-fold, and roughly 100x more land could be urbanized. Since resources can be used much more efficiently, especially if doing so would enable large economic accelerations, such constraints usually seem to offer enough latitude for scaling. We conclude that this argument is plausible based on the ‘base rate’ at which past accelerations were relatively quickly blocked, but the evaluation of specific cases, such as land or energy constraints, makes it seem less compelling.

Even if other bottlenecks don’t apply, R&D progress could have sufficiently unfavorable diminishing returns that it blocks explosive growth. If R&D has steeply declining returns, growth will not accelerate rapidly when all key economic inputs become scalable.

There is limited evidence with which to evaluate the plausibility of this premise. The best existing estimates suggest that diminishing returns are likely not nearly steep enough: the feedback between economic inputs and output is more than sufficiently tight to produce accelerating growth.

Moreover, even if the premise is true, this argument alone may not preclude explosive growth. For example, the stock of AI workers could still grow very fast once developed, which could give rise to fast economic growth even though adding these workers to the economy yields declining returns. Weak empirical support for the premise and the existence of alternative growth mechanisms make this a relatively unconvincing objection against explosive growth. It seems very unlikely that unexpected R&D challenges will be decisive in blocking explosive growth.

Further arguments, such as bottlenecks arising from human preferences for human-produced goods, AI’s contributions being mismeasured and not showing up in productivity statistics, and fundamental physical limits, are considered in the full report.

Conclusion

In our work, we evaluated about a dozen counterarguments to the explosive growth hypothesis, and a few of the arguments in favor. After reviewing the arguments for and against explosive economic growth from AI, we conclude that the odds are about even that explosive growth will occur by the end of this century.

What we see as the strongest argument in favor of explosive growth is the straightforward application of the current best model of economic growth to automation—that if AI can contribute to scientific development, it will lead to discoveries in hardware and software that boost its capabilities, restoring the cycle of hyperbolic growth that arguably held for most of human history. The argument does not need to be watertight for the conclusion to hold: we could still see explosive growth even if AI does not contribute to speeding up technological growth or if AI does not automate all economically useful tasks.

What we see as the strongest arguments against explosive growth are the possible checks on accelerated change imposed by regulation, the possibility that the automation process could take a century or more, and the challenge of aligning AI systems with user intentions. We also acknowledge that growth might be unexpectedly bottlenecked by some factor we did not consider, or that R&D might have harsher diminishing returns than we expect.

After taking stock of these arguments, we think the odds are perhaps slightly better than even that automation of the sort facilitated by advanced AI will enable growth rates of 30% or greater this century, a tenfold increase over the growth rate in modern frontier economies.
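To put the 30% threshold in perspective, it helps to compare doubling times. The snippet below is plain compound-growth arithmetic; the 3% figure is the frontier growth rate implied by describing 30% as a tenfold increase, and the function name is illustrative.

```python
import math


def doubling_time(growth_rate):
    """Years for output to double at a constant yearly growth rate."""
    return math.log(2) / math.log(1.0 + growth_rate)


for rate in (0.03, 0.30):
    print(f"{rate:.0%} growth: output doubles every {doubling_time(rate):.1f} years")
```

At 3% growth the economy doubles roughly every 23 years; at 30% it doubles in under 3 years.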

The lack of previous work on the subject among growth economists serves as both a caveat and an opportunity: it means that the debate isn’t fully mature, but it also signifies an urgent need for more scholarly attention. We have highlighted a few key open questions for further work, including physical bottlenecks beyond labor and capital, the economic value of superhuman abilities to learn, process information, coordinate, and so on, and plausible automation timelines. Advancing our understanding here could substantially clarify the likelihood of explosive growth from AI.