Benchmark scores are well correlated, even across domains

Model rankings are remarkably consistent across most AI benchmarks. Across 15 benchmarks in which every pair shares at least 5 evaluated models, the median pairwise correlation among benchmarks from different categories is 0.68, compared with 0.79 among those from the same category.

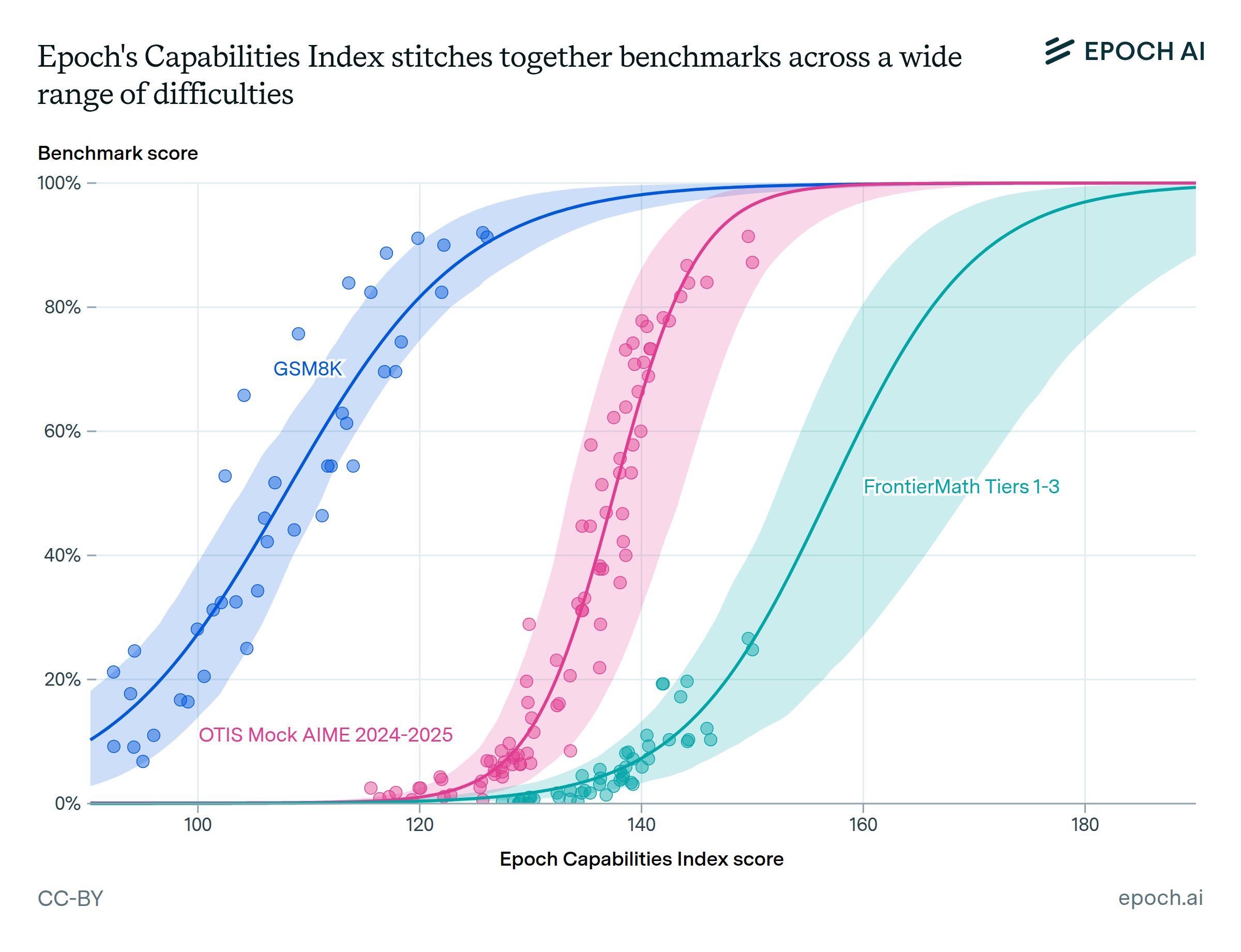

These correlations suggest a common capability factor that improves with scale, which is the intuition behind our Epoch Capabilities Index (ECI). However, the same fact means that correlation estimates depend strongly on which models happen to be evaluated: a model released several years after another will outperform it on almost any task, so benchmarks spanning wide time ranges tend to correlate more highly with each other.

Published

January 23, 2026

Overview

We visualize correlations between benchmarks from our Benchmarking Hub using a pairwise correlation matrix. All correlations are Spearman (rank) correlations.

Across 15 benchmarks in which every pair shares at least 5 evaluated models, the median rank correlation is 0.73. Correlations are nearly as high across benchmark categories as within them: the median correlation is 0.68 among benchmarks from different categories and 0.79 among those from the same category. This high degree of agreement between benchmarks motivates our Epoch Capabilities Index, which is designed to capture a single capability factor. Unsurprisingly, ECI correlates well with its underlying benchmarks.

Data

Benchmark data is obtained from our Benchmarking Hub. In general, benchmark evaluations are sparse: the models evaluated on one benchmark are usually not all evaluated on every other benchmark, especially when the benchmarks were released far apart in time. To create a more interpretable visualization with reasonable confidence intervals, we restrict the analysis to a subset of benchmarks in which every pair shares at least 5 evaluated models.
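The filtering step above can be sketched as follows. This is a minimal sketch, not the procedure used for the published figure: the greedy removal rule, the helper names `shared_models` and `filter_benchmarks`, and the toy benchmark and model names are all hypothetical.

```python
from itertools import combinations


def shared_models(evals, a, b):
    """Number of models evaluated on both benchmark a and benchmark b."""
    return len(evals[a] & evals[b])


def filter_benchmarks(evals, min_shared=5):
    """Drop benchmarks until every remaining pair shares >= min_shared models.

    `evals` maps benchmark name -> set of evaluated model names.
    Hypothetical greedy rule: repeatedly remove the benchmark involved in the
    most under-covered pairs (ties: fewest evaluated models, then by name).
    """
    kept = set(evals)
    while True:
        # Count, for each benchmark, how many of its pairs fall short.
        shortfalls = {b: 0 for b in kept}
        for a, b in combinations(sorted(kept), 2):
            if shared_models(evals, a, b) < min_shared:
                shortfalls[a] += 1
                shortfalls[b] += 1
        if not any(shortfalls.values()):
            return kept
        worst = max(kept, key=lambda b: (shortfalls[b], -len(evals[b]), b))
        kept.remove(worst)


# Toy data (hypothetical model and benchmark names).
models = [f"m{i}" for i in range(10)]
evals = {
    "A": set(models),
    "B": set(models[:8]),
    "C": set(models[2:]),
    "D": set(models[:3]),  # only 3 models, so every pair with D falls short
}
print(sorted(filter_benchmarks(evals)))  # ['A', 'B', 'C']
```

Finding the largest subset in which every pair qualifies is a maximum-clique problem in general, so a greedy rule like this one is only an approximation and may discard more benchmarks than strictly necessary.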

Analysis

We calculate simple Spearman rank correlations over model scores for each pair of benchmarks. We use rank correlation rather than Pearson correlation because benchmark progress is non-linear and benchmarks saturate, so relationships between benchmark scores are likely to be monotonic but non-linear. Spearman correlation captures any monotonic relationship, whereas Pearson correlation measures only linear association.
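A pairwise Spearman computation of this kind might look like the sketch below. It assumes scores are stored as a hypothetical `benchmark -> {model: score}` mapping and that each pair is correlated only over the models scored on both benchmarks; the function names and toy data are illustrative, not Epoch's code.

```python
from itertools import combinations
from statistics import median


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Advance j to the end of the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; returns nan if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def pairwise_spearman(scores, min_shared=5):
    """Spearman correlation for each benchmark pair, computed only over the
    models scored on both benchmarks. `scores` maps benchmark -> {model: score}.
    Pairs with fewer than `min_shared` common models are skipped.
    """
    result = {}
    for a, b in combinations(sorted(scores), 2):
        common = sorted(scores[a].keys() & scores[b].keys())
        if len(common) < min_shared:
            continue
        result[(a, b)] = spearman([scores[a][m] for m in common],
                                  [scores[b][m] for m in common])
    return result


# Toy scores (hypothetical): Y is a monotone but non-linear function of X.
scores = {
    "X": {"m1": 10, "m2": 20, "m3": 30, "m4": 40, "m5": 50},
    "Y": {"m1": 1, "m2": 4, "m3": 9, "m4": 16, "m5": 25},
    "W": {"m1": 3, "m2": 1, "m3": 2},  # only 3 shared models: pair skipped
}
corr = pairwise_spearman(scores)
print(corr)                   # {('X', 'Y'): 1.0}
print(median(corr.values()))  # summary across all retained pairs
```

In the toy data, X and Y have a Pearson correlation of only about 0.98 because Y grows quadratically, yet their Spearman correlation is exactly 1 since the models are ranked identically. Taking the median over all retained pairs gives a single summary number of the kind reported above.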

After filtering to a subset of benchmarks in which every pair shares at least 5 models, we are left with 15 benchmarks. Among these benchmarks, the median pairwise rank correlation is 0.73.

We manually classified benchmarks into four categories: Math, Coding, Reasoning, and Other. We then compared the median pairwise correlation among benchmarks from the same category with the median among benchmarks from different categories. These values were 0.79 and 0.68, respectively.
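The within-category versus across-category comparison amounts to splitting the pairwise correlations by whether both benchmarks share a label. The sketch below assumes a hypothetical `category` mapping and a `corr` dictionary with one entry per benchmark pair; the benchmark names and correlation values are made up for illustration and are not Epoch's results.

```python
from statistics import median


def within_vs_across(corr, category):
    """Median pairwise correlation within and across benchmark categories.

    `corr` maps (benchmark_a, benchmark_b) -> correlation, one entry per pair;
    `category` maps benchmark -> label such as "Math" or "Coding".
    Returns (within-category median, across-category median).
    """
    within = [r for (a, b), r in corr.items() if category[a] == category[b]]
    across = [r for (a, b), r in corr.items() if category[a] != category[b]]
    return median(within), median(across)


# Illustrative numbers only, not Epoch's measured correlations.
category = {"MathA": "Math", "MathB": "Math", "CodeA": "Coding", "CodeB": "Coding"}
corr = {
    ("MathA", "MathB"): 0.90,
    ("CodeA", "CodeB"): 0.80,
    ("MathA", "CodeA"): 0.60,
    ("MathA", "CodeB"): 0.70,
    ("MathB", "CodeA"): 0.50,
    ("MathB", "CodeB"): 0.65,
}
same, different = within_vs_across(corr, category)
print(f"within: {same:.3f}, across: {different:.3f}")
```

Using medians rather than means keeps a single outlying pair, such as one benchmark with unusually sparse coverage, from dominating either summary.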

We also calculate correlations between each benchmark and the Epoch Capabilities Index. As expected, correlations are strong overall, since ECI uses each of these benchmarks as input. The median correlation is 0.90, with a range of 0.46 to 0.96.

Limitations

Two factors play a large role in the specific correlation values we observe. First, correlation estimates are based on relatively few observations, and these observations are subject to selection biases. Second, because general capabilities are strongly correlated with training compute and release date, the range of models evaluated on a benchmark matters substantially. Benchmarks spanning a wide range of release dates, especially those with only a few models spread across that range, will tend to show higher correlations with other benchmarks that share this feature.

For example, evaluations on METR Time Horizons span from GPT-2 XL (2019) to Opus 4.5 (2025). Models released far apart in time will be ordered the same way on almost any benchmark, with the newer model scoring higher, which pushes correlations up. Conversely, Fiction.liveBench covers only models released since December 2024, a window narrow enough that other benchmarks may disagree on how some of these models rank.
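The time-span effect can be illustrated with a toy simulation. This is a sketch under explicitly hypothetical assumptions: a latent capability that rises linearly with release year, a small model-specific offset, and independent noise for each benchmark. None of the parameters (`noise`, the 0.3 offset, the year windows) come from Epoch data.

```python
import random


def ranks(xs):
    """0-based ranks; assumes no ties (true for continuous random draws)."""
    order = sorted(range(len(xs)), key=xs.__getitem__)
    r = [0] * len(xs)
    for rank, idx in enumerate(order):
        r[idx] = rank
    return r


def spearman(x, y):
    """Spearman correlation via 1 - 6*sum(d^2)/(n(n^2-1)), valid without ties."""
    rx, ry = ranks(x), ranks(y)
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d2 / (n * (n * n - 1))


def simulate(start_year, end_year, n_models=200, noise=0.5, seed=0):
    """Correlation between two simulated benchmarks for models released
    uniformly in [start_year, end_year].

    Hypothetical model: capability = release year + model-specific offset;
    each benchmark score = capability + independent measurement noise.
    """
    rng = random.Random(seed)
    bench_a, bench_b = [], []
    for _ in range(n_models):
        capability = rng.uniform(start_year, end_year) + rng.gauss(0, 0.3)
        bench_a.append(capability + rng.gauss(0, noise))
        bench_b.append(capability + rng.gauss(0, noise))
    return spearman(bench_a, bench_b)


wide = simulate(2019, 2025)      # analogous to a benchmark spanning 2019-2025 models
narrow = simulate(2024.9, 2025)  # analogous to a benchmark covering only recent models
print(f"wide window: {wide:.2f}, narrow window: {narrow:.2f}")
```

Under these assumptions the wide window should give a correlation around 0.9, while the narrow window gives a much weaker one, even though the two simulated benchmarks measure exactly the same latent capability with the same noise in both cases.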