Introducing Epoch AI's machine learning hardware database

Our new database covers hardware used to train AI models, featuring over 100 accelerators (GPUs and TPUs) across the deep learning era.


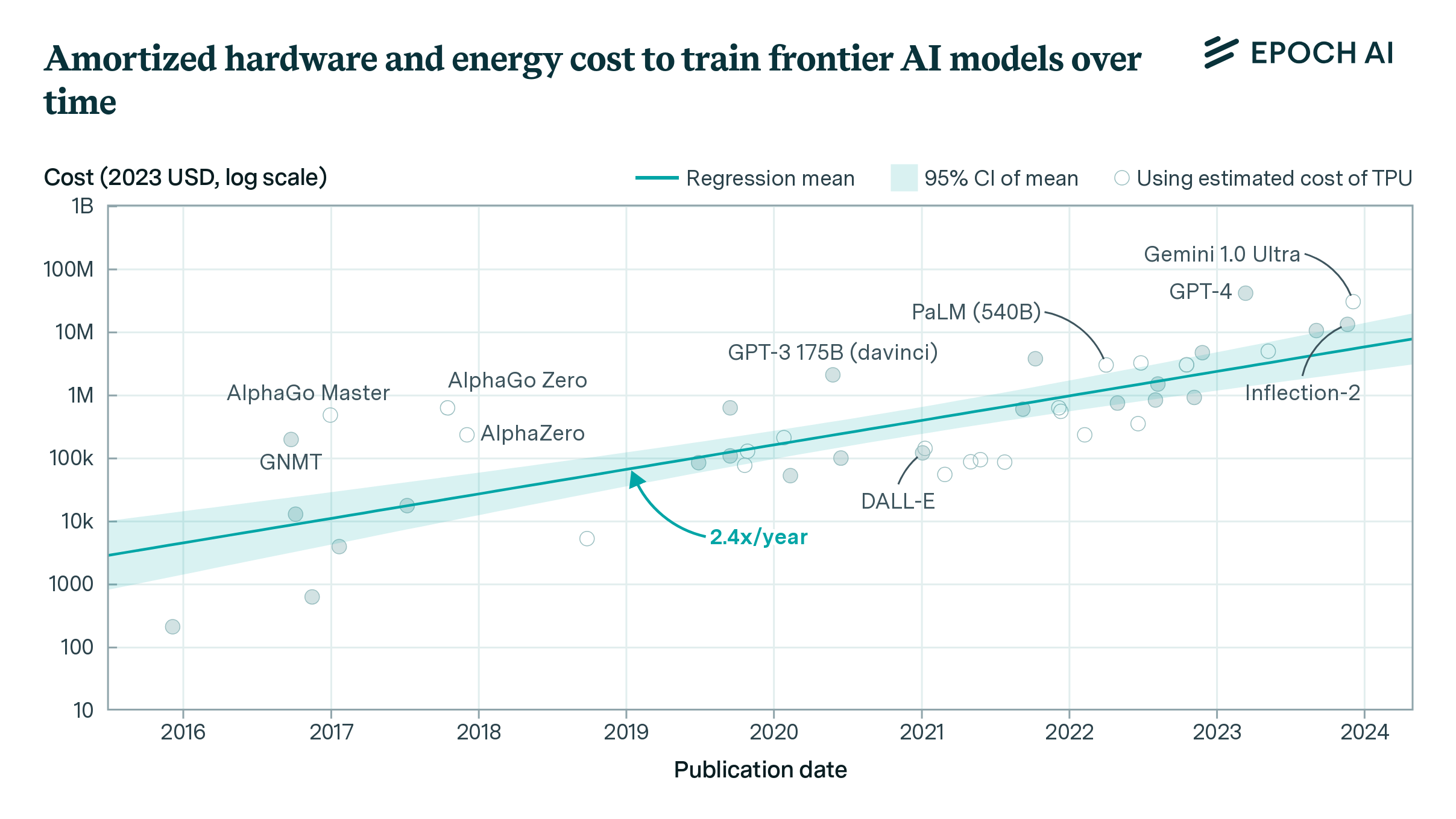

Modern AI models are trained on large supercomputing clusters using specialized hardware, and for today's leading AI models, hardware spending can reach billions of dollars. To explore ML hardware trends in detail, we have added a new Machine Learning Hardware database to our data hub. The database covers key trends, such as how hardware performance has improved over time and how the AI clusters used to train leading models have grown larger. It also features an interactive visualization that lets users explore their own questions using the data.

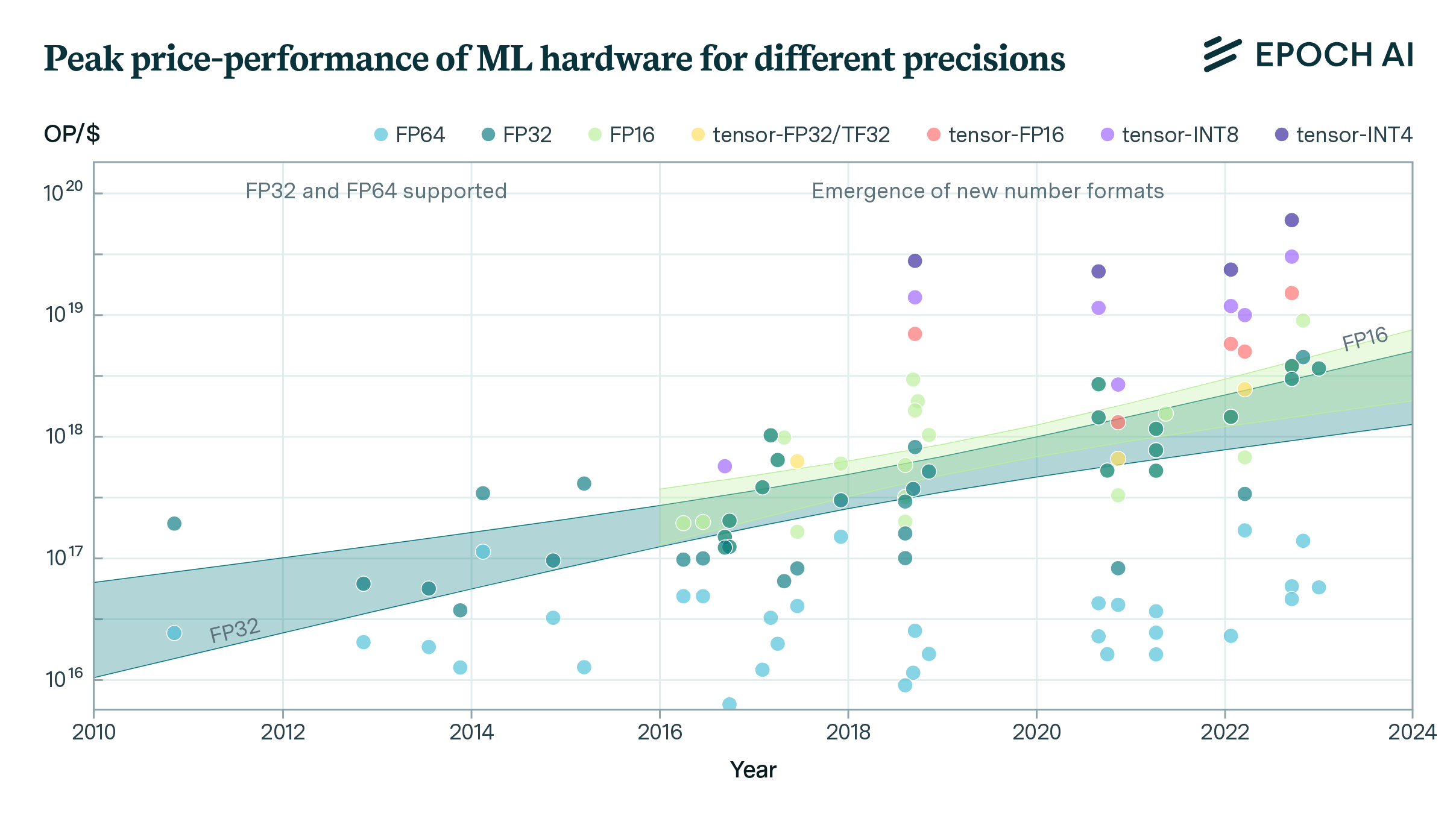

For example, we can use this data to plot how GPU performance has improved in two distinct ways: the raw number of operations per second has increased by around 20% per year, while innovations such as tensor cores and reduced-precision number formats have provided further improvements on top of that.
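To make a "percent per year" figure more concrete, the sketch below converts an annual growth rate into a doubling time and a cumulative multiplier. It also shows one common way such a rate can be estimated from (release year, performance) pairs: a least-squares fit of log-performance against year. This is an illustrative assumption, not necessarily the method behind the numbers above, and the function names are hypothetical.

```python
import math

def doubling_time(annual_growth: float) -> float:
    """Years needed to double at a constant fractional annual growth rate (0.20 = 20%)."""
    return math.log(2) / math.log(1 + annual_growth)

def growth_factor(annual_growth: float, years: float) -> float:
    """Cumulative multiplier after `years` of compounding at `annual_growth`."""
    return (1 + annual_growth) ** years

def estimate_annual_growth(years: list[float], values: list[float]) -> float:
    """Hypothetical helper: fit log(value) = a + b * year by ordinary least squares
    and return the implied fractional growth per year, exp(b) - 1."""
    logs = [math.log(v) for v in values]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs)) / sum(
        (x - mean_x) ** 2 for x in years
    )
    return math.exp(slope) - 1

rate = 0.20  # ~20%/yr growth in raw operations per second, as stated in the text
print(f"Doubling time: {doubling_time(rate):.1f} years")      # 3.8 years
print(f"Growth over a decade: {growth_factor(rate, 10):.1f}x")  # 6.2x
```

At 20% per year, raw throughput doubles roughly every 3.8 years and grows about 6x per decade. Fitting in log space keeps the regression linear and weights each release by its relative, rather than absolute, performance.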

We also track properties such as hardware prices and energy consumption, which lets us chart how leading AI chips have become 30% more cost-effective each year. This is one of the key factors behind the growth of training compute at 4-5x per year.
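As a rough illustration of how those two figures relate, the sketch below assumes that annual growth factors multiply: training compute growth = (growth in compute per dollar) × (growth from everything else, such as larger budgets, bigger clusters, or longer runs). That decomposition is a simplifying assumption, and the helper names are hypothetical.

```python
import math

def implied_other_growth(total_growth: float, hardware_growth: float) -> float:
    """Annual growth factor left over once hardware cost-effectiveness is factored out.

    Assumes training compute growth = hardware_growth * other_growth (multiplicative).
    """
    return total_growth / hardware_growth

def hardware_share(total_growth: float, hardware_growth: float) -> float:
    """Fraction of total growth attributable to hardware, measured on a log scale."""
    return math.log(hardware_growth) / math.log(total_growth)

hardware = 1.30  # chips ~30% more cost-effective per year, as stated in the text
for total in (4.0, 5.0):  # training compute growing 4-5x per year
    other = implied_other_growth(total, hardware)
    share = hardware_share(total, hardware)
    print(f"{total:.0f}x/yr total -> {other:.1f}x/yr from other factors, "
          f"hardware share {share:.0%}")
```

Under this assumption, hardware cost-effectiveness accounts for roughly 16-19% of the growth on a log scale, leaving about 3.1-3.8x per year to come from other factors.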

We hope that the new Machine Learning Hardware database will be a valuable resource for anyone interested in the past and future of AI development. We will continue to add new ML accelerators as they are released. Start exploring the database in our data hub!