Chess Puzzles

100 novel puzzles, generated programmatically with a chess engine. Each puzzle has a single best next move.

About Chess Puzzles

The benchmark consists of chess positions where models must select the best next move. All positions were generated programmatically by Epoch and do not appear in any other source. Each position has a single best move for the player whose turn it is, as judged by the Stockfish chess engine. Models are graded based on whether they select the correct move.

While the broader significance of chess is low, we find this benchmark useful for several reasons.

- It plausibly measures important aspects of reasoning, including spatial reasoning and planning. In this sense, it is a “lite” version of more involved video game benchmarks.

- It is cheap to create and run: samples are generated programmatically and evaluating a model on a sample requires only a single API call.

- It is not saturated: as of publication (2025-12-10), the highest score by a frontier model is less than 40%. What’s more, we can generate more difficult samples as model capabilities advance.

Methodology

Models are given the board state via Forsyth–Edwards Notation (FEN); they are not asked to process an image. You can see model solutions using our log viewer, for instance here are the logs for GPT-5. Several websites, e.g. Chess.com, have tools to load FEN strings, display them as board states, and analyze positions.

Models are given the following prompt.

Below is a FEN string representing the state of a chess game. Please analyze the position and determine the best next move for the player whose turn it is. You may think as much as you would like, but please conclude your response with the move expressed as a four-character string stating the starting and ending square of the piece to be moved, using the format "MOVE: b1c3" (without quotes).

{FEN}

A model-based answer extractor is tasked with extracting the model’s final answer. This is then compared for an exact match to the answer key.

Board states are generated by a heuristic search and filtering program. The first six full moves are played randomly. Subsequently, moves are played by Stockfish set to a randomly-drawn Elo rating. After a game concludes, full-powered Stockfish is used to find positions where there is a single best move that the player model did not select. Positions are filtered out if the best move is capturing an undefended piece or a higher-value piece with a lower-value piece as these usually constitute obvious blunders. We found that one desired position was generated roughly every five simulated games.

The implementation of the puzzle generator, as well as the Inspect task definition, can be found here. The dataset was generated in a single run, with “chaos” mode enabled. We thank Gemini 3 Pro Preview for the vibe coding.

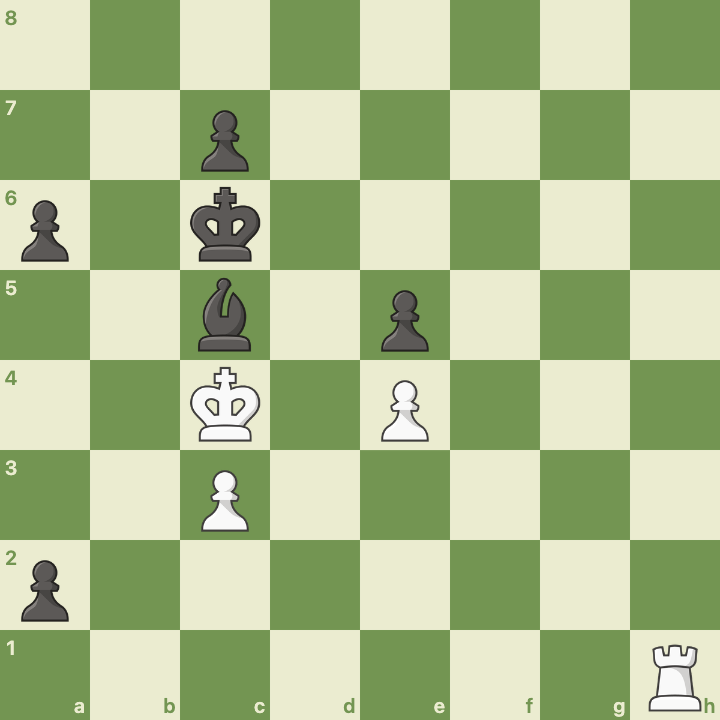

Our subjective assessment of the positions is that they range in difficulty from somewhat straightforward to somewhat difficult. We show one example of each below.

Two positions featured in the benchmark. White’s only good move in the position on the left is relatively straightforward, even if the reasons it is the only good move are less clear. White’s only good move in the position on the right is far less obvious.