Terminal-Bench

Terminal-Bench is a benchmark that evaluates how well AI agents, and the models that power them, complete multi-step tasks that require planning in a computer's terminal.

To complete the tasks, models need to understand the shell environment and how to use its programs, as well as the state of the computer, including its filesystem and running processes. They must also plan and carry out the necessary steps coherently, without being told explicitly what those steps are.

A Terminal-Bench task is a folder containing an instruction, a Docker environment, and a test script. Here is an example of a task instruction:

You are given the output file of a Raman Setup. We used it to measure some graphene sample. Fit the G and 2D Peak of the spectrum and return the x0, gamma, amplitude and offset of the peaks and write them to a file called "results.json".

The file should have the following format:

```
{
  "G": {
    "x0": <x0_value>,
    "gamma": <gamma_value>,
    "amplitude": <amplitude_value>,
    "offset": <offset_value>
  },
  "2D": {
    "x0": <x0_value>,
    "gamma": <gamma_value>,
    "amplitude": <amplitude_value>,
    "offset": <offset_value>
  }
}
```

This example is taken from the Terminal-Bench tasks page.
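A task's test script is what decides whether the agent succeeded. As a rough illustration for the Raman example above, the following is a minimal, hypothetical sketch of a structural check on `results.json`: it confirms that both peaks are present and that each of the four fields is a number. The function name `check_results` and the numbers in the example payload are arbitrary placeholders, not the task's actual tests or a real fit; the real tests are not reproduced here and may also compare values against a reference fit.

```python
import json

REQUIRED_PEAKS = ("G", "2D")
REQUIRED_FIELDS = ("x0", "gamma", "amplitude", "offset")


def check_results(text: str) -> list[str]:
    """Hypothetical check: return a list of problems; an empty list means the structure is valid."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["top level must be a JSON object"]
    problems = []
    for peak in REQUIRED_PEAKS:
        entry = data.get(peak)
        if not isinstance(entry, dict):
            problems.append(f"missing peak {peak!r}")
            continue
        for field in REQUIRED_FIELDS:
            value = entry.get(field)
            # bool is a subclass of int in Python, so reject it explicitly
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                problems.append(f"{peak}.{field} must be a number")
    return problems


# Arbitrary placeholder numbers, used only to exercise the structure check
example = json.dumps({
    "G": {"x0": 1.0, "gamma": 2.0, "amplitude": 3.0, "offset": 4.0},
    "2D": {"x0": 1.0, "gamma": 2.0, "amplitude": 3.0, "offset": 4.0},
})
print(check_results(example))  # []
```

Returning a list of problems rather than a single boolean makes a failing run easier to diagnose.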

The Terminal-Bench team welcomes task submissions from external contributors, although inclusion in the dataset is subject to manual review.

The benchmark currently evaluates models on 80 of the 100 tasks that are publicly available on the website and in the project's GitHub repository. Each task includes a human-verified reference solution in the form of a shell script, while an agent's solution is checked by the suite of test cases provided with the task.
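The grading rule described above (a task counts as solved when its tests pass) can be sketched as follows. This is a simplified, hypothetical illustration: it runs a solution script and then a test script with `sh` in a temporary directory, omitting the Docker container that real tasks use. The helper names `run_script` and `check_solution` and the toy scripts are assumptions, not Terminal-Bench's harness code.

```python
import subprocess
import tempfile
from pathlib import Path


def run_script(script: str, workdir: Path) -> int:
    """Run a shell script with `sh` inside workdir and return its exit code."""
    result = subprocess.run(["sh", "-c", script], cwd=workdir,
                            capture_output=True, text=True)
    return result.returncode


def check_solution(solution_sh: str, tests_sh: str) -> bool:
    """Hypothetical grader: apply a solution, then pass only if the tests exit with status 0."""
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        run_script(solution_sh, workdir)  # only the tests' exit code is graded
        return run_script(tests_sh, workdir) == 0


# Toy scripts standing in for a reference solution and its test suite
solution = 'echo \'{"answer": 42}\' > results.json'
tests = 'grep -q \'"answer": 42\' results.json'
print(check_solution(solution, tests))  # True
```

The same function serves both purposes mentioned in the text: running it on the reference solution confirms the tests are satisfiable, and running it after an agent acts grades the agent.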

The task files include a canary string to assist in checking for contamination in training corpora.
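A canary check amounts to searching a corpus for the exact string. The minimal sketch below assumes documents are available as in-memory text; `CANARY` is a hypothetical placeholder, not the actual string embedded in the Terminal-Bench task files.

```python
# Hypothetical placeholder; substitute the real canary string from the task files
CANARY = "EXAMPLE-CANARY-GUID-0000"


def find_contaminated(documents: dict[str, str], canary: str = CANARY) -> list[str]:
    """Return the names of documents that contain the canary string verbatim."""
    return [name for name, text in documents.items() if canary in text]


corpus = {
    "doc_a.txt": "ordinary web text",
    "doc_b.txt": f"copied task file ... {CANARY} ...",
}
print(find_contaminated(corpus))  # ['doc_b.txt']
```

An exact substring match is deliberate: a canary is designed to be a unique string that would not appear in text by chance.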

The leaderboard score is the percentage of tasks in the dataset solved by a given model paired with a specific agent.

The benchmark was introduced in this announcement.

Methodology

Each model evaluated on this benchmark is paired with an agent tool that lets it interact with the terminal environment over many steps. These tools include Anthropic's Claude Code, OpenAI's Codex CLI, Terminus, and Goose. Performance is reported per model-agent combination because the choice of agent can substantially affect results. Some of these tools enforce or dynamically adjust the model's reasoning effort in ways that a user or evaluator cannot control or inspect in this setting.
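The interaction pattern an agent tool provides can be sketched as a simple loop: the model proposes a command, the harness runs it, and the output is appended to a transcript that the model sees on the next step. This is a hypothetical, simplified sketch, not the design of any of the tools named above. The `scripted_policy` function stands in for a model, and each command runs in a fresh `sh` process, whereas a real harness typically keeps a persistent shell session so that state such as the working directory carries over between steps.

```python
import subprocess
from typing import Callable, Optional

# A policy maps the transcript so far to the next shell command, or None to stop
Transcript = list[tuple[str, str]]
Policy = Callable[[Transcript], Optional[str]]


def run_agent(policy: Policy, max_steps: int = 10) -> Transcript:
    """Repeatedly ask the policy for a command, run it, and record its output."""
    transcript: Transcript = []
    for _ in range(max_steps):
        command = policy(transcript)
        if command is None:  # the policy decides the task is finished
            break
        result = subprocess.run(["sh", "-c", command],
                                capture_output=True, text=True)
        transcript.append((command, result.stdout + result.stderr))
    return transcript


def scripted_policy(transcript: Transcript) -> Optional[str]:
    """Stand-in for a model: issues two fixed commands, then stops."""
    plan = ["echo hello", "echo done"]
    return plan[len(transcript)] if len(transcript) < len(plan) else None


for cmd, out in run_agent(scripted_policy):
    print(cmd, "->", out.strip())
```

The `max_steps` cap stands in for the step or time limits a harness would impose so that a looping agent eventually terminates.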

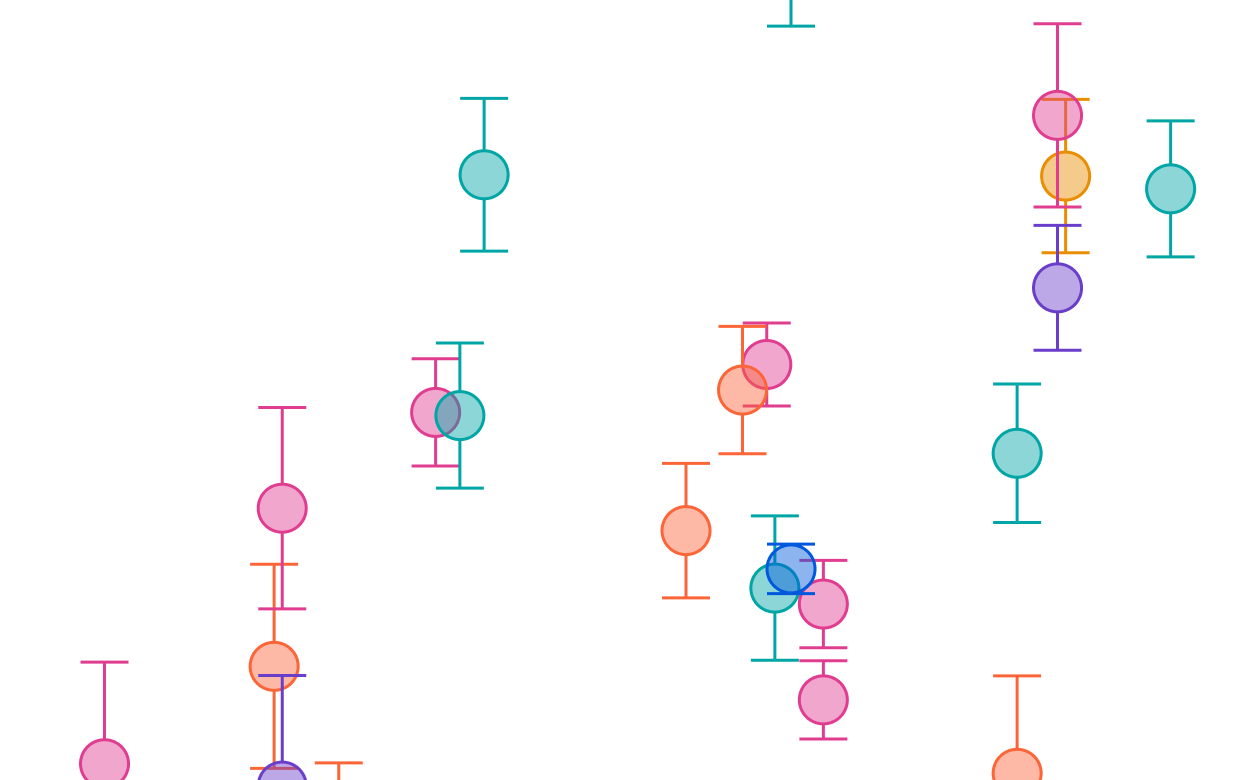

Leaderboard scores are the percentage of tasks in the question set that were solved, generally averaged over multiple repetitions of the entire task set.
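A minimal sketch of this scoring, assuming each repetition covers the same task set and each task is recorded as solved or not solved. The per-run pass/fail values below are made up purely to illustrate the arithmetic.

```python
from statistics import mean


def run_accuracy(results: list[bool]) -> float:
    """Percentage of tasks solved in one pass over the task set."""
    return 100.0 * sum(results) / len(results)


def leaderboard_score(runs: list[list[bool]]) -> float:
    """Average the per-run percentage over repeated passes."""
    return mean(run_accuracy(r) for r in runs)


# Illustrative made-up outcomes for three repetitions over four tasks
runs = [
    [True, False, True, True],   # 3/4 = 75%
    [True, False, False, True],  # 2/4 = 50%
    [True, True, True, True],    # 4/4 = 100%
]
print(leaderboard_score(runs))  # 75.0
```

Because every repetition has the same number of tasks, averaging the per-run percentages gives the same result as pooling all attempts and computing a single percentage.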

The code for the benchmark is available in the Terminal-Bench GitHub repository. We get our data from the Terminal-Bench leaderboard.